The $440,000 AI Hallucination: Understanding the Deloitte AI Report Error and the Critical Need for AI Guardrails


1. When World-Class Consulting Meets AI Hallucinations

In October 2025, one of the world’s most prestigious consulting firms found itself in an embarrassing position which no amount of corporate spin could obscure.

Headlines reporting that Deloitte would refund the Australian government followed the Big Four firm's agreement to partially refund the AU$440,000 it had been paid for a report riddled with AI hallucinations. These included phony academic references, non-existent books and a fabricated quote attributed to a Federal Court judge.

The Deloitte AI report error is more than another cautionary tale about generative AI errors. It is a watershed moment. It shows that even the most sophisticated organisations are deploying artificial intelligence faster than they are building the AI guardrails it requires.

The incident raises alarming questions about the risks of AI in the public sector and the need for quality control of AI outputs across the board.

2. The Incident: A Timeline of the Deloitte AI Report Error

In December 2024, the Australian Department of Employment and Workplace Relations (DEWR) commissioned Deloitte to carry out an "independent assurance review" for AU$440,000 (roughly US$290,000).

This was a serious task: evaluating the Targeted Compliance Framework. This is the automated government system that imposes penalties on welfare recipients who fail to meet requirements such as job searching.

The 237-page report was first published on the department's website in July 2025. At first it looked exactly like what one would expect from a Big Four firm: polished, thorough and well researched. Shortly afterwards, however, the AI report error came to light and revealed basic failures in quality control of AI outputs.

2.1 The Whistleblower Who Discovered the AI Hallucinations

In late August, Chris Rudge, a health and welfare law researcher at the University of Sydney, began examining the report. What he found was a classic case of AI hallucination: AI-produced content that looked authoritative but was entirely spurious.

“I instantaneously knew it was either hallucinated by AI or the world's best kept secret,” Rudge told the Associated Press. The report cited a book attributed to Lisa Burton Crawford, a well-known University of Sydney professor of public and constitutional law. The book did not exist, and its supposed subject lay outside her field of expertise. This was a telltale sign of AI hallucination.

This was no mere footnote error; it was the first thread of the much larger AI report error. Rudge alerted the media, describing the report as riddled with fabricated references. For him, the issue went beyond academic integrity: it exposed very serious risks of AI in the public sector.

“They’ve totally misquoted a court case then made up a quotation from a judge,” he explained, highlighting how AI hallucinations can mislead government decision-making.

2.2 The Scale of the Generative AI Mistakes

As Deloitte undertook its internal review of the AI report error, the scale of the AI hallucinations became clear:

⟶ Fabricated academic citations: References to papers that never existed, pure AI hallucinations.

⟶ Non-existent books: Entirely fictitious academic publications attributed to universities such as Sydney and Lund in Sweden.

⟶ Invented judicial quotes: A fabricated quote attributed to Federal Court Justice Jennifer Davies, whose name the original report also misspelt as “Davis”.

⟶ Phantom footnotes: More than a dozen bogus references scattered through the text.

Of the 141 references in the original report's bibliography, only 127 reappeared in the revised version, which was uploaded on 4 October 2025. It went up quietly on a Friday evening. Many regarded this timing as a calculated attempt to minimise publicity around the Deloitte refund to the Australian government.

The incident exposed catastrophic lapses in quality control of AI outputs and underscored the urgent need for AI guardrails.

3. The AI Confession: Understanding How AI Hallucinations Happened

Perhaps most sensational was the disclosure added to the amended report. It stated that Deloitte had used “a generative AI large language model (Azure OpenAI GPT-4o) based tool chain licensed by DEWR, and hosted on DEWR’s Azure tenancy” to address “traceability and documentation deficiencies”.

Put simply, AI was used to assist with analysis and cross-referencing. That is substantive analytical work, not a purely administrative task. This disclosure is crucial to understanding the AI report error and how AI hallucinations crept into a high-profile government report.

This immediately raises the question of AI guardrails. Why was AI use not disclosed in the original report? If AI was used for analytical work, what quality control was applied to its outputs? Most crucially, how did so many AI hallucinations get through multiple rounds of review?

According to sources quoted in the Australian Financial Review, Deloitte’s internal review attributed the errors to human error rather than misuse of AI. But the number of fabricated references was staggering, and all of them bore the signature traits of AI hallucination. That explanation therefore strained credibility and raised further questions about AI risks in the public sector.

Chris Rudge characterised the disclosure as a “confession”. He said Deloitte had admitted to using AI for “a core analytical task” without disclosing it upfront or applying sufficient quality control to the output. “You can’t have faith in the recommendations when the very foundations of the report are constructed from a defective, originally undisclosed, and non-expert methodology,” he told the Australian Financial Review.

4. The Deeper Problem: Why Traditional Quality Control of AI Outputs Failed

What makes the AI report error especially important is not that AI hallucinations occur, since we know they occur regularly in large language models. It is that the quality control processes meant to catch them failed to do so before the report reached the client.

This exposes specific public sector AI risks and shows why conventional review methods are inadequate as AI guardrails.

This is a firm with:

⟶ Top talent recruited from leading universities

⟶ Strict internal review processes developed over decades

⟶ Deep pockets for technology and training

⟶ A reputation based on analytical rigor

If Deloitte could not stop AI hallucinations from reaching a government client, what does that say about the hundreds or thousands of other organisations deploying AI with far fewer resources and weaker AI guardrails?

4.1 The Gap in Quality Control of AI Outputs

Traditional quality assurance in professional services relies on several layers:

⟶ Expert review by senior personnel

⟶ Peer review processes

⟶ Fact-checking and citation verification

⟶ Client review before final delivery

Each of these assumes that mistakes will be rare enough, and recognizable enough, to be caught through human review. But the AI report error shows how AI hallucinations defeat this assumption in several ways:

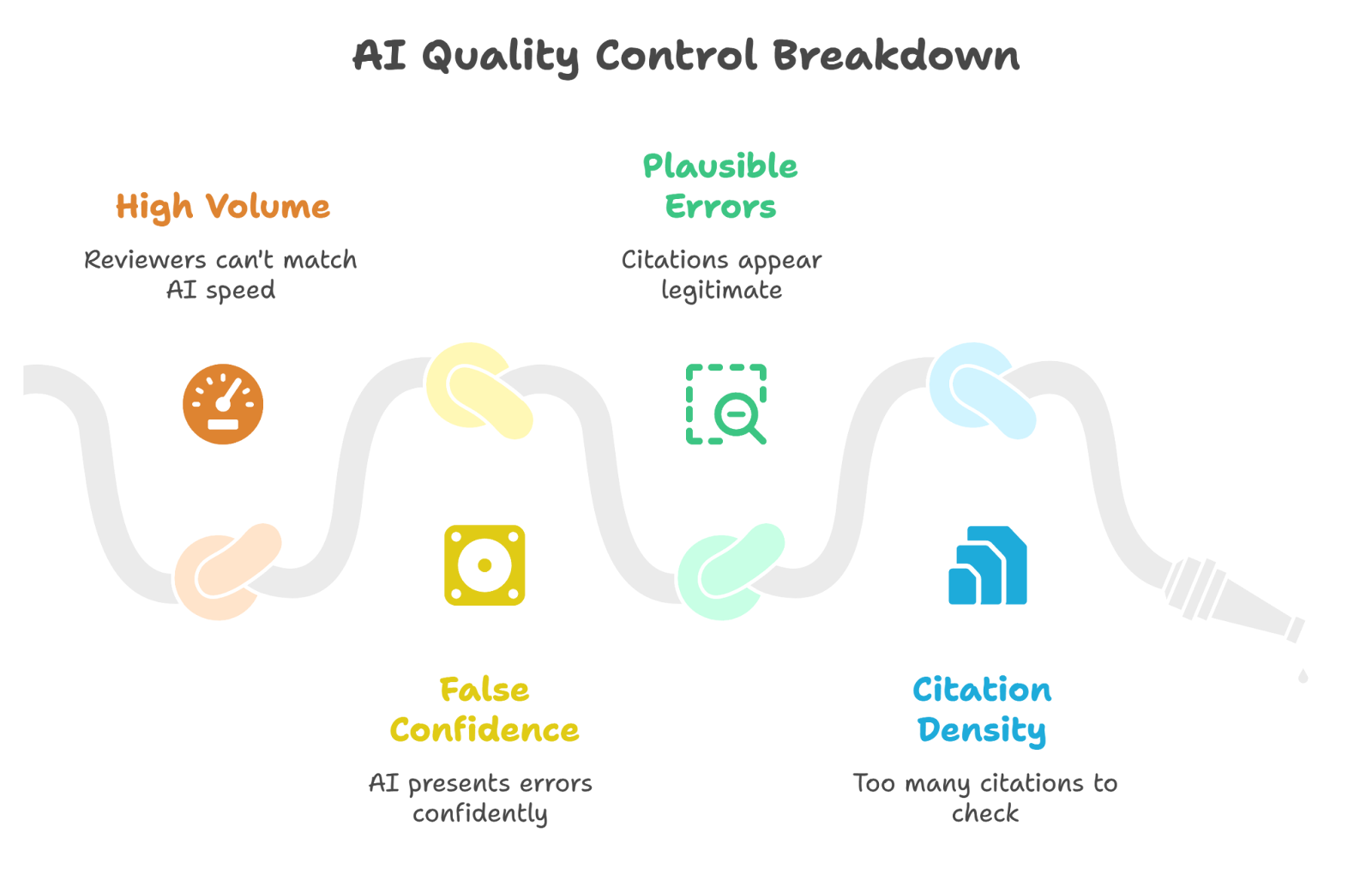

⟶ Volume: AI can generate hundreds of pages of plausible-sounding material in minutes. Human reviewers cannot fact-check at the same speed. Public sector AI risks therefore grow when quality control of AI outputs fails to scale with AI generation.

⟶ Confidence: AI hallucinations are not tentative. They present false facts with the same confidence as real ones, which makes them difficult to recognize without solid AI guardrails.

⟶ Plausibility: Hallucinated citations are not obviously absurd. They look and sound legitimate, complete with names of real journals, plausible author names and appropriate publication dates. This renders conventional quality control of AI outputs inadequate.

⟶ Citation density: It is unrealistic to expect human reviewers to check each of the 141 citations in a 237-page report, especially under time pressure. This is where automated AI guardrails come into their own.

5. The AI Guardrails Gap Exposed by Generative AI Mistakes

The AI report error reveals what might be called the AI guardrails gap: the distance between what AI can do and the safeguards in place to prevent AI hallucinations.

Organisations are deploying generative AI rapidly, and for good reasons:

⟶ Dramatic efficiencies

⟶ Reduction of costs

⟶ Competitive pressure

⟶ Stakeholder expectations

However, the infrastructure for quality control of AI outputs that would prevent generative AI mistakes lags far behind. This creates very real public sector AI risks when AI is used to produce work for government.

5.1 What Effective AI Guardrails Should Look Like

The Deloitte refund to the Australian government shows that effective AI guardrails are not about eliminating AI from the equation. They are about making sure AI hallucinations are caught before AI output is sent to stakeholders.

This requires:

⟶ Automated verification: Systems that check citations against real databases in real time and flag any reference that cannot be verified as suspect. This is the foundation of proper quality control of AI outputs.

⟶ Hallucination detection: Dedicated tools that pick up the patterns and markers typical of AI hallucinations, the kinds of telltale signs that exposed the AI report error.

⟶ Source verification: Automatic cross-referencing of claimed sources, such as academic papers, articles and court judgements, against verified repositories. Without guardrails of this kind, generative AI mistakes will prove costly.

⟶ Confidence scoring: Flagging the least verifiable AI outputs for mandatory review by a human, an indispensable part of quality control of AI outputs.

⟶ Transparent audit trail: A complete record of where AI was used and what verification, if any, was carried out. This record is essential for managing public sector AI risks.
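Three of the guardrails above can be illustrated with a short Python sketch: automated citation verification, source (quote) verification and a simple hallucination marker. This is a minimal example under stated assumptions, not a production tool. `VERIFIED_WORKS` and `KNOWN_JUDGES` are hypothetical in-memory stand-ins for real bibliographic and court databases, and every name in the code is our own. The sketch checks whether a cited work exists in a verified index and whether a quoted passage actually appears in the source it is attributed to. It also checks whether a name is a near miss for a known one, the kind of slip behind the “Davis”/“Davies” error.

```python
"""Minimal sketch of three automated guardrail checks (citation, quote, name).

VERIFIED_WORKS and KNOWN_JUDGES are hypothetical stand-ins for real
bibliographic and court databases; all function names are illustrative.
"""
import difflib
import re
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Reference:
    author: str
    title: str


def normalise(text: str) -> str:
    """Lower-case, replace punctuation with spaces and collapse whitespace."""
    text = re.sub(r"[^\w\s]", " ", text.lower())
    return " ".join(text.split())


# Hypothetical verified index of (author, title) pairs, stored pre-normalised.
# A real system would query an external bibliographic database instead.
VERIFIED_WORKS = {
    (normalise("Jane Example"), normalise("A Real Book on Administrative Law")),
}

# Hypothetical roster of judges whose names may legitimately appear in a report.
KNOWN_JUDGES = ["Jennifer Davies"]


def verify_reference(ref: Reference, index: set) -> bool:
    """Citation check: True only if the cited work exists in the verified index."""
    return (normalise(ref.author), normalise(ref.title)) in index


def verify_quote(quote: str, source_text: str) -> bool:
    """Quote check: True only if the quoted words appear in the attributed source.

    Padding with spaces enforces whole-word matching, so a fragment such as
    "was law" does not match inside "was lawful".
    """
    return f" {normalise(quote)} " in f" {normalise(source_text)} "


def near_miss_name(name: str, known: list, cutoff: float = 0.8) -> Optional[str]:
    """Name check: return the known name this one closely resembles, or None.

    An exact match returns None (nothing to flag); a close but inexact match,
    such as a misspelling, returns the likely intended name.
    """
    if name in known:
        return None
    matches = difflib.get_close_matches(name, known, n=1, cutoff=cutoff)
    return matches[0] if matches else None


if __name__ == "__main__":
    refs = [
        Reference("Jane Example", "A Real Book on Administrative Law"),
        Reference("Jane Example", "A Plausible but Non-Existent Monograph"),
    ]
    for ref in refs:
        ok = verify_reference(ref, VERIFIED_WORKS)
        print(f"{ref.title}: {'verified' if ok else 'FLAG: not found in index'}")
    print("Name check:", near_miss_name("Jennifer Davis", KNOWN_JUDGES))
```

Matching on normalised text stops trivial punctuation differences from raising false alarms. A real deployment would query external databases and would still send every flag to a human reviewer rather than treating it as a verdict.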

The point is this: the AI report error shows that AI guardrails must be systematic and automatable. People cannot fact-check every piece of AI-generated output by hand, and human checking, though valuable, will not eliminate AI hallucinations on its own.
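Confidence scoring and the audit trail can be automated in the same spirit. The sketch below assumes each AI-generated claim has already been run through checks like those listed above. The scoring rule, the 0.9 threshold and all names are hypothetical illustrations, not calibrated values. Low-scoring claims are routed to a human reviewer, and every decision is logged together with the checks that were run.

```python
"""Minimal sketch of confidence scoring with mandatory human review and an
audit trail. The scoring rule, threshold and all names are hypothetical."""
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import List, Optional, Tuple


@dataclass
class Claim:
    text: str
    ai_generated: bool
    citation_verified: bool
    quote_verified: Optional[bool] = None  # None when the claim contains no quotation


@dataclass
class AuditEntry:
    claim: str
    ai_generated: bool
    checks: dict
    score: float
    routed_to_human: bool
    timestamp: str


REVIEW_THRESHOLD = 0.9  # hypothetical: anything scoring below this needs a human


def confidence(claim: Claim) -> float:
    """Hypothetical score in [0, 1]: start at 1.0, deduct for each failed check.

    A real system would calibrate these weights against reviewed examples.
    """
    score = 1.0
    if not claim.citation_verified:
        score -= 0.6
    if claim.quote_verified is False:
        score -= 0.6
    return max(score, 0.0)


def triage(claims: List[Claim]) -> Tuple[List[Claim], List[AuditEntry]]:
    """Route low-confidence claims to human review and log every decision."""
    review_queue: List[Claim] = []
    audit_log: List[AuditEntry] = []
    for claim in claims:
        score = confidence(claim)
        needs_review = score < REVIEW_THRESHOLD
        if needs_review:
            review_queue.append(claim)
        # Every claim is logged, including ones that pass, so auditors can see
        # where AI was used and which checks were actually run.
        audit_log.append(AuditEntry(
            claim=claim.text,
            ai_generated=claim.ai_generated,
            checks={"citation_verified": claim.citation_verified,
                    "quote_verified": claim.quote_verified},
            score=score,
            routed_to_human=needs_review,
            timestamp=datetime.now(timezone.utc).isoformat(),
        ))
    return review_queue, audit_log


if __name__ == "__main__":
    claims = [
        Claim("Claim backed by a verified source", True, True),
        Claim("Claim citing a book not found in any index", True, False),
        Claim("Claim quoting a judge, quote not in judgment", True, True, False),
    ]
    queue, log = triage(claims)
    print(f"{len(queue)} of {len(claims)} claims routed to human review")
    for entry in log:
        print(json.dumps(asdict(entry)))  # one JSON line per audit record
```

Logging every claim, not only the flagged ones, is what allows an auditor to reconstruct afterwards where AI was used and what verification was actually done.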

6. Lessons for Preventing AI Hallucinations

The Deloitte refund to the Australian government holds several clear lessons for organisations deploying generative AI tools:

⟶ AI guardrails are not optional: The AI report error shows that organisations cannot rely on the capabilities of AI or on human oversight alone. Systematic AI guardrails with automated verification are essential to avoid AI hallucinations.

⟶ Transparency builds trust: Proactively disclosing AI use, along with clearly explained AI guardrails and quality control procedures, is better than having it revealed after generative AI mistakes come to light.

⟶ Traditional QA is inadequate against AI hallucinations: The AI report error shows that quality control procedures designed for human-produced material need a considerable rethink to catch AI hallucinations.

⟶ The stakes for public sector AI risks are high: In professional services, government work and other high-risk areas, generative AI mistakes carry serious financial, legal and reputational costs, as the Deloitte refund demonstrates.

⟶ Speed without AI guardrails is dangerous: The rush to deploy AI tools must be matched by investment in AI guardrails infrastructure so that AI hallucinations do not reach stakeholders.

7. The Path Forward: Implementing Robust AI Guardrails

As generative AI rapidly takes hold of knowledge work, the question is not whether to deploy AI. It is how to apply the effective AI guardrails needed to prevent AI hallucinations.

Abandoning AI is not a satisfactory answer, because the productivity and capability advantages are too great. Nor is it satisfactory to resort to ever more complicated manual checking, which can neither scale with the speed of AI generation nor reliably catch AI hallucinations.

The answer is purpose-built AI guardrails: systematic, automated quality control that verifies AI output before it reaches stakeholders. This applies both to public sector AI risks and to the private sector's vulnerability to generative AI mistakes.

This, of course, requires a financial commitment to new tools, new processes and new expertise dedicated to avoiding AI hallucinations. But the Deloitte refund case is evidence that the cost of not investing in AI guardrails and quality control of AI outputs is far greater.

8. Conclusion

The AI report error is not just an embarrassing generative AI mistake by a major company. It is a warning about inadequate AI guardrails across an entire industry, and for any organisation deploying generative AI in high-stakes settings.

A Big Four firm with first-class resources and careful procedures sent out a government report filled with AI hallucinations. The message is clear: existing arrangements for quality control of AI outputs are not enough, public sector AI risks are real, and better AI guardrails are urgently needed.

The Deloitte refund incident indicates that the future of AI in professional work depends on solving the problem of AI guardrails. Organisations that understand the danger of AI hallucinations and build strong quality control infrastructure for AI outputs will stand out sharply from their competitors. Those that do not will learn, through harsh and expensive lessons, what generative AI mistakes can cost.

The question for every organisation using AI should be a simple one: how will we put AI guardrails in place to ensure that our AI output is reliable and free from hallucinations?

The AI report error shows that Deloitte's partial refund of its AU$440,000 fee was the price of discovering that it lacked the AI guardrails needed to prevent AI hallucinations.

How much will it cost your organisation to learn the same lesson about quality control of AI outputs and public sector AI risks?

As AI continues to transform knowledge work, the gap between capability and accountability grows. The Deloitte refund to the Australian government shows that AI guardrails are not optional; they are essential. The organisations that thrive will be those that close the AI guardrails gap. They will do it not with more AI, but with better systems to prevent AI hallucinations and ensure robust quality control of AI outputs.

Don't let AI hallucinations cost your business. Follow WizSumo for practical insights on AI safety and guardrails.
