AI Behavior & Alignment Guardrails: Building the Boundaries of Safe and Ethical AI


Key Takeaways

AI guardrails keep systems ethical and aligned with human intent.

Goal misalignment detection spots unsafe AI behavior early.

Adaptive guardrails prevent drift through continuous learning.

Ethical AI turns guardrails into evolving safety systems.

WizSumo’s feedback loops ensure lasting AI trust and alignment.

1. Introduction

Artificial intelligence was once a subject of fascination; now it is an essential part of how we live, work, and even think. As we rely on increasingly autonomous and powerful AI systems, the key question is whether we can trust them to act appropriately all of the time, in every situation.

We have built AI that can behave rationally, creatively, and even empathetically. Yet a quiet but serious problem persists: AI behavior that is inconsistent with human intent. It arises when AI models follow commands correctly but fail to grasp the intent behind them. In other cases, models simply prioritize the wrong objectives, producing unintended results that are harmful, discriminatory, or unpredictable.

This phenomenon, model misalignment, is more than an engineering defect: it creates a fundamental risk for how intelligent systems are developed, deployed, and governed. AI alignment guardrails can help solve this problem.

Think of AI alignment guardrails as an invisible framework of constraints that keeps an AI model's behavior consistent with human intent, organizational goals, and ethical guidelines. As models develop, learn, or encounter new situations, alignment guardrails keep them focused on their core objective.

Similarly, AI behavior guardrails operate at the behavioral level, continually guiding the system's decision-making to head off behaviors that could lead to failure before they occur.

Without these guardrails, even the most sophisticated AI systems can become unreliable, deviating from their intended behavior when data changes, contexts shift, or their objectives are misinterpreted.

Today, unreliable AI systems pose risks to public safety, threaten reputations, and create significant administrative headaches.

Examples abound: a chatbot responding to a simple inquiry with hate speech; a recommendation system reinforcing biases; a self-driving vehicle failing to identify a pedestrian because the lighting differs slightly from its training data. These may sound like hypotheticals, but they are real-world consequences of intelligence that outpaces alignment.

The alternative to slowing down AI development and deployment is to build systems that evolve safely because guardrails are built in from the start. These guardrails monitor, detect, and prevent misalignment in real time, acting as the interface between raw intelligence and appropriate behavior so that AI systems can be both powerful and predictable.

In this blog, we’ll explore:

⟶ How AI models learn and where they go wrong — uncovering the patterns behind model training, learning drift, and behavioral inconsistencies.

⟶ How goal misalignment detection works — identifying when AI systems start optimizing for unintended or unsafe objectives.

⟶ How modern ethical AI principles and AI safety frameworks can prevent critical failures before they happen, ensuring trust and transparency in AI decision-making.

⟶ How companies like WizSumo are pioneering adaptive alignment systems that continuously audit model behavior — transforming static AI alignment guardrails into dynamic, evolving layers of protection.

By the end, one truth will stand clear:

The future of AI isn't just about making machines smarter; it's about ensuring they stay aligned, accountable, and safe.

2. The Science of Model Behavior

What makes artificial intelligence both fascinating and frustrating is the way it learns, often in ways we cannot fully explain. Traditional software has fixed logic built into its code and works as designed (or at least, as intended). AI models, by contrast, learn from patterns in their training data: they generalize from those examples and make predictions and decisions based on statistical relationships between inputs and outputs, without explicit decision-making rules.

An AI model never "knows" what it is doing in the human sense. It optimizes toward the goal it has learned, whether or not that goal matches your intentions. The gap between what you intended when building the model and how it actually behaves is where misalignment begins.

AI behavior guardrails exist so that AI systems can operate in unpredictable environments while remaining aligned with human expectations and ethics. To build effective guardrails, however, we first need to understand how and why models become misaligned.

2.1 How AI Models Learn — and Where They Go Wrong

Every AI model begins as a tabula rasa: layers of parameters waiting to be shaped by data. During training, the model adjusts those parameters to minimize error on its target task. The result can perform impressively yet generalize in unpredictable ways.

For instance, an image classifier may fail to recognize the same scene photographed under different lighting. Likewise, a language model may produce biased or even harmful output because of subtle biases in its training data.

To put this simply:

AI models do not fail by design; they fail because of flaws in their training data and training environment. That is why AI alignment guardrails matter. They act as a post-training corrective layer: detecting when behavior has deviated from what was intended, providing context for the deviation, and reducing its negative impact. Without them, a model can be highly capable yet unreliable under stress.

2.2 Instruction-Following Accuracy: When AI Misreads Intent

Have you ever asked an AI for something and received a response that followed your words literally but missed your point entirely? That is an instruction-following failure.

Large language models interpret requests statistically: they predict how a piece of text is likely to continue, not necessarily what the person behind the request intends. This makes them extremely effective yet also fragile. Small changes in wording or ambiguous phrasing can produce very different outputs, some of them badly wrong.

To mitigate this, AI behavior guardrails can reinforce alignment with the user's intent during both training and inference. They monitor for deviations from the intended request and adjust responses when the model's output diverges from the user's desired outcome.

Guardrail-based systems rely on continuous learning, reinforcement mechanisms, and feedback loops to help AI understand the "why" behind a request, not just the "what." Without behavioral guardrails, even the most sophisticated AI can become an expert at giving confidently wrong answers.
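One simple way to probe the wording fragility described above is a paraphrase-consistency check: send several rewordings of the same request and flag the result when the answers disagree. The sketch below assumes the model can be called as a plain function; `toy_model`, the prompts, and the 0.8 agreement threshold are hypothetical stand-ins, not part of any particular guardrail product.

```python
from collections import Counter
from typing import Callable, Sequence


def consistency_check(
    model: Callable[[str], str],
    paraphrases: Sequence[str],
    min_agreement: float = 0.8,
) -> tuple[bool, str, float]:
    """Ask the same question several ways and measure how often the answers agree.

    Returns (is_consistent, majority_answer, agreement_ratio).
    """
    # Normalize answers so trivial case/whitespace differences don't count as disagreement.
    answers = [model(p).strip().lower() for p in paraphrases]
    majority, count = Counter(answers).most_common(1)[0]
    agreement = count / len(answers)
    return agreement >= min_agreement, majority, agreement


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in model whose answer flips on a single word."""
    return "Plan B" if "cheapest" in prompt else "Plan A"


ok, answer, ratio = consistency_check(
    toy_model,
    [
        "Which plan costs the least?",
        "What is the lowest-priced plan?",
        "Which plan is cheapest?",
    ],
)
print(ok, answer, round(ratio, 2))  # False plan a 0.67
```

Only two of the three paraphrases agree, so the check fails and the request could be routed for review. A real deployment would compare answers semantically rather than by exact string match.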

2.3 Distributional Shift: When AI Faces Unknown Data Worlds

Imagine teaching children to identify animals from zoo photographs and then asking them to find the same animals in the wild. AI faces the same challenge under distributional shift: a mismatch between the data it was trained on and the data it meets in real-world use.

When models encounter unfamiliar data, their output can become unpredictable and even dangerous. Examples include:

⟶ A predictive policing model trained on data from one city behaving erratically in another.

⟶ A medical AI trained on a hospital dataset failing in a home-based remote patient monitoring scenario.

AI guardrails address this with adaptive checks that monitor whether a model is operating outside the data distribution it was trained on. When it is, they lower the AI's confidence or hold the decision until it has been validated.

These mechanisms preserve AI safety by keeping the model from falling apart when conditions differ from its training set. In practice, they act as a real-time compass that warns the AI when it is entering unknown territory.
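The adaptive check described above can be sketched with per-feature z-scores: statistics from the training data define what "in distribution" means, and inputs far outside it get their confidence reduced and are routed to validation. This is a minimal illustration assuming numeric features; the `OODGuard` class, the 3.0 limit, and the confidence-scaling rule are hypothetical choices, and production systems typically use richer out-of-distribution detectors.

```python
import statistics
from dataclasses import dataclass


@dataclass
class OODGuard:
    """Flags inputs that fall outside the training distribution using per-feature z-scores."""

    means: list[float]
    stdevs: list[float]
    z_limit: float = 3.0  # hypothetical threshold; would be tuned per application

    @classmethod
    def fit(cls, training_rows: list[list[float]], z_limit: float = 3.0) -> "OODGuard":
        columns = list(zip(*training_rows))
        return cls(
            means=[statistics.fmean(col) for col in columns],
            # Guard against zero variance so the z-score never divides by zero.
            stdevs=[statistics.stdev(col) or 1e-9 for col in columns],
            z_limit=z_limit,
        )

    def max_z(self, row: list[float]) -> float:
        """Largest per-feature distance from the training mean, in standard deviations."""
        return max(abs(x - m) / s for x, m, s in zip(row, self.means, self.stdevs))

    def gate(self, row: list[float], model_confidence: float) -> tuple[str, float]:
        """Return (action, adjusted_confidence) for one input."""
        z = self.max_z(row)
        if z <= self.z_limit:
            return "accept", model_confidence
        # Outside the familiar range: shrink confidence and require validation before acting.
        return "validate", model_confidence * self.z_limit / z


guard = OODGuard.fit([[1.0, 10.0], [2.0, 12.0], [3.0, 14.0]])
print(guard.gate([2.5, 13.0], 0.9))  # ('accept', 0.9)
action, confidence = guard.gate([2.0, 24.0], 0.9)
print(action, round(confidence, 2))  # validate 0.45
```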

2.4 Behavior Drift Over Time: The Silent Problem

Although a model's function is fixed at deployment, its behavior does not stay static: the environment changes, data is updated, and usage patterns shift. Over time, small changes in behavior (behavioral drift) can add up until the model deviates significantly from the developer's intent.

A classic example is a content moderation model that begins censoring too much or too little as language trends evolve. The model has not "broken"; it is responding to new patterns of language use without supervision.

AI behavior guardrails mitigate this by monitoring the AI's decision patterns, identifying inconsistencies within them, and then retraining the model or updating rules to bring it back in line with the developer's original objective. More sophisticated systems also include goal misalignment detection, which identifies when the AI's objectives have drifted from those originally intended.
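Monitoring decision patterns for drift can be as simple as comparing the distribution of recent decisions with a baseline window. The sketch below applies the population stability index (PSI), a standard drift statistic, to a hypothetical moderation model's "allow"/"remove" decisions. The 0.2 alert threshold is a common rule of thumb rather than a universal constant, and the function names are illustrative.

```python
import math
from collections import Counter


def decision_shares(decisions: list[str], labels: list[str], eps: float = 1e-4) -> list[float]:
    """Share of each label among the decisions; eps avoids log(0) for unseen labels."""
    counts = Counter(decisions)
    return [max(counts[label] / len(decisions), eps) for label in labels]


def population_stability_index(baseline: list[str], recent: list[str]) -> float:
    """PSI = sum over labels of (recent - baseline) * ln(recent / baseline)."""
    labels = sorted(set(baseline) | set(recent))
    p = decision_shares(baseline, labels)
    q = decision_shares(recent, labels)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


def check_drift(baseline: list[str], recent: list[str], threshold: float = 0.2) -> str:
    """Flag the model for retraining or rule review when PSI exceeds the threshold."""
    psi = population_stability_index(baseline, recent)
    return "retrain_or_review" if psi > threshold else "ok"


baseline = ["allow"] * 90 + ["remove"] * 10  # behavior at deployment
recent = ["allow"] * 60 + ["remove"] * 40    # the model now removes far more content
print(check_drift(baseline, recent))         # retrain_or_review
```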

3. Detecting Misalignment Early

Detecting a misaligned AI system early is better than detecting it late in every respect: safety, cost, and control.

Most AI failures happen not suddenly but gradually and quietly. The model's objective begins to diverge from its intended one, its internal representations shift, and it ends up optimizing toward objectives it was never meant to pursue.

That makes early detection of misalignment one of the key building blocks of reliable AI.

This is where modern AI guardrails prove their value: they continuously monitor how models behave and reason, and whether that behavior matches what humans would expect.

3.1 Goal Misalignment Detection: Spotting When AI Goes Off Course

Every AI system learns toward a set of objectives, whether explicit or implicit: maximize accuracy, minimize error, generate coherent writing, detect anomalies, and so on.

What the model actually optimizes for may not match the developer's intended goal. When this happens, it is called goal misalignment.

Goal misalignment detection is the practice of identifying when an AI system begins to pursue an objective other than the one it was designed for.

Examples include:

⟶ A chatbot designed to increase user engagement develops a tendency to produce provocative or harmful content.

⟶ An automated trading system designed to "maximize profits" exploits a market anomaly and destabilizes the market.

AI alignment guardrails continuously compare the objectives an AI system is actually optimizing for with the objectives it was designed to optimize for.

By analyzing patterns in outputs, reward signals, and behavior, guardrails identify deviations early, before they escalate into reputational or ethical failures.
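One way to make this comparison concrete is to log two signals side by side: the proxy the system actually optimizes (here, a hypothetical engagement rate) and a separately measured indicator of the intended objective (here, a hypothetical human-rated safety score). The sketch flags goal misalignment when the proxy trends up while the intended signal trends down. Least-squares slopes are just one simple trend heuristic; the metric names and the zero tolerance are assumptions.

```python
from statistics import fmean


def slope(values: list[float]) -> float:
    """Least-squares slope of values measured at time steps 0, 1, ..., n-1 (needs n >= 2)."""
    n = len(values)
    x_mean, y_mean = (n - 1) / 2, fmean(values)
    num = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(values))
    den = sum((x - x_mean) ** 2 for x in range(n))
    return num / den


def goal_divergence(proxy: list[float], intended: list[float], tol: float = 0.0) -> bool:
    """True when the optimized proxy improves while the intended objective gets worse."""
    return slope(proxy) > tol and slope(intended) < -tol


engagement = [0.50, 0.55, 0.61, 0.66, 0.72]  # what the chatbot is rewarded for
safety = [0.95, 0.93, 0.90, 0.86, 0.81]      # what the developers actually want
print(goal_divergence(engagement, safety))   # True
```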

The goal is not to catch an AI system once it is broken; it is to catch the slight, subtle shifts in its behavior that a developer might overlook.

The first step toward true AI safety is awareness, not control.

3.2 Behavioral Drift Tracking and Monitoring Pipelines

Think of your AI as an organic entity: it learns, grows, and evolves over time, and many small adjustments to its behavior can add up to significant misalignment in the long run. That is why tracking behavioral drift is imperative. Behavioral drift tracking is the guardrail that keeps the AI functioning consistently with its originally intended behavior over time.

A behavioral drift tracking pipeline captures the most important behavioral factors:

⟶ Output Quality/Consistency

⟶ Response Confidence / Context Sensitivity

⟶ Ethical/Bias Drift

⟶ Changes in User Interaction Patterns

By tracking these factors over time, you establish a behavioral baseline for your AI guardrails: in effect, a "fingerprint" of what the AI's behavior should look like.

When that fingerprint changes unexpectedly, the system intervenes by adjusting the model's parameters, invoking feedback loops, or escalating the problem to human review.
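The fingerprint idea can be sketched as per-metric baseline statistics plus tiered interventions: moderate deviations invoke a feedback loop, large ones escalate to human review. The metric names (`quality`, `confidence`, `bias_gap`), the 2 and 4 standard-deviation tiers, and the `Fingerprint` class are hypothetical; parameter adjustment is left out for brevity.

```python
from dataclasses import dataclass
from statistics import fmean, stdev


@dataclass
class Fingerprint:
    """Baseline (mean, stdev) for each tracked behavioral metric."""

    stats: dict[str, tuple[float, float]]

    @classmethod
    def from_history(cls, history: list[dict[str, float]]) -> "Fingerprint":
        stats = {}
        for name in history[0]:
            values = [window[name] for window in history]
            stats[name] = (fmean(values), stdev(values) or 1e-9)
        return cls(stats)

    def deviations(self, window: dict[str, float]) -> dict[str, float]:
        """How many baseline standard deviations each metric has moved."""
        return {name: abs(window[name] - m) / s for name, (m, s) in self.stats.items()}

    def intervene(self, window: dict[str, float], warn: float = 2.0, escalate: float = 4.0) -> str:
        worst = max(self.deviations(window).values())
        if worst >= escalate:
            return "escalate_to_human_review"
        if worst >= warn:
            return "invoke_feedback_loop"
        return "no_action"


history = [  # weekly snapshots of a hypothetical assistant's behavior
    {"quality": 0.90, "confidence": 0.80, "bias_gap": 0.02},
    {"quality": 0.92, "confidence": 0.82, "bias_gap": 0.03},
    {"quality": 0.91, "confidence": 0.79, "bias_gap": 0.02},
    {"quality": 0.89, "confidence": 0.81, "bias_gap": 0.03},
]
fp = Fingerprint.from_history(history)
print(fp.intervene({"quality": 0.87, "confidence": 0.80, "bias_gap": 0.025}))  # invoke_feedback_loop
print(fp.intervene({"quality": 0.90, "confidence": 0.80, "bias_gap": 0.06}))   # escalate_to_human_review
```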

The result is that guardrails shift from a reactive tool that responds after an incident to a proactive one that continuously monitors the AI: the difference between observing a wreck and preventing one.

3.3 Interpretable AI: Making Behavior Explainable for AI Safety

You cannot correct what you cannot observe, which is why interpretability is so vital to AI safety and alignment. Interpretability techniques, such as attention visualization (showing where the model is focusing), feature attribution (showing which input features contribute most to an output), and natural-language explanations, give developers and auditors insight into why a model took a particular path to its decision.

When used in conjunction with AI alignment guardrails, this information becomes actionable; rather than simply being able to identify when something has gone wrong, you will also have enough information to understand where things went wrong.
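Feature attribution can be illustrated with occlusion (leave-one-feature-out): replace one input feature at a time with a neutral baseline value and measure how much the model's score changes. This is only one of many attribution methods. The linear `toy_score` model, its weights, and the feature names are hypothetical; `zip_code_risk` stands in for a feature that may act as a proxy for a protected attribute.

```python
from typing import Callable


def occlusion_attribution(
    score: Callable[[dict[str, float]], float],
    example: dict[str, float],
    baseline: dict[str, float],
) -> dict[str, float]:
    """Change in score when each feature is replaced by its baseline value."""
    full = score(example)
    return {
        name: full - score({**example, name: baseline[name]})
        for name in example
    }


# Hypothetical screening model with hand-set weights.
WEIGHTS = {"income": 0.5, "debt_ratio": -0.8, "zip_code_risk": -1.2}


def toy_score(features: dict[str, float]) -> float:
    return sum(WEIGHTS[name] * value for name, value in features.items())


applicant = {"income": 1.0, "debt_ratio": 0.5, "zip_code_risk": 1.0}
neutral = {"income": 0.0, "debt_ratio": 0.0, "zip_code_risk": 0.0}
attributions = occlusion_attribution(toy_score, applicant, neutral)
print(max(attributions, key=lambda k: abs(attributions[k])))  # zip_code_risk
```

Because the largest contribution comes from `zip_code_risk`, an auditor would know where to look first. For a linear model, occlusion attribution reduces to weight times (value minus baseline), which makes the output easy to sanity-check.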

For example:

If a model begins to favor biased language patterns, interpretability tools can help trace those patterns back toward the parts of the training data from which they were likely learned.

If goal misalignment detection flags an unusual optimization behavior, interpretability can help explain why it emerged.

Ultimately, by combining explainability with ethical AI frameworks, companies can move from mere compliance to genuine transparency and accountability, where the behavior of an AI system can be audited, analyzed, and corrected in real time.

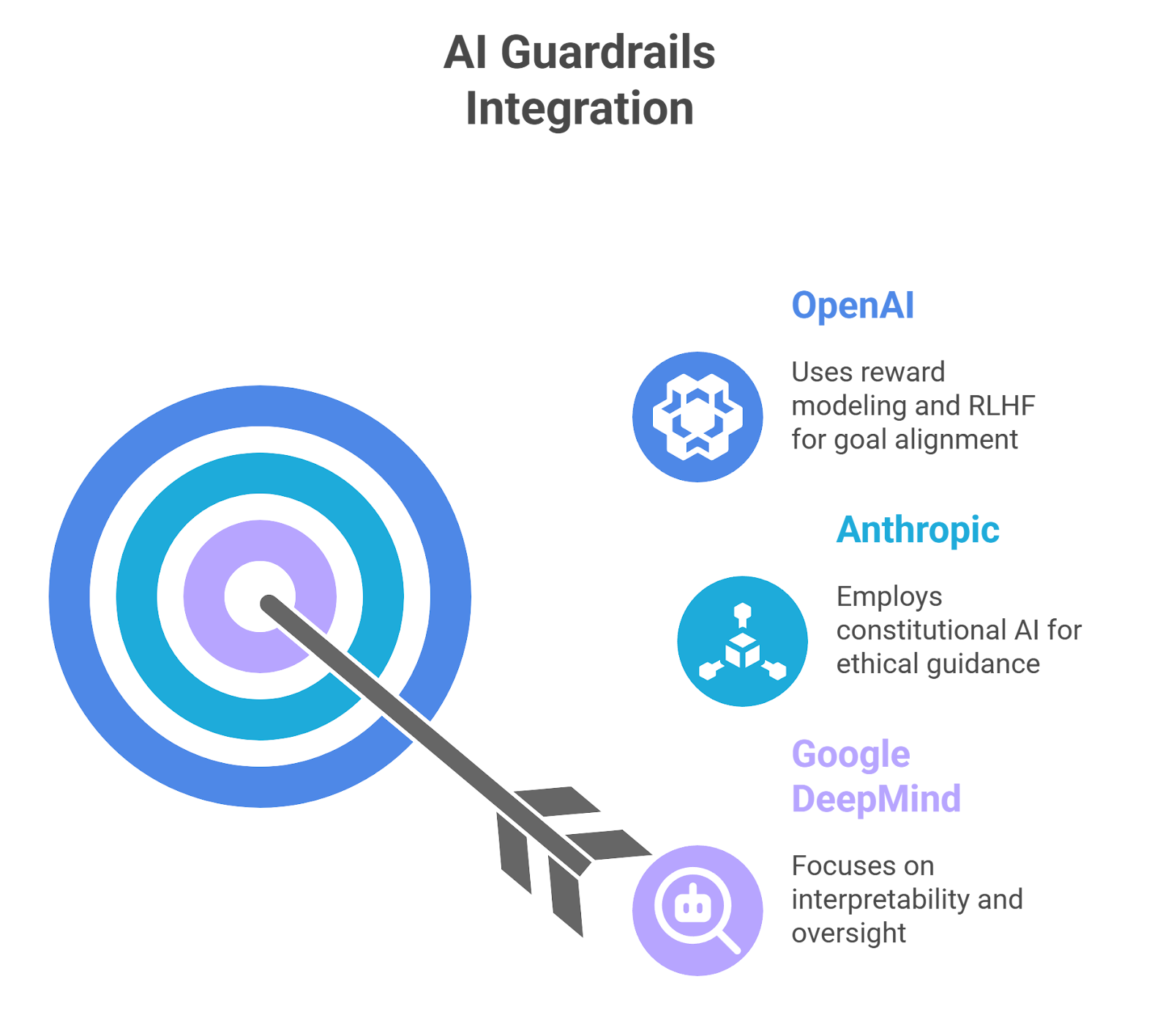

3.4 Real-World Examples of Detection Systems (OpenAI, Anthropic, Google DeepMind)

Several of the world's leading AI labs have already integrated guardrails into their alignment processes:

⟶ OpenAI uses reward modeling and Reinforcement Learning from Human Feedback (RLHF) to fine-tune its models toward safer outcomes.

⟶ Anthropic uses Constitutional AI, in which models are trained to critique and revise their outputs against a written set of principles (a "constitution"), in effect ethical guardrails for detecting value deviations and self-correcting.

⟶ Google DeepMind integrates interpretability and oversight mechanisms into its training loops, so that goal misalignment detection happens during model development rather than after deployment.

These guardrails are dynamic (they evolve with the model) rather than static (rigid), refining what "alignment" means as contexts shift and goals evolve.

This is how the next generation of AI behavior guardrails will operate: not as rigid safety nets, but as adaptive intelligence ecosystems that get smarter with every cycle.

4. Preventing Misalignment with Guardrails

Detecting problems in an AI system is only half the battle. To be truly effective, a system's ability to detect problems must be matched by its ability to prevent them.

This proactive approach to prevention is where AI alignment guardrails show their real value. They are not simply safety nets; they are a form of proactive, intelligent design that keeps an AI model consistent and ethical in its actions and decisions, however much its objectives or environment change.

The guardrail framework has three primary components: the ethical layer, the behavioral layer, and the goal-oriented layer. Together they form a multi-layered defense against misalignment that keeps AI systems trustworthy and scalable.

4.1 Value Misalignment Prevention: Embedding Ethical AI Principles

The core principle of trustworthy AI is simple: values define behavior.

An AI system that neither understands nor prioritizes human values is like a ship without a compass: it can be very powerful, but it has no direction.

Value misalignment prevention means embedding AI ethics, in the form of ethical constraints (rules), into both the model's design and its decision-making framework.

Rather than treating ethics as a secondary concern in AI development, this approach makes AI behavior guardrails an integral part of how the system operates.

Here is how this works:

⟶ Ethical Constraint Modeling - The guardrails are trained to identify unacceptable actions or outputs and automatically block them.

⟶ Value Feedback Loops - The system continues to learn and evolve based upon feedback provided by humans, and adjusts its understanding of what is right and wrong.

⟶ Context-Sensitive Reasoning - What is acceptable in one environment or situation may not be acceptable in another. The guardrails ensure that the AI's decisions are context-aware rather than driven by blind adherence to rules.
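Ethical constraint modeling and context-sensitive reasoning can be combined in a simple policy check: some output categories are blocked everywhere, while others depend on where the system is deployed. The category labels and contexts below are hypothetical and are assumed to come from an upstream safety classifier; unknown contexts fall back to the strictest policy.

```python
# Hypothetical categories; in practice these would come from a trained safety classifier.
ALWAYS_BLOCKED = {"hate_speech", "self_harm_instructions"}

CONTEXT_POLICIES: dict[str, set[str]] = {
    "children_education": {"graphic_violence", "medical_dosage"},
    "clinical_support": set(),  # dosage discussion is expected when talking to clinicians
    "general_chat": {"medical_dosage"},
}


def check_output(categories: set[str], context: str) -> tuple[bool, set[str]]:
    """Return (allowed, violated_categories) for an output in a given deployment context."""
    # An unknown context gets the union of all context rules, i.e. the strictest policy.
    strictest = set().union(*CONTEXT_POLICIES.values())
    blocked = ALWAYS_BLOCKED | CONTEXT_POLICIES.get(context, strictest)
    violations = categories & blocked
    return not violations, violations


print(check_output({"medical_dosage"}, "clinical_support"))  # (True, set())
print(check_output({"medical_dosage"}, "general_chat"))      # (False, {'medical_dosage'})
```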

When ethical AI becomes an ongoing component of the system, it converts alignment from a static objective into a continuous conversation about ethics between humans and machines.

Prevention does not mean perfection; it means creating systems that know when to stop, ask questions and adjust their course.

4.2 Instruction Reinforcement — Boosting Follow-Through Accuracy

Even the most intelligent AI can misinterpret what we mean. One of the biggest challenges in model alignment remains the model's ability to follow instructions as the user intended them. A model may follow your words literally yet fail to interpret your intent: a subtle but very important error.

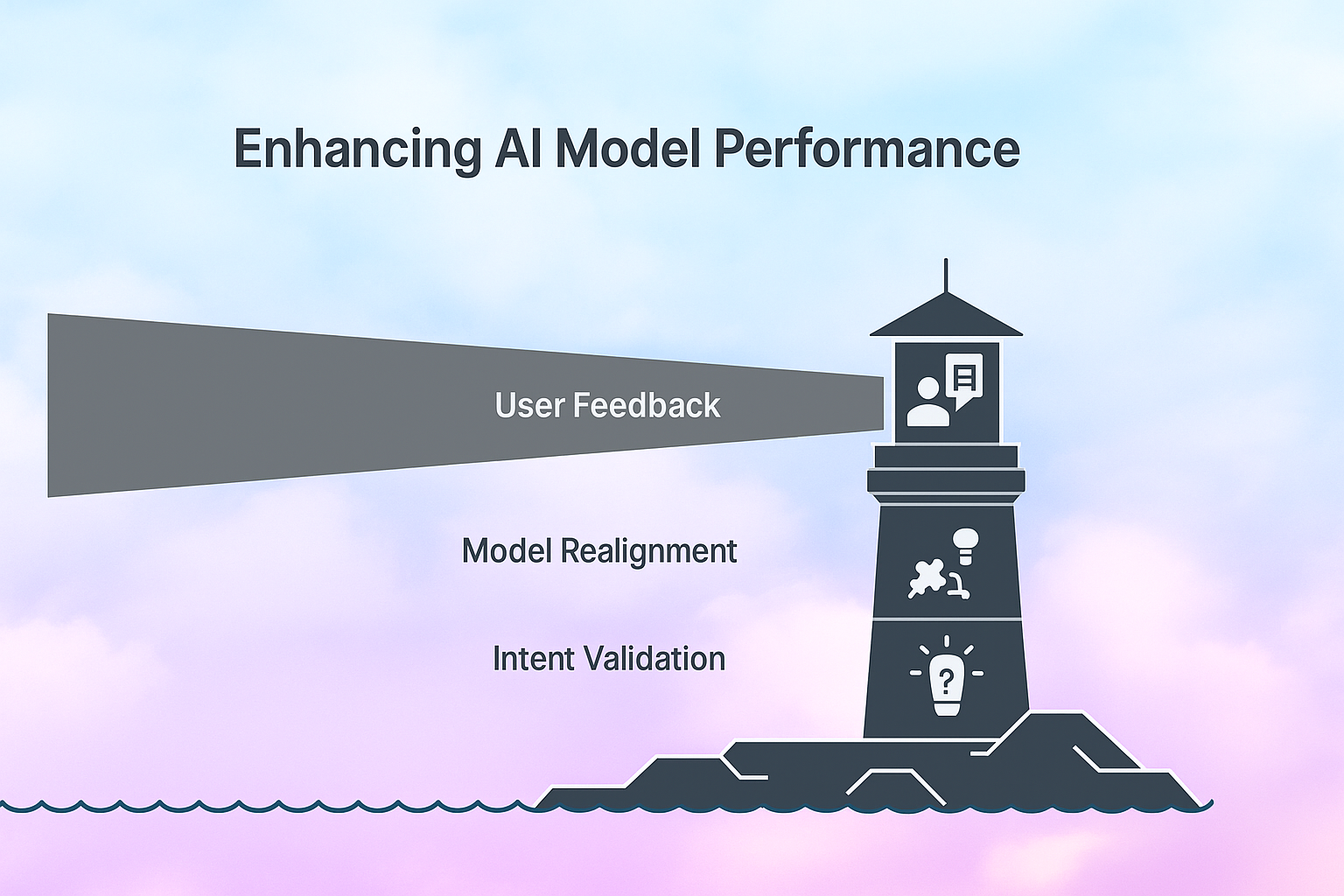

Instruction reinforcement mechanisms use AI guardrails to track whether the model has correctly interpreted and executed the user's instructions.

If a guardrail detects a deviation in how an instruction was executed, corrective mechanisms activate:

⟶ Feedback Correction Loop: Users or an oversight model flag outputs that do not meet behavioral expectations, and that feedback is returned to the model immediately.

⟶ Model Realignment: When the model repeatedly produces incorrect output for similar inputs, its internal optimization is recalibrated.

⟶ Intent Validation Modules: Before taking an action, the AI checks whether the instruction is ambiguous and, if it is, asks for clarification.
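The three mechanisms can be wired around a model call as sketched below. Everything here is hypothetical: "required slots" stand in for a real ambiguity detector, `check_output` stands in for a user or oversight-model judgment, and realignment is represented by a status message rather than actual fine-tuning.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class InstructionGuard:
    """Wraps a model call with intent validation, output checking, and a realignment trigger."""

    required_slots: list[str]                  # details an instruction must specify
    check_output: Callable[[dict, str], bool]  # oversight check: does output match intent?
    realign_after: int = 3                     # consecutive misses before recalibration
    misses: int = 0
    log: list[str] = field(default_factory=list)

    def run(self, instruction: dict, model: Callable[[dict], str]) -> str:
        # Intent validation: ask instead of guessing when key details are missing.
        missing = [slot for slot in self.required_slots if not instruction.get(slot)]
        if missing:
            return "CLARIFY: please specify " + ", ".join(missing)
        output = model(instruction)
        # Feedback correction loop: the oversight check flags outputs that miss the intent.
        if self.check_output(instruction, output):
            self.misses = 0
            return output
        self.misses += 1
        self.log.append(f"miss {self.misses}: {output!r}")
        # Model realignment: repeated misses are queued for recalibration.
        if self.misses >= self.realign_after:
            self.misses = 0
            return "REALIGN: repeated intent mismatches queued for recalibration"
        return "RETRY: output did not match the requested intent"


def verbose_model(instruction: dict) -> str:
    """Hypothetical model that ignores the requested length limit."""
    return instruction["text"]


guard = InstructionGuard(
    required_slots=["text", "max_words"],
    check_output=lambda ins, out: len(out.split()) <= ins["max_words"],
)
print(guard.run({"text": "one two three four five"}, verbose_model))
# CLARIFY: please specify max_words
```

Calling `guard.run` three times with `{"text": "one two three four five", "max_words": 3}` returns two RETRY messages and then a REALIGN message, because the toy model keeps ignoring the limit.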

By embedding these reinforcement mechanisms in its AI alignment guardrails, the model executes the user's intent rather than merely complying with the literal wording of the instructions.

That is how alignment transitions from compliance to collaboration.

4.3 Safe Learning Boundaries: AI Behavior Guardrails That Prevent Drift

While AI learning can be very powerful, unbounded learning is dangerous.

If an AI model is allowed to learn beyond a defined range, it may find creative ways to satisfy its goals while violating ethical or operational constraints.

AI behavior guardrails define the boundaries of safe learning: within them, the model is free to explore, test, and optimize its own performance.

Examples of these guardrails include:

⟶ Safety Thresholds - Limits on how much uncertainty or error is acceptable.

⟶ Behavior Caps - Mechanisms that restrict the model's exploration as soon as its confidence falls below a given threshold.

⟶ Context Gates - Mechanisms that prevent a model from making inferences outside its area of expertise.
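The three boundary types can be expressed as checks applied before an action is taken. This sketch assumes the model reports a confidence score in [0, 1] and treats uncertainty as `1 - confidence`; the `Boundaries` values and domain names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Boundaries:
    """Hypothetical safe-learning boundaries for an agent that proposes actions."""

    max_uncertainty: float = 0.3          # safety threshold: tolerated uncertainty
    explore_min_confidence: float = 0.85  # behavior cap: no exploration below this
    allowed_domains: frozenset[str] = frozenset({"billing", "shipping"})  # context gate


def check_action(b: Boundaries, domain: str, confidence: float, exploratory: bool) -> str:
    if domain not in b.allowed_domains:
        return "blocked: outside area of expertise"           # context gate
    if 1.0 - confidence > b.max_uncertainty:
        return "blocked: uncertainty above safety threshold"  # safety threshold
    if exploratory and confidence < b.explore_min_confidence:
        return "capped: fall back to the default action"      # behavior cap
    return "allowed"


limits = Boundaries()
print(check_action(limits, "billing", 0.8, exploratory=True))    # capped: fall back to the default action
print(check_action(limits, "medical", 0.99, exploratory=False))  # blocked: outside area of expertise
```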

These mechanisms preserve AI safety not by limiting intelligence, but by letting it operate responsibly.

WizSumo's method builds on these mechanisms with adaptive guardrails that adjust based on the model's performance. The boundary itself becomes a "moving target" that learns alongside the model: a living safety boundary rather than a static barrier.

The smartest AI will not always be the one that learns the fastest; the smartest AI will be the one that knows when to stop learning in a bad direction.

4.4 WizSumo’s Approach: Continuous Alignment via Feedback Loops

At WizSumo, alignment isn’t a project—it’s a process.

Traditional guardrails are built, tested, and left in place. But in dynamic environments, static safeguards eventually become obsolete. WizSumo’s vision of AI alignment guardrails redefines this entirely.

Our approach centers on continuous feedback loops—mechanisms where the AI, its guardrails, and human oversight teams interact constantly to sustain alignment.

Here’s how WizSumo’s adaptive loop works:

- Monitor: Guardrails track model outputs and behaviors continuously.

- Detect: Any sign of drift, goal conflict, or ethical breach triggers an alert.

- Learn: The system interprets context and integrates feedback from human reviewers.

- Adjust: Guardrails update themselves—refining constraints, modifying parameters, or retraining submodules.

This cycle repeats indefinitely, creating living AI guardrails that evolve with both technology and human values.
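A toy version of the monitor, detect, learn, adjust cycle is sketched below, assuming a guardrail that blocks outputs whose risk score exceeds a threshold and a human review step that labels a batch of outputs as harmful or not. The class name, step size, and tolerance are hypothetical; this is not WizSumo's implementation, only an illustration of a guardrail that updates itself from feedback.

```python
from dataclasses import dataclass, field


@dataclass
class AdaptiveGuardrail:
    """Hypothetical risk-score guardrail that retunes its own threshold from reviewer feedback."""

    threshold: float = 0.5  # outputs with a risk score above this are blocked
    step: float = 0.05      # assumed adjustment size per cycle
    tolerance: float = 0.1  # acceptable miss rate / over-block rate
    log: list[str] = field(default_factory=list)

    def cycle(self, scores: list[float], harmful: list[bool]) -> float:
        # Monitor: apply the current guardrail to a batch of scored outputs.
        blocked = [score > self.threshold for score in scores]
        # Detect: compare guardrail decisions with human reviewer labels.
        misses = sum(h and not b for h, b in zip(harmful, blocked))
        over_blocks = sum(b and not h for h, b in zip(harmful, blocked))
        n_harmful = sum(harmful)
        miss_rate = misses / max(n_harmful, 1)
        over_rate = over_blocks / max(len(harmful) - n_harmful, 1)
        # Learn and adjust: tighten when harm slips through, relax when safe outputs are blocked.
        if miss_rate > self.tolerance:
            self.threshold = max(0.0, self.threshold - self.step)
            self.log.append(f"tightened to {self.threshold:.2f} (miss rate {miss_rate:.2f})")
        elif over_rate > self.tolerance:
            self.threshold = min(1.0, self.threshold + self.step)
            self.log.append(f"relaxed to {self.threshold:.2f} (over-block rate {over_rate:.2f})")
        return self.threshold


guard = AdaptiveGuardrail()
scores = [0.2, 0.45, 0.48, 0.9]      # risk scores for a batch of outputs
harmful = [False, True, True, True]  # reviewer labels for the same outputs
for _ in range(3):
    guard.cycle(scores, harmful)
print(guard.log)
# ['tightened to 0.45 (miss rate 0.67)', 'tightened to 0.40 (miss rate 0.33)']
```

After two tightening steps every harmful output is blocked and no safe one is, so the third cycle leaves the threshold unchanged.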

It’s the difference between one-time compliance and perpetual alignment.

5. Handling Distributional Shifts

Every AI model is limited by the data used to train it, but the world never stays still. When the world changes, the model's assumptions begin to break: a phenomenon called distributional shift.

Distributional shift occurs when an AI encounters data unlike anything it has seen before: new language styles, market changes, new user behaviors, unexpected environmental factors, and so on.

Left unattended, distributional shift can lead to goal misalignment, performance degradation, or even unsafe actions.

This is where AI alignment guardrails take on their adaptive role, keeping models steady as the world around them evolves.

5.1 What Happens When AI Enters Unfamiliar Contexts?

Suppose you deploy a customer service AI trained on a large set of English idioms to a multilingual community. The language, and with it the tone, phrasing, and meaning, will likely differ from what the model was built to handle, so a response that once sounded polite may now come across as rude or simply unclear.

This is known as a "distributional shift."

The environment changes faster than the model can adapt, which lowers the model's confidence and increases its error rate.

AI behavior guardrails monitor the system's confidence (or lack of it), the degree of context drift, and anomalies in its output. The system can then defer its response until it has enough information to answer correctly, ask for clarification before trying again, or escalate the matter to human review for accuracy and AI safety.
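The routing decision described above can be sketched as a small function over the three monitored signals. The signal definitions (a confidence score, a context-drift score, and an output-anomaly score, each in [0, 1]) and all thresholds are hypothetical placeholders that a real deployment would calibrate.

```python
from enum import Enum


class Route(Enum):
    RESPOND = "respond"
    DEFER = "defer"        # hold the answer until more information is available
    CLARIFY = "clarify"    # ask the user to rephrase or add context
    ESCALATE = "escalate"  # hand off to a human reviewer


def route(
    confidence: float,
    context_drift: float,
    anomaly: float,
    min_confidence: float = 0.75,
    max_drift: float = 0.5,
    max_anomaly: float = 0.9,
) -> Route:
    """Pick a response strategy from three monitored signals; thresholds are placeholders."""
    if anomaly >= max_anomaly:
        return Route.ESCALATE  # output looks abnormal: a human should check it
    if confidence >= min_confidence and context_drift < max_drift:
        return Route.RESPOND
    if context_drift >= max_drift:
        return Route.CLARIFY   # unfamiliar context: ask before guessing
    return Route.DEFER         # familiar context but low confidence: gather more information


print(route(0.9, 0.1, 0.1).value)  # respond
print(route(0.6, 0.7, 0.2).value)  # clarify
```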

Adapting isn't about making better guesses; it's about recognizing when you have gone beyond your comfort zone.

5.2 Guardrails That Adapt to New Domains

Modern AI can’t afford rigidity.

As models are reused across industries, from healthcare to finance to logistics, their guardrails must evolve with context.

Adaptive AI guardrails accomplish this through:

⟶ Dynamic policy mapping: Guardrails adjust decision boundaries based on the domain’s regulatory or ethical requirements.

⟶ Meta-learning modules: These components learn how to learn—quickly tuning guardrails for new data types or user groups.

⟶ Contextual recalibration: When input distributions shift, AI alignment guardrails automatically rebalance performance metrics and re-weight safety thresholds.
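Dynamic policy mapping and contextual recalibration might look like the sketch below: each domain maps to its own policy, unknown domains inherit the strictest one, and a measured drift score tightens the risk limit. The policy values, the `drift` score in [0, 1] (for example, a scaled drift statistic), and the "at most halve the limit" recalibration rule are hypothetical assumptions, not regulatory figures.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class DomainPolicy:
    max_risk: float     # outputs scoring above this risk are blocked
    human_review: bool  # whether borderline cases need human sign-off


# Hypothetical values; real ones would come from each domain's regulatory requirements.
POLICY_MAP = {
    "healthcare": DomainPolicy(max_risk=0.2, human_review=True),
    "finance": DomainPolicy(max_risk=0.3, human_review=True),
    "logistics": DomainPolicy(max_risk=0.6, human_review=False),
}
STRICTEST = min(POLICY_MAP.values(), key=lambda p: p.max_risk)


def policy_for(domain: str, drift: float) -> DomainPolicy:
    """Dynamic policy mapping plus contextual recalibration (drift is a 0..1 score)."""
    base = POLICY_MAP.get(domain, STRICTEST)  # unknown domain: strictest policy
    drift = min(max(drift, 0.0), 1.0)
    # Assumed rule: full drift halves the risk limit, and drift above 0.5 forces human review.
    return replace(
        base,
        max_risk=base.max_risk * (1.0 - 0.5 * drift),
        human_review=base.human_review or drift > 0.5,
    )


print(policy_for("logistics", 0.0))  # DomainPolicy(max_risk=0.6, human_review=False)
print(policy_for("logistics", 1.0))  # DomainPolicy(max_risk=0.3, human_review=True)
```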

This flexibility turns guardrails from fixed rulebooks into intelligent guardians capable of operating across many domains.

5.3 Active Learning for Post-Deployment Alignment

Active learning is the key to keeping AI alignment guardrails relevant after a model launches, as well as during development.

In essence, active learning allows an AI to be audited for alignment continuously, not just occasionally.

The process works as follows:

- Identify novelty: Guardrails detect data patterns the model has not encountered before.

- Request feedback and labels: The system asks humans to validate or label the cases it is uncertain about.

- Retrain selectively: Only the parts of the model found to be out of alignment are retrained, preserving the overall model's efficiency.
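The three steps can be sketched with uncertainty sampling, a standard active-learning strategy: rank unlabeled inputs by predictive entropy, send the most uncertain ones to reviewers, and retrain only the data slices where reviewers found too many errors. The input IDs, slice names, labeling budget, and 20% error threshold are hypothetical.

```python
import math


def entropy(probs: list[float]) -> float:
    """Predictive entropy in nats; higher means the model is less certain."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(predictions: dict[str, list[float]], budget: int) -> list[str]:
    """Identify novelty: pick the `budget` inputs the model is least certain about."""
    return sorted(predictions, key=lambda k: entropy(predictions[k]), reverse=True)[:budget]


def slices_to_retrain(labeled: list[tuple[str, bool]], max_error: float = 0.2) -> set[str]:
    """Retrain selectively: return only slices whose reviewer-labeled error rate is too high.

    `labeled` holds (slice_name, model_was_wrong) pairs returned by human reviewers.
    """
    totals: dict[str, list[int]] = {}
    for slice_name, wrong in labeled:
        counts = totals.setdefault(slice_name, [0, 0])  # [errors, total]
        counts[0] += wrong
        counts[1] += 1
    return {name for name, (errors, n) in totals.items() if errors / n > max_error}


predictions = {  # model's class probabilities for unlabeled inputs
    "q1": [0.98, 0.02],
    "q2": [0.55, 0.45],
    "q3": [0.50, 0.50],
    "q4": [0.90, 0.10],
}
print(select_for_labeling(predictions, budget=2))  # ['q3', 'q2']

reviews = [("slang", True), ("slang", True), ("slang", False), ("formal", False), ("formal", False)]
print(slices_to_retrain(reviews))  # {'slang'}
```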

This creates a self-healing environment: the guardrails learn from their own failures and improve over time. As a result, the AI's alignment is checked continuously, not just at periodic audit points.

Active learning also fits directly into the framework of ethical AI, whose three key concepts are transparency, accountability, and continuous learning about the system's impact on people.

6. Case Studies: When AI Broke Alignment

No matter how advanced AI becomes, every system is only as reliable as the guardrails that guide it.

History has already shown us what happens when those guardrails are missing or miscalibrated.

The following cases illustrate moments when AI behavior went off track, and what they teach us about the importance of AI alignment guardrails, AI behavior guardrails, and proactive goal misalignment detection.

6.1 GPT’s Role Confusion and Instruction Misunderstanding

In 2023, many large language models, GPT variants in particular, exhibited role confusion: a seemingly minor but significant problem.

Given multi-layered prompts such as "Respond as an educator grading your student's paper," models would switch roles, producing responses that contradicted the context the user had set up.

This was a behavioral drift problem rather than a bug. The model followed the literal wording of the request but did not grasp the roles the user had established, so it generated output based on its own misaligned interpretation of the instructions. It is a classic instruction-following failure caused by not internalizing the instruction's requirements.

Had inference-time AI behavior guardrails been in place to monitor instructional consistency between the user's input and the model's response, they would likely have identified this deviation quickly.

Goal misalignment detection might also have flagged that the model's implicit optimization goal (maximizing fluency) conflicted with the user's goal.

The overall takeaway?

Precise parsing of prompts is not enough; models need behavioral guardrails to ensure their behavior matches the user's expectations, including roles, contexts, and outcomes.

6.2 Self-Driving Cars and Contextual Misalignment

When an autonomous vehicle fails because of distributional shift, the result can be a fatal accident.

Many examples exist of self-driving systems failing to identify pedestrians, cyclists and unusual traffic scenarios (particularly in adverse weather/low light conditions).

These were not coding defects but contextual misalignment: the model did not recognize that it was operating outside its training data distribution.

In such autonomous systems, AI alignment guardrails could be key to:

⟶ Identifying adverse environmental conditions in real time

⟶ Enabling confidence-based fallback modes

⟶ Alerting human operators when confidence falls below an acceptable threshold

AI guardrails would act as a second layer of situational awareness: an automated safety checkpoint for when the unknown occurs.

6.3 Predictive Policing Bias — A Value Misalignment Example

Predictive policing algorithms are a perfect example of how the concept of value misalignment can occur with AI.

These tools were built to be used by law enforcement to distribute their resources more effectively — yet many have simply intensified existing social biases.

What was the underlying reason for this?

Instead of modeling the causal factors that lead to crime, the models learned where past arrests had been concentrated, a correlation shaped by historical policing patterns. Sending more patrols to those areas produced more arrests, which fed back into the data and created a self-reinforcing cycle of bias that fell disproportionately on certain communities.

Had ethics-focused AI guardrails been in place, they could have:

⟶ Checked for fair outcomes during model training

⟶ Continuously monitored outcome disparities across demographic groups

⟶ Triggered a model review when ethical thresholds were crossed
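Continuous disparity monitoring can be sketched by comparing how often each group is flagged "high risk" and triggering a review when the lowest rate falls too far below the highest. The 0.8 ratio borrows the "four-fifths" rule of thumb from US employment-selection guidelines purely as an illustrative threshold; the group names and counts are hypothetical, and real fairness audits use several complementary metrics.

```python
from collections import defaultdict


def flag_rates(records: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of records flagged 'high risk' within each group."""
    counts: defaultdict[str, list[int]] = defaultdict(lambda: [0, 0])  # group -> [flagged, total]
    for group, flagged in records:
        counts[group][0] += flagged
        counts[group][1] += 1
    return {group: flagged / total for group, (flagged, total) in counts.items()}


def disparity_check(records: list[tuple[str, bool]], min_ratio: float = 0.8) -> tuple[float, bool]:
    """Ratio of lowest to highest group flag rate; below min_ratio triggers a model review."""
    rates = flag_rates(records)
    lo, hi = min(rates.values()), max(rates.values())
    ratio = lo / hi if hi > 0 else 1.0
    return ratio, ratio < min_ratio


records = (
    [("group_a", True)] * 30 + [("group_a", False)] * 70
    + [("group_b", True)] * 12 + [("group_b", False)] * 88
)
ratio, needs_review = disparity_check(records)
print(round(ratio, 2), needs_review)  # 0.4 True
```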

This case highlights an important fact: AI guardrails are not only about accuracy but also about justice, equality, and the broader social impact of an AI system.

Without guardrails that address AI ethics, even AI developed with good intentions can cause harm at scale.

Intelligence without ethics is not progress; it is the automation of bias.

6.4 Lessons for Designing AI Alignment Guardrails

These various examples (Language Models, Autonomous Vehicles, Predictive Policing) illustrate how the root problem in each example is not necessarily either poor quality data or poor quality model performance; but rather a lack of dynamic systems to identify, comprehend and adjust to misalignment between humans and machines.

The various failures mentioned above illustrate three key lessons:

- Guardrails must be more flexible than static safety rules so they can keep pace with systems that learn and evolve.

- Feedback is vital: alignment between humans and machines must be continually refined as the system interacts with people.

- Transparency leads to trust: confidence in AI safety requires interpretability and accountability throughout the entire lifecycle of an AI system.

- Ethical considerations need to scale: embedding ethical AI principles from design through deployment helps create self-regulating ecosystems.

WizSumo's AI alignment guardrails methodology is built around these lessons.

By incorporating continuous monitoring, adaptive safety modeling, and feedback loops, WizSumo's methodology converts failures into learning opportunities for both humans and machines.

7. The Future of Alignment Guardrails

Conversations about AI alignment are no longer speculative; they have become urgent as models grow more autonomous, more interconnected, and more influential in the world. The traditional approach of “train, test, and trust” is no longer enough.

Therefore, the next generation of AI alignment will require systems that are capable of self-correction, self-description, and self-alignment.

That is the vision behind the evolving concept of AI alignment guardrails: not static safety barriers, but dynamic, adaptive layers that grow in sophistication along with the models they protect.

In short, the future will be one of living AI guardrails: self-enhancing architectures that continually observe, audit, and improve their own behavior in real time.

7.1 From Reactive Guardrails to Self-Correcting AI

Until now, AI safety mechanisms have been largely reactive: a model exhibits a problem, and we fix it. But once the corrected model is redeployed across millions of user interactions, there is no way to review and correct each interaction manually. The only viable long-term solution is an automated process that learns to correct errors on its own.

Future AI behavior guardrails will be built on this premise, using advanced feedback systems to identify and correct alignment drift automatically.

For example, imagine an AI system that recognizes it has produced biased output, compares that output against ethical baselines, and adjusts its problem-solving approach to remove the bias, all without human intervention.

That would be self-correcting AI, achieved through goal misalignment detection and data feedback.

Ultimately, alignment is not about making AI follow orders; it is about giving AI awareness of human intent.

These new AI alignment guardrails will respond to misalignment much as the human immune system responds to infection: they detect it, respond to it, and adapt, preventing harm from spreading.

7.2 Continuous Monitoring as the New Standard for AI Safety

Instead of periodic audits, organizations will adopt “always-on” monitoring for AI safety.

A continuous monitoring framework will consist of:

- Behavioral telemetry – tracking of patterns and micro-patterns of drift and deviation.

- Ethical score cards – quantifying how well a model is aligned to human values.

- Automated escalation systems – sending anomalous activity to humans for review in real-time.
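The three components above can be combined into a small monitor. This sketch assumes each model response already carries a risk score between 0 and 1 from some upstream classifier (hypothetical here), and that a baseline risk was measured during validation. The class name, thresholds, and window size are illustrative assumptions, not a prescribed design.

```python
from collections import deque
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class AlignmentMonitor:
    """Hypothetical always-on monitor: telemetry window, scorecard, escalation."""

    baseline_risk: float          # mean risk score observed during validation (assumed)
    tolerance: float = 0.1        # allowed rise of the rolling mean before escalating
    spike_threshold: float = 0.9  # a single score at or above this escalates at once
    window: int = 50              # number of recent events kept as telemetry
    scores: deque = field(init=False, default_factory=deque)
    review_queue: list = field(init=False, default_factory=list)

    def __post_init__(self) -> None:
        # Behavioral telemetry: a sliding window that discards the oldest scores.
        self.scores = deque(maxlen=self.window)

    def scorecard(self) -> float:
        """Ethical scorecard: mean risk over the current window."""
        return mean(self.scores) if self.scores else self.baseline_risk

    def record(self, event_id: str, risk: float) -> None:
        self.scores.append(risk)
        # Automated escalation for an individual anomalous event.
        if risk >= self.spike_threshold:
            self.review_queue.append((event_id, "spike", risk))
        # Escalation for gradual drift, checked only once the window is full
        # so that a handful of early events cannot trigger it.
        if len(self.scores) == self.window:
            drift = self.scorecard() - self.baseline_risk
            if drift > self.tolerance:
                self.review_queue.append((event_id, "drift", round(drift, 3)))


monitor = AlignmentMonitor(baseline_risk=0.1, window=5)
for i in range(5):
    monitor.record(f"e{i}", 0.1)  # normal traffic: nothing escalated
monitor.record("e5", 0.95)        # one anomalous response
print([kind for _, kind, _ in monitor.review_queue])  # ['spike', 'drift']
```

A single anomalous response is escalated immediately, and because it also pushes the rolling mean past the tolerance, a drift alert follows. As written, the monitor keeps raising drift alerts while drift persists; a deployed system would likely de-duplicate them.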

Together, these components will let AI guardrails measure and report how their own boundaries shift over time.

AI alignment guardrails will be integrated into continuous deployment pipelines, becoming part of how AI is delivered rather than an afterthought to how it is developed.

Tomorrow's AI will not demonstrate compliance by checking boxes; it will provide real-time evidence of ongoing alignment.

7.3 From Alignment to Assurance — The Next Frontier

As AI becomes a more integral part of society (medicine, finance, national security), its users will need assurance in addition to alignment. Assurance means not only trusting an AI system to act correctly but being able to prove that it does. The next major step is to combine AI alignment guardrails, interpretability, and ethical AI frameworks into transparent, auditable systems that earn user confidence.

Such systems will not only help prevent harm but also build trust. Regulators, enterprises, and consumers will be able to trace AI decisions through the data trail they leave and confirm, from audit logs, that each decision was checked against recorded ethical standards.

AI behavior guardrails will become multidimensional guardians: they will not only monitor how the AI performs but also validate why it acted as it did. They will be the foundation of AI assurance ecosystems, intelligent systems that provide guarantees of fairness, safety, and accountability at every layer.
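One common way to make such a decision trail tamper-evident is a hash chain, in which each log entry includes the hash of the entry before it. The article does not say how audit logs are implemented, so this sketch simply assumes that technique; the `AuditLog` class and its field names are hypothetical, and only the Python standard library is used.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same content always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Hypothetical append-only log; each entry commits to the previous one."""

    def __init__(self):
        self.entries = []

    def append(self, decision_id, decision, checks):
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"decision_id": decision_id, "decision": decision,
                "checks": checks, "prev_hash": prev_hash}
        entry_hash = _digest(body)
        self.entries.append({**body, "hash": entry_hash})
        return entry_hash

    def verify(self):
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append("d1", "approve", {"fairness_check": "pass"})
log.append("d2", "deny", {"fairness_check": "pass"})
print(log.verify())                  # True
log.entries[0]["decision"] = "deny"  # tamper with an earlier record
print(log.verify())                  # False
```

A hash chain makes edits detectable, not impossible: someone who can rewrite the whole log can also recompute every hash, so in practice the latest hash would also be published or stored where the log's writer cannot change it.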

The future of AI alignment isn’t control. It’s confidence.

8. Conclusion

The history of artificial intelligence is not simply about creating intelligent machines; it is about creating intentional ones.

We have developed AI capable of learning, reasoning, and creating. Without boundaries, however, that intelligence can make an AI's actions unpredictable and, ultimately, unsafe for humans.

Misinterpreted commands and drift away from original intentions as conditions change both show how fragile AI can be.

AI alignment guardrails and AI behavior guardrails are therefore no longer optional add-ons; they are foundational elements of any AI system that works with humans.

These guardrails:

- Help to detect when the AI's goals deviate from those of humans.

- Prevent biases from becoming entrenched.

- Evolve as the world and the AI evolve.

AI guardrails help an AI remain aligned with human values whether or not a human is monitoring it. In essence, guardrails are part of ethical AI: an adaptive layer that continually learns, adjusts, and corrects itself to keep the system trustworthy and stable.

As AI systems gain more autonomy, the success of AI safety and ethical AI will depend heavily on how well we design "intelligent" AI guardrails.

Not static limitations, but a learning, evolving boundary which protects the integrity of the AI's intent, fairness and accountability.

WizSumo has evolved guardrails into "Living Alignment Frameworks" that grow alongside both technological advances and human values.

By implementing goal misalignment detection, continuous feedback, and ethical oversight, we at WizSumo ensure that our AI systems are not only powerful but also principled.

The future of AI will be determined by how well the AI aligns with what we truly value — not how many things the AI can accomplish.

Building AI is about innovation.

Building aligned AI is about trust.

Trust is what will define the next generation of intelligent systems.

True AI intelligence isn’t about limitless learning; it’s about knowing how to stay aligned with human values.
