AI Guardrails for Transparency & Explainability: The New Rulebook for Responsible AI Governance

Key Takeaways

1. Introduction: The Hidden Walls of AI — Why Transparency & Explainability Matter

Artificial intelligence is now woven into the decisions that shape our lives — from what we watch and buy, to how we’re hired, diagnosed, or even judged. Yet, beneath all this progress lies an uncomfortable truth: we often have no idea how AI reaches its conclusions. Its logic sits behind invisible walls, known to only a handful of engineers or not even to them.

AI transparency is emerging as one of the greatest ethical and operational challenges in technology today. Reliance on AI systems that cannot fully explain their reasoning is widespread, yet people are reluctant to trust AI that offers no explanation. Governments hesitate to approve such deployments, businesses hesitate to adopt them because they cannot vouch for their reliability, and users hesitate to accept their results.

AI explainability can serve as the bridge between complex algorithms and human understanding. Many people ask: what is meant by AI transparency, and why is it so important? The answer is straightforward: transparency leads to trust in AI systems. When individuals can see how an AI system was trained, the data it used, and the limitations it has, they are significantly more likely to trust its outputs.

However, achieving AI transparency is not simply a matter of providing access to code or data. It also requires structures, disciplines, and ethical guidelines, known as AI guardrails. These guardrails are the "invisible rails" that keep AI behavior explainable, auditable, and accountable.

In recent years, several prominent AI organizations have begun developing AI guardrails in the form of model card documentation, training data disclosures, capability and limitation statements, and decision explainability frameworks. Collectively, these represent a new ecosystem of trust that allows AI systems to be examined, understood, and improved without stifling innovation.

As AI grows in power and takes on increasingly critical decisions, AI transparency and AI explainability are no longer desirable features; they are non-negotiable. Both are foundational to the future development of responsible AI.

What This Blog Covers

In this blog, we’ll explore how AI guardrails can make artificial intelligence systems more transparent, explainable, and trustworthy.

You’ll discover:

⟶ Why AI transparency and AI explainability are essential for trust in AI systems

⟶ How lack of transparency has led to real-world failures and ethical concerns

⟶ The key AI guardrails that ensure accountability — including model cards, training data disclosure, and decision explainability

⟶ How organizations like Google, OpenAI, and Anthropic are implementing transparency guardrails

⟶ And finally, how governance guardrails and global regulations are shaping the path to responsible AI

By the end, you’ll see that transparency isn’t just about openness — it’s about creating AI that people can trust.

2. The Transparency Imperative: Understanding the Core of Trust

When people talk about AI transparency and AI explainability, they are often addressing the same root concern: can we understand why an AI made a certain decision? In an age where algorithms screen resumes, detect diseases, and recommend bail, this isn’t a technical curiosity; it’s a question of accountability.

2.1 Why AI Transparency and AI Explainability Matter

Many people are still trying to figure out what AI transparency really is, and why it is so important.

For most people, AI transparency is simply visibility. In other words, you need to be able to see into an AI system’s inner workings: what data it was trained on, what assumptions it embeds, and what its limitations are. Without this visibility, users have no choice but to treat AI as a “black box”, which ultimately erodes user confidence and raises risk.

The next commonly asked question is: what does AI explainability actually mean in plain English?

If AI transparency is all about “seeing”, then AI explainability is all about “understanding.” AI explainability is the ability to analyze a model's reasoning, that is, to trace how the inputs to an AI system relate to its final output. For example, if an AI-based credit-scoring model refuses a loan application, explainability would tell both the applicant and the lender which factors most influenced the decision.
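As a minimal sketch of what such an explanation could look like, assume a simple linear scoring model in which each feature's contribution is its weight times the applicant's deviation from a typical value. The feature names, weights, and baseline values below are hypothetical and chosen only for illustration; real credit models and their reason codes are more involved.

```python
from typing import Dict, List, Tuple

# Hypothetical weights for an illustrative linear credit score.
WEIGHTS: Dict[str, float] = {
    "payment_history": 0.5,
    "debt_to_income": -0.75,
    "credit_age_years": 0.25,
}

# Hypothetical "typical applicant" values used as the reference point.
BASELINE: Dict[str, float] = {
    "payment_history": 0.5,
    "debt_to_income": 0.5,
    "credit_age_years": 8.0,
}


def contributions(applicant: Dict[str, float]) -> Dict[str, float]:
    """Per-feature contribution to the score, relative to the baseline."""
    return {
        name: WEIGHTS[name] * (applicant[name] - BASELINE[name])
        for name in WEIGHTS
    }


def top_reasons(applicant: Dict[str, float], k: int = 2) -> List[Tuple[str, float]]:
    """Return up to k features that pulled the score down the most."""
    ranked = sorted(contributions(applicant).items(), key=lambda item: item[1])
    return [(name, value) for name, value in ranked if value < 0][:k]
```

Calling `top_reasons` on a declined applicant yields the factors that lowered the score the most, which is the kind of information both the applicant and the lender would want to see.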

Together, AI transparency and AI explainability establish the fundamental principles of trust in AI systems. Without them, users, auditors, and even developers cannot evaluate whether the decisions made by an AI system are reasonable, dependable, or safe. Transparency provides insight into how an AI system operates, while explainability lets the person relying on that system understand the reasoning behind a particular decision. Both are key to establishing responsible AI.

Organizations that lack these two attributes may face consequences beyond technological failure, such as social backlash, regulatory action, and decreased public confidence. Conversely, when an AI system is transparent and explainable, it is accountable, governable, and, most importantly, trusted.

2.2 How Transparency Builds Responsible AI

As a guardrail within the larger ecosystem of AI ethics, transparency is the first line of defense against unethical behavior by AI systems. Transparent AI systems require that decisions be documented to some degree, which limits the room for hidden biases or errors. They also enable development teams to ask why a model behaves in a particular way, and that inquiry alone may prevent harm to individuals and to society at large.

Frameworks such as the European Union's Artificial Intelligence Act (EU AI Act), the National Institute of Standards and Technology's (NIST) AI Risk Management Framework, and the Organisation for Economic Co-operation and Development's (OECD) AI Principles all treat AI transparency and AI explainability as core expectations. Some of these are binding law and others are voluntary guidance, but together they signal that transparency and explainability are no longer optional: they rest on both legal and moral obligations.

Transparency also facilitates innovation. As teams develop a better understanding of how their models learn and function, they can develop, debug, and scale those models faster and with greater success. Ultimately, transparency is beneficial not only for ethics but also for performance, governance, and sustainability.

Finally, AI transparency and AI explainability do not merely make AI systems understandable; they also make them trustworthy. Together, they are the foundation for creating responsible AI.

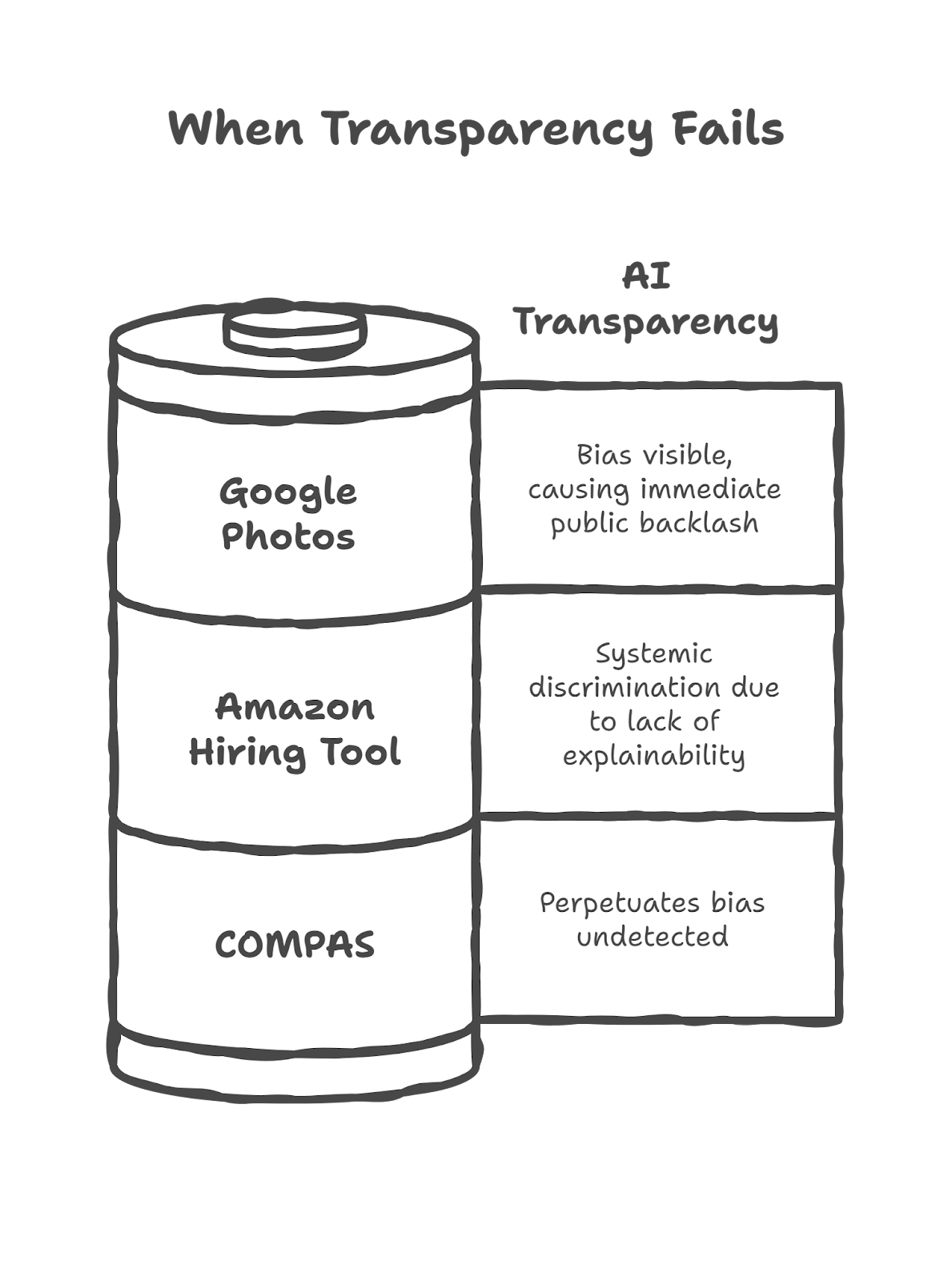

3. When Transparency Fails: Lessons from the Real World

The world has already seen what happens when artificial intelligence operates without AI transparency or AI explainability. Hidden decisions, unseen biases, and opaque outcomes have led to some of the most publicized ethical and technical failures in AI history. Each of these incidents teaches one clear lesson: without visibility, there can be no accountability.

3.1 The Cost of Opacity

One of the most widely cited examples of a lack of AI transparency is COMPAS, a risk-assessment algorithm used in the U.S. criminal justice system to estimate the likelihood that a defendant will re-offend. Judges have relied on its risk scores when making bail and sentencing decisions. Researchers who wanted to study how COMPAS worked could not do so, because the company that developed it treated its internal workings as proprietary. A 2016 ProPublica analysis nevertheless found that the scores were biased against African American defendants: Black defendants who did not go on to re-offend were far more likely to have been rated high risk than comparable white defendants. Because the internal workings were not transparent, it was almost impossible for anyone to determine whether the disparity came from the algorithm itself or from the data fed into it. The case shows how dangerous it can be to rely on a black box system.

Another example occurred when Amazon built an experimental AI tool to help screen job applicants. The company's own engineers eventually found that the system was biased against women and favored male applicants. The cause was the training data: the system learned from roughly a decade of resumes submitted to the company, most of which came from men, so it learned to prefer male candidates. Because the system was not explainable, the bias went unnoticed until its results were reviewed. Amazon ultimately abandoned the tool, an expensive lesson in what can happen when the importance of AI explainability is ignored.

Google Photos is perhaps the best-known example. The service uses image recognition to label the contents of photographs, including people. In 2015, it labeled photographs of Black people as "gorillas." The reaction was immediate and widespread. Google apologized publicly and removed the offending label from the feature. As with Amazon and COMPAS, the problem was twofold: biases inherent in the data used to train the model, and a lack of transparency about how the model made its classification decisions, which kept those biases hidden until users encountered them.

Each of these three failures had serious consequences for the organizations involved: reputational damage, regulatory scrutiny, and public criticism of their AI applications. They also damaged the general public's perception of AI and diminished confidence in AI applications.

3.2 What We Learn from These Failures

The three problems listed above are evidence of the significant costs associated with a lack of AI transparency. When an AI application operates without AI guardrails such as model documentation, disclosure of the data used to train the model, and capability disclosure, the risk of developing an AI application that contains embedded bias, produces unjust results, and diminishes the confidence of the end-user increases dramatically. The lack of transparency creates a situation in which even the most advanced AI application can become a liability.

If these organizations had implemented AI guardrails such as open model documentation, training data transparency, and capability disclosures, developers could have identified data imbalance issues prior to deployment, and users could have understood why specific outputs were generated. Such practices would also have provided evidence of responsible AI.
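A pre-deployment imbalance check of the kind described above can be quite small. The sketch below is one possible approach, not a complete fairness audit: it computes the share of positive labels per group in a training set and the ratio between the lowest and highest rate. The column names `gender` and `hired` in the usage note are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Mapping


def selection_rates(
    rows: Iterable[Mapping[str, object]], group_key: str, label_key: str
) -> Dict[object, float]:
    """Fraction of positive labels for each group in the data."""
    counts = defaultdict(lambda: [0, 0])  # group -> [positives, total]
    for row in rows:
        bucket = counts[row[group_key]]
        bucket[0] += 1 if row[label_key] else 0
        bucket[1] += 1
    return {group: pos / total for group, (pos, total) in counts.items()}


def disparity_ratio(rates: Mapping[object, float]) -> float:
    """Lowest group rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0
```

For example, `disparity_ratio(selection_rates(rows, "gender", "hired"))` returns a value near 1.0 for balanced historical data and a much lower value when one group was rarely selected. A low ratio does not prove the model will be biased, but it is a signal worth investigating and documenting before training on that data.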

However, there is another level of guardrail that can be established for AI systems: governance guardrails. Organizations can establish internal policies that require transparency, such as audits of AI applications and ethics review boards for new AI applications. When organizations implement such governance guardrails, the risk of AI applications producing biased or unethical results decreases significantly. Governance guardrails do not simply regulate AI applications; they guide their development.

These failures demonstrate one thing: developing and deploying AI applications without transparency is not simply a gap in knowledge; it is a failure of the responsibility that comes with deploying AI. The ability of organizations to build trust in AI applications will depend on how well they learn from the mistakes described above and embed transparency throughout the entire AI development cycle.

4. AI Guardrails: The Framework of Transparent Intelligence

When we talk about creating AI that’s ethical, understandable, and fair, one principle rises above all others: control through clarity. That’s where AI guardrails come in. They are not just constraints; they are design principles that ensure AI transparency, maintain AI explainability, and protect trust in AI systems from being compromised.

4.1 What Are AI Guardrails?

For many teams, the most significant question is: how do AI guardrails support transparency for AI systems? One of the easiest ways to understand AI guardrails is as protective frameworks that align an AI system's behavior with a team's expectations and ethics.

Just as physical guardrails on highways protect vehicles from veering off course, AI guardrails protect an AI model from drifting into areas that may be hazardous, biased, or unexplainable.

Guardrails also define what an AI Model can do (the allowable actions), should do (the best practices), and cannot do (prohibited actions).

There are several levels at which an AI System can have guardrails:

⟶ Data Guardrails - establish requirements for the quality, diversity, and transparency of the data used to train an AI System.

⟶ Model Guardrails - provide boundaries for how an AI Model will operate, and define the level of explainability required.

⟶ Deployment Guardrails - enforce adherence to usage policies, human oversight and ethical monitoring of the deployment of an AI System.

AI guardrails therefore serve as the architectural foundation for an accountable AI system. They make decisions traceable, reasoning interpretable, and outcomes justifiable, which in turn enables trust in AI systems.

Without AI guardrails, even an AI system built with good intentions can produce results that are opaque and potentially harmful. Transparency is not simply about revealing the contents of the "black box." It is about establishing a structure so that when the box is opened, it is safe.

4.2 Governance Guardrails and Responsible Oversight

Providing transparency requires governance as well as technology. Most leading companies today are implementing governance guardrails: formal oversight mechanisms that maintain AI transparency and AI explainability throughout all phases of the AI lifecycle.

These governance guardrails function at the intersection of ethics, compliance, and engineering. They determine who is responsible for a decision made by AI, how AI is kept transparent, and what documentation must exist prior to deployment. For example, many companies conduct an internal Model Impact Assessment or Explainability Audit before launch, a governance step that ensures every AI model passes a transparency test before going live.
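One way to make such a pre-launch transparency test concrete is a release gate that refuses deployment until the required documentation exists. The sketch below is illustrative only: the artifact names are hypothetical, and a real review would check the quality of each document, not just its presence.

```python
from typing import Dict, List

# Hypothetical list of documents a governance policy might require.
REQUIRED_ARTIFACTS = (
    "model_card",
    "training_data_summary",
    "limitation_statement",
    "explainability_report",
)


def missing_artifacts(submitted: Dict[str, str]) -> List[str]:
    """Names of required artifacts that are absent or empty."""
    return [
        name
        for name in REQUIRED_ARTIFACTS
        if not str(submitted.get(name, "")).strip()
    ]


def may_deploy(submitted: Dict[str, str]) -> bool:
    """True only when every required artifact has been provided."""
    return not missing_artifacts(submitted)
```

Wired into a deployment pipeline, a check like this turns "documentation must exist prior to deployment" from a policy statement into an enforced step, and `missing_artifacts` tells the team exactly what is still outstanding.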

Additionally, these guardrails reinforce responsible AI practices by establishing formal reviews, interpretability standards, and data disclosure policies. In this way, governance transforms transparency from an abstract concept into a tangible operational practice.

Regulatory frameworks such as the EU AI Act and the NIST AI RMF are beginning to establish these same expectations, so AI transparency is becoming both a legal and an ethical requirement. Companies that do not establish these layers of governance risk not only noncompliance but also the loss of public trust, a much larger price to pay over time.

While AI guardrails focus on how systems are built and behave, governance guardrails ensure those systems remain accountable and explainable throughout their lifecycle. Together, they form the twin pillars of responsible AI — aligning innovation with integrity.

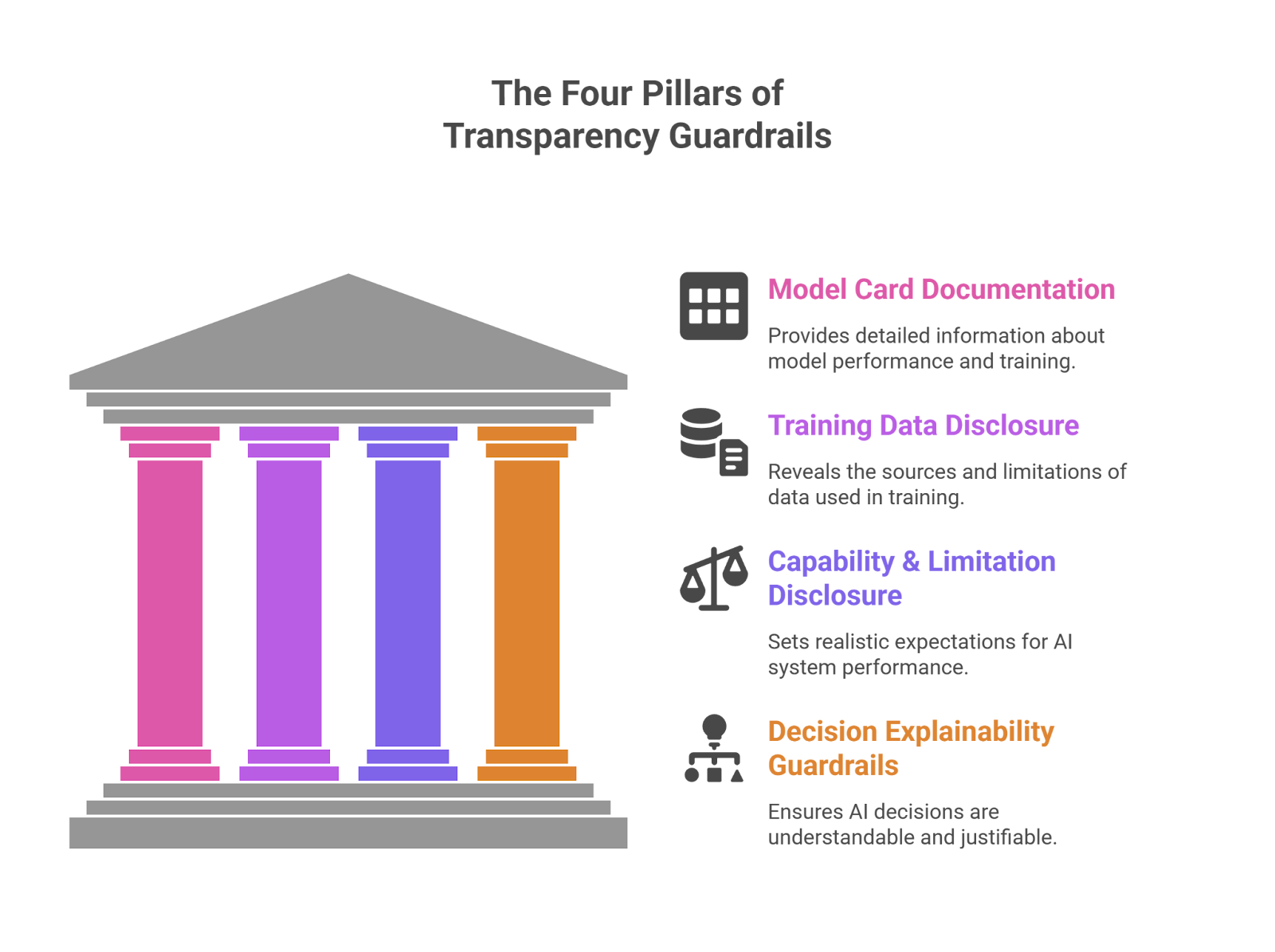

5. The Four Pillars of Transparency Guardrails

For organizations truly committed to responsible AI, talk alone isn’t enough; they need structure. That structure comes from four fundamental mechanisms that serve as the backbone of AI transparency and AI explainability. These are not just optional tools; they’re operational necessities that define how transparent, interpretable, and trustworthy a model can be.

5.1 Model Card Documentation: Turning Models into Open Books

One of the most practical and widely adopted transparency mechanisms is model card documentation. Originally proposed by Google AI researchers, model cards function like a nutritional label for machine learning models — describing what the model does, how it was trained, where it performs well, and where it doesn’t.

Many organizations now view model cards as their first real AI guardrails. They make AI transparency tangible by revealing what a model is intended to do, how it was trained, where it performs well, and where it does not.

This is where a common reader question arises: “How do model cards improve AI transparency?” The answer lies in clarity. When teams, regulators, or even end-users can easily access this documentation, they can evaluate whether an AI system aligns with ethical and performance expectations.

Model cards not only enhance trust in AI systems but also support auditability — they ensure every version of a model has a traceable record of what it was designed to do. In this way, model cards operationalize AI explainability and bring governance into everyday AI practice.
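To show how lightweight a model card can be in practice, here is a minimal sketch of one as a Python dataclass that serializes to JSON so it can be versioned alongside the model. The field names are illustrative assumptions that loosely follow the questions described above; they are not a standard schema.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class ModelCard:
    # Illustrative fields only; real model cards usually carry more detail.
    name: str
    version: str
    intended_use: str
    training_data_summary: str
    performs_well_on: List[str] = field(default_factory=list)
    known_limitations: List[str] = field(default_factory=list)

    def to_json(self) -> str:
        """Serialize the card so it can be stored next to the model."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)

    def is_complete(self) -> bool:
        """Treat a card without stated limitations as incomplete."""
        return all(
            [
                self.intended_use.strip(),
                self.training_data_summary.strip(),
                self.known_limitations,
            ]
        )
```

Requiring `is_complete()` to pass before release is one simple design choice that forces every model version to state its limitations explicitly rather than leaving them implied.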

5.2 Training Data Disclosure: Opening the Black Box of Data

Training data disclosure is a major AI guardrail and has become one of the most important factors in how transparent an organization's use of AI really is.

By providing information on the type(s) of data used, the source(s) of that data, and the potential limitations or biases contained within them, organizations can transform their models from "black box" systems to transparent and accountable systems.

Today, most developers understand that the level of data transparency directly affects AI explainability. The more transparent an organization is about the data used to develop an AI system, the clearer the reasoning behind that model becomes. The LAION initiative, whose open datasets have been used to train image-generation models such as Stable Diffusion, has taken steps to make its datasets both publicly accessible and documented. This has improved trust in AI systems by allowing the community to independently inspect the datasets.

Of course, there’s a balance to strike. Many teams face the question — how can we be transparent about training data without exposing sensitive or proprietary information? The answer lies in structured disclosure: publishing metadata, data categories, and sourcing principles, even when raw data can’t be shared.
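Structured disclosure can be sketched as a function that publishes only aggregate metadata about a dataset and never the raw records. The record fields used here (`category`, `license`, and a `text` field that is deliberately left out of the summary) are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, Mapping


def disclosure_summary(records: Iterable[Mapping[str, str]]) -> Dict[str, object]:
    """Aggregate counts by category and license; raw content is never copied."""
    records = list(records)
    categories = Counter(record["category"] for record in records)
    licenses = Counter(record["license"] for record in records)
    return {
        "total_records": len(records),
        "by_category": dict(sorted(categories.items())),
        "by_license": dict(sorted(licenses.items())),
    }
```

The summary can be published in a model card or data statement. Whether counts alone are safe to release still depends on the dataset; very small categories, for instance, may need to be merged or suppressed.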

This balance between openness and protection is a cornerstone of responsible AI. It ensures visibility without risking privacy or intellectual property, aligning perfectly with modern governance guardrails.

5.3 Capability & Limitation Disclosure: Setting Honest Boundaries

Capability and limitation disclosures are another important yet commonly underused tool for increasing transparency into an organization's AI.

This means setting realistic expectations about how well an AI system will perform and where it is likely to fail.

Without disclosure of a system's capabilities and limitations, end-users will either place too much faith in the AI and over-rely on it, or misapply it, leading to significant damage down the line. Such disclosure lets all stakeholders understand how confident the system is, when additional human oversight is needed, and which conditions may lead to model drift or errors.
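A disclosed limitation becomes more useful when the system enforces it at runtime. The following sketch routes each prediction based on a declared confidence threshold and a declared scope. The policy fields and the use of input language as the scope check are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class LimitationPolicy:
    # Hypothetical disclosed limits: a confidence floor and supported languages.
    min_confidence: float
    supported_languages: FrozenSet[str]


def route(confidence: float, language: str, policy: LimitationPolicy) -> str:
    """Decide how a single prediction should be handled."""
    if language not in policy.supported_languages:
        return "out_of_scope"  # the model was never validated for this input
    if confidence < policy.min_confidence:
        return "human_review"  # below the disclosed confidence floor
    return "auto"
```

Keeping the policy in a small, explicit object means the same numbers can appear in the public limitation statement and in the code that enforces them.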

Many organizations now recognize that clearly disclosing the limitations of an AI system is not a sign of weakness; it is a demonstration of their commitment to responsible AI.

Capability and limitation disclosures therefore also act as a form of behavioral AI guardrail: they prevent end-users from misusing the AI, promote its safe use, and increase trust in AI systems through transparency and honesty.

5.4 Decision Explainability Guardrails: Making AI Reasoning Visible

A common question among developers is: how can decision explainability guardrails prevent AI misuse? The answer lies in interpretability. Decision-level guardrails ensure that every AI prediction or action can be understood and justified after it happens, or even while it’s happening.

AI explainability techniques like SHAP, LIME, and Integrated Gradients give insight into which features most influenced an AI’s output. When embedded as guardrails, they provide an ongoing audit trail of reasoning.
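SHAP is built on Shapley values, and the underlying idea can be shown without any library. The sketch below is not the SHAP package; it is a brute-force, exact Shapley computation in plain Python for a toy model, where an "absent" feature is replaced by a baseline value (one common assumption among several). Its cost grows exponentially with the number of features, so it is only suitable for small illustrations.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence


def shapley_values(
    model: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> List[float]:
    """Exact Shapley attribution of model(x) - model(baseline) to each feature."""
    n = len(x)

    def value(present: set) -> float:
        # Features not in `present` are replaced by their baseline value.
        mixed = [x[i] if i in present else baseline[i] for i in range(n)]
        return model(mixed)

    attributions = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                present = set(subset)
                phi += weight * (value(present | {i}) - value(present))
        attributions.append(phi)
    return attributions
```

The attributions sum to the difference between the model's output for `x` and for the baseline, which is what makes them usable as an audit trail: every unit of the prediction is assigned to some input feature.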

Imagine a healthcare AI that predicts patient risk. With explainability guardrails, clinicians don’t just see the result — they see why the model made that prediction. That transforms blind automation into a collaborative, transparent decision-making process.

Moreover, explainability guardrails can feed into governance guardrails by flagging when a model’s decisions drift outside expected patterns or compliance zones. This closes the feedback loop between technology and oversight — a crucial factor in achieving responsible AI at scale.
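One simple way to flag this kind of drift is to compare how much each feature contributes to decisions in a reference window against a recent window. The sketch below assumes that per-decision attributions (from any explainability method) are already available as dictionaries; the 0.15 tolerance is an arbitrary illustrative value, not a recommended threshold.

```python
from typing import Dict, List, Sequence


def attribution_shares(attributions: Sequence[Dict[str, float]]) -> Dict[str, float]:
    """Each feature's share of total absolute attribution across decisions."""
    totals: Dict[str, float] = {}
    for row in attributions:
        for name, value in row.items():
            totals[name] = totals.get(name, 0.0) + abs(value)
    grand_total = sum(totals.values())
    return {
        name: (total / grand_total if grand_total else 0.0)
        for name, total in totals.items()
    }


def drifted_features(
    reference: Sequence[Dict[str, float]],
    current: Sequence[Dict[str, float]],
    tolerance: float = 0.15,
) -> List[str]:
    """Features whose attribution share moved by more than `tolerance`."""
    ref = attribution_shares(reference)
    cur = attribution_shares(current)
    names = sorted(set(ref) | set(cur))
    return [n for n in names if abs(cur.get(n, 0.0) - ref.get(n, 0.0)) > tolerance]
```

A non-empty result does not by itself mean the model is wrong, but it is a concrete trigger for the governance process to review why the model's reasoning has shifted.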

By integrating explainability as a structural component rather than an afterthought, organizations create AI that is not only intelligent but intelligible.

These four mechanisms (model cards, data transparency, capability disclosures, and explainability frameworks) together form the practical foundation of AI transparency and AI explainability. They make the abstract concept of accountability measurable, repeatable, and scalable.

Without them, even the most advanced AI remains a mystery. With them, AI becomes a system that humans can understand, audit, and, most importantly, trust.

6. Real-World Implementation & Governance Alignment

Theory is important, but practice builds trust. Many top global firms offer examples of structured AI guardrails and governance guardrails that demonstrate how to apply real AI transparency, measure AI explainability, and enable responsible AI within production environments.

These examples show that while transparency is ethically good, it is also competitively advantageous.

6.1 Industry Examples: When Transparency Meets Innovation

Over the last several years, several major AI firms have made transparency central to their credibility.

DeepMind released extensive transparency reports regarding its large language and reinforcement learning models. The reports detail training data sources, model limitations, and human evaluation processes, and provide an overall view of AI transparency. Additionally, DeepMind created internal review procedures that serve as "AI guardrails," ensuring that each deployment of an AI system meets standards for explainability and fairness prior to launch.

OpenAI has furthered AI explainability by releasing System Cards for major model releases, which outline the intended use cases, limitations, and potential risks of each release. For example, OpenAI's System Card for GPT-4 includes benchmarking and ethical safeguard information for potential users and partners. In addition to supporting regulatory compliance, this enables deeper levels of trust in AI systems among users and partners.

Anthropic, the firm developing the Claude models, has incorporated transparency into its models using Constitutional AI. This approach incorporates a defined set of written principles ("the constitution") into the model's training process, making it one of the clearest examples of how AI guardrails can be integrated into model design rather than merely added after the fact.

Microsoft established operational transparency at scale with its Responsible AI Standard, v2, which requires documentation for every AI system developed, including reporting on explainability, assessing risk, and testing for bias. This is an excellent example of how governance guardrails can turn ethics into a normal part of day-to-day development.

While there are differences between these examples, there is one principle that unites them: AI transparency and AI explainability are no longer "soft values" -- they are strategic advantages. The companies that can clearly explain their AI systems create greater user trust, secure regulatory support, and protect their brands.

6.2 Global Governance Guardrails and the Policy Revolution

Transparency and explainability are no longer optional; global regulators are making them mandatory standards.

The European Union AI Act is one of the first and possibly the most comprehensive frameworks to establish rules for AI development and deployment. Specifically, it requires that high-risk AI systems document their models and the data used for training, and that they provide enough transparency for users to understand the outputs of those systems. In short, the EU AI Act requires businesses to develop AI guardrails and to achieve AI transparency in order to meet regulatory requirements.

Similarly, the National Institute of Standards and Technology (NIST) AI Risk Management Framework (AI RMF) treats trustworthiness as a core goal and counts AI transparency and AI explainability among its characteristics. The framework also ties AI explainability to organizational accountability: the organization must be able to say not only what decision an AI system made, but why.

In addition, Singapore’s Model AI Governance Framework and Canada's Directive on Automated Decision-Making support the growing trend of promoting transparency and explainability through open disclosures, algorithmic auditing, and human-in-the-loop systems. Collectively, these represent an emerging global standard: a viable and responsible AI ecosystem requires AI transparency.

Increasingly, business leaders are asking how global regulations can support transparency and explainability. The answer lies in the enforcement of governance guardrails, which translate abstract principles into measurable, reportable, and actionable items.

Regulations and frameworks are creating a new culture of governance around transparency and accountability. However, forward-thinking organizations are already establishing AI guardrails before governments make them mandatory. Accountability through guardrails builds trust in AI systems faster than marketing ever will.

6.3 The Business Case for Transparency

Beyond compliance, transparency has tangible business benefits. Teams that invest in AI transparency and AI explainability spend less time debugging, see improved customer satisfaction, and face less reputational risk. Transparent organizations also attract more reliable partners, because clarity is the currency in a world where most algorithms are opaque.

Inside the organization, employees no longer fear how the AI tools they use work; outside it, customers know what to expect from the company, and regulators can be confident that oversight mechanisms are in place. The principle behind responsible AI is simple: it does not slow innovation down but rather ensures that it continues.

In light of the accelerating global move toward regulated AI, companies that prioritize governance guardrails today will be the leaders of the trusted AI economy of tomorrow.

7. The Challenges and Path to Responsible AI

Even as organizations recognize the importance of AI transparency and AI explainability, they face a difficult question: how transparent is too transparent? It’s a challenge of balance: how do we make AI understandable without exposing sensitive data, intellectual property, or vulnerabilities that could be exploited?

7.1 Balancing Openness and Protection

Most organizations are in the same boat. On one side are those who require openness, such as customers and regulatory agencies; on the other are those who want to protect the organization's competitive advantage from exposure or misuse, such as security teams.

For example, disclosing all of an organization’s training data would improve AI transparency, but that same data could be used to breach an individual's privacy or could violate a data licensing agreement. Likewise, publishing every detail of the model logic may expose a company to adversarial attacks or cost it its proprietary advantage.

That is where governance guardrails come into play. Governance guardrails do not just promote transparency; they regulate it. By creating internal policies around what to disclose, when to disclose it, and to whom, organizations can ensure AI explainability while protecting privacy, security, and intellectual property.

Leading enterprises are now adopting a tiered transparency model: internal teams have complete access to the data and model, regulators receive partial disclosure, and public reports provide a high-level summary of the most important aspects of the model and data. These layers satisfy the principles of responsible AI while protecting the organization’s interests.
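The tiered model can be sketched as a filter over a single documentation record, where each field is tagged with the lowest tier allowed to see it. The tier names mirror the three audiences described above; the field names and their assignments are hypothetical, and fields without an explicit assignment default to internal-only so that nothing is disclosed by accident.

```python
from typing import Any, Dict

# Higher rank means broader access.
TIER_RANK = {"public": 0, "regulator": 1, "internal": 2}

# Lowest tier allowed to see each field (hypothetical field names).
FIELD_TIER = {
    "summary": "public",
    "known_limitations": "public",
    "evaluation_metrics": "regulator",
    "training_data_sources": "regulator",
    "model_weights_uri": "internal",
}


def view_for(audience: str, record: Dict[str, Any]) -> Dict[str, Any]:
    """Return only the fields the given audience is permitted to see."""
    rank = TIER_RANK[audience]
    return {
        key: value
        for key, value in record.items()
        if rank >= TIER_RANK[FIELD_TIER.get(key, "internal")]
    }
```

Generating every audience's view from one record, rather than maintaining separate documents, keeps the public summary consistent with what regulators and internal teams see.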

In short, we’re not talking about having no secrets, but about having intentional secrets.

7.2 Building Responsible AI Through Explainability

Most developers today ask — how can we actually build responsible AI through explainability frameworks? The answer lies in combining technology, governance, and culture.

Transparency will become integral to our AI technology systems. To achieve this, teams need to embed explainability into their systems from the beginning (at the development stage), and implement interpretability tools (such as SHAP, LIME or Integrated Gradients) into the development pipeline. Explainability will then no longer be an afterthought when the model is developed. Once explainability has been integrated into the entire system, AI systems will transition from being functionally capable to being accountable.

In terms of governance, AI guardrail systems are being introduced to set documentation standards, evaluation procedures, and human oversight points for AI systems. Under such a system, each deployed model has traceability records, limitation statements, and explainability reports.
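One way to picture these requirements is as a record that must be complete before a model ships. The following is a minimal Python sketch under assumed names: the class, its fields, and the deployment check are hypothetical and not drawn from any specific standard or product.

```python
from dataclasses import dataclass, field


@dataclass
class ModelGovernanceRecord:
    """Hypothetical per-model documentation bundle."""
    model_name: str
    version: str
    training_data_sources: list[str] = field(default_factory=list)  # traceability
    limitation_statements: list[str] = field(default_factory=list)
    explainability_report: str = ""
    human_reviewer: str = ""  # the person acting as the oversight point

    def missing_items(self) -> list[str]:
        """List the governance artifacts that are still absent."""
        checks = [
            ("traceability record", bool(self.training_data_sources)),
            ("limitation statement", bool(self.limitation_statements)),
            ("explainability report", bool(self.explainability_report.strip())),
            ("human oversight point", bool(self.human_reviewer.strip())),
        ]
        return [name for name, present in checks if not present]


def ready_to_deploy(record: ModelGovernanceRecord) -> bool:
    """A model passes the gate only when nothing is missing."""
    return not record.missing_items()


# Illustrative usage with made-up values.
draft = ModelGovernanceRecord(model_name="loan-triage", version="0.3")
complete = ModelGovernanceRecord(
    model_name="loan-triage",
    version="1.0",
    training_data_sources=["placeholder: internal applications dataset"],
    limitation_statements=["Not validated for applicants under 21."],
    explainability_report="placeholder: per-feature attribution summary",
    human_reviewer="placeholder: risk committee",
)
```

Running the check inside the release pipeline turns documentation from a request into a precondition: a model whose record has gaps does not deploy.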

When users, auditors and decision makers can clearly understand how an AI reached an outcome, trust in AI systems is fostered.

These are just a few examples of how governance guardrails can support all three areas above. Governance guardrails also institutionalize AI explainability and AI transparency as defaults instead of reactive measures.

Finally, to make this happen, we must get everyone on board, including the engineers who develop these models, the ethicists who evaluate their ethical implications, and the policymakers who create regulations surrounding AI.

7.3 The Path Forward: Guardrails as the New Standard

The next frontier in AI development isn’t just more intelligence — it’s more intelligible intelligence.

As AI grows in complexity, scalable AI guardrails and governance guardrails will define the new normal. Systems that can explain themselves in real time, adjust their disclosures dynamically, and self-audit for bias will form the backbone of trust in AI systems globally.

In the near future, transparency reports and explainability dashboards will be as standard as model accuracy metrics. Organizations that embrace these frameworks early will not only comply with evolving regulations but also lead the narrative of ethical AI innovation.

The road to responsible AI isn’t about perfection — it’s about progress. Every small step toward openness, documentation, and explainability contributes to a future where AI can be both powerful and principled.

8. Conclusion: From Opacity to Openness — The Future of AI Trust

In the past, artificial intelligence was viewed as a "black box". It was powerful, mysterious, and to a great extent misunderstood. As the influence of AI in shaping the course of human life has increased, society has begun to ask the appropriate question: Can we trust what we do not know?

This inquiry led to the concepts of AI transparency and AI explainability, which are now integral to defining "ethics" in the context of technology. We have shown the negative consequences of a lack of AI transparency (bias, misuse, and distrust) and, conversely, the positive outcomes of transparency, documentation, and explainability, which transform AI from something to be feared into something to be trusted.

The key to achieving this transition is the development of AI guardrails. Guardrails are the frameworks that make each decision made by an AI system accountable and capable of justification and improvement. In other words, guardrails are not barriers to innovation, but rather the architectural support for sustainable innovation. When governance guardrails are used in conjunction with AI guardrails, these systems provide a living, breathing structure for accountability, while balancing the need for advancement with the need for protection.

Organizations are using model cards, training data disclosures, capability statements, and explainability frameworks to begin treating transparency as a design principle and not merely as an afterthought. The cultural shift described above represents the foundation of responsible AI -- technology that is designed with integrity, fairness, and foresight.

The future will not be defined by the organization that develops the best algorithm, but by the organization that develops the most understandable AI. The next generation of AI leaders will be judged on their ability to earn trust in AI systems, meaning confidence in the decisions made by the systems they develop.

Transparency and explainability are not simply boxes to be checked to satisfy regulatory requirements. Rather, they are an ethical commitment: a promise that each output, decision, and model produced by an AI system can be explained. And given the increasing influence of AI in virtually all aspects of our lives (e.g., credit scoring, health care), that promise is what will differentiate the trustworthy from the unaccountable.

WizSumo believes that AI transparency, AI explainability, and strong AI guardrails are not merely tools, but rather the guardians of the future. They will ensure that AI systems remain human centered, fair, and open. Ultimately, AI cannot be held accountable unless it is explainable -- and AI cannot be explainable until it is transparent.

AI will only be trusted when it can be understood: transparency is the bridge between intelligence and integrity.
