AI Red Teaming in Automotive & Autonomous Systems : Testing Safety-Critical AI

.png)

Key Takeaways

AI red teaming exposes how autonomous vehicle AI fails in real-world conditions.

Self-driving car security risks stem from confident AI decisions, not crashes.

AI security testing in automotive must test model behavior, not just software.

AI hallucinations in autonomous systems are silent risks without adversarial testing.

Continuous AI red teaming is key to safe and trusted autonomous mobility.

1. Introduction

As long as modern vehicles are being controlled by AI that takes data from sensors, makes real-time decisions, and is able to operate autonomously in unpredictable environments, there will be a growing risk profile. If one decision made by an AI system is wrong, it could lead to physical damage to the vehicle, create potential safety concerns, create regulatory issues, and cause loss of brand identity.

The increasing use of AI within the automotive industry makes AI red teaming a required testing process for automotive manufacturers and mobility providers. Automotive testing has historically focused on ensuring that systems operate as intended in expected conditions. AI red teaming, however, assumes that systems will be tested against abnormal inputs, adverse environments, and edge cases that were never anticipated by designers. Autonomous vehicles are a great example of this type of environment, as autonomous vehicles operate in uncontrolled, dynamic environments.

Red Teaming AI security testing in automotive is becoming a requirement for manufacturers and mobility providers. Self-driving car security risks such as perception errors, planning errors, and misinterpreting actual real-world signals can occur when using models that have been proven to work well in simulated tests. These failures often go undetected until they become widespread problems.

Also, AI security testing in automotive needs to transition away from traditional software vulnerability based testing and toward testing model behavior, data assumptions, and decision logic. The most damaging failure mode is AI hallucinations in autonomous systems. An autonomous vehicle that believes it knows what's around it (but doesn't) is not a visible failure. It is hard to identify, easy to overlook, and expensive to deploy in the real world.

This blog outlines why thinking like a red team is important for secure automotive AI and explains how structured red teaming can help identify and mitigate those risks before they become a real-world incident.

2. What is AI red teaming in autonomous systems?

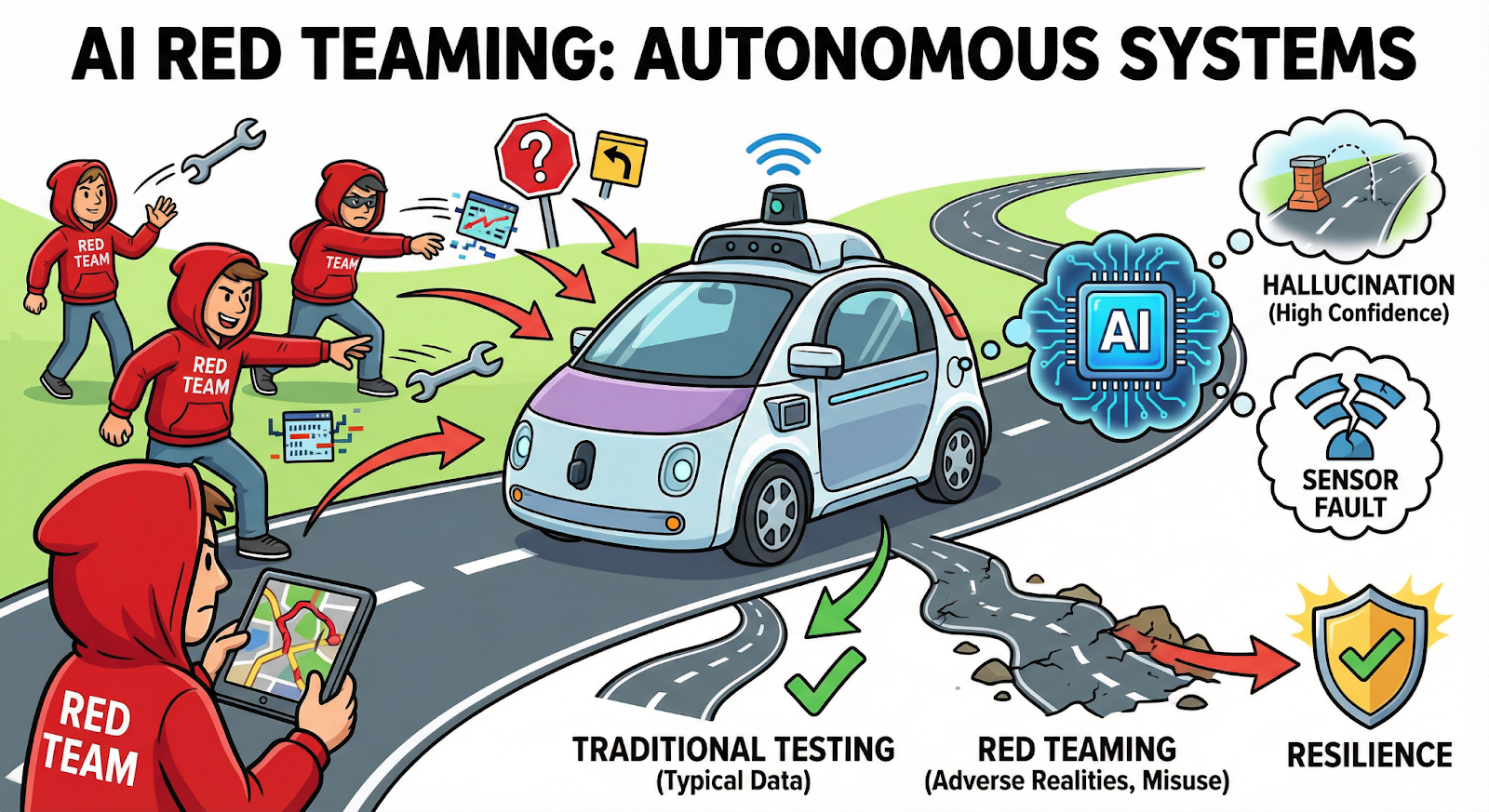

The term AI red teaming in an automotive setting refers to the intentional "stress-testing" of an automotive's AI-driven vehicle systems with the assumption that these systems will become confused, misused, manipulated, or exposed to unanticipated, abnormal real-world conditions. In contrast to traditional testing methods, which may examine if an AI model performs well on typical data sets, AI red teaming seeks to understand how and why an AI model fails when all its underlying assumptions fail.

In the automotive context of AI red teaming in autonomous systems, AI models are treated as decision-making entities operating within adversarial environments (e.g., roads are noisy, sensors are faulty, humans act erratically, and there are potential active manipulations of input). As such, AI red teaming in autonomous systems primarily concerns itself with perception errors, unsafe reasoning, cascading failures, and silent decision errors associated with AI models; those issues typically remain undetected during traditional validation processes.

A significant distinction exists between AI red teaming in autonomous systems and AI security testing in automotive. Traditional AI security testing in automotive stops at evaluating an individual component's code or infrastructure. AI red teaming in autonomous systems, however, evaluates the behavior of an AI model, the sensitivity of an AI model's training data, the logic governing sensor fusion, the constraints placed upon the deployment of AI models at the edge, and the interactions between AI models deployed across various systems. This evaluation methodology is necessary because many Self-driving car security risks arise from the confidence levels of AI models, systems that make decisions with absolute confidence while actually producing incorrect results.

One of the most significant areas for vulnerability identified through AI red teaming is AI hallucinations in autonomous systems. An AI hallucination occurs when a perception or reasoning model generates certainty regarding non-existent objects (obstacles), absent objects (hazards), or misinterpretations of traffic signal information. AI hallucinations in autonomous systems pose unique risks due to their ability to pass internal confidence checks and then propagate downstream into the planning and control functions of an autonomous system.

From a practical perspective, AI red teaming in autonomous systems shifts the focus of safety from simply ensuring that there are no bugs present to building resilience to misuse, uncertainty, and adverse realities.

3. Why Automotive AI Needs Red Teaming

The context of Automotive AI is an environment where failure is physical, immediate and typically irreversible. Therefore, AI red teaming is vital to both present day automobiles and future Mobility Platforms. While traditional testing validates that systems operate as designed, it cannot provide insight into how they will operate if/when actual operating conditions differ from those assumed in the design process.

As we move toward increasing levels of Autonomy, AI red teaming in autonomous systems will become a safety imperative versus a security improvement. Vehicles must identify and correctly classify increasingly complex traffic events, and recognize ambiguity and conflicting human behavior, all while continuing to function under degrading sensor conditions. In such environments, minor errors in Perception or Reasoning can produce extreme Self-driving car security risks, which may be undetectable with simulated testing methods.

Historically, AI security testing in automotive has primarily addressed software reliability and hardened infrastructure. Real-world events have shown however, that many of the root causes of such failures were in the AI Decision Logic itself, rather than in the code itself. It is here that AI red teaming identifies vulnerabilities by deliberately probing how models respond to various forms of Manipulation, Uncertainty, and Edge Cases.

One of the most concerning findings produced by AI red teaming in autonomous systems is the existence of AI hallucinations in autonomous systems. Hallucinations result in a vehicle incorrectly interpreting its surroundings, identifying shadows as obstacles, missing pedestrians, misinterpreting traffic lights, etc. As Hallucinations flow through Planning Systems, they can generate chain reaction errors that pose direct threats to Safety.

Unless an organization utilizes AI red teaming, these types of problems exist dormant until they are deployed at scale. Consequently, effective AI security testing in automotive necessitates an Adversarial mindset that accepts Models will be incorrect; Inputs will be hostile; Environments will be unpredictable. It is only through embracing this reality, will organizations be able to effectively mitigate Self-driving car security risks prior to deployment onto Public Roads.

4. Automotive & Autonomous AI Systems That Must Be Red Teamed First

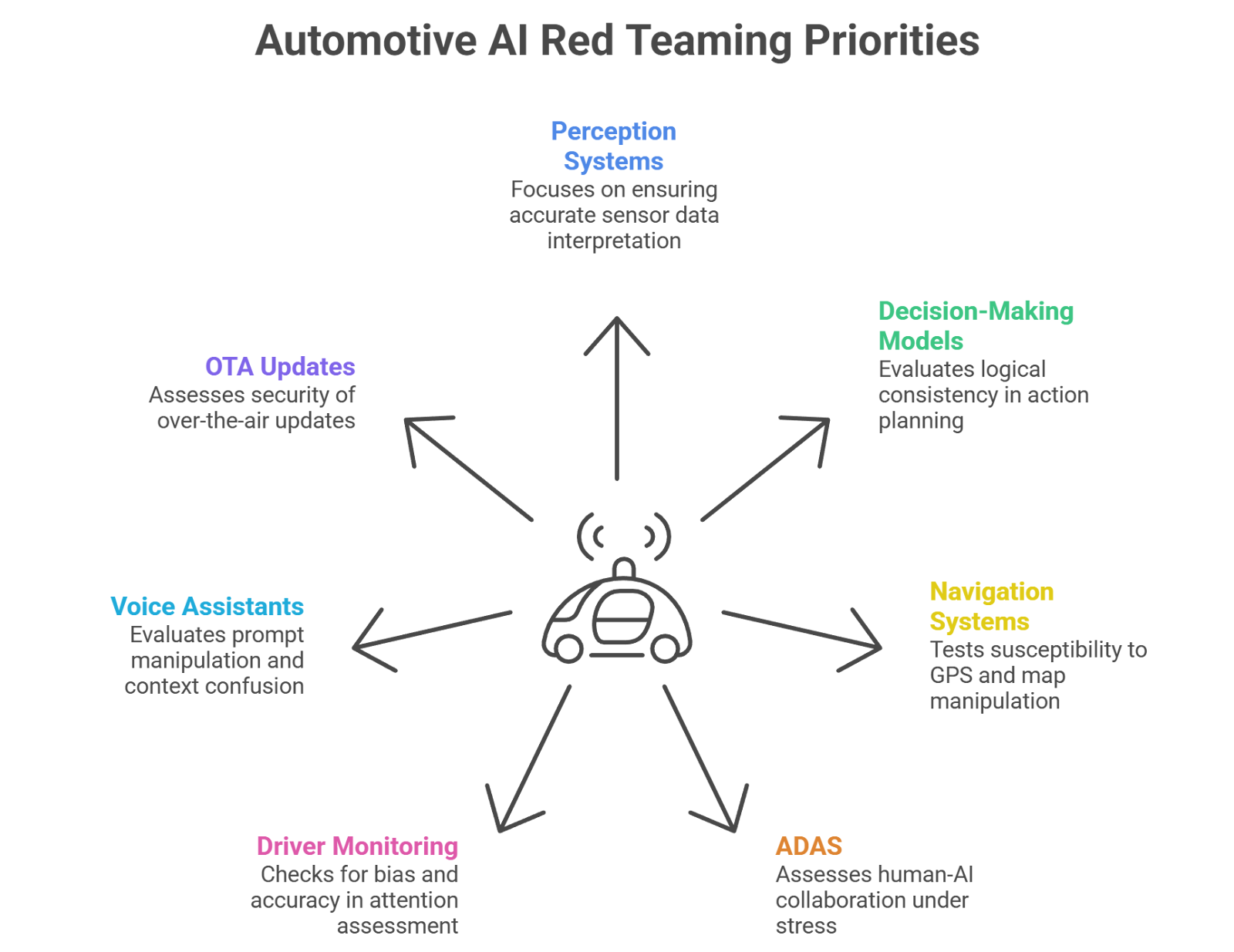

Not all automotive AI systems carry the same level of risk. AI red teaming must be applied first to systems where failure can scale rapidly, remain undetected, or directly impact physical safety. In AI red teaming in autonomous systems, prioritization is critical because resources must focus on AI components most likely to introduce Self-driving car security risks under real-world conditions.

This section mirrors how high-risk systems were identified in the banking example, by asking where AI failure hurts the most.

4.1 Perception Systems (Camera, LiDAR, Radar Fusion)

Perception systems form the foundation of autonomous decision-making. Any misinterpretation at this layer propagates downstream into planning and control.

Through AI security testing in automotive, red teams deliberately introduce adversarial environments such as unusual lighting, occlusions, reflective surfaces, and sensor spoofing. These tests frequently expose AI hallucinations in autonomous systems, where the vehicle detects phantom obstacles or fails to recognize real ones.

Failure impact: missed pedestrians, phantom braking, unsafe maneuvers, all critical Self-driving car security risks.

4.2 Decision-Making & Path Planning Models

Planning models convert perception into action. Even when perception is partially correct, flawed reasoning can result in unsafe outcomes.

AI red teaming challenges these systems with contradictory signals, ambiguous scenarios, and edge-case traffic behavior. In AI red teaming in autonomous systems, teams look for logical consistency that still leads to unsafe decisions, a subtle but dangerous failure mode.

Failure impact: incorrect lane changes, unsafe merges, collision scenarios.

4.3 Autonomous Navigation & Localization Systems

Navigation systems rely on maps, GPS, and environmental cues. These are highly susceptible to manipulation.

Using AI security testing in automotive, red teams simulate GPS spoofing, map drift, and outdated environmental data. These tests often trigger AI hallucinations in autonomous systems, where the vehicle confidently believes it is somewhere it is not.

Failure impact: route deviation, geofence violations, regulatory exposure.

4.4 Advanced Driver Assistance Systems (ADAS)

ADAS represents partial autonomy, where humans and AI share responsibility, making it one of the riskiest configurations.

AI red teaming evaluates over-reliance, delayed handover, and misinterpreted driver intent. In AI red teaming in autonomous systems, these systems are tested under distraction, fatigue, and unexpected road behavior.

Failure impact: delayed human response, increased Self-driving car security risks, liability disputes.

4.5 Driver Monitoring & Human-Machine Interaction AI

Driver monitoring systems assess attention, readiness, and compliance. Failures here are often silent.

Through AI security testing in automotive, red teams test demographic bias, false positives, and false negatives that can cause unsafe autonomy handoffs. These systems can also suffer from AI hallucinations in autonomous systems, misclassifying alert drivers as inattentive, or vice versa.

Failure impact: unsafe transitions, regulatory and legal exposure.

4.6 In-Vehicle AI Assistants & Voice Systems

Conversational AI expands the attack surface inside vehicles.

AI red teaming tests prompt manipulation, unsafe command execution, and context confusion. In AI red teaming in autonomous systems, assistants are evaluated for how hallucinated responses influence driver behavior.

Failure impact: distraction, unauthorized actions, erosion of user trust.

4.7 OTA Updates & Edge AI Deployment Pipelines

Over-the-air updates allow rapid improvement, but also rapid failure propagation.

Using AI security testing in automotive, red teams assess poisoned updates, model drift, and rollback failures. These scenarios often amplify AI hallucinations in autonomous systems across entire fleets.

Failure impact: fleet-wide vulnerabilities, large-scale recalls, severe Self-driving car security risks.

5. How AI red teaming Reduces Self-driving car security risks

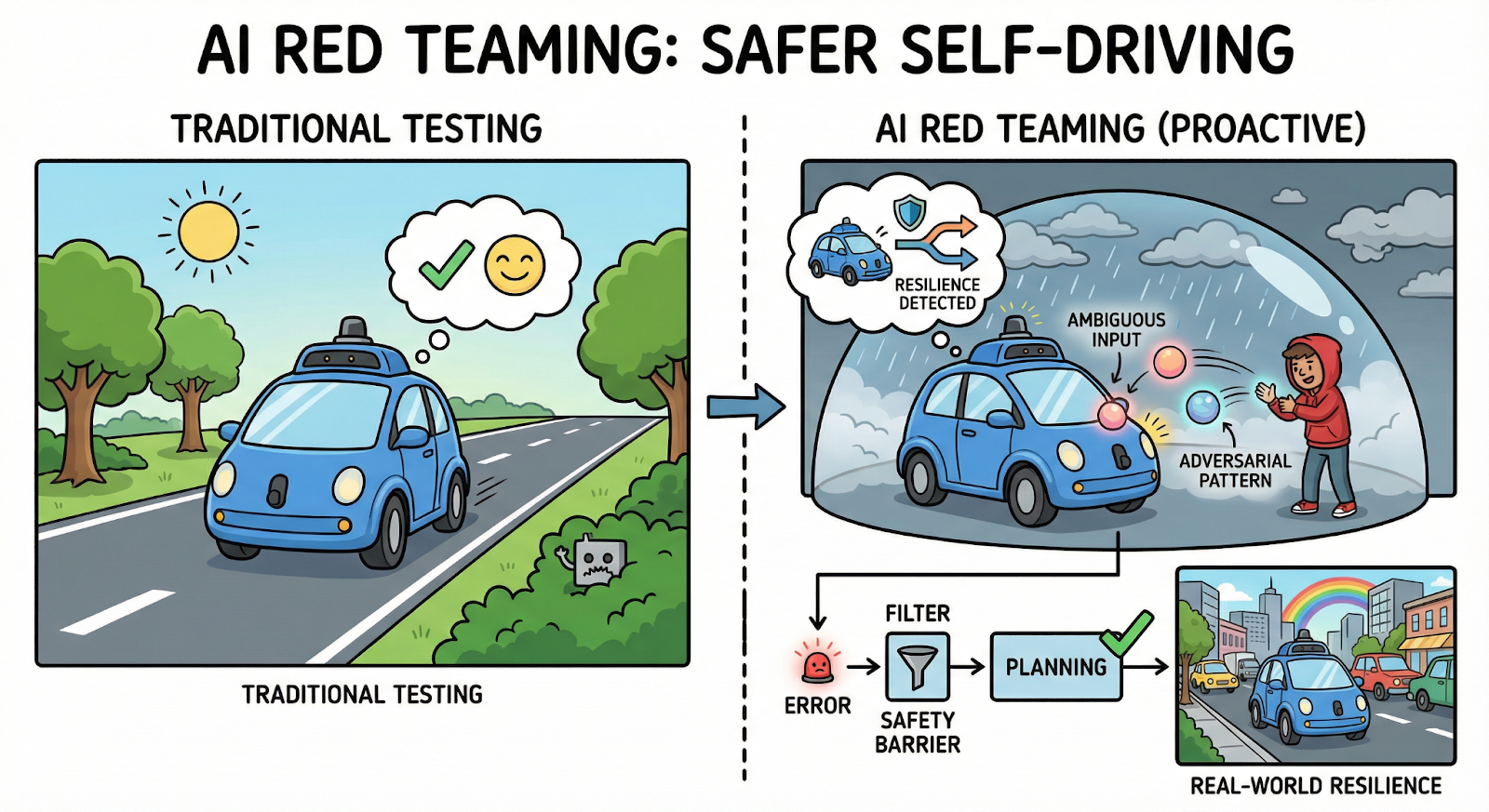

Red teaming, as a method for identifying potential failures in artificial intelligence (AI) autonomous systems, is much more than just a way to test the performance of a model; it's a systematic means of determining how an AI behaves in situations where its design assumptions are not valid. Therefore, it represents a key mechanism for reducing the risks associated with self-driving cars.

Practically speaking, AI red teaming in autonomous systems involves continuous simulation of adverse, anomalous, and stressful behaviors throughout the life cycle of AI. Unlike traditional validation methods, red teaming involves targeted testing of the types of failures that could result from self-confidence in a model, ambiguity in the environment, and interactions between components in the vehicle.

One of the primary contributions of AI security testing in automotive using red teaming is the identification of silent failures. Autonomous systems typically fail in ways that seem reasonable, but are actually incorrect. An example of this is AI hallucinations in autonomous systems, where perception and/or reasoning models create false confidence based upon incomplete or misleading input. Once created, these hallucinations will frequently pass threshold checks and be propagated down-stream into planning and control functions, making them extremely hazardous.

Using structured AI red teaming, organizations deliberately cause these conditions to occur. Red teams introduce conflicting sensor data, ambiguous behavior from other drivers, degraded environmental stimuli, and adversarial patterns intended to confound the model. The purpose of AI red teaming in autonomous systems is not to overwhelm the system, but to determine how it will reason under conditions where the truth of the world does not match what was learned during training.

Another significant advantage of AI security testing in automotive is to understand how errors compound. For example, a small error in perception can lead to a large error in planning, and then an unsafe action by the vehicle. Through AI red teaming, organizations map out these compounding chains of error and provide barriers to prevent localized errors from propagating into full-scale incidents.

It is also important to note that AI red teaming in autonomous systems is not a static process. As new models are developed, data changes over time, and the environment evolves, there needs to be continuous adversarial testing so that AI hallucinations in autonomous systems are identified prior to widespread deployment.

Over time, the use of AI red teaming provides evidence of an organization's ability to build measurable resilience into their automotive AI systems, and ultimately shifts the automotive AI industry away from a reactive response to failures occurring in the field and toward a proactive stance where those same failures are identified in controlled environments.

6. Conclusion

Autonomous and AI-driven vehicles represent one of the most safety-critical deployments of artificial intelligence today. As these systems move from controlled testing environments to public roads, the margin for error disappears. What makes this challenge especially difficult is that many failures do not look like failures at all. They appear as confident decisions made under uncertainty, decisions that introduce serious Self-driving car security risks only after they scale.

This is why AI red teaming is no longer optional for automotive organizations. Unlike traditional testing, AI red teaming accepts that models will be wrong, environments will be adversarial, and assumptions will break. Through AI red teaming in autonomous systems, manufacturers and mobility providers can systematically uncover how perception, reasoning, and decision layers fail when exposed to real-world complexity.

Effective AI security testing in automotive must also address one of the most dangerous failure modes in autonomy: AI hallucinations in autonomous systems. When vehicles hallucinate obstacles, signals, or intent, the result is not a system crash, it is unsafe behavior that appears internally valid. Without adversarial testing, these issues often remain invisible until they cause physical incidents, regulatory intervention, or costly recalls.

This is where WizSumo AI plays a critical role. WizSumo specializes in AI red teaming for high-risk, real-world deployments, including AI red teaming in autonomous systems. By simulating realistic adversarial conditions across perception models, planning logic, in-vehicle AI, and edge deployment pipelines, WizSumo helps organizations uncover vulnerabilities that traditional AI security testing in automotive approaches miss. The focus is not theoretical compliance, but practical risk reduction, before failures reach the road.

Ultimately, safe autonomy is not achieved by trusting AI performance metrics alone. It is earned through continuous adversarial evaluation, realistic misuse simulation, and disciplined AI red teaming. Organizations that adopt this mindset will be far better positioned to reduce Self-driving car security risks, control AI hallucinations in autonomous systems, and scale autonomous technology responsibly.

“Autonomous AI doesn’t fail loudly, it makes confident decisions that go wrong.”

.svg)

.png)

.svg)

.png)

.png)