AI red teaming in banking: Critical Use Cases to Prevent High-Impact AI Failures

.png)

Key Takeaways

⮳ AI red teaming in banking focuses on how AI systems fail, not just how they perform.

⮳ Traditional model validation alone cannot detect adversarial AI risks.

⮳ High-impact banking AI systems must be tested under real-world misuse scenarios.

⮳ Adversarial testing helps banks uncover silent failures before regulators do.

⮳ Secure banking AI is the result of continuous red teaming, not assumptions of safety.

1. Introduction

Banks are rapidly embedding AI into core decision-making systems, from credit approvals and fraud detection to compliance reporting and customer interactions. These systems operate at scale, make high-impact decisions, and increasingly influence regulatory outcomes. As a result, traditional security testing and model validation are no longer sufficient. What banks need today is AI red teaming , a disciplined, adversarial approach designed to uncover how AI systems actually fail in real-world banking conditions.

AI red teaming is a much broader concept than just ensuring that models are correct when validated using expected input. It involves simulating malicious user behavior, insider threats and external attackers to test vulnerabilities that exist within AI models, data pipelines, prompts and system integration. AI red teaming in banking will assist regulated financial institutions to identify latent failure modes that may never be identified until there is financial loss, a breach of compliance regulations or reputational damage.

The use of more sophisticated machine learning models and large language models by banks creates a larger attack surface in subtle and unusual ways. Examples include prompt injection, data poisoning, model manipulation and biased decision making, none of which will typically raise alarms with traditional security tools. Therefore, it is essential to have adversarial testing AI banking environments to understand how AI behaves when misused, stressed and abused intentionally.

This blog focuses on where AI red teaming in banking delivers the most value. It explains what AI red teaming means in practice, why banks need it, which AI systems should be prioritized, how to test AI models in banks effectively, and how AI red teaming complements existing model validation efforts. The objective is to provide a clear, practical foundation for securing high-risk banking AI systems before they fail.

2. What is AI red teaming in banking?

AI red teaming in banking, is the act of intentionally testing (or stressing) AI Systems through Adversarial Thinking with the intention of identifying how those systems may be used for nefarious purposes, or how those systems could be used to manipulate them or their outputs.

As opposed to other forms of testing which typically assume that the inputs are going to be benign, and that the behavior of the system will follow the expected path, AI red teaming testing makes the assumption that the inputs are going to be from malicious users, that there will be edge case inputs, and that there will be unintended usage patterns.

In terms of application within banking, AI red teaming in banking focuses on higher-risk deployments of AI technologies that result in both a significant impact to the financial bottom line, and/or compliance issues, as well as having an impact upon customers. This is not limited to Machine Learning Models, however. It also includes large Language Models, Decision Engines, Data Pipelines, Prompts, APIs and downstream integration(s). The objective of AI red teaming testing is not to demonstrate that the AI System Works, but to demonstrate how it fails.

An integral component of this methodology is creating an environment for adversarial testing AI banking. This would include the simulation of attacks such as prompt injection, data poisoning, evasion techniques, biased input manipulation, and logic abuse, etc. Through these types of tests, Banks can determine if their AI Systems can be tricked into producing unsafe outputs, leaking sensitive information, or make non-compliance decisions, all without tripping any conventional security controls.

It's worth noting that AI red team testing is distinct from Quality Assurance (QA), Penetration Testing, and Model Performance Evaluation. It views AI Systems as decision-making Assets that can be influenced, Coerced, or Exploited. As such, this type of thinking is critical for Banks, since AI failures almost never present themselves as System Outages. Instead, they present themselves as incorrect Approvals, missed Fraudulent Transactions, Unfair Treatment of Customers, or Accurate Regulatory Outputs.

The ability to Test how to test AI models in banks through the use of AI red teaming testing allows Institutions to transition from a Reactive Incident Response paradigm to a Proactive Risk Discovery paradigm - before any AI-Driven Decisions can do Irreversible Damage.

3. Why Banks Need AI red teaming

Banks work in a very high-risk, highly regulated environment. AI can be introduced in the decision-making process for lending; fraud detection; and, compliance workflows; and, customer interaction. Therefore, if there are problems with the AI system (even small), it could result in significant financial loss; or, regulatory action.

Therefore, AI red teaming, for banks who are using AI extensively, is no longer discretionary.

Traditional approaches to controls are focused on whether a model performs according to expectations. In contrast, the environment in which banks operate is inherently adversarial. There are many types of people who will try to exploit weaknesses in AI systems including: those who commit fraud and/or money laundering; malicious insiders; and, even users who have good intentions but inadvertently find a weakness in the AI system. AI red teaming in banking, is a means of addressing this problem by intentionally trying to simulate what happens to AI behavior when assumptions about the way data is generated and inputted into the system are broken; or, when the inputs into the system are intentionally changed from their expected values.

Another important reason that banks should use AI red teaming in banking, is because of the expectation of supervisors to ensure that banks are demonstrating that models are accurate; that models are fair; that models provide transparency; that models are controlled; and that models do not fail due to unforeseen factors. If a bank is unable to describe why an AI system failed; or, if the bank has not demonstrated prior practices related to the proactive testing of AI systems (adversarial testing AI banking), then the burden of proof is shifted heavily in favor of the regulators and against the bank. AI red teaming in banking, provides demonstrable evidence that the risks associated with the implementation of AI in a banking setting have been identified and addressed.

Banks also face unique reputation-based risks. Errors resulting from AI-driven systems used for loan decisions; AML screening; and, customer communication may create rapid erosion of trust. Although failures of traditional systems typically are evident as soon as the error occurs, AI-driven failures are often undetectable until a pattern emerges after the fact at a large scale. By identifying these "silent failure modes" early, AI red teaming in banking, allows banks to mitigate large-scale issues of customer trust; or, prevent potential regulatory actions.

The final reason that banks should engage in AI red teaming is because as AI becomes increasingly connected to other systems, AI system failures are transmitted throughout the workflow in a short amount of time. For example, a failure of an upstream model can affect the downstream models without producing obvious signs of the failure. By continuously engaging in AI red teaming in banking, banks are able to identify the likelihood of failures being propagated across multiple workflows; and, develop stronger boundaries to protect critical processes that rely on AI.

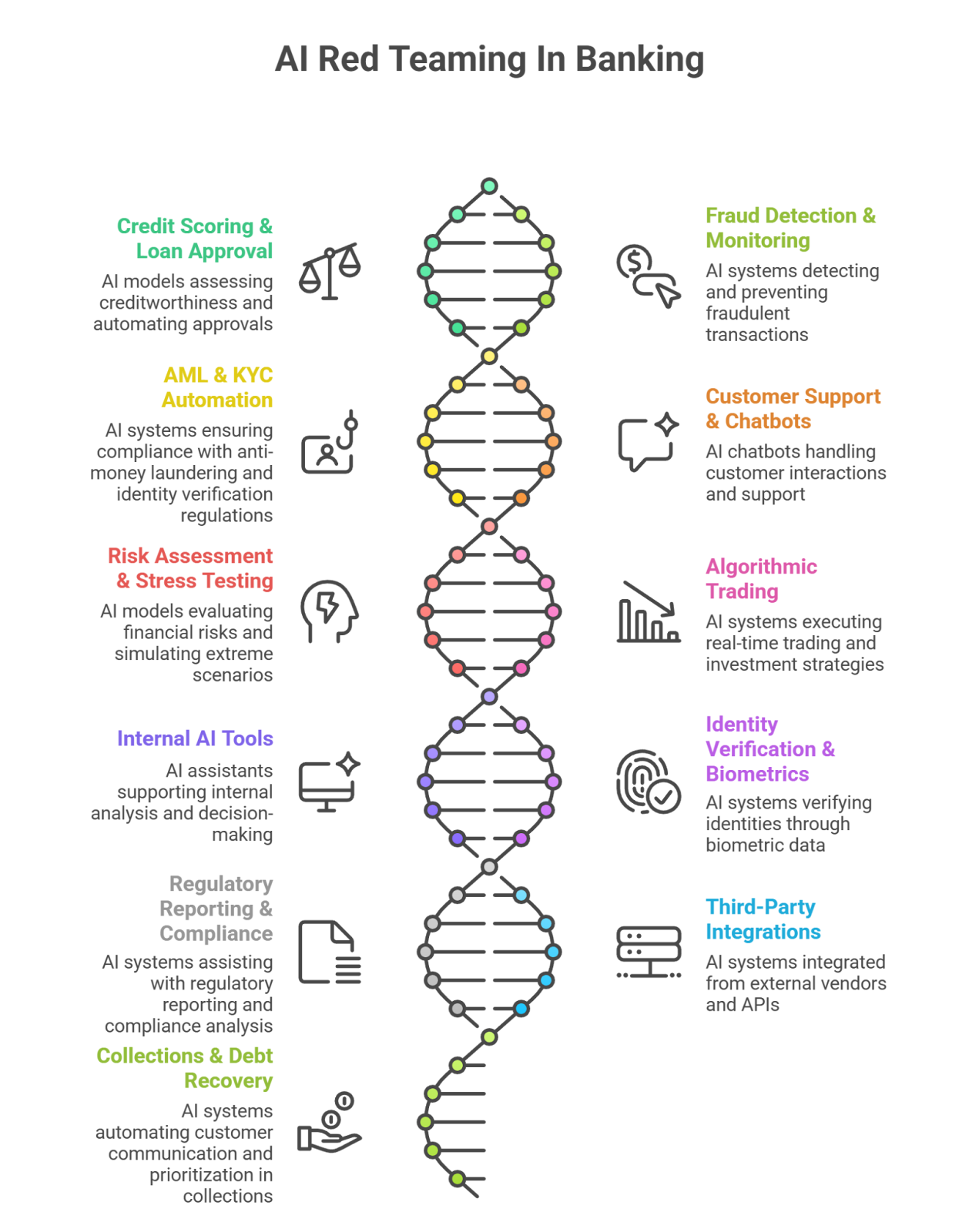

4. Banking AI Systems That Must Be Red Teamed First

Not all AI systems in a bank carry the same level of risk. AI red teaming should be prioritized for systems that directly influence financial decisions, regulatory outcomes, or customer trust. In AI red teaming in banking, the focus is on AI systems where silent failure can scale quickly and where adversarial misuse is realistic.

Below are the most critical banking AI systems that must be subjected to continuous adversarial testing AI banking practices. For each use case, the emphasis is on how the system can fail and what that failure means for the bank.

4.1 AI-Driven Credit Scoring & Loan Approval

AI models are widely used to assess creditworthiness, automate approvals, and price risk. These systems are prime candidates for AI red teaming because they directly affect fairness, compliance, and balance-sheet risk.

Red teaming focuses on biased feature exploitation, proxy discrimination, and adversarial input manipulation that artificially inflates or suppresses credit scores.

Failure Impact:

⟶ Fair lending violations

⟶ Regulatory penalties

⟶ Increased default risk and reputational damage

4.2 Fraud Detection & Transaction Monitoring

Fraud detection models are inherently adversarial. Attackers continuously adapt their behavior to evade detection, making this a core target for AI red teaming in banking.

Through adversarial testing AI banking, teams simulate transaction patterns designed to bypass thresholds, exploit timing windows, or overwhelm models with false positives.

Failure Impact:

⟶ Undetected fraudulent transactions

⟶ Customer friction due to false alerts

⟶ Direct financial losses

4.3 AML & KYC Automation Systems

AI-driven AML and KYC systems assess identities, monitor behavior, and flag suspicious activity. These systems are high-risk because failures often remain invisible until regulators intervene.

AI red teaming evaluates synthetic identity attacks, adversarial behavior that mimics legitimate activity, and evasion strategies designed to stay below reporting thresholds.

Failure Impact:

⟶ Regulatory non-compliance

⟶ Criminal misuse of banking services

⟶ Significant fines and enforcement actions

4.4 AI-Powered Customer Support & Chatbots

Banks increasingly use AI chatbots to handle sensitive customer interactions. These systems expand the attack surface dramatically and must be included in AI red teaming in banking programs.

Red teaming tests prompt injection, jailbreaks, and conversational manipulation that lead to unauthorized disclosures or unsafe actions.

Failure Impact:

⟶ Data leakage

⟶ Account compromise

⟶ Loss of customer trust

4.5 Risk Assessment & Stress Testing Models

Risk and stress testing models influence capital planning and regulatory reporting. Unlike transactional systems, their failures are strategic and systemic.

AI red teaming challenges assumptions, simulates extreme scenarios, and tests model behavior under conditions not represented in historical data.

Failure Impact:

⟶ Incorrect capital allocation

⟶ Misjudged risk exposure

⟶ Regulatory audit failures

4.6 Algorithmic Trading & Investment Systems

Trading and investment AI systems operate in real time and at scale. Small model errors can rapidly compound, making them critical for adversarial testing AI banking.

Red teaming evaluates susceptibility to adversarial market signals, feedback loops, and poisoned data feeds.

Failure Impact:

⟶ Immediate financial loss

⟶ Market instability

⟶ Legal and compliance exposure

4.7 Internal AI Tools for Employees

Banks now deploy internal AI assistants for analysis, reporting, and decision support. These tools often have access to sensitive data and are frequently under-tested.

AI red teaming in banking examines data leakage via prompts, access control bypass, and hallucinated internal guidance.

Failure Impact:

⟶ Confidential data exposure

⟶ Poor internal decisions

⟶ Governance and audit failures

4.8 Identity Verification & Biometric Systems

Biometric AI systems are used for onboarding and authentication. They are increasingly targeted by spoofing and deepfake attacks.

AI red teaming tests replay attacks, synthetic media, and demographic bias in biometric accuracy.

Failure Impact:

⟶ Account takeovers

⟶ Legitimate user lockouts

⟶ Regulatory concerns

4.9 Regulatory Reporting & Compliance Automation

Some banks rely on AI to assist with regulatory reporting and compliance analysis. These systems carry extreme regulatory risk.

Red teaming evaluates hallucinated interpretations, suppressed risk signals, and incomplete disclosures.

Failure Impact:

⟶ Regulatory penalties

⟶ Legal action

⟶ Loss of institutional credibility

4.10 Third-Party & Vendor AI Integrations

Banks increasingly consume AI through vendors and APIs. These integrations extend risk beyond the bank’s direct control.

AI red teaming assesses supply-chain risks, hidden data usage, and cross-system prompt injection.

Failure Impact:

⟶ Indirect data breaches

⟶ Compliance violations

⟶ Vendor-driven incidents

4.11 AI Systems for Collections & Debt Recovery

AI is used to automate customer communication and prioritization in collections. Failures here often trigger legal and ethical issues.

adversarial testing AI banking explores aggressive output generation, biased treatment, and non-compliant messaging.

Failure Impact:

⟶ Legal exposure

⟶ Brand damage

⟶ Increased regulatory scrutiny

5. how to test AI models in banks Effectively

Testing AI systems in banking requires a fundamentally different approach than testing traditional software or validating statistical models. Banks are not just asking whether an AI model works, but whether it can be misused, manipulated, or silently fail under adversarial conditions. This is where AI red teaming becomes central to understanding real-world risk.

how to test AI models in banks effectively starts with adopting an attacker’s mindset. Instead of validating expected behavior, banks must intentionally challenge AI systems with abnormal, malicious, and edge-case inputs. This includes testing how models respond to biased data, adversarial patterns, misleading prompts, and unexpected user behavior. AI red teaming in banking focuses on discovering failure paths that normal testing never exercises.

A practical AI testing program in banks typically includes continuous adversarial testing AI banking environments across three dimensions. First, models must be tested for robustness, ensuring they behave safely when inputs are manipulated or incomplete. Second, systems must be tested for fairness and bias, identifying whether protected attributes or proxies can influence outcomes. Third, banks must test failure behavior, understanding what happens when AI systems are wrong, uncertain, or exposed to novel scenarios.

This is where specialized AI red teaming capabilities become critical. WizSumo, for example, provides dedicated AI red teaming services designed specifically for regulated industries like banking. WizSumo helps banks simulate realistic adversarial scenarios across credit models, fraud systems, AML workflows, LLM-powered chatbots, and internal AI tools. By combining domain knowledge with hands-on adversarial testing AI banking techniques, WizSumo enables banks to uncover vulnerabilities that are often missed by internal testing teams.

Another key aspect of how to test AI models in banks is continuity. AI red teaming should not be a one-time exercise conducted before deployment. Models evolve, data drifts, prompts change, and attackers adapt. Continuous AI red teaming in banking allows institutions to reassess risk as systems change and to demonstrate ongoing control effectiveness to regulators.

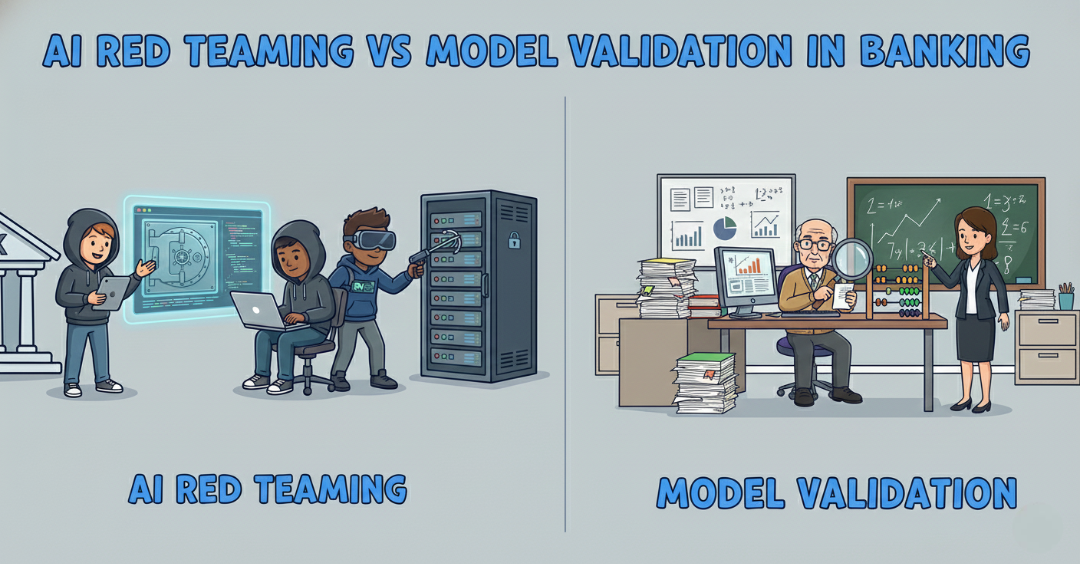

6. AI red teaming vs Model Validation in Banking

Validation of models has been an essential component of model risk management (MRM) for many years at banks; however, it now represents a different approach than what we need today. Validation assesses if a model's conceptual framework is sound, if it is statistically sound, and if it complies with bank policy and regulatory expectations. However, in itself it is not enough.

AI red teaming addresses a new type of risk which is not addressed by validation.

Traditional model validation usually assumes that input to a model is correct, data used to train the model is representative of the population, and that end-users will use the model as intended. Traditional validation also looks at the performance metrics of a model, how stable the model is over time, how well documented the model is, and how well the model can be explained in a way that makes sense to the user of the model, all in a controlled environment. On the other hand, AI red teaming in banking assumes that AI systems will be attacked and misused by individuals and groups, that they will be subjected to edge case attacks, and that there will be ongoing attempts to attack them in innovative ways. Therefore, the primary goal of AI red teaming in banking is to intentionally break AI systems, not to ensure that they meet some basic requirement.

The two approaches represent fundamentally different views of validating an AI system. Many of the most damaging AI system failures in banking occur when models are technically "validated". Examples of damage that occur include bias exploitation, prompt injection, data poisoning, and logic abuse, all of which lie outside the scope of traditional validation reviews. Banking environments where AI red teaming is performed are specifically designed to allow for the identification of these types of failure modes through the simulation of realistic scenarios that reflect potential attackers and misuse scenarios.

The second major difference between the two approaches is related to the frequency and timing of when validations are done. Traditional validation is typically done periodically and based upon documents generated during those validations, while AI red teaming is typically continuous and behavior-based. For example, as banks continue to deploy AI systems that continue to learn from new data, integrate with third-party applications, and rely on large language models, the risk profile of the bank changes continuously. Continuously performing AI red teaming allows banks to ensure that their controls remain valid as their AI systems continue to evolve.

Therefore, the best AI governance programs will not select between using validation and AI red teaming. Rather, they will use both. Validation establishes a level of trust in an AI system, and AI red teaming tests the validity of that trust in a manner that reflects real world conditions. Using both approaches together enables banks to understand not only if an AI system performs according to expectations, but also if it can perform as expected despite being subject to misuse, manipulation, and adversarial pressure.

7. Conclusion

AI has become deeply embedded in the way banks make decisions, manage risk, and interact with customers. As these systems grow in complexity and autonomy, the cost of failure increases sharply. The most damaging AI incidents in banking rarely come from obvious bugs or outages, they emerge from subtle weaknesses that only appear under misuse, pressure, or adversarial behavior.

This is why an adversarial mindset is essential. Banks that rely solely on traditional controls often discover AI risks too late, after customer harm, regulatory intervention, or financial loss has already occurred. By systematically challenging assumptions, probing edge cases, and simulating real-world abuse, banks gain a far clearer understanding of where their AI systems are resilient and where they are fragile.

Equally important, this approach strengthens governance. It helps banks demonstrate to regulators, auditors, and internal stakeholders that AI risks are being actively identified and managed, not passively assumed away. When combined with strong model validation and oversight, adversarial testing becomes a powerful mechanism for building trust in AI-driven decisions.

Ultimately, secure and compliant banking AI is not achieved by confidence in models, but by evidence of rigorous testing. Institutions that invest early in structured adversarial evaluation will be far better positioned to scale AI safely, responsibly, and sustainably.

“Banking AI doesn’t fail loudly, it fails silently, until the damage is done.”

.svg)

.png)

.svg)

.png)

.png)