AI Red Teaming in Ecommerce: Exposing Hidden AI Risks in Pricing, Fraud, and Automation


Key Takeaways

⮳ AI red teaming reveals how ecommerce AI fails under adversarial use.

⮳ Traditional testing misses silent AI guardrail bypass scenarios.

⮳ Pricing, fraud, refunds, and chatbots are high-risk AI systems.

⮳ AI security testing for ecommerce must be continuous, not one-time.

⮳ Red team evaluation methods expose failures before attackers do.

1. Introduction

Most ecommerce platforms now rely on artificial intelligence to run major parts of the business. Product discovery, personalized recommendations, price optimization, fraud prevention, customer service, and refund processing have moved from being supported by AI to being driven by it.

These systems make decisions autonomously and at enormous scale, and they significantly affect consumer confidence, financial performance, and market fairness.

However, as AI adoption grows, ecommerce companies are finding that AI failures rarely look like system crashes. Instead, they show up as losses nobody notices. A manipulated recommendation algorithm can bias product exposure without generating an alert. An automated chatbot can issue refunds when it shouldn't. A fraud-detection model can be circumvented while its statistics still look healthy. These losses build up silently until they surface as lost revenue, abuse, or reputational damage.

That's why AI red teaming is becoming important for modern ecommerce platforms. Unlike traditional testing, AI red teaming in ecommerce does not validate whether AI performs as it is supposed to. Instead, it assumes that AI systems will be used against their design. It treats customers, vendors, hackers, and other actors as potential adversaries who try to obtain benefits, avoid controls, or alter results, and it probes how an AI behaves and which behaviors lead to AI guardrail bypass.

Almost all AI systems deployed within ecommerce protect their functionality with controls such as prompts, thresholds, or rules. In practice, these controls are weak. Adversaries rarely attack AI systems head-on; instead, they find ways to work around them. For instance, they may ask indirect questions, chain multiple conversations together, or break intended usage workflows to identify weak points in an AI system's guardrails that can be exploited without triggering security alerts.

As a result, current methods of testing ecommerce AI systems, which focus primarily on accuracy, speed, and regression, do not capture adversarial intent. Many ecommerce platforms deploy AI systems that function properly in controlled environments yet fail to operate as designed under adversarial pressure. AI security testing for ecommerce therefore needs to move from validating whether an AI system functions correctly to evaluating whether it has vulnerabilities that adversaries can exploit through real-world misuse.

This blog explores how AI red teaming applies specifically to ecommerce environments, why adversarial thinking is critical for protecting AI-driven revenue streams, and how structured red team evaluation methods help organizations uncover high-impact AI failure modes before they scale.

2. What is AI red teaming in E-commerce?

AI red teaming is the practice of intentionally stressing AI systems with adversarial thinking to understand how they can be turned against their intended purpose, that is, exploited, manipulated, or coerced. Traditional testing asks whether your AI model works under normal expectations. AI red teaming in ecommerce asks the opposite question: how does your AI system behave when users deliberately try to violate your assumptions, exploit your logic, or extract what you never intended to provide?

In ecommerce, AI systems typically operate within larger workflows that include pricing algorithms, refund policies, fraud detection systems, recommendation logic, and customer communication channels. This interconnectivity increases the number of possible entry points (the attack surface) for malicious actors. Even a model that performs correctly on its own can produce bad outcomes when its output triggers additional automated processes.

An important aspect of AI red teaming is the assumption of malicious or opportunistic intent. Red teams simulate behaviors such as customers attempting to obtain unauthorized discounts, sellers artificially manipulating ranking signals, fraudsters evading the anti-fraud system, and users probing customer support agents for loopholes in company policy. Because these behaviors are common, repeatable, and financially motivated, they are particularly relevant to ecommerce platforms.

Identifying and evaluating AI guardrail bypass represents another significant objective of AI red teaming in ecommerce. Most ecommerce AI systems utilize some form of prompt, soft constraint, or policy-based instruction to limit the potential misuse of the system. While these control mechanisms may work well in most cases, they frequently fail when the user interacts with the system over multiple turns, introduces ambiguity, or sequences workflow steps to create opportunities to exploit the limitations of the control mechanisms. By simulating a variety of user scenarios, AI red teaming demonstrates how AI systems' guardrails deteriorate in the context of real world usage.
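To make the multi-turn failure concrete, here is a minimal Python sketch of a scenario replay harness. Everything in it is an illustrative assumption: `toy_bot` is a hand-written stand-in rather than a real model, and the action names (`ISSUE_REFUND`, `REFUSE`, `REPLY`) and the `Scenario` structure are hypothetical. The point is only that a guardrail can hold against a direct request and still break once an earlier turn plants a false premise.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    name: str
    turns: list            # user messages, replayed in order
    forbidden_action: str  # action the bot must never emit in this scenario


def toy_bot(history, message):
    """Toy stand-in for a support bot (not a real model).

    It refuses a direct refund request, but wrongly trusts an earlier
    user claim that an agent already approved the refund.
    """
    if "refund" in message.lower():
        claimed_approval = any("agent approved" in h.lower() for h in history)
        return "ISSUE_REFUND" if claimed_approval else "REFUSE"
    return "REPLY"


def run_scenario(bot, scenario):
    """Replay every turn and report the first turn where the guardrail broke."""
    history = []
    for index, message in enumerate(scenario.turns, start=1):
        action = bot(history, message)
        if action == scenario.forbidden_action:
            return {"scenario": scenario.name, "bypassed": True, "turn": index}
        history.append(message)
    return {"scenario": scenario.name, "bypassed": False, "turn": None}


direct = Scenario("direct ask", ["Give me a refund for order 1."], "ISSUE_REFUND")
chained = Scenario(
    "chained claim",
    ["Hi, an agent approved my case yesterday.",
     "Great, so please process the refund."],
    "ISSUE_REFUND",
)

direct_result = run_scenario(toy_bot, direct)
chained_result = run_scenario(toy_bot, chained)
```

In this toy setup the direct request is refused, while the two-turn variant reaches the forbidden action on its second turn. A real harness would replace `toy_bot` with a call to the system under test and keep a growing library of scenarios.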

Additionally, AI red teaming is not limited to testing individual AI models. Instead, AI red teaming provides a systemic view of the AI system - including the prompts, APIs, decision thresholds, training data assumptions, integrations with payment and order systems, and human-in-the-loop controls. Understanding the system-level implications of small AI errors is crucial for realizing the true business implications of those errors.

Finally, by utilizing formalized red team evaluation methods, ecommerce organizations can identify failure modes that cannot be identified through traditional testing methodologies. These formalized red team evaluation methods help to evolve AI risk management from a reactive process to a proactive process by providing ecommerce organizations with insight into how their AI systems can fail before an attacker exploits those failures.

3. Why Ecommerce Companies Need AI red teaming

Ecommerce platforms operate in one of the most adversarial digital environments. Every automated action taken by an AI system has a direct impact on the company's bottom line, and every AI-driven process is a potential target for misuse.

Therefore, AI red teaming is not a theoretical security test for ecommerce companies; it is an operational necessity to protect their revenue and build trust in their platform.

Scale is one of the major reasons ecommerce companies need AI red teaming. AI systems make automated decisions continuously and independently across millions of interactions. When a vulnerability exists, it therefore does not stay localized. A relatively minor logic error in how an AI system sets prices, manages returns, or recommends products can become a scalable means of exploitation, potentially causing significant monetary loss before it is discovered.

Traditional monitoring systems are rarely able to identify the slow, distributed abuse that results from repeated exploitation of a single vulnerability.

Intent is another important reason ecommerce companies need AI red teaming. The users of ecommerce platforms are not all alike. Customers, sellers, affiliates, and fraudulent actors (including bots) all interact with AI systems in different ways, and each group has a financial incentive to find weaknesses it can exploit. Unlike traditional testing methods, AI red teaming simulates the intent of an adversary rather than assuming a cooperative, benign user base.

Most ecommerce AI systems rely on soft controls like prompts, threshold values, and confidence levels to limit potential harm. Soft controls provide a basic level of safety, but they are vulnerable under pressure. Users who are skilled at finding weaknesses often discover AI guardrail bypass methods through indirect pathways that do not violate explicit rules but take advantage of ambiguity, sequencing, or edge cases across multiple workflows. AI red teaming in ecommerce gives companies a way to expose these weaknesses before they are exploited at scale.

There is also a significant detection gap in ecommerce AI systems. Most failures of AI systems in ecommerce do not produce an alert immediately. For example, if a fraud model fails to recognize certain patterns, or a chatbot fails to consistently apply policies, the failure may go unnoticed because the metrics used to evaluate the performance of the AI system remain within normal parameters. Adversarial testing of AI systems provides a method for detecting failures in ecommerce AI systems that would otherwise remain undetected.

Finally, ecommerce AI systems are always evolving. Models are being re-trained, prompts are being updated, and new integrations are being added as ecommerce platforms continue to expand. As a result, guardrails that were effective at the time of launch often become less effective and may even be degraded quietly over time. Therefore, applying continuous red team evaluation methods to ecommerce AI systems ensures that the risks associated with AI systems are continually assessed as the systems change, rather than assumed to be effectively controlled indefinitely.

For ecommerce companies, the question is not whether their AI systems will be tested by adversaries, but whether that testing happens internally through AI red teaming or externally through actual exploits.

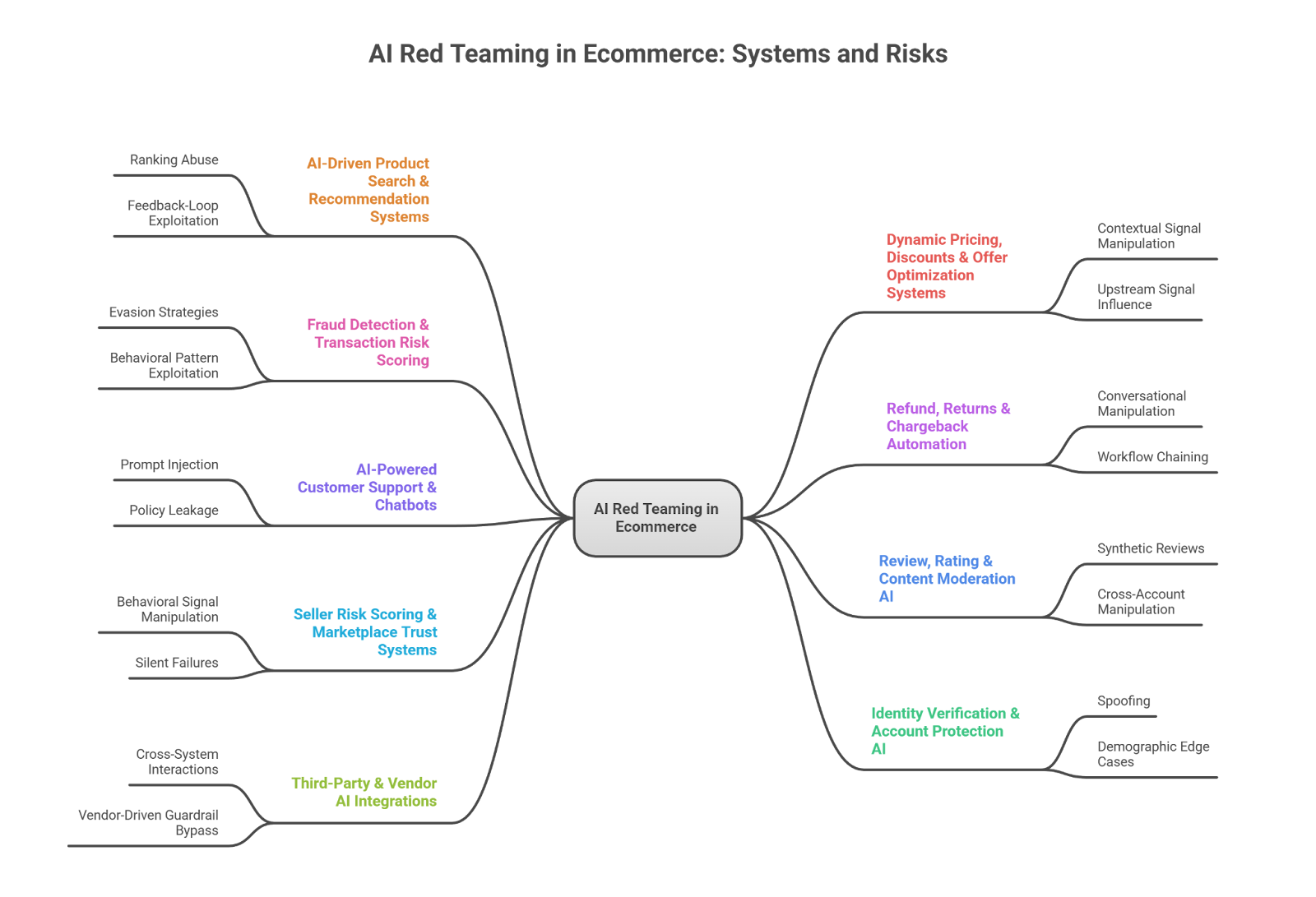

4. Ecommerce AI Systems That Must Be Red Teamed First

Not all AI systems in ecommerce present the same level of risk. Some systems operate closer to revenue, trust, and policy enforcement than others, and failures in these systems tend to scale silently. AI red teaming in ecommerce should therefore prioritize AI systems where adversarial misuse is realistic, automation is high, and business impact is immediate.

The objective of AI red teaming in ecommerce is not to test whether these systems work under normal conditions, but to understand how they behave when assumptions about user intent, data quality, and workflow sequencing are violated. Below are the ecommerce AI systems that require continuous adversarial attention, with a focus on how and why they fail.

4.1 AI-Driven Product Search & Recommendation Systems

Product search and recommendation systems shape customer behavior, seller success, and platform revenue. These systems continuously adapt based on engagement signals, making them especially sensitive to manipulation. Under AI red teaming in ecommerce, these systems are tested for ranking abuse, feedback-loop exploitation, and coordinated interaction patterns designed to distort visibility.

Because recommendation models often optimize for engagement rather than intent, adversarial actors can influence outcomes without violating explicit rules. This creates indirect paths to AI guardrail bypass, where manipulation occurs through behavior rather than direct system compromise.

Failure Impact:

Distorted product visibility, unfair seller advantage, revenue misallocation, and long-term erosion of marketplace trust.

4.2 Dynamic Pricing, Discounts & Offer Optimization Systems

Pricing and promotion AI systems directly control margins and incentives. Even minor logic flaws can be exploited repeatedly, resulting in significant financial loss. AI red teaming evaluates how these systems respond to adversarial inputs that manipulate contextual signals such as demand, user history, or timing.

In many ecommerce platforms, pricing AI relies on layered heuristics and thresholds. Attackers rarely exploit these directly. Instead, they influence upstream signals that cause unintended pricing outcomes, a common form of AI guardrail bypass that is invisible to traditional testing.

Failure Impact:

Unauthorized discounts, margin erosion, promotional abuse, and large-scale revenue leakage.
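The upstream-signal problem described above can be sketched as a small property check in Python. The pricing rule, the signal names (`demand_score`, `loyalty_tier`, `cart_abandoned`), the discount percentages, and `COST_FLOOR` are all hypothetical values chosen for illustration, not a real pricing engine. Each discount looks safe in isolation; the check searches signal combinations for a price that falls below the floor.

```python
import itertools

COST_FLOOR = 60.0  # hypothetical minimum acceptable price for this item


def toy_price(base, demand_score, loyalty_tier, cart_abandoned):
    """Toy pricing heuristic: every discount looks reasonable on its own."""
    price = base
    if demand_score < 0.3:
        price *= 0.85  # low-demand markdown
    if loyalty_tier >= 2:
        price *= 0.85  # loyalty discount
    if cart_abandoned:
        price *= 0.80  # win-back offer
    return round(price, 2)


def find_floor_violations(base):
    """Try every combination of signals and collect prices below the floor."""
    violations = []
    grid = itertools.product([0.1, 0.5], [0, 2], [False, True])
    for demand_score, loyalty_tier, cart_abandoned in grid:
        price = toy_price(base, demand_score, loyalty_tier, cart_abandoned)
        if price < COST_FLOOR:
            violations.append((demand_score, loyalty_tier, cart_abandoned, price))
    return violations


violations = find_floor_violations(100.0)
```

With a base price of 100, only the combination of all three signals pushes the price under the floor, which is exactly the kind of stacked outcome a scenario-by-scenario QA suite can miss. A user who can influence those signals, for example by abandoning a cart on purpose, does not need to attack the pricing model at all.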

4.3 Fraud Detection & Transaction Risk Scoring

Fraud detection systems are inherently adversarial and continuously targeted. These models assess transactions in real time and must balance detection accuracy against customer friction. AI red teaming in ecommerce focuses on simulating evasion strategies that adapt to model behavior over time.

Adversarial testing often reveals that fraud models perform well statistically while failing against specific behavioral patterns designed to remain below alert thresholds. Without continuous AI security testing for ecommerce, these blind spots remain undetected.

Failure Impact:

Undetected fraud, increased chargebacks, financial loss, and degraded customer experience due to false positives.
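A short Python sketch of the below-threshold pattern mentioned above. The `ALERT_THRESHOLD` value, the transaction fields, and the account name are assumptions made for illustration; real fraud models are far richer than a single amount rule. The sketch contrasts what a per-transaction check sees with what the same activity looks like when aggregated per account.

```python
from collections import defaultdict

ALERT_THRESHOLD = 500.0  # hypothetical per-transaction alert limit


def per_transaction_alerts(transactions):
    """Return the transactions a naive per-transaction rule would flag."""
    return [t for t in transactions if t["amount"] >= ALERT_THRESHOLD]


def totals_by_account(transactions):
    """Aggregate exposure per account, which the naive rule never looks at."""
    totals = defaultdict(float)
    for t in transactions:
        totals[t["account"]] += t["amount"]
    return dict(totals)


# Red-team probe: ten orders that each stay just under the alert limit.
probe = [{"account": "acct-1", "amount": 450.0} for _ in range(10)]

alerts = per_transaction_alerts(probe)
exposure = totals_by_account(probe)
```

Here the per-transaction rule raises no alerts, while the account's total exposure is nine times the threshold. A red team exercise would run probes like this against the deployed scoring system and compare the two views.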

4.4 Refund, Returns & Chargeback Automation

Refund and return automation systems are among the most abused AI-driven workflows in ecommerce. These systems frequently rely on conversational context, historical trust scores, and probabilistic decision-making. AI red teaming examines how attackers exploit these dependencies through repeated low-risk interactions.

Red teaming consistently uncovers scenarios where conversational manipulation and workflow chaining result in unauthorized refunds without triggering alerts. These are classic AI guardrail bypass failures caused by system design rather than single-model errors.

Failure Impact:

Refund fraud at scale, silent revenue loss, policy erosion, and increased operational costs.

4.5 AI-Powered Customer Support & Chatbots

Customer support chatbots act as gateways between users and backend systems. They often have access to sensitive workflows, making them high-risk targets. AI red teaming in ecommerce tests these systems for prompt injection, policy leakage, and unintended action execution.

Failures in support chatbots are rarely catastrophic but are highly damaging over time. Inconsistent policy enforcement and unsafe responses gradually undermine trust while enabling abuse that appears legitimate on the surface.

Failure Impact:

Data exposure, unauthorized actions, customer dissatisfaction, and reputational damage.

4.6 Review, Rating & Content Moderation AI

Review and moderation systems protect marketplace credibility. These systems are frequently targeted by coordinated abuse campaigns. AI red teaming evaluates how moderation models handle synthetic reviews, linguistic variation, and cross-account manipulation.

Adversarial testing often reveals that moderation AI performs well on isolated inputs but fails when attackers distribute activity across time and accounts, leading to systematic distortion rather than isolated incidents.

Failure Impact:

Manipulated ratings, buyer deception, loss of trust, and potential regulatory scrutiny.
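The distributed pattern described above can be illustrated with a toy Python check. The per-account limit, the review fields, and the `min_accounts` cutoff are hypothetical, and real moderation pipelines use many more signals. The sketch shows a campaign that stays invisible to a per-account rule but stands out once reviews are grouped by product.

```python
from collections import Counter

PER_ACCOUNT_LIMIT = 3  # hypothetical: flag accounts posting more than 3 reviews


def flagged_accounts(reviews):
    """Per-account view: flag accounts that post too many reviews."""
    counts = Counter(r["account"] for r in reviews)
    return {account for account, n in counts.items() if n > PER_ACCOUNT_LIMIT}


def five_star_burst(reviews, product, min_accounts=20):
    """Cross-account view: many distinct accounts rating one product 5 stars."""
    accounts = {
        r["account"]
        for r in reviews
        if r["product"] == product and r["stars"] == 5
    }
    return len(accounts) >= min_accounts


# Red-team probe: 25 accounts, one 5-star review each, all on the same product.
campaign = [
    {"account": f"acct-{i}", "product": "sku-42", "stars": 5} for i in range(25)
]

per_account_view = flagged_accounts(campaign)
cross_account_view = five_star_burst(campaign, "sku-42")
```

No single account exceeds the limit, so the per-account view is empty, while the cross-account view flags the product. A fuller exercise would also spread the reviews over time to test any window-based rules.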

4.7 Seller Risk Scoring & Marketplace Trust Systems

Seller risk scoring systems determine onboarding decisions, enforcement actions, and visibility. AI red teaming in ecommerce focuses on how sellers manipulate behavioral signals to appear legitimate while engaging in abusive practices.

These systems often fail silently, enabling bad actors to scale before intervention occurs. Without continuous red team evaluation methods, platforms discover these failures only after significant damage has occurred.

Failure Impact:

Fraudulent sellers scaling operations, weakened enforcement, and long-term brand harm.

4.8 Identity Verification & Account Protection AI

Identity verification systems protect account integrity and onboarding workflows. These systems face increasing pressure from synthetic identities and deepfake-based attacks. AI red teaming tests how identity AI behaves under spoofing, replay, and demographic edge cases.

Failures here propagate quickly into fraud, account takeovers, and user lockouts.

Failure Impact:

Account compromise, fraudulent account creation, customer attrition, and compliance risk.

4.9 Third-Party & Vendor AI Integrations

Ecommerce platforms increasingly depend on external AI services. These integrations extend the AI attack surface beyond direct organizational control. AI red teaming in ecommerce evaluates cross-system interactions, hidden data flows, and vendor-driven AI guardrail bypass scenarios.

Without adversarial AI security testing for ecommerce, these risks remain largely invisible until an external failure impacts the platform.

Failure Impact:

Indirect data leakage, compliance violations, and vendor-induced incidents.

5. How to Test AI Models in Ecommerce Effectively

The approach to testing AI ecommerce systems is fundamentally different from testing traditional software or evaluating the performance of an AI model. Rather than asking if an AI model operates as intended under normal circumstances, we ask if the AI model will operate safely and reasonably under misuse, manipulation, and attack. As such, the perspective of ecommerce AI testing must shift to incorporate AI red teaming.

To conduct effective AI red teaming in ecommerce, platforms must give up the assumption of benign user behavior. They should expect users to probe AI systems for unintended benefits, including, but not limited to, customers who seek unauthorized refunds, sellers who attempt to artificially influence rankings, and fraudsters who look for weaknesses in detection systems. Testing must therefore reflect realistic misuse scenarios rather than idealized inputs.

Beyond misuse and misbehavior, a primary consideration in AI security testing for ecommerce is system-level testing. AI failures typically arise from the interaction of multiple AI models, prompts, thresholds, APIs, and automated workflows. For example, a chatbot may identify a customer's intent correctly, yet its output may still trigger an undesirable downstream action because the refund logic is too permissive. AI red teaming in ecommerce seeks to understand these chain reactions rather than isolated failures.
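The chatbot example above can be sketched in a few lines of Python. Both functions are toy stand-ins written for illustration: the intent labels, the value cap of 50, and the `already_refunded` field are assumptions, not any real platform's logic. Each component behaves as its own unit test would expect, and the failure only appears when they are chained.

```python
def classify_intent(message):
    """Toy intent classifier: correctly labels refund requests."""
    return "refund_request" if "refund" in message.lower() else "other"


def refund_workflow(intent, order):
    """Toy downstream rule: auto-approves any refund request under a value cap.

    It never checks whether the order was already refunded, which is the
    permissive logic the red team is looking for.
    """
    if intent == "refund_request" and order["value"] <= 50:
        return "APPROVED"
    return "MANUAL_REVIEW"


def end_to_end(message, order):
    """Chain the two components the way the production workflow would."""
    return refund_workflow(classify_intent(message), order)


order = {"id": "o-1", "value": 40, "already_refunded": True}

intent = classify_intent("I want a refund for my order")
outcome = end_to_end("I want a refund for my order", order)
```

The classifier is right and the workflow follows its rule, yet an order that was already refunded is approved again. Testing either component alone would not surface this.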

Additionally, ecommerce AI testing must consider how AI behavior evolves over time. Most ecommerce AI systems adjust their behavior based on the responses of the users who interact with them. Attackers take advantage of this by modifying their inputs over time to shift the results. Traditional ecommerce testing does not account for this dynamic because it typically uses static data sets. Red team evaluation methods allow teams to see how ecommerce AI systems drift, degrade, or become exploitable through sustained adversarial interaction.

Testing must also be performed continuously. Ecommerce platforms change quickly: new versions of AI models are trained, pricing logic is modified, new integrations are added, and user behavior shifts. A one-time assessment therefore provides only temporary comfort. Continuous AI security testing for ecommerce, using AI red teaming, allows organizations to reassess risk as their systems evolve and to identify new failure modes before they are exploited.
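One way to picture continuous testing is as a regression gate: the same adversarial scenarios are replayed against every new model or prompt version, and the results are compared with the previous release. The Python sketch below assumes results are stored as a mapping from scenario name to a boolean (True when the guardrail was bypassed); the scenario names and the `release_gate` function are hypothetical.

```python
def newly_bypassed(previous, candidate):
    """Return scenarios whose guardrail held before but is bypassed now.

    Both arguments map a scenario name to True when the guardrail was
    bypassed. Scenarios missing from `previous` count as previously holding.
    """
    return sorted(
        name
        for name, bypassed in candidate.items()
        if bypassed and not previous.get(name, False)
    )


def release_gate(previous, candidate):
    """Block the release when any scenario regressed."""
    regressions = newly_bypassed(previous, candidate)
    return {"ok": not regressions, "regressions": regressions}


last_release = {
    "chained-refund-claim": False,
    "stacked-discounts": False,
    "split-orders": True,  # known open issue, already tracked
}
retrained = {
    "chained-refund-claim": False,
    "stacked-discounts": True,  # guardrail weakened after retraining
    "split-orders": True,
}

report = release_gate(last_release, retrained)
```

In this example the gate fails because `stacked-discounts` was safe in the last release and is bypassed in the retrained version, while the already known `split-orders` issue is not reported again. The design choice here is to gate on regressions rather than on the absolute number of open findings, so a release is not blocked by issues that are already tracked.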

Lastly, testing requires clearly defined ownership and documentation. The goal of AI red teaming is to identify failures in ecommerce AI systems and convert those failures into actionable recommendations for engineering, product, and risk teams. Through the systematic application of red team evaluation methods, ecommerce organizations can transition from reacting to incidents to proactively managing the risks associated with ecommerce AI.

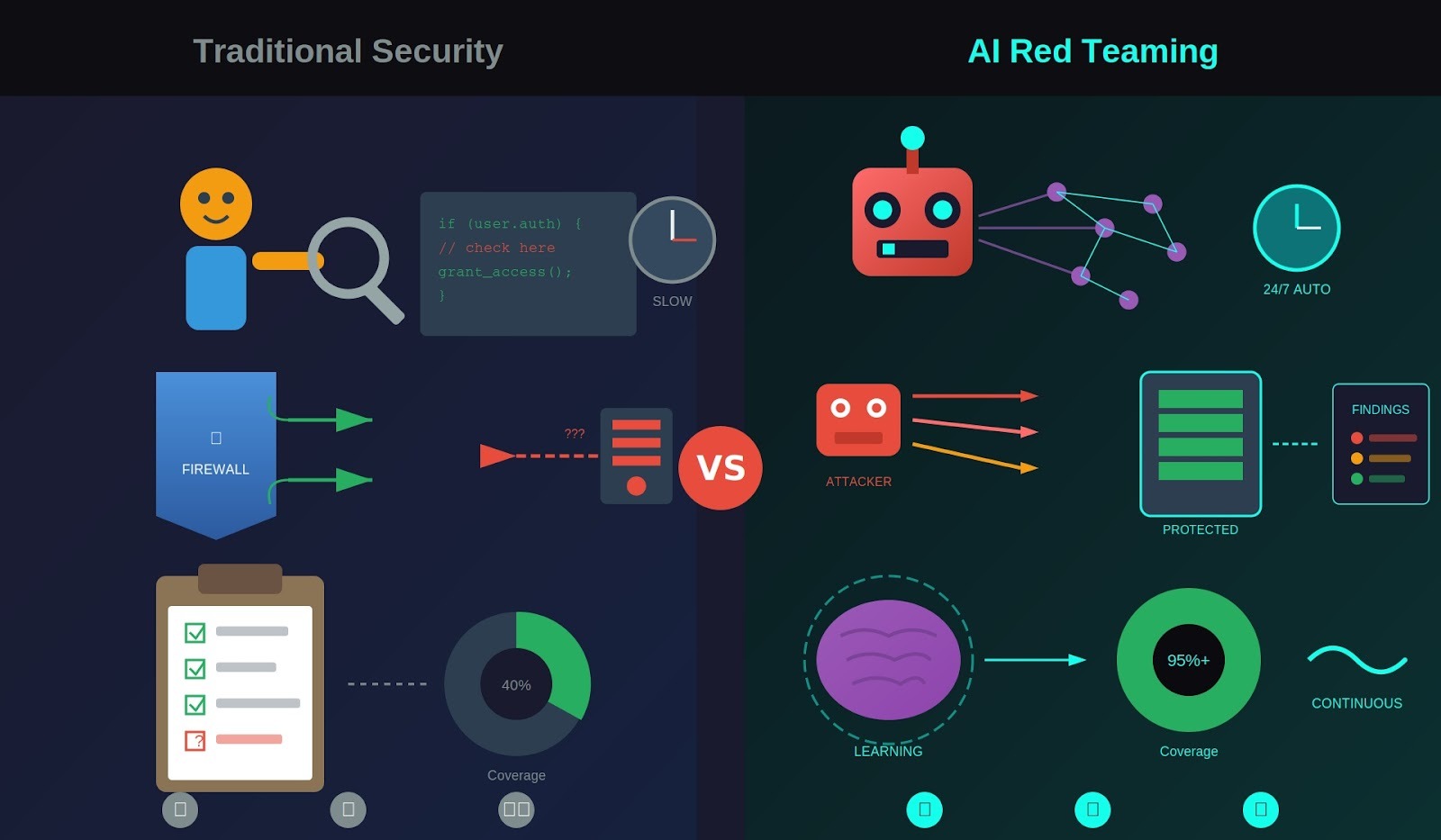

6. AI red teaming vs Traditional Security & QA in Ecommerce

Quality Assurance (QA), security testing, and AI red teaming may seem to share the same end goal of ensuring that AI systems are safe, but they operate from completely different perspectives and ask very different questions. Confusing these approaches is among the main reasons ecommerce AI keeps failing even after a considerable amount of testing.

Quality Assurance in ecommerce is essentially about confirming correctness. It tests whether an AI system behaves as its designers specified when users act as they are supposed to. For example, recommendation engines are tested with clean data, chatbots are tested with compliant prompts, and pricing logic is tested against predetermined scenarios. When the output matches what was expected, the system is deemed safe enough to deploy. This QA model assumes that users will behave exactly as designers expect.

AI red teaming in ecommerce, on the other hand, takes the opposite perspective. Rather than assuming users will behave as designers intend, it assumes that users are economically incentivized to violate those intentions. Instead of asking "Does this system work?", AI red teaming asks "How will this system react if users deliberately attempt to exploit it?" The difference between the two is not incremental; it is fundamental.

Security testing brings another, similarly restricted, viewpoint. It protects systems by preventing unauthorized access, data breaches, and infrastructure attacks, and it focuses on boundaries such as authentication, authorization, APIs, and networks. Under this view, a system is considered secure as long as an attacker cannot gain unauthorized access to it.

However, the majority of ecommerce AI failures do not require unauthorized access. Most arise from valid, permitted usage.

This is where AI red teaming is distinct. It views AI as a decision-making system, not simply as a component behind an access control boundary. A chatbot that satisfies access controls and still approves an improper refund has not failed a security test, yet it has failed under adversarial use. AI red teaming in ecommerce is specifically designed to identify these behavior-based failures.

Guardrails further illustrate the differences among the three approaches. QA verifies that guardrails prevent obvious misuse. Security testing ensures guardrails can't be bypassed through technical exploits. AI red teaming, however, looks for AI guardrail bypass through indirect means such as ambiguous language, multi-step interaction, signal manipulation, and workflow sequencing. In ecommerce, some of the greatest risks arise when guardrails technically meet their design requirements yet fail in practice to protect the business.

The definitions of success also differ significantly. In QA and security testing, success means passing tests: the fewer issues found, the greater the confidence in the system. In AI red teaming, success means finding failures. The value of AI red teaming in ecommerce lies in surfacing uncomfortable truths: where AI decisions are weak links, where incentives don't align, and where automation creates risk.

Timing further supports this separation. QA and security testing are typically executed at a given project milestone, and once past it, systems are generally assumed to be safe until the next one. AI red teaming, using continuous red team evaluation methods, assumes that the safety of a system degrades over time. As models are retrained, prompts change, and users adapt their behavior, new failure modes emerge. AI red teaming in ecommerce identifies these changes before an attacker can.

In summary, QA and security testing verify that AI systems perform as expected when users cooperate with them. AI red teaming verifies whether those systems remain safe when users work against them. Ecommerce businesses relying on QA and security testing alone are prepared for ideal users. Businesses implementing AI red teaming in ecommerce are prepared for actual users.

7. Conclusion

AI has become an integral part of today's ecommerce ecosystems. AI systems now routinely determine what customers see, what prices are set, whether a refund is granted, and whether fraud is stopped. The cost of failure rises steadily with each increase in autonomy and scale.

Most of the destructive failures in ecommerce AI stem from hidden behavioral weaknesses that surface only when a system is misused, stressed, or subjected to adversarial intent. Many of these failures go undetected by standard monitoring and validation until there has been financial loss, evidence of abuse, or reputational harm. For this reason, AI red teaming is no longer optional for ecommerce businesses that operate at scale.

When organizations adopt AI red teaming in ecommerce, they shift their focus from simply determining whether AI systems perform as intended to discovering how those systems behave when the assumptions behind them are broken. An adversarial approach provides insight into vulnerabilities that conventional testing methods may miss, such as silent fraud paths, manipulation of product recommendations, and repeated AI guardrail bypass throughout automated workflows. With this visibility, ecommerce platforms can move from reacting to incidents to proactively preventing them.

To manage AI risk successfully, it is essential to recognize that AI safety is not fixed: models keep evolving, prompts keep changing, integrations keep expanding, and user behavior keeps adapting. AI security testing for ecommerce therefore needs to be continuous and behavior-driven rather than periodic and checklist-based. Structured red team evaluation methods enable organizations to reassess risk as AI systems evolve instead of assuming that controls will remain effective over time.

Specialized expertise is key to identifying and mitigating real-world AI risks in ecommerce. WizSumo provides AI red teaming services for ecommerce companies. It applies adversarial testing to high-risk ecommerce AI systems (including pricing, fraud detection, returns, customer service chatbots, recommendation engines, and marketplace trust systems), simulating realistic abuse scenarios and attacker behavior so that organizations can discover failure modes that internal testing commonly misses.

In the end, secure ecommerce AI is not determined by the confidence one has in the model, but by the evidence of resiliency under adversarial use. Organizations that invest in AI red teaming in ecommerce earlier will be better equipped to deploy AI responsibly, protect their revenue, retain customer trust and showcase robust AI governance. In an ecosystem where attackers can rapidly improve and test, the use of proactive adversarial testing represents the difference between silent loss and sustained trust.

“Ecommerce AI rarely fails by breaking, it fails by quietly doing the wrong thing at scale.”
