AI red teaming in Education : Testing Assessment, Learning, and Proctoring AI

.png)

Key Takeaways

AI red teaming focuses on how education AI systems fail, not just how they perform.

Traditional AI security testing in education cannot detect adversarial misuse or silent failure modes.

High-impact education AI systems must be tested under real-world misuse scenarios.

Strong Educational AI security requires continuous adversarial evaluation, not one-time validation.

Embedding AI safety in education early prevents student harm, trust erosion, and regulatory risk.

1. Introduction

Modern education is increasingly using artificial intelligence as part of its basic infrastructure. Schools, colleges and universities along with many edtech companies now use AI for tutoring students, assessing assignments, suggesting pathways for learning, and informing administrative decision-making. Educational AI systems are often deployed at a massive scale and significantly impact academic results, which means that when they fail, the consequences can be broad, and may have serious implications for both the students affected and their institutional partners as well as their overall ability to believe in and utilize learning technologies in the future.

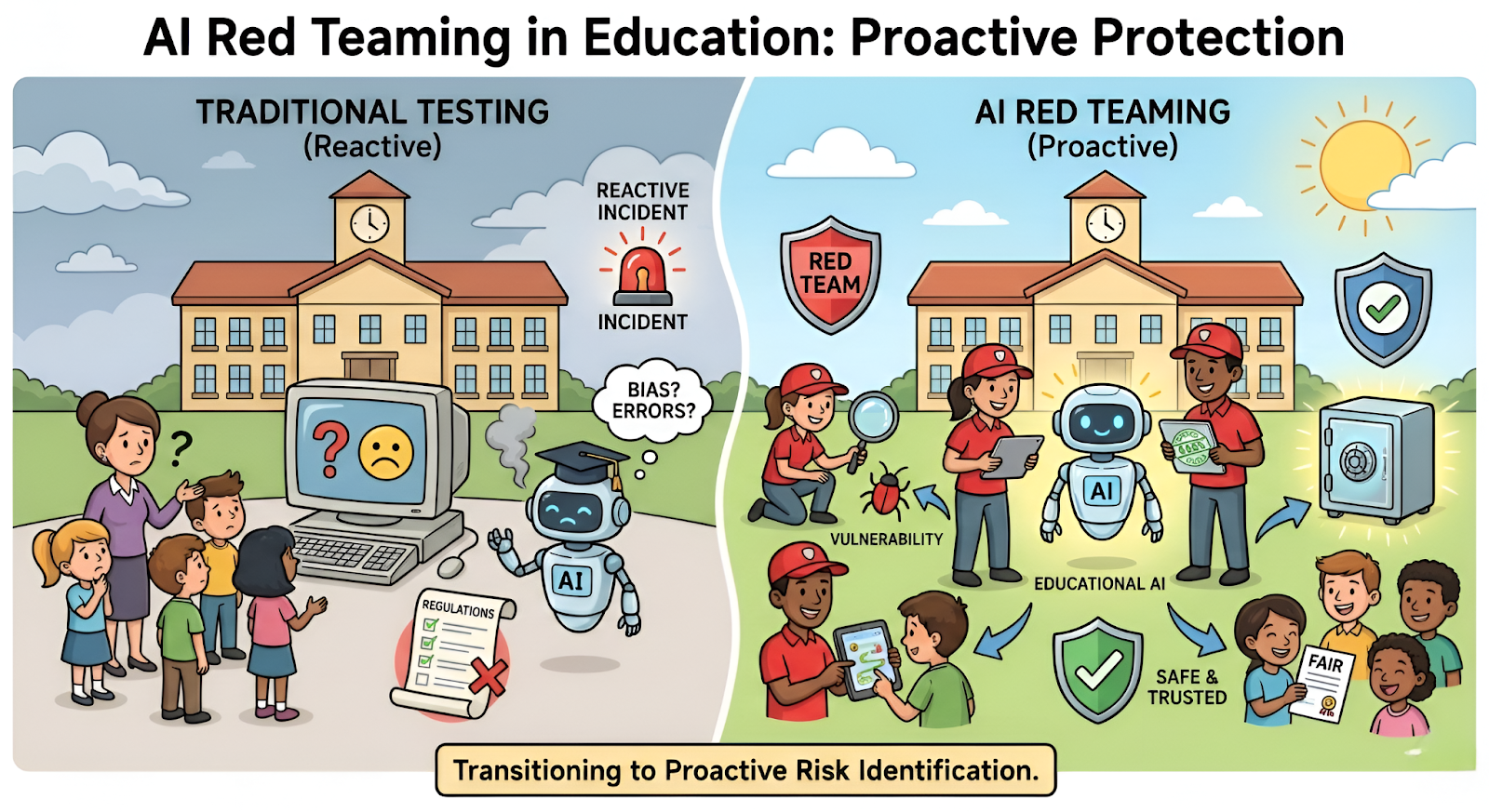

Many institutions currently address AI-related risks in education by utilizing testing methodologies similar to those used for other forms of technology to measure accuracy, performance, and bias within controlled test environments. Although important, this type of testing does not accurately represent how educational AI systems will behave in misused scenarios, edge case situations, and/or under adversarial attacks. It is here where AI red teaming will become crucial to understanding how educational AI can fail in actual practice (as opposed to being asked if an AI system operates as intended).

The risks associated with educational AI systems are also particularly high. In addition to processing highly sensitive student information, educational AI systems make recommendations regarding grades and admission into programs, and ultimately, inform the way in which students learn. Thus, without intentional and adversarial testing of these systems, well-tested systems can provide inaccurate, biased, and/or provide silent exposure to student data. The purpose of AI red teaming in education, therefore, is to identify these hidden risks prior to the potential for significant academic injury, potential regulatory issues, or institutional reputation damage.

Given the increasing rate of AI adoption in all aspects of education, institutions can no longer assume AI systems are safe based solely on their belief of safety. Proactive AI security testing in education using a framework of adversarial thinking is required to enable educators and administrators to ensure that educational AI systems remain trustworthy, equitable, and resilient. This paradigmatic shift is not about impeding innovation; rather it is about ensuring that educational AI systems can be scaled appropriately to maintain the public's faith in education technologies.

2. How AI Is Being Used Across Modern Education Systems

AI has now been integrated into almost all levels of modern education. From how teachers teach (and how students learn) to how institutions make decisions about their students and staff. Therefore, these systems are no longer independent, but rather linked to other systems including data pipes, student records, and subsequent academic processes and workflows. The increasing usage of AI in education creates a much larger “risk surface” for AI red teaming and AI red teaming in education, and will be essential to understand how Educational AI behaves in the face of realistic stressors.

As AI becomes more autonomous, and therefore influential, the concern of Educational AI security has moved beyond just evaluating the performance of models toward the evaluation of the behavior of systems. Most failures in education AI are not immediately recognizable as technical errors, but as small, repetitive decisions made by an AI that either disadvantages a student or exposes sensitive information. Thus, AI security testing in education must assess how AI systems are both being used and misused, and how they function when subjected to real world stressors.

2.1 AI in Classrooms, Universities, and EdTech Platforms

AI is already changing educational experiences in the classroom; university setting and as part of EdTech platforms. AI-based tutoring systems create explanations for students, answers to their questions, and guides them through difficult areas of study. AI-based automated assessment systems assess student work and assignments at a level that can occur without human oversight. The decision-making process as to what type of material the student will encounter next is determined by recommendation engines that use AI to make decisions on a student's learning path and potential outcomes.

The learning processes provided by adaptive and personalized learning systems continuously change the difficulty of the lessons, the pathway the student takes and the type of feedback they receive based upon their behavior. Although these systems increase efficiency, there are now many additional risks introduced into the system. Until AI red teaming in education, there has been no way to test whether an AI system reinforces bias or provides wrong guidance and/or if the system is vulnerable to being manipulated using Adversarial Inputs. Therefore, AI safety in education requires that the testing of an AI system goes beyond testing accuracy to testing how the system responds to violations of its assumption regarding student behavior.

2.2 Sensitive Data and High-Risk AI Workflows

Highly sensitive student information is processed by Education AI systems. Student information includes: academic records; grades; assessment scores; behavioral data; and learning analytics. The workflows using this sensitive data will directly affect the academic progress of students; whether a student is eligible for academic programs; and ultimately the long-term success of the students in terms of their educational outcome.

Therefore, these workflow processes using AI have significant value as they become prime targets for misuse, manipulation, and accidental disclosure.

The integration of AI within the workflow processes for many of these systems enables silent propagation of system failures across multiple platforms. Thus, relying solely upon traditional methods of control will be insufficient to protect against misuse, manipulation, or unauthorized disclosure. Therefore, it is necessary to perform continuous AI security testing in education, and AI red teaming in education, to discover potential data leakage, biased decision making, or logic abuse. By performing continuous AI security testing in education and continuous AI red teaming in education we ensure that the decisions made through AI Systems used in Educational settings are both fair and secure, and therefore, defensible.

3. Why Education Institutions Need AI red teaming

The environment of an educational institution has the highest level of trust of any. In fact, students, parents, teachers, and government officials all expect academic decision-making to be fair, transparent, and safe. Therefore, when an artificial intelligence (AI) system fails within such a trusted environment, the consequences go beyond just a technical issue, and can negatively impact student success, reputation of the educational institution, and the trust in the educational institution.

Therefore, AI red teaming will be required for every education institution that intends to implement AI at large-scale.

Most educational institutions test their AI systems prior to implementation using traditional testing methods, such as evaluating how accurate an AI system is, whether it contains biases based upon race, gender, etc., and whether it complies with all applicable laws and regulations. Educational environments, however, are rarely controlled or benign; students experiment with AI systems, teachers rely upon AI system output while working under time constraints, and the input to AI systems is often unpredictable. AI red teaming in education fills the void by testing AI systems in educational environments to determine how they operate when the assumptions that govern its use fail.

Additionally, there is a growing concern regarding the potential for AI systems to violate both regulatory requirements and ethics requirements. Since most AI systems used in education collect sensitive information about students, and since many educational decisions (e.g., course placement, graduation eligibility, etc.) are influenced by AI systems, the potential for an AI system to experience a failure could result in serious repercussions including: violation of students' rights to protect their private data, denial of opportunities to students who were unfairly treated by an AI system, and/or failure to comply with regulations governing education.

Thus, AI red teaming provides a framework for identifying potential vulnerabilities in an AI system's operation that would otherwise go undetected until they have manifested themselves in some way in a live production environment. Conversely, AI security testing in education that does not incorporate adversarial testing of an AI system's operation in educational environments is unlikely to uncover these vulnerabilities.

Lastly, reputational risk associated with the use of AI systems in educational environments is substantial. Unlike technical outages which are typically evident and receive immediate attention, AI system failures in educational environments are generally quiet and often occur over long periods of time. Examples of AI system failures in educational environments include consistently providing inaccurate or unfair grades to students, advising students in ways that place them at risk, and making inconsistent decisions. The lack of proactive testing makes it difficult for educational institutions to detect these types of failures until they become widespread and well-documented. By incorporating AI safety in education ,through the structure of adversarial evaluations, educational institutions can identify and address potential silent failure modes before they cause widespread harm.

In conclusion, having Educational AI security requires demonstrating that an educational AI system can perform under the stresses of a real world environment, not simply relying upon the accuracy of the model. AI red teaming in education allows educational institutions to transition from reactive incident management, to proactive risk identification, thereby protecting students and maintaining the integrity of AI-based learning systems as they grow and expand.

4. Education AI Systems That Must Be Red Teamed First

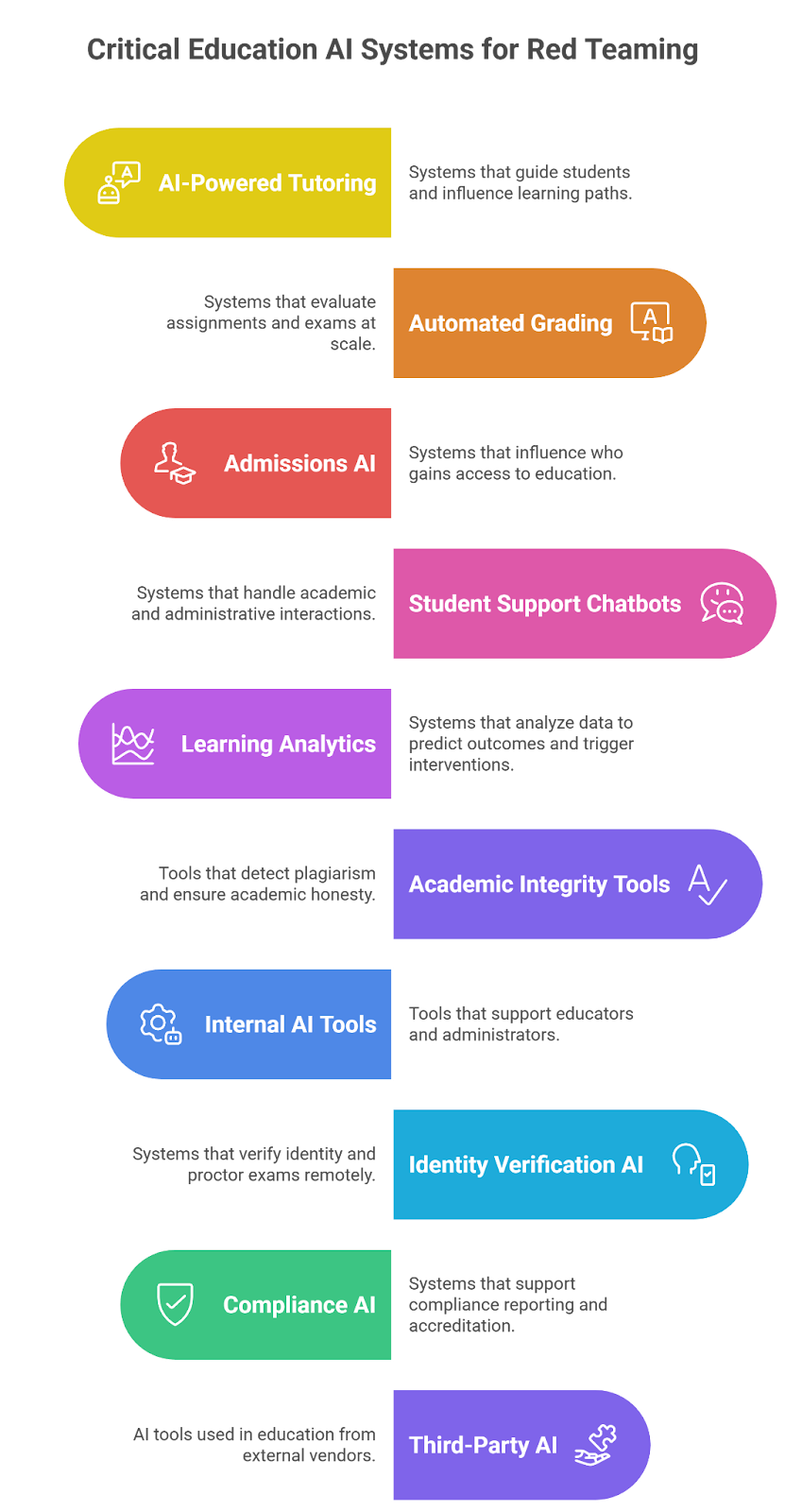

Not all AI systems in education carry the same level of risk. AI red teaming should be prioritized for systems that directly influence student outcomes, institutional decisions, or regulatory exposure. In AI red teaming in education, the focus is on AI systems where failures remain silent, scale quickly, and are difficult to detect through traditional AI security testing in education.

Below are the most critical education AI systems that must undergo continuous adversarial evaluation. For each use case, the emphasis is on how the system can fail and what that failure means for Educational AI security and AI safety in education.

4.1 AI-Powered Tutoring & Personalized Learning Systems

AI tutors and personalized learning systems guide students through academic material and influence learning paths. Because students trust these systems, incorrect or manipulated outputs can cause widespread academic harm.

Failure Impact:

⟶ Misinformation and unsafe academic guidance

⟶ Reinforcement of biased or incorrect learning paths

⟶ Long-term impact on student understanding

4.2 Automated Grading & Assessment Systems

Automated grading systems evaluate assignments, exams, and subjective responses at scale. These systems are high-risk because errors directly affect fairness and academic progression.

Failure Impact:

⟶ Unfair or inconsistent grading outcomes

⟶ Bias amplification across student groups

⟶ Appeals, disputes, and loss of institutional trust

4.3 Admissions & Student Screening AI

Admissions and screening systems influence who gains access to education. Failures here create ethical, legal, and reputational risk.

Failure Impact:

⟶ Proxy discrimination and unfair exclusions

⟶ Regulatory and compliance violations

⟶ Legal challenges and reputational damage

4.4 Student Support Chatbots & Virtual Assistants

AI chatbots handle academic, administrative, and sometimes sensitive student interactions. These systems significantly expand the attack surface.

Failure Impact:

⟶ Unauthorized disclosure of student data

⟶ Prompt injection and jailbreak exploitation

⟶ Loss of student trust

4.5 Learning Analytics & Student Monitoring Systems

Learning analytics systems analyze behavioral and performance data to predict outcomes and trigger interventions. These systems often fail silently.

Failure Impact:

⟶ Incorrect risk labeling of students

⟶ Biased intervention decisions

⟶ Privacy and ethical violations

4.6 Academic Integrity & Plagiarism Detection Tools

Academic integrity tools operate in an adversarial environment where users actively attempt evasion. Traditional testing rarely reflects real misuse.

Failure Impact:

⟶ False positives leading to unfair penalties

⟶ Undetected academic misconduct

⟶ Erosion of academic credibility

4.7 Internal AI Tools for Educators & Administrators

Internal AI tools support grading, reporting, and decision-making. These systems often have access to sensitive information and minimal oversight.

Failure Impact:

⟶ Hallucinated or misleading internal guidance

⟶ Confidential data exposure

⟶ Governance and audit failures

4.8 Identity Verification & Remote Proctoring AI

Proctoring and identity verification systems rely on biometric and behavioral signals. They are increasingly targeted by spoofing and deepfake attacks.

Failure Impact:

⟶ Wrongful accusations of misconduct

⟶ Accessibility and bias concerns

⟶ Legal and regulatory scrutiny

4.9 Compliance, Reporting & Accreditation AI

Some institutions use AI to support compliance reporting and accreditation processes. Failures here carry high regulatory risk.

Failure Impact:

⟶ Incomplete or hallucinated reporting

⟶ Regulatory penalties

⟶ Loss of institutional credibility

4.10 Third-Party & Vendor AI Used in Education

Education institutions increasingly rely on third-party AI tools. These integrations introduce supply-chain risk beyond direct institutional control.

Failure Impact:

⟶ Indirect data breaches

⟶ Compliance violations through vendors

⟶ Limited visibility into AI behavior

5. How to Test Education AI Systems Effectively

The process of testing AI in educational settings is much different than other types of testing (such as testing software or evaluating AI models). Instead of determining whether an AI model performs well in scenarios that are assumed to occur normally, testing of AI in educational settings is about understanding how an AI will perform when its assumptions fail.

This is where AI red teaming, which is designed to test AI in a way that simulates misuse, manipulation and other types of adverse behavior that may be used against an AI, becomes an important part of developing and implementing effective testing strategies for educational organizations.

While many of the current AI security testing in education strategies focus on superficial testing, such as validating accuracy, detecting bias, and reviewing policies; they all assume that users will behave cooperatively and provide predictable input. However, educational settings are inherently unpredictable. Students will inevitably try to manipulate or exploit AI, while adversaries will develop new tactics to evade detection by AI systems. Additionally, AI red teaming in education recognizes that educational AI systems are not static objects that simply require validation. They are dynamic systems that make decisions and can be influenced by a wide variety of factors.

To create an effective testing program, there are several key components. First and foremost, there needs to be an effective Adversarial Threat Modeling strategy in place. Organizations must identify how students, insiders or outside actors would potentially manipulate AI systems in order to gain an advantage over others, including manipulating prompts, poisoning training data, evading detection and manipulating logic at both the model level and workflow level. AI red teaming in education views AI systems as decision-making assets that can be compromised, not as static tools that simply require validation.

Another key area of testing is System-Level Testing. Educational AI systems typically operate within complex workflows and do not exist in a vacuum. Outputs from one system often flow directly into workflows related to grading, admissions, etc. AI red teaming in education assesses how failures in one system cause cascading failures in subsequent systems without generating alerts. Traditional AI security testing in education usually does not account for these linked failure modes, reducing the effectiveness of Educational AI security.

Ongoing testing is another important aspect of ensuring the effectiveness of AI systems in educational environments. Educational AI systems are constantly evolving due to changing data, revised prompts, updated curricula, and new vendor integrations. A single assessment of an AI system's effectiveness is rapidly becoming outdated. AI red teaming in education continues to test AI systems as their underlying architecture and usage patterns evolve to ensure that new vulnerabilities are detected as soon as possible. This ongoing testing methodology is essential to establishing long term AI safety in education.

Lastly, an effective testing methodology must evaluate the impact of AI failures rather than merely the frequency of failures. An organization must determine what occurs when an AI makes a mistake, provides uncertain output or is manipulated. Does the AI incorrectly guide students? Does a biased output negatively impact student grades? Does sensitive information leak quietly? AI red teaming in education measures the impact of AI failures in terms of real-world consequences to strengthen Educational AI security beyond mere theoretical assurances.

6. Real-World Scenarios: What Happens Without AI red teaming

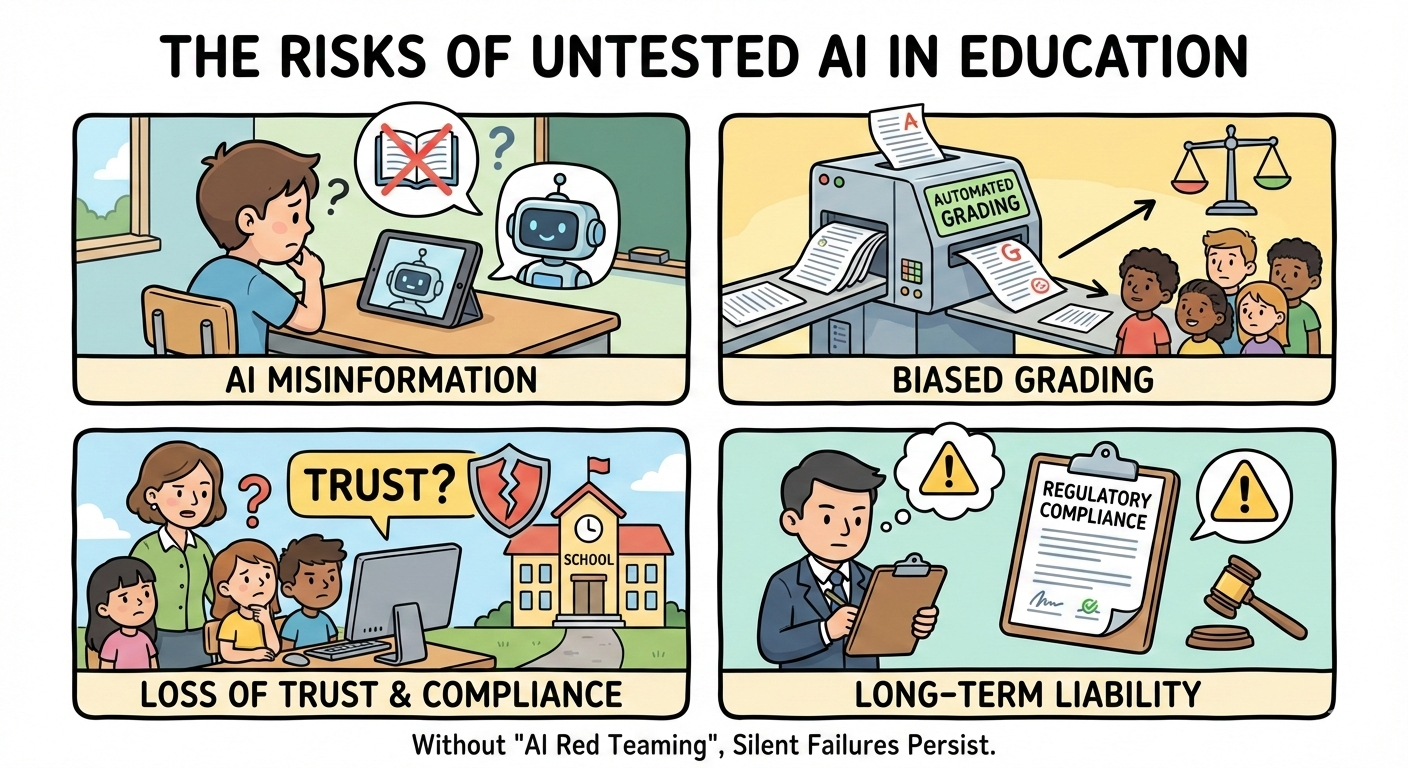

AI failures in education rarely appear as sudden system outages. Instead, they surface gradually through incorrect guidance, unfair decisions, and hidden data exposure. These failures often go undetected because the systems technically continue to function as designed. Without AI red teaming, education institutions may not realize that harm is occurring until patterns emerge at scale.

Traditional AI security testing in education is not designed to detect these silent failure modes. Accuracy dashboards and bias reports do not reveal how AI behaves when intentionally misused, stressed, or manipulated. This is precisely why AI red teaming in education is essential for understanding real-world risk.

6.1 When Educational AI Fails Students and Institutions

The most common type of educational AI error relates to the production of misinformation through AI. Both AI tutors and AI academic assistants can generate answers that appear to be correct but are actually wrong; provide dangerous advice or untrustworthy information; or make suggestions that could mislead a student (or educator) into making poor choices. The reason this type of misinformation is so prevalent is that it is produced with confidence; and as a result, both students and educators will accept those responses at face value. Additionally, because there are no AI safety in education mechanisms implemented to catch and eliminate such errors, they can quietly spread throughout entire classrooms and even across various platforms.

A second very common problem is bias within grading processes. As mentioned previously, automated grading systems can also have a systemic disadvantage against specific student groups based on the nature of the data upon which the system was trained, or based on proxy variables, or based on how the input from a particular student group is processed differently than other student groups. When these grading systems are not subject to an AI red teaming, the institution will likely continue using the same flawed grading system, resulting in a lack of fairness and academic integrity. This lack of Educational AI security will likely lead to numerous complaints, appeals, and potential long-term damage to the reputation of the institution.

6.2 Long-Term Impact on Trust and Compliance

The most significant long term risks from untested education AI are a result of regulatory requirements. Educational institutions will need to demonstrate their ability to proactively manage the risks associated with AI systems that affect students' data and/or educational outcomes. The burden of proof, if there is no AI red teaming in education and AI security testing in education for the failures of AI systems, will be placed upon the educational institution.

Loss of trust by students and educators also poses a major threat as they begin to question the use of AI in the educational decision making process (i.e., AI appears to be making decisions that are inconsistent, biased, or non-transparent). Loss of trust is difficult to recover and will lead to a decrease in the rate of adoption of AI in educational institutions, as well as an increase in the level of oversight that educational institutions will experience. Educational institutions can ensure that they maintain the trust of their students and educators, through the inclusion of educational AI security through structured adversarial evaluation processes, which will help to ensure accountability and provide evidence that educational institutions have evaluated AI driven learning systems and have ensured that those systems are safe for use in education. In the end, Educational AI security is based upon whether or not AI systems have been evaluated and tested against potential misuse and failure in actual world usage.

7. Conclusion

AI is now deeply embedded in how education systems teach, assess, and make decisions. As these systems scale, the cost of failure increases sharply. The most damaging incidents do not come from obvious technical breakdowns, but from subtle weaknesses that only emerge under misuse, pressure, or unexpected behavior. This is why AI red teaming must become a standard practice rather than an optional safeguard.

Institutions that rely solely on traditional validation or surface-level AI security testing in education often discover AI risks too late, after students are harmed, trust is lost, or regulators intervene. In contrast, AI red teaming in education provides a proactive way to uncover how AI systems actually fail in real-world conditions. It shifts the focus from assumed correctness to proven resilience.

As education AI continues to evolve, strong Educational AI security depends on continuous adversarial evaluation. Models change, data drifts, prompts evolve, and new integrations expand the attack surface. Embedding AI safety in education requires ongoing testing that reflects how systems are truly used, misused, and stressed over time.

This is where WizSumo AI plays a critical role. WizSumo AI specializes in AI red teaming for high-impact, regulated environments, helping education institutions systematically identify hidden failure modes across tutoring systems, grading engines, admissions workflows, chatbots, and internal AI tools. By combining deep adversarial testing expertise with practical domain understanding, WizSumo AI enables institutions to move from reactive incident response to proactive risk discovery.

Ultimately, secure and trustworthy education AI is not achieved by confidence in models, but by evidence of rigorous testing. Institutions that invest early in AI red teaming in education, continuous AI security testing in education, and strong AI safety in education foundations will be best positioned to scale AI responsibly, while protecting students, educators, and institutional integrity.

“Education AI doesn’t fail loudly, it fails quietly, shaping outcomes long before anyone notices.”

.svg)

.png)

.svg)

.png)

.png)