AI red teaming in fintech: Securing High-Risk Financial AI Systems

.png)

Key Takeaways

AI red teaming in fintech focuses on how AI systems fail, not just how they perform.

Traditional testing cannot expose model manipulation attacks in real fintech environments.

High-impact fintech AI systems require continuous adversarial evaluation.

AI security testing in fintech must assume intentional misuse, not ideal behavior.

Secure fintech AI is built through ongoing red teaming, not one-time validation.

1. Introduction

Fintech firms have been using artificial intelligence to build upon existing financial workflow. The use of AI is expanding in areas like credit decision-making, fraud detection, automated compliance and customer service. Due to the size and speed of AI-based systems and their direct impact on financial outcomes, fintech organizations must consider the potential for small errors in AI-based systems to result in loss of money, fines/penalties due to violation of regulations and damage to customer trust.

In this environment, using traditional methods of testing and validating AI systems alone may not be enough. Fintech organizations need AI red teaming, which is an adversarial discipline to test how AI based systems will fail in a real world environment.

Traditional methods of testing and validating AI systems typically assume benign input and typical use cases. AI red teaming in fintech assumes that AI based systems will be misused, manipulated and/or abused in ways that developers of those systems could not possibly anticipate. Users who are attempting to cause harm to an AI system, or users who are simply trying to get something done but are doing it in an unintended way, can cause AI systems to behave in an unexpected manner. These types of failures do not usually occur because the AI system crashed; rather these failures occur when the AI system makes incorrect decisions (e.g., approves incorrect transactions, misses a fraud signal, etc.).

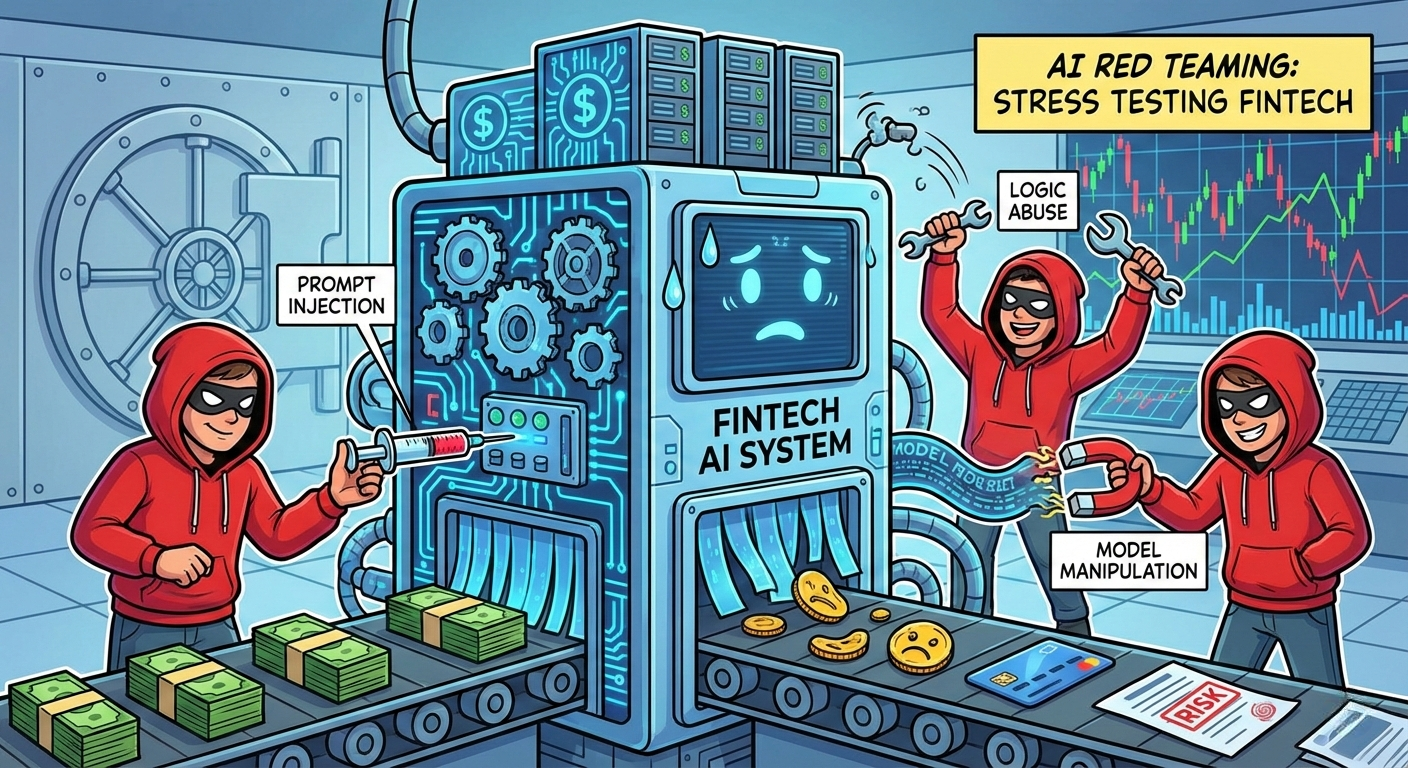

As the use of large language models and complex machine learning pipelines become more common in fintech platforms, the number of potential entry points for attackers increases. Risks associated with prompt injection, logic abuse, data leakage and model manipulation attacks can bypass traditional controls.

These issues represent a new category of financial AI vulnerabilities that are not detectable by standard Quality Assurance (QA) processes or Infrastructure Security Tools. Therefore, AI security testing in fintech must transition from performance tests and accuracy measurements to adversarial, behavior-based evaluations.

This blog examines where AI red teaming in fintech delivers the most value. It explains what AI red teaming means in practice, why fintech companies need it, which AI systems should be prioritized first, and how organizations can test AI models effectively before failures occur. The goal is to provide a clear, practical foundation for securing high-impact fintech AI systems, before silent failures turn into financial or regulatory crises.

2. What is AI red teaming in fintech?

The term AI red teaming in fintech refers to the process of subjectively stressing test AI systems with the use of adversarial thinking to understand how the systems may be able to be misused, manipulated or pushed into risky/unsafe behavior in real-world financial environments. As opposed to confirming that an AI model will work as expected under assumed conditions, AI red teaming identifies how and at what point the AI system fails when the assumptions regarding users, data and/or intent are no longer valid.

Fintech traditionally employs testing methods that usually assume that input is legitimate, data is clean, and users act as expected. The AI red teaming methodology directly calls into question all three of these assumptions. AI Red Teams treat AI systems as if they were decision-making assets that could be influenced, coerced, or otherwise taken advantage of. Given that AI failures are less likely to manifest as apparent system crashes in fintech, more likely examples include incorrect risk assessment, missed fraud, inequitable customer treatment, or non-compliance with rules that occur over time and silently accumulate.

From a practical perspective, AI red teaming in fintech involves significantly more than just testing individual machine learning models. Rather, it includes large language models, decision engines, data pipelines, prompts, APIs, and integrations downstream from the original AI that ultimately produce a financial outcome. Red teams evaluate how each component behaves when presented with adversarial inputs including misleading transaction patterns, misleading prompts, biased data, and/or edge cases that target logic gaps between different components. Many of these are part of the larger class of financial AI vulnerabilities, and many are invisible to traditional testing methodologies.

One of the primary goals of AI red teaming is to identify threats such as prompt injection, logic abuse, and model manipulation attacks that allow for the intentional distortion of decisions made by AI-based decision systems, without generating alerts. Logic abuse and model manipulation attacks are both examples of "misuse" based attacks against AI systems, and these types of attacks are typically outside the scope of the typical "accuracy," "stability," and "performance" focused controls employed in AI security testing in fintech.

Instead, AI red teaming requires a slightly different (and perhaps more uncomfortable) question to be asked: How would someone attempt to force an AI system to make a bad financial decision?

Ultimately, AI red teaming in fintech is not about verifying that an AI system functions; it is about verifying that an AI system will function properly even in the event that the system is being misused, there is uncertainty involved, and the system is under adversarial attack. By revealing these potential failure points early in the lifecycle of the development of an AI system, fintech companies will have the opportunity to build safeguards, strengthen their governance, and mitigate the possibility of "silent" AI failures leading to significant financial or regulatory consequences.

3. Why Fintech Companies Need AI Red Teaming

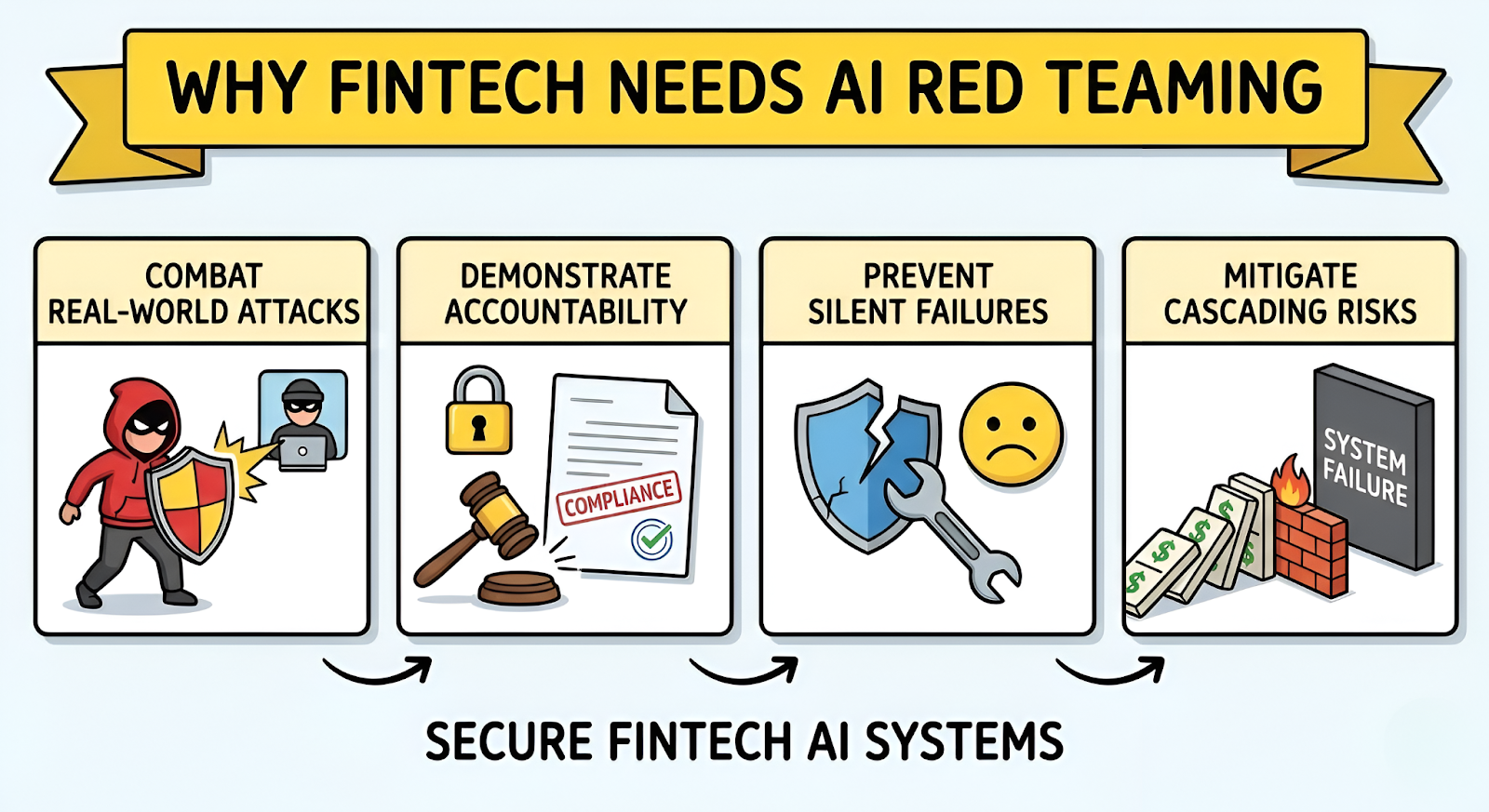

The digital environment in which fintech operates is among the most challenging and hostile there is today. High-risk processes, many involving financial transactions, such as payment authorizations, lending approvals, fraud detection, user authentication, and regulatory compliance, depend upon AI-based systems for their execution. Therefore, when those AI-based systems fail, the consequences are immediately tangible (i.e., financial loss) and quantifiable (e.g., regulatory scrutiny, customer harm). Consequently, AI red teaming is no longer an option for fintech organizations that heavily rely on AI to execute high volume business processes.

Firstly, because fintech companies operate in an inherently competitive and hostile environment, it is natural to assume that testing and evaluation will be conducted within a collaborative paradigm. However, since fintech applications are subject to constant probing and exploitation by fraudsters, malicious insiders, botnets, and other opportunistic users attempting to abuse the intended behaviors of the system, AI models trained on historical data or pristine data sets are poorly equipped to withstand intentional misuse. AI red teaming identifies this gap by intentionally disrupting assumed paradigms regarding generation of data; user behavior; and expected responses from AI systems.

Secondly, fintech companies are increasingly required to demonstrate control, transparency and accountability with respect to the use of AI in decision-making related to their respective businesses. When AI-based systems provide erroneous results (e.g., approve a fraudulent transaction or deny a legitimate application), the responsibility for providing a rational explanation for those results fall to the organization. As such, without direct evidence that a company has proactively engaged in AI security testing in fintech, including through adversarial testing of their AI systems, regulators may determine that they did not properly manage risks associated with AI-related decision-making. AI red teaming in fintech is a method of demonstrating evidence that AI systems have been evaluated against realistic misuse cases, rather than simply idealized cases.

Thirdly, fintech companies have a unique risk profile with regards to their reputations. AI malfunctions in consumer facing applications often remain undetected until identifiable trends emerge at scale. For example, a manipulated fraud model, a biased credit engine or a compromised chat-bot may malfunction for weeks prior to being identified. The causes of these silent failures include model manipulation attacks and/or unmitigated financial AI vulnerabilities that standard monitoring tools do not detect. Through red-teaming methodologies, fintech companies are able to discover these failure modes earlier, thereby minimizing damage to their trustworthiness and reducing remediation costs.

Lastly, AI-based systems used in fintech are highly interdependent. Outputs from one model are often fed into multiple downstream models resulting in magnified effects of small errors. Due to this interdependence, fintech companies must continually perform AI red teaming in order to identify potential weaknesses in AI systems and to contain the blast radius of AI failures in order to design necessary safeguards to prevent localized AI failures from escalating into larger-scale events.

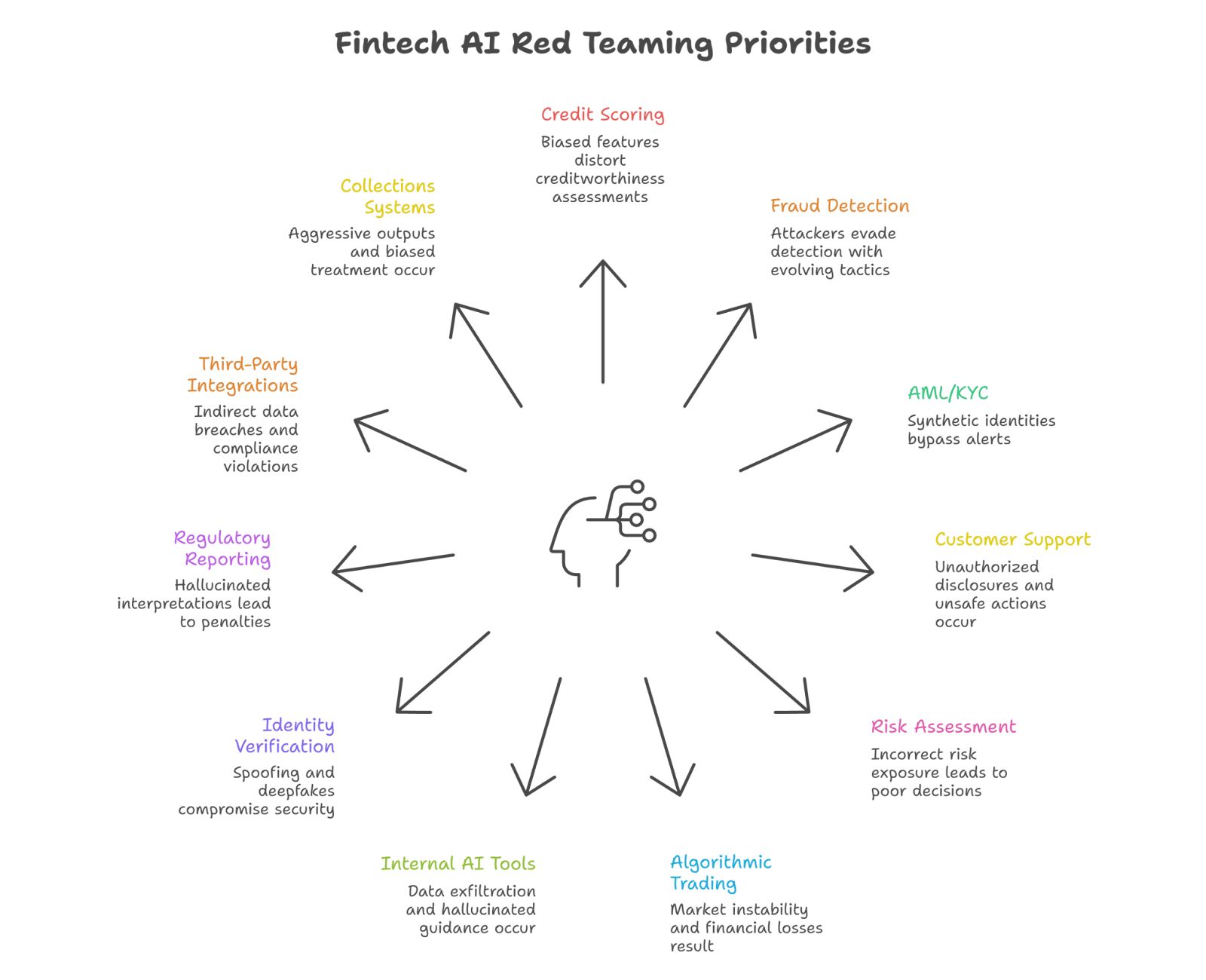

4. Fintech AI Systems That Must Be Red Teamed First

Not all AI systems in fintech carry the same level of risk. AI red teaming in fintech should be prioritized for systems that directly influence money movement, regulatory outcomes, and customer trust. These are environments where silent failure can scale rapidly and where adversarial misuse is both realistic and financially motivated. Effective AI red teaming focuses on understanding how AI behavior can be exploited under pressure, not just whether models meet baseline performance metrics.

Below are the fintech AI systems that should be subjected to continuous adversarial evaluation. For each use case, the emphasis is on how systems fail, how attackers exploit financial AI vulnerabilities, and why traditional AI security testing in fintech alone is insufficient.

4.1 AI-Driven Credit Scoring & Loan Approval Systems

Fintech lenders rely heavily on AI to automate credit decisions and risk-based pricing. These models are attractive targets for adversaries seeking to game approval logic or manipulate outcomes. AI red teaming examines how biased features, proxy variables, or crafted inputs can distort creditworthiness assessments.

Failure impact

⟶ Fair lending violations

⟶ Regulatory penalties

⟶ Elevated default risk and reputational damage

4.2 Fraud Detection & Transaction Monitoring Platforms

Fraud systems operate in an inherently adversarial domain. Attackers continuously evolve their behavior to evade detection, making this a core priority for AI red teaming in fintech. Red teaming simulates transaction patterns designed to bypass thresholds, exploit timing gaps, or overwhelm detection logic.

Failure impact

⟶ Undetected fraudulent transactions

⟶ Increased false positives and customer friction

⟶ Direct financial loss

4.3 AML & KYC Automation Systems

AI-powered AML and KYC platforms assess identity, behavior, and risk signals at scale. These systems often fail silently until regulators intervene. AI red teaming tests synthetic identity attacks, evasion strategies, and data manipulation that exploit financial AI vulnerabilities without triggering alerts.

Failure impact

⟶ Regulatory non-compliance

⟶ Criminal misuse of fintech platforms

⟶ Significant fines and enforcement actions

4.4 AI-Powered Customer Support & Virtual Assistants

LLM-driven chatbots are widely used for account queries, payments support, and onboarding. They dramatically expand the attack surface. AI red teaming in fintech evaluates prompt injection, jailbreaks, and conversational manipulation that can lead to unauthorized disclosures or unsafe actions.

Failure impact

⟶ Sensitive data leakage

⟶ Account compromise

⟶ Loss of customer trust

4.5 Risk Assessment & Financial Forecasting Models

Risk models guide pricing, capital allocation, and strategic planning. Their failures are often systemic rather than transactional. AI red teaming challenges assumptions, simulates extreme market conditions, and evaluates model behavior beyond historical data distributions.

Failure impact

⟶ Incorrect risk exposure assessment

⟶ Poor strategic decisions

⟶ Regulatory audit failures

4.6 Algorithmic Trading, Robo-Advisory & Investment AI

Real-time trading and advisory systems can amplify small errors at scale. AI red teaming in fintech tests susceptibility to adversarial market signals, feedback loops, and model manipulation attacks introduced via poisoned data feeds.

Failure impact

⟶ Immediate financial losses

⟶ Market instability

⟶ Legal and compliance exposure

4.7 Internal AI Tools Used by Fintech Teams

Internal AI assistants support analysis, reporting, and decision-making and often have broad data access. AI red teaming identifies risks such as prompt-based data exfiltration, access control bypass, and hallucinated guidance.

Failure impact

⟶ Confidential data exposure

⟶ Poor internal decisions

⟶ Governance and audit failures

4.8 Identity Verification & Biometric Authentication Systems

Biometric AI is central to onboarding and authentication. These systems face increasing spoofing and deepfake threats. AI red teaming in fintech tests replay attacks, synthetic media, and demographic bias in accuracy.

Failure impact

⟶ Account takeovers

⟶ Legitimate user lockouts

⟶ Regulatory scrutiny

4.9 Regulatory Reporting & Compliance Automation

Some fintech firms use AI to assist with regulatory reporting and compliance interpretation. Errors here carry extreme risk. AI red teaming evaluates hallucinated interpretations, suppressed risk indicators, and incomplete disclosures.

Failure impact

⟶ Regulatory penalties

⟶ Legal action

⟶ Loss of institutional credibility

4.10 Third-Party & Vendor AI Integrations

Fintech platforms frequently integrate external AI services via APIs. These dependencies extend risk beyond organizational boundaries. AI red teaming in fintech assesses supply-chain exposure, cross-system prompt injection, and hidden data usage.

Failure impact

⟶ Indirect data breaches

⟶ Compliance violations

⟶ Vendor-driven incidents

4.11 AI Systems for Collections, Payments & Debt Recovery

AI is used to prioritize collections, automate messaging, and manage payment workflows. Failures here often trigger legal and ethical issues. AI red teaming explores aggressive outputs, biased treatment, and non-compliant communication driven by financial AI vulnerabilities.

Failure impact

⟶ Legal exposure

⟶ Brand damage

⟶ Increased regulatory scrutiny

5. How to Test AI Models in Fintech Effectively

The way you approach testing for AI systems in fintech is completely different compared to traditional software testing or statistical model validation. Testing for accuracy within expected boundaries is no longer enough; you need to test how your model acts when your assumptions don’t hold up, this is where AI red teaming becomes important for AI security testing in fintech.

You start effectively testing with an adversarial point-of-view. Your fintech AI systems should be tested using conditions that simulate how they would act if users were attempting to mis-use them (i.e., not ideal input). Malformed data, transaction patterns intended to cause adverse reactions, misleading prompts, biased input, and edge cases that try to take advantage of gaps in logic all have to be considered in AI red teaming in fintech. Instead of testing how well your AI system operates normally ("validating") your focus should be on how your AI system fails when acted upon adversely ("discovering").

A typical AI testing strategy in fintech will assess the AI system along at least three criteria:

First is Robustness, Does the model behave in a safe manner when the input is altered, missing, or corrupted?

Second is Fairness/Anti-Abuse Resistance, Can protected attributes, proxy variables, or repeated interactions be used to unfairly influence the outcome?

Third is Failure Behavior, What does the AI system do when it is unsure, incorrect or acting beyond its training distribution? Many of the above failures fall into categories of financial AI vulnerabilities which only occur when there is sufficient stress or abuse applied to the model.

Also of importance here is the concept of model manipulation attacks. In this type of attack, attackers will often use subtle manipulations of model inputs over time, use feedback loops or poison upstream data sources to influence model output. Because most of these types of attacks do not appear like traditional attacks, they usually do not trip off conventional AI security testing in fintech alert mechanisms. AI red teaming specifically tests for this type of potential coercion of AI decision-making processes without the knowledge of an attacker's existence.

Another key tenet is Continuity. Fintech AI systems evolve quickly, Models are re-trained; Prompts are modified; Integrations Change; User Behavior Changes. As such, a single test provides only temporary comfort. Continuous AI red teaming in fintech allows organizations to evaluate their level of risk as their systems evolve and provide continuous assurance to Regulators, Partners and Internal Stakeholders of their continued control effectiveness.

6. AI Red Teaming vs Traditional Model Validation in Fintech

Validation of traditional AI systems has long been a core component of managing risk in fintech. The validation process examines if a model is statistically valid, functionally appropriate, properly documented and consistent with either company policy or government regulation. However, as AI systems continue to grow in autonomy and integration, validation alone cannot effectively manage real-world risk. This is when AI red teaming systems in fintech differ from, and complement, existing methods.

The primary differences between validation and AI red teaming relate to what the two assume about their inputs. The validation process generally assumes that input data are reliable and that there are stable distributional characteristics for those data; also, that user intent matches the modeled behavior. On the other hand, AI red teaming in fintech assumes that AI systems will be subjected to stressors, misused and intentionally abused. That is a crucial difference since many of the most significant financial losses incurred due to fintech system failures have occurred in systems that had undergone standard validation but had not been tested against the types of adverse (red team) conditions that would expose financial AI vulnerabilities such as abuse, coercion or exploitation of model logic.

Another area of differentiation relates to the nature of risk that each method is designed to identify. Traditional validation is primarily concerned with evaluating the performance, explainability and consistency of a model. On the other hand, AI red teaming evaluates behavioral risk, i.e., how a model behaves when subjected to adversarial inputs and how model output changes in response to increasing stress levels, and whether AI driven decisions can be manipulated without being detected. Behavioral risk assessment is particularly relevant to identifying model manipulation attacks, where adversaries modify model behavior through repeated exposure, poisoned data, or benign appearing feedback loops that over time produce desired effects on a model's decision-making process.

This is particularly important in fintech because AI systems are frequently running continuously, adapting over time, and therefore subject to evolving risk exposures. An AI model that successfully undergoes validation upon initial deployment may subsequently develop vulnerabilities as data distribution shifts, integrations change, or adversaries discover how to manipulate its decision boundaries. Thus, AI red teaming is designed to test AI systems continuously under a variety of realistic misuse scenarios to identify these evolving financial AI vulnerabilities. Therefore, AI red teaming should be viewed as a complementary method to robust AI security testing in fintech, and not as a substitute.

A final way in which validation and AI red teaming differ is frequency. The frequency at which validation occurs is typically periodic (e.g., quarterly), and usually documentation-driven. On the other hand, AI red teaming in fintech is continuous, and behavior-driven. Because fintech companies continuously deploy new features, retrain models, or integrate third party AI services, the potential attack surfaces evolve continuously. Therefore, AI red teaming provides a mechanism to ensure that previously discovered financial AI vulnerabilities are not simply reintroduced into the system and that new model manipulation attacks are quickly identified.

The strongest fintech AI governance programs will include both validation and red teaming as part of their comprehensive AI security testing in fintech strategies. Validation will provide a level of baseline confidence in an AI system, whereas AI red teaming will assess whether that confidence will hold up to adversity. Together, they represent a complete and comprehensive approach to managing AI security risk in the fintech sector.

7. Conclusion

As AI becomes increasingly integral to the operations of all fintech firms – particularly in terms of the way decisions are made, risk is assessed, and customer interactions occur, it is becoming more and more likely that an incident that causes a great deal of harm will arise not from something that is obvious (i.e., an error in a piece of code or some form of system outage), but rather from something much less apparent (e.g., a small flaw in a model's approval process, a signal related to potential fraud that was somehow overlooked, or an outcome that was influenced by bias). For this reason, AI red teaming has evolved into an essential tool for fintech firms that have reached scale.

The use of "traditional validation," or baseline AI security testing in fintech does nothing more than create a false sense of security. While an AI model may be accurate, well-documented, and compliant based upon the information provided, it may be weak in its application. In many cases, adversaries do not operate based upon assumptions. Rather, they tend to test the boundaries of a model, exploit any feedback loop that exists within the model, and attempt to provoke model manipulation attacks that disrupt the model's output without triggering an alarm. Therefore, without AI red teaming in fintech occurring continually, the risks associated with AI remain unexposed until financial damage occurs or there is a need for regulatory intervention.

It is not the confidence that an organization has in their AI models that differentiates them from other organizations; it is the presence of evidence indicating that an adversary has evaluated the AI system(s) in question. By proactively engaging in searches for financial AI vulnerabilities and gaining an understanding of how an AI system will respond when it is subjected to stress, misuse, or attack, an organization gains a more realistic view of how their AI system(s) function and are able to establish strong guardrails around their systems, limit the extent of the potential damage caused by a breach, and provide regulators with a level of comfort that the organization is proactively managing the risk of using AI, rather than reacting after the fact.

WizSumo is uniquely positioned to fill this gap. WizSumo provides AI red teaming services that focus on how AI systems are misused in the real world, how they exhibit adversarial behavior, and how they fail due to systemic issues. WizSumo works directly with fintech organizations to engage in hands-on AI red teaming in fintech across high-risk systems including, but not limited to, fraud detection models, lending models, anti-money laundering (AML) workflow, large language models (LLM)-powered assistants, and internal AI-based tools. Unlike many AI security testing in fintech engagements that merely scratch the surface of an organization's AI systems, WizSumo engages in activities that simulate realistic attacks against an organization's AI systems, identify previously unknown financial AI vulnerabilities, and determine how model manipulation attacks can impact actual financial results.

Ultimately, secure Fintech AI is not achieved through simply assuming that AI models are safe. Secure Fintech AI is achieved through continuously testing whether AI models continue to remain safe under adversarial pressures. Those organizations that incorporate AI red teaming into their AI development lifecycle will be significantly better positioned to responsibly scale innovation, protect their relationships with regulatory agencies, and avoid silent AI failures before those failures result in significant financial consequences.

“Fintech AI doesn’t fail all at once, it fails quietly, transaction by transaction.”

.svg)

.png)

.svg)

.png)

.png)