AI red teaming in healthcare : Safeguarding Patient-Critical AI Systems

.png)

Key Takeaways

AI red teaming focuses on how healthcare AI systems fail, not just how they perform.

Traditional AI security testing in healthcare cannot detect adversarial misuse and silent clinical risks.

High-impact healthcare AI systems must be tested under real-world misuse scenarios, not ideal conditions.

Healthcare AI security depends on continuous adversarial testing, not one-time validation.

Secure and trustworthy healthcare AI is achieved through evidence of resilience, not assumptions of safety.

1. Introduction

The use of Artificial Intelligence in clinical decision support systems, diagnostic tools, patient engagement platforms, and operational workflow processes is becoming an ever-increasing part of health care organizations’ strategies to improve the delivery of high-quality patient care, ensure patient safety, and maintain regulatory compliance. The increased autonomy of AI systems and their increasing interconnectivity means that even a single small failure within one system can potentially propagate throughout other connected systems at a rapid pace, resulting in potential harm to patients before it is identified.

This is why AI red teaming is essential.

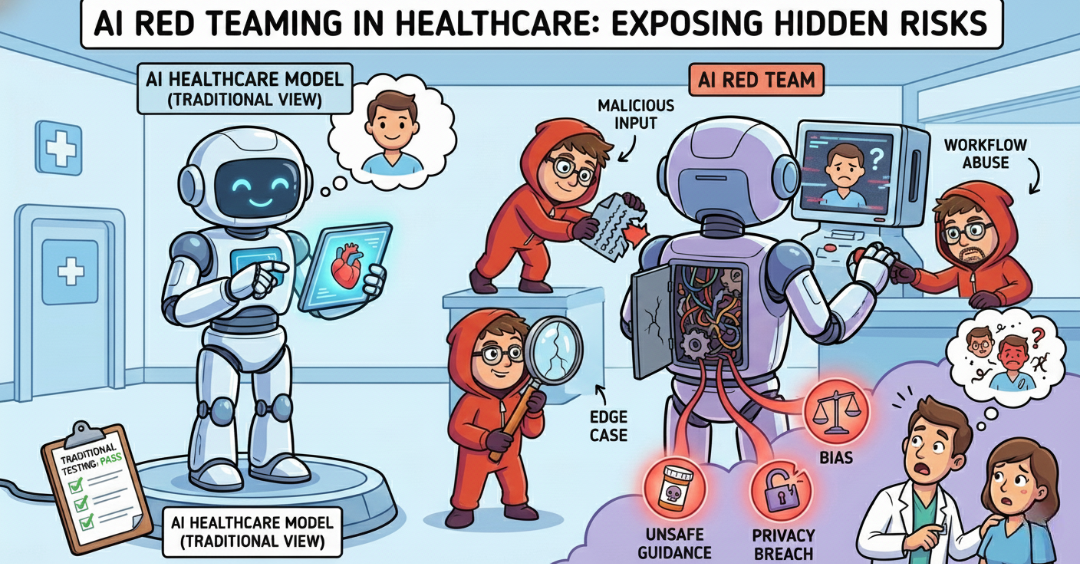

Traditional validation methods focus on demonstrating what is expected of an AI system; conversely, AI red teaming in healthcare was developed to demonstrate how AI systems will fail when subjected to improper use, under duress, and/or through adversarial testing. The clinical environment does not provide a controlled or stable environment for models to learn and make decisions. Models are exposed to incomplete data, edge cases, clinicians who may be stressed or fatigued, and real world ambiguity. Relying on performance metrics and audits alone will create blind spots in healthcare AI security.

Although many health care organizations continue to utilize traditional AI security testing in healthcare, assuming that as long as an organization’s AI system(s) is/are compliant and provides accurate results, the system(s) are also safe; many of the silent failure modes, biased output and unsafe recommendation typically arise from untested healthcare AI vulnerabilities. AI red teaming in healthcare changes the focus from assurance that an AI system functions properly to evidence that an AI system has demonstrated resilience. Through proactive testing of assumptions, AI red teaming in healthcare enables organizations to identify potential risks prior to these risk(s) translating into harm to patients, regulatory actions, or loss of public trust.

2. What is AI red teaming in healthcare?

Healthcare is one of the most high-risk domains for AI, as it has the potential to be used to do both good (e.g., help doctors with diagnosis) and harm (e.g., exacerbate health disparities). AI red teaming in healthcare is the use of adversarial thinking to test and understand how AI can be misused, abused, or cause unsafe behaviors.

AI Red Teamers are not trying to figure out if an input is clean or if the user will follow the rules; they assume that there will be edge cases, malicious inputs, misuse of workflow, and ambiguities in the world.

Traditional testing of AI is based on testing the performance of the AI under assumed conditions (i.e., that the inputs will be clean, the users will behave as expected). In contrast, AI red teaming is focused on what happens when those assumptions don't hold.

AI red teaming is not about demonstrating that an AI system works; rather it's about demonstrating how it fails. From a healthcare AI security viewpoint, this is important, as many AI systems fail silently. For example, a model might appear to be very accurate overall, but produce biased recommendations for certain populations, or provide unsafe clinical guidance in rare scenarios. Additionally, a model might produce misleading results that clinicians will blindly trust.

Because these types of failures rarely surfaced through traditional AI security testing, AI red teaming was created to simulate adversarial conditions like manipulating inputs, providing misleading prompts, having inconsistent data, and abusing workflows. Ultimately, AI red teaming allows organizations to find the "hidden" healthcare AI vulnerabilities that could result in misdiagnoses, dangerous clinical recommendations, breaches of patient privacy, or regulatory issues. Because AI outputs have the ability to affect life-critical decisions, identifying these failure modes is a priority.

3. Why Healthcare Organizations Need AI red teaming

The use of AI in the health care industry occurs in one of the most high-risk and heavily regulated industries. As such, AI systems are increasingly used in diagnostic processes, triage processes, treatment recommendations, patient communication, and compliance workflows. While failures in these systems can cause operational disruptions, they can also affect patient safety and quality of care, as well as the reputation of institutions.

As a result, AI red teaming is not merely a desirable practice - it is necessary for the health care industry's use of AI.

A major challenge associated with the failures of health care AI systems is that they usually do not present themselves as obvious system outages (i.e., "the lights went out"). Rather, they present themselves as subtle errors of decision-making (e.g., a missed diagnosis, a potentially dangerous recommendation, an unfair prioritization, or incomplete clinical documentation). Because these "silent failures" are often missed by conventional controls, they create systemic risks for health care AI security programs. AI red teaming in healthcare was created to find these types of failure modes through stressing AI systems under conditions that are similar to those that could represent the exploitation of the AI system through misuse or lack of understanding.

Another key factor is the increasing expectations of regulatory bodies and courts regarding the demonstration of the safety, fairness, transparency, and control of AI systems in the health care industry. When adverse events occur involving the use of an AI system in a health care setting, regulatory bodies and auditors will likely want to know if the organization had identified potential risks and taken steps to mitigate them before allowing the use of the AI system. Traditional methods of testing the "security" of an AI system in a health care environment (e.g., AI security testing) rarely take into consideration the potential for "adversarial behavior" (i.e., how someone might attempt to manipulate the output of the AI system) or how the AI system may be unintentionally misused. Therefore, AI red teaming provides a clear and direct way for organizations to demonstrate their due diligence regarding the evaluation of AI systems in a health care setting.

Finally, the foundation of trust is essential to the health care industry. Trust is implied when patients, clinicians, and regulatory bodies believe in the accuracy and reliability of decisions made with the assistance of AI systems. The loss of this trust, once eroded, is likely to be rapid and possibly irreparable. Therefore, AI red teaming allows health care organizations to evaluate and stress test the assumptions underlying their use of AI systems, identify potential risks, and provide the evidence-based support required to confidently deploy AI systems in a health care setting.

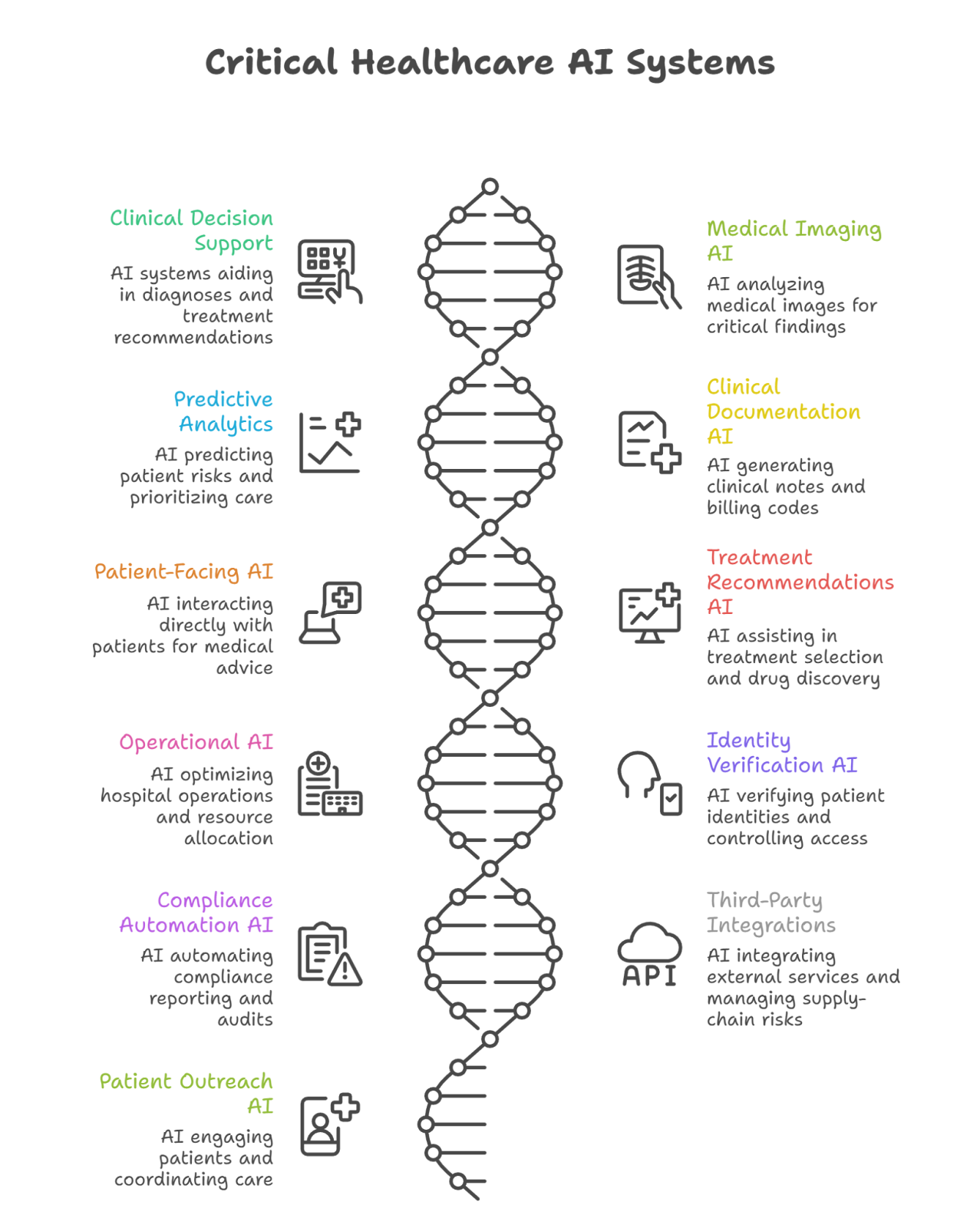

4. Healthcare AI Systems That Must Be Red Teamed First

Not all AI systems in healthcare carry the same level of risk. AI red teaming in healthcare should be prioritized for systems that directly influence clinical decisions, patient safety, regulatory outcomes, or institutional trust. The objective is not to test every model equally, but to focus AI red teaming efforts on systems where silent failure can scale quickly and where adversarial misuse is realistic.

Below are the most critical healthcare AI systems that must be subjected to continuous adversarial evaluation. For each use case, the emphasis is on how the system can fail and what that failure means for patients and providers. This approach strengthens healthcare AI security by uncovering risks that traditional AI security testing in healthcare rarely exposes.

4.1 Clinical Decision Support & Diagnostic AI

Clinical decision support systems assist clinicians with diagnoses, risk scoring, and treatment recommendations. Because clinicians often trust these outputs, even small errors can cascade into serious harm.

AI red teaming focuses on biased feature exploitation, adversarial inputs, and edge-case scenarios that push diagnostic models toward unsafe conclusions.

Failure impact:

⟶ Misdiagnosis or delayed diagnosis

⟶ Incorrect treatment pathways

⟶ Patient harm and malpractice exposure

4.2 Medical Imaging & Radiology AI

AI models increasingly analyze X-rays, MRIs, CT scans, and pathology images. These systems are sensitive to noise, artifacts, and adversarial perturbations that may be invisible to human reviewers.

Through AI red teaming in healthcare, teams test robustness against manipulated images, rare presentations, and demographic bias.

Failure impact:

⟶ Missed critical findings

⟶ False positives leading to unnecessary interventions

⟶ Erosion of clinician trust

4.3 Predictive Analytics for Patient Risk & Triage

Predictive models are used to prioritize care, allocate ICU beds, and flag deterioration risk. These systems influence time-sensitive clinical decisions.

AI red teaming evaluates how small data shifts, missing signals, or biased patterns affect predictions and downstream actions.

Failure impact:

⟶ Incorrect patient prioritization

⟶ Resource misallocation

⟶ Adverse clinical outcomes

4.4 AI-Powered Clinical Documentation & Medical Coding

LLMs are increasingly used to generate clinical notes, summaries, and billing codes. These tools often operate with minimal oversight.

Adversarial testing examines hallucinations, prompt manipulation, and propagation of incorrect clinical information, key healthcare AI vulnerabilities.

Failure impact:

⟶ Inaccurate medical records

⟶ Compliance and billing violations

⟶ Audit and legal risk

4.5 Patient-Facing AI Assistants & Symptom Checkers

Chatbots and virtual health assistants interact directly with patients, often handling sensitive medical questions.

AI red teaming in healthcare tests prompt injection, unsafe advice generation, and data leakage that traditional AI security testing in healthcare may miss.

Failure impact:

⟶ Unsafe medical guidance

⟶ Privacy breaches

⟶ Loss of patient trust

4.6 AI for Treatment Recommendations & Drug Discovery

AI systems increasingly assist with treatment selection and research insights. Errors here are subtle but high impact.

AI red teaming probes logic abuse, biased recommendations, and unsupported extrapolations.

Failure impact:

⟶ Unsafe or non-compliant treatment suggestions

⟶ Ethical and regulatory exposure

4.7 Operational AI in Hospitals

Hospitals use AI for scheduling, staffing, and resource optimization. While not always clinical, failures can indirectly affect care delivery.

Adversarial testing explores cascading failures and workflow manipulation.

Failure impact:

⟶ Care delays

⟶ System-wide inefficiencies

⟶ Increased operational risk

4.8 Identity Verification & Biometric Systems

Biometric AI supports patient onboarding and access control. These systems face growing spoofing and deepfake threats.

AI red teaming tests replay attacks, synthetic identities, and demographic accuracy gaps.

Failure impact:

⟶ Unauthorized access

⟶ Legitimate patient lockouts

⟶ Regulatory concerns

4.9 Compliance, Reporting & Clinical Audit Automation

Some healthcare organizations rely on AI to assist with compliance reporting and audits. These systems carry significant regulatory risk.

AI red teaming evaluates hallucinated interpretations and suppressed risk signals.

Failure impact:

⟶ Regulatory penalties

⟶ Legal exposure

⟶ Loss of institutional credibility

4.10 Third-Party & Vendor Healthcare AI Integrations

External AI services expand the attack surface beyond direct organizational control.

AI red teaming in healthcare assesses supply-chain risk, hidden data usage, and cross-system prompt injection.

Failure impact:

⟶ Indirect data breaches

⟶ Compliance violations

⟶ Vendor-driven incidents

4.11 AI Systems for Patient Outreach & Care Coordination

AI-driven messaging and prioritization tools influence patient engagement and follow-up care.

Adversarial testing uncovers aggressive messaging, bias, and unsafe automation.

Failure impact:

⟶ Patient dissatisfaction

⟶ Ethical and legal issues

⟶ Increased regulatory scrutiny

5. How to Test AI Models in Healthcare Effectively

The way we test AI systems in health care needs an entirely new paradigm for thinking about health care AI testing versus testing for other types of software. Testing is no longer simply checking to see if the model works within its expected parameters, but rather testing to see what happens when the expectations of that model are violated.

This is where AI red teaming in healthcare comes in as a key component of successful testing strategies.

To be successful in testing, one should assume that a health care AI system will experience at least some level of "adversary input," either through missing data, misuse of the system, etc., and test accordingly. In addition to ensuring that the model tested is accurate and compliant, AI red teaming in healthcare tests the model, workflow and integration, to find those paths to failure, which were previously unknown.

By using this methodology, the organization is strengthening its AI security testing in healthcare by identifying potential threats prior to them affecting the quality of care provided to patients.

Practically speaking, a testing program can focus on three primary dimensions of testing:

Dimension one is "robustness testing." Robustness testing involves challenging the model with manipulative, noisy, or edge case input, and observing how the model reacts when exposed to such input.

Dimension two is "fairness and bias testing." Fairness and bias testing is focused on determining if there is a relationship between the protected attribute or the proxy for the protected attribute (e.g. age, sex) and the outcome produced by the model.

Dimension three is "failure behavior testing." Failure behavior testing is evaluating how a health care AI system responds when the system is unsure of itself, incorrect, or operating beyond the assumptions made during training.

These dimensions are often only partially evaluated through the typical AI security testing in healthcare.

In addition to the testing process described above, ongoing testing is also necessary. The data used to train health care AI systems changes, as do the models, the prompts used to interact with the model, and the workflows associated with each model. A single point-in-time test provides a false sense of security. Therefore, ongoing AI red teaming in healthcare allows the organization to continually assess the risk associated with the evolving systems and to identify emerging healthcare AI vulnerabilities early. Additionally, by incorporating adversarial testing into the AI development lifecycle, the organization can transition from a reactive model of responding to incidents, to a proactive model of identifying potential risks.

6. AI red teaming vs Traditional Model Validation in Healthcare

Validation of AI models has historically served as one of the primary components of AI governance in healthcare. Validation ensures that an AI model has statistical integrity, operates within defined performance thresholds, and satisfies specified requirements. Although validation is a necessary function, it is currently insufficient as the sole means of ensuring the reliability of AI-based systems in healthcare. The majority of significant failures in AI security testing in healthcare have occurred in systems that were technically validated yet never subjected to an adversarial challenge.

Validation generally assumes that the input data is representative, the data pipeline behaves as expected, and users interact with AI systems in the expected manner. However, none of these assumptions commonly exist in the typical clinical environment. Healthcare AI systems are subject to incomplete medical record information, atypical user interactions (e.g., shortcuts), and other forms of human pressure/pressure that can affect how the system operates. This represents the key area for which AI red teaming can play a role. AI red teaming in healthcare assumes that all AI-based systems will experience stress from improper usage, manipulation, and intentional attempts to break decision logic, and then subjects those systems to these types of actions to test their ability to withstand those stresses.

A second limitation of validation is its inability to detect future healthcare AI vulnerabilities including logic abuse, biased decision amplification, and unsafe decision-making under conditions of uncertainty. These risks are typically outside the parameters of accuracy measurements and document reviews. Comprehensive healthcare AI security testing often primarily focuses on validating how secure access controls are and what level of security exists within the underlying infrastructure, rather than how AI-driven decisions can be pressured/coerced into producing unsafe outcomes. AI red teaming directly addresses this gap by using intentional attempts to break decision logic to find and expose silent modes of failure.

Finally, there are differences regarding frequency and timing between model validation and AI red teaming. Generally, validation occurs periodically and based upon documents. On the other hand, AI red teaming in healthcare occurs continuously and is based on observed behaviors. Because models are being continually trained/re-trained, prompts are evolving, and clinical workflows are changing; risk assessments are constantly shifting. Continuously evaluating an AI-based system through adversarial testing allows organizations to continually evaluate their healthcare AI security posture and continually identify new healthcare AI vulnerabilities as they develop.

More mature healthcare AI programs do not select between performing validation and AI red teaming, but instead perform both. Validation creates a basis of trust with respect to an AI-based system, whereas AI red teaming in healthcare evaluates whether the trust created by validation holds when subjected to actual operational pressures. Collectively, both approaches create a much more realistic representation of AI-related risk than each method individually.

7. Conclusion

AI is now deeply embedded in how healthcare organizations diagnose conditions, prioritize patients, document care, and make operational decisions. As these systems grow more autonomous, the cost of failure increases sharply. The most damaging incidents rarely come from obvious breakdowns. Instead, they emerge from overlooked healthcare AI vulnerabilities that surface only under misuse, pressure, or edge-case conditions.

This is why AI red teaming plays a critical role in modern healthcare AI security. Traditional controls and periodic AI security testing in healthcare focus on expected behavior, but healthcare environments are anything but predictable. Silent failures, biased recommendations, unsafe outputs, or misleading clinical guidance, often remain invisible until patient harm or regulatory scrutiny forces discovery. AI red teaming in healthcare helps organizations uncover these risks early, before they scale.

Equally important, adversarial testing strengthens governance and accountability. Regulators and auditors increasingly expect healthcare organizations to demonstrate that AI risks are actively identified and managed. Being able to show evidence of continuous AI red teaming, alongside conventional testing, signals a mature and responsible approach to AI deployment. It also enables organizations to respond confidently when questions arise about safety, fairness, or decision integrity.

This is where specialized expertise becomes essential. WizSumo works with healthcare organizations to conduct domain-aware AI red teaming in healthcare, focusing on real clinical workflows, patient-facing systems, and high-impact decision models. Rather than treating AI as generic software, WizSumo helps teams adversarially test how AI systems behave under stress, misuse, and uncertainty, revealing healthcare AI vulnerabilities that are often missed by standard AI security testing in healthcare programs.

Ultimately, safe healthcare AI is not achieved by assuming models are trustworthy. It is achieved by proving that they remain trustworthy under pressure. Organizations that embed AI red teaming into their AI lifecycle are far better positioned to protect patients, maintain compliance, and scale AI responsibly in an environment where failure is not an option.

“Healthcare AI doesn’t fail like software, it fails like a decision, often silently, and sometimes irreversibly.”

.svg)

.png)

.svg)

.png)

.png)