AI red teaming in HR & Recruitment : Preventing Bias and Decision Manipulation

.png)

Key Takeaways

AI red teaming focuses on how hiring AI fails, not how it performs in ideal conditions.

Resume screening and ranking systems are the highest-risk entry points for manipulation.

Traditional audits miss adversarial behavior that AI security testing in HR reveals.

Silent model drift creates legal and reputational risk in recruitment workflows.

Secure hiring AI requires continuous AI red teaming in HR, not one-time validation.

1. Introduction

AI has become deeply ingrained in all aspects of hiring, screening and workforce decision making. From filtering resumes and ranking candidates to conducting interviews and assessing employees for potential career advancement opportunities, HR teams are increasingly relying on AI-based systems to make many of their important people's decisions. While AI is a powerful tool for identifying patterns in data, it operates quietly behind the scenes and makes high impact decisions at scale about who will be hired/promoted/rejected.

Most failures in HR AI do not look like failures to the average person. They may appear as reasonable decisions, statistically valid outputs or optimized workflows. This is one reason silent errors in HR AI are more damaging than visible system errors. For example, bias, manipulation and unanticipated behavior could exist in hiring processes for months without being detected by any alert system; and expose the organization to serious legal, ethical and reputational risks. Most testing methods traditionally used to evaluate the effectiveness of hiring AI do not test how the system behaves when its assumptions are violated, or when intentional manipulation occurs in the input.

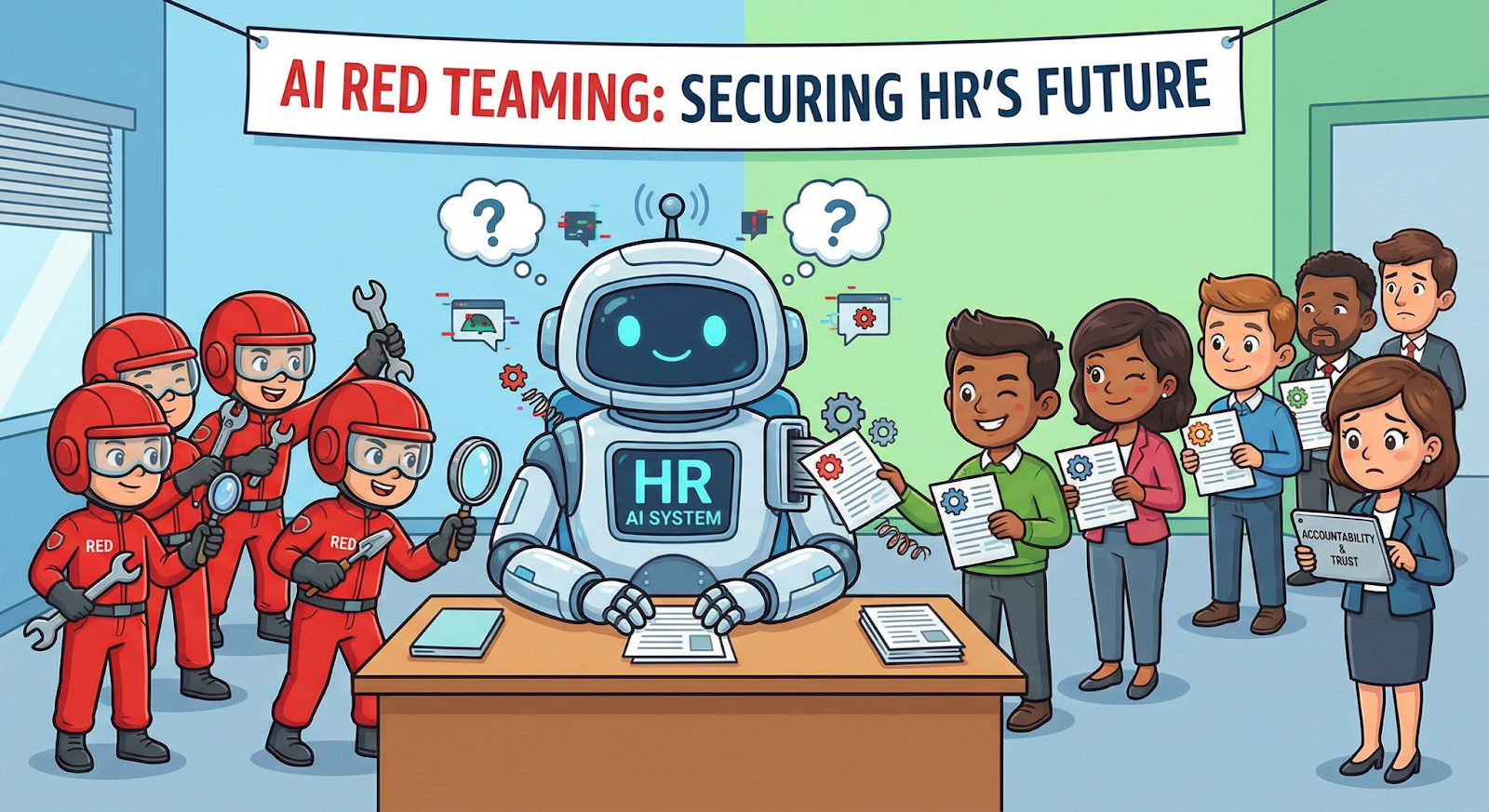

At this point, AI red teaming, specifically for people facing systems (such as hiring) becomes imperative for organizations. As opposed to validating expected performance of hiring AI, AI red teaming in HR would focus on how hiring AI can be intentionally abused, taken advantage of, or allowed to deviate from safe operation in real world environments. Organizations using adversarial thinking and/or AI security testing in HR to identify specific types of adversarial attacks against recruitment work flows, prior to them becoming compliance violations or eroding trust with the public, can proactively identify and mitigate significant risks in their recruitment work flows. In today's world where recruitment AI security can have a direct impact on fairness and accountability, organizations should no longer consider "proactive AI security testing in HR" as discretionary.

2. What is AI red teaming in HR?

The idea of AI red teaming in HR is about creating a controlled environment for intentionally putting stress on recruiting and workforce technology with the intention of taking advantage of them. Rather than focusing on whether the HR model is working at all during normal conditions ("will it work"), AI red teaming looks at how the same HR model works when inputs come in the form of either being adversarial, being biased, or being created strategically to obtain certain outcomes.

There are two main differences between traditional testing for HR AI and AI red teaming. The first difference is the type of testing; the second difference is what the testing is looking for. Traditional HR AI testing is focused around checking off boxes such as how accurate and efficient the model is and also if the model is compliant with regulations. The assumption behind these tests is that the candidate has provided truthful answers to questions, that the resume was submitted as truthfully as possible and that the candidate does not intend to do anything to cause the model to make any errors. Unfortunately, the environment of recruiting is very adversarial; the candidate adjusts their behavior to get better results, resumes are made to look like they fit the best way possible based upon the screening system used, and the internal user could be doing this unintentionally or deliberately. This is where AI red teaming in HR differs from standard validation because it is looking at the intent to abuse, misuse, and exploit the AI system rather than simply looking at how well the model is performing on the surface.

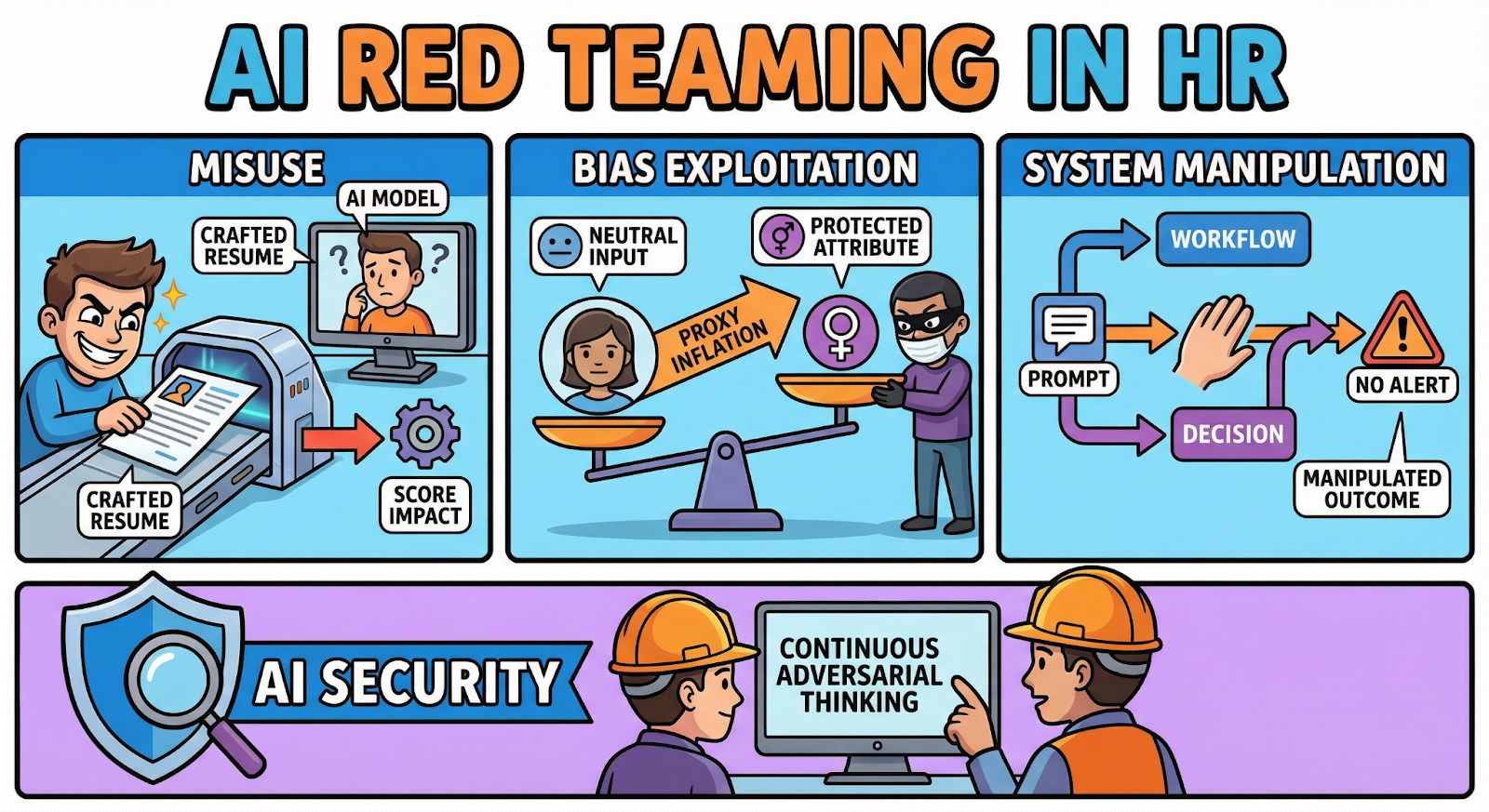

In essence, AI red teaming in HR is centered on three dimensions of failure. Misuse, How the hiring AI reacts to resumes, profiles, and interview responses that have been specifically crafted to impact the score. Bias Exploitation, How protected attributes or proxies can be inflated through inputs that seem neutral. System Manipulation, How workflows, prompts, and integrations can be manipulated to affect decisions without producing an alert. These types of exploits are typically characterized by model manipulation in HR AI, in which the output of the model changes incrementally while still appearing statistically valid.

As opposed to audits or fairness reviews, AI red teaming views HR AI systems as decision-making entities that can be affected by the actions of humans. Through the use of continuous adversarial thinking and AI security testing in HR, companies can see how their recruitment systems fail in reality, not just how they perform in theory. This methodology is a foundation for ensuring that companies maintain strong recruitment AI security as hiring decisions grow and the level of automation grows.

3. Why HR & Recruitment Systems Need AI red teaming

At the intersection of the use of artificial intelligence (AI) within Human Resources and Recruitment exist both the potential for technology to enhance and automate processes, along with the corresponding need for legal and ethical accountability of those same AI-based systems. As such, every decision made through use of AI during the hiring process has a direct impact on issues of fairness, compliance, and ultimately organizational trust.

That is one of the reasons why AI red teaming should be viewed as an integral part of all modern HR functions, rather than simply a necessary security function to protect against threats to other systems.

Hiring AI is subject to continued pressure by a variety of human factors, including candidates adapting their resumes to optimize results, optimizing language used in resumes, and experimenting with new resume formats in order to manipulate the AI into generating favorable rankings. As long as "AI red team in HR" is not utilized to monitor these activities, then the manipulation of resume screening and ranking systems continues unimpeded. Over time, manipulated input begins to appear as "normal," creating a false sense of confidence regarding the safety of hiring pipeline systems while simultaneously hiding the underlying risks associated with manipulated input which creates a statistical illusion of acceptability of hiring system outputs.

Governing mechanisms for AI have been developed in response to the traditional expectations of cooperation with respect to data input and the expectation of consistent behavior from data input providers. However, governing mechanisms that rely on bias auditing, compliance reviews, and testing for accuracy are designed to address cooperation between the provider of data and the system receiving the data. These types of governing mechanisms do not test how AI systems will react to adversarial use cases. The existence of this gap is the basis for the importance of AI security testing in HR. Through the intentional simulation of misuses of systems, AI red teaming create awareness of vulnerabilities created by malicious actors who intend to exploit vulnerabilities in the AI system. In addition to the ability of AI red teaming to identify vulnerabilities created by malicious actors, governing mechanisms that focus solely on compliance also fail to provide assurance that the AI system will withstand misuse. Strengthening recruitment AI security involves recognizing that hiring systems will be gamed, and therefore should be tested to determine if they can withstand gaming.

A third rationale for the necessity of AI red teaming in HR is the silent nature of failures experienced in hiring AI systems. Unlike failures experienced in some other AI applications, hiring AI rarely fails loudly. Rather, it drifts. As a result of model manipulation in HR AI, biased weightings of features used in model development, or as a result of proxy attribute development, hundreds of thousands of candidates may be affected before any alarms are triggered. Therefore, in environments such as these, AI red teaming is the only viable means of identifying how recruitment systems behave when assumptions related to their design are broken at scale.

Lastly, the accountability of HR teams does not cease to exist as a result of the increased reliance on automated hiring decisions. In fact, as a result of these automated decisions, HR teams remain accountable for the explanations provided for the hiring decisions, the outcomes of those decisions, and providing the organization with a defensible position with respect to compliance with applicable law. Therefore, unless AI security testing in HR is performed continuously, organizations take a blind faith approach to the operation of systems that are opaque to them. Trustworthy recruitment AI security cannot occur without performing adversarial testing that demonstrates not only whether hiring AI operates, but whether it can be trusted under duress. For these reasons, AI red teaming is becoming a foundation upon which responsible and scalable HR automation can be built.

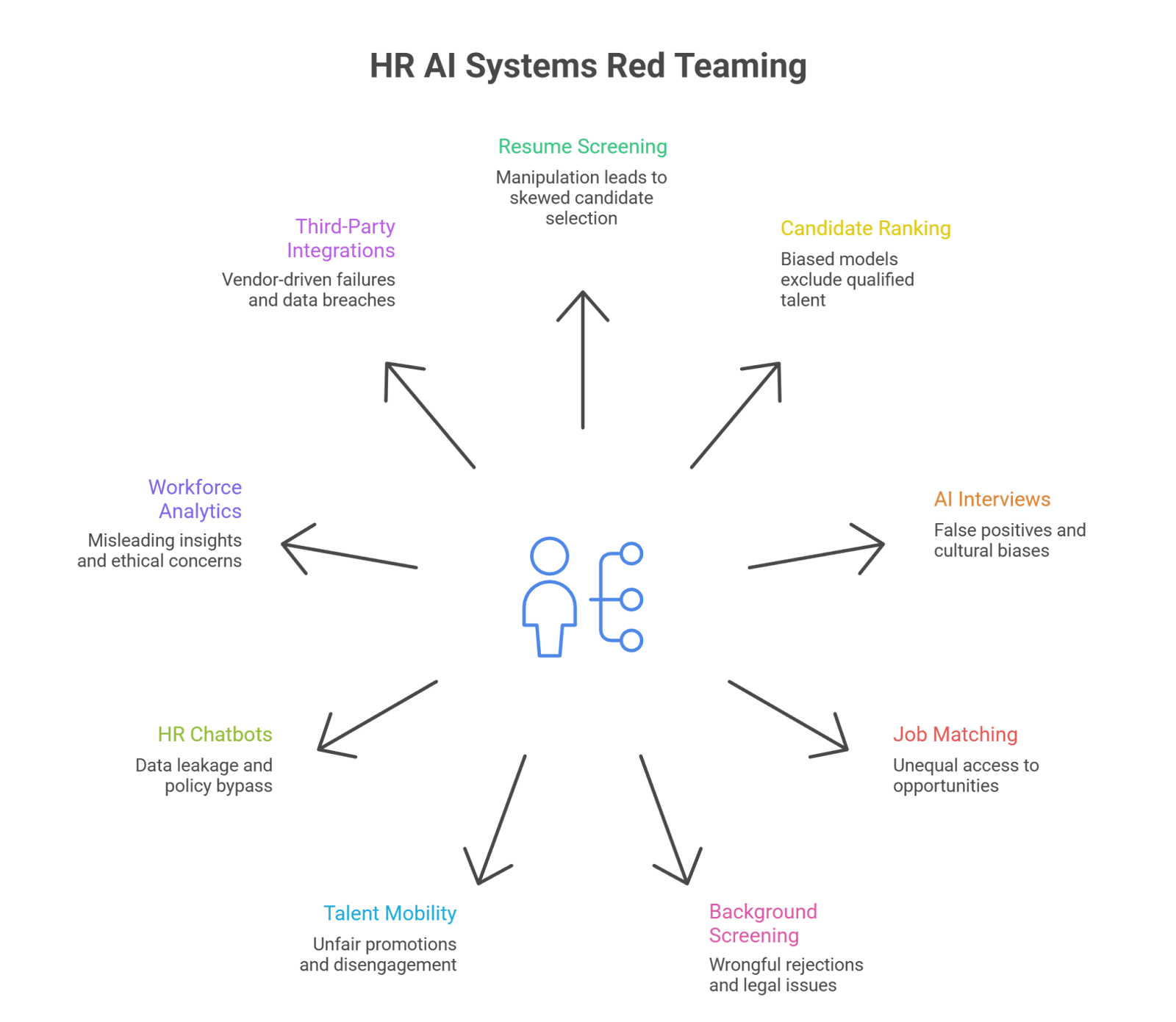

4. HR & Recruitment AI Systems That Must Be Red Teamed First

Not all HR AI systems carry the same level of risk. AI red teaming in recruitment must prioritize systems that directly influence hiring decisions, fairness outcomes, and legal accountability. In AI red teaming in HR, the goal is not to test whether these systems work as designed, but to understand how they fail when exposed to manipulation, gaming, and adversarial behavior.

HR AI systems operate in environments where incentives are misaligned. Candidates want to optimize outcomes, internal teams want efficiency, and models are expected to scale decisions automatically. Without sustained AI security testing in HR, these pressures lead to hidden vulnerabilities that silently distort hiring pipelines. Effective recruitment AI security starts by identifying which AI systems can be exploited first and testing them under real-world misuse conditions.

Below are the most critical HR and recruitment AI systems that must undergo continuous AI red teaming in HR, with a focus on adversarial behavior, misuse patterns, and failure impact.

4.1 Resume Screening & CV Parsing Systems

Resume screening and CV parsing systems are the most exposed components of automated hiring pipelines, which is why AI red teaming consistently prioritizes them first. These systems make binary decisions at scale, often without human oversight, making them highly sensitive to manipulation. In AI red teaming in HR, resume screening tools are treated as high-risk entry points for adversarial behavior.

A primary failure mode is resume screening manipulation. Candidates exploit keyword matching, formatting structures, hidden text, and AI-generated language to influence parsing and scoring logic. Without continuous AI security testing in HR, these tactics blend into normal traffic, allowing manipulated resumes to pass screening while qualified candidates are filtered out. This pattern represents a clear case of model manipulation in HR AI, where decision quality erodes without obvious performance degradation.

Through targeted AI red teaming, organizations intentionally test adversarial resumes to understand how parsing rules, feature weighting, and thresholds can be abused. From a recruitment AI security perspective, this reveals how early-stage hiring decisions can be skewed quietly and repeatedly at scale. In practice, AI red teaming in HR is the only reliable way to expose these vulnerabilities before they become systemic.

Failure Impact:

⟶ Manipulated resumes ranked higher due to resume screening manipulation

⟶ Qualified candidates excluded without detection

⟶ Increased legal and compliance risk

4.2 Candidate Ranking & Shortlisting Models

Candidate ranking models determine who advances in the hiring funnel, making them a core focus of AI red teaming. In AI red teaming in HR, these systems are tested for scoring manipulation, feature gaming, and ranking drift.

Adversarial candidates can influence rankings through optimized profiles, language tuning, and repeated applications, leading to subtle model manipulation in HR AI. Without continuous AI security testing in HR, biased or manipulated rankings appear valid while excluding qualified talent. Strong recruitment AI security depends on exposing how easily shortlists can be skewed.

Failure Impact:

⟶ Biased and distorted shortlists

⟶ Discriminatory hiring outcomes

⟶ Loss of trust in AI-led hiring decisions

4.3 AI-Powered Interview & Assessment Tools

AI-driven interviews and assessments analyze speech, text, and behavior, making them highly sensitive to adversarial interaction. AI red teaming evaluates how candidates game sentiment analysis, response timing, and LLM-based scoring.

In AI red teaming in HR, these tools often reveal model manipulation in HR AI through coached responses and prompt exploitation. Regular AI security testing in HR is essential to maintain fair and defensible outcomes and protect recruitment AI security.

Failure Impact:

⟶ False positives in candidate evaluation

⟶ Cultural, language, and neurodiversity bias

⟶ Inconsistent and unfair assessments

4.4 Job Matching & Recommendation Engines

Job matching systems influence opportunity distribution across roles. AI red teaming tests how profile tuning and feedback loops distort recommendations over time.

Through AI red teaming in HR, teams identify how small input manipulations lead to systemic bias and model manipulation in HR AI. Without adversarial AI security testing in HR, fairness and diversity goals are quietly undermined, weakening recruitment AI security.

Failure Impact:

⟶ Poor role-fit decisions

⟶ Unequal access to opportunities

⟶ Reinforced historical bias

4.5 Background Screening & Risk Scoring AI

Background screening AI relies heavily on external data, making it vulnerable to noise and poisoning. AI red teaming tests false signal amplification and data dependency failures.

In AI red teaming in HR, these systems often exhibit model manipulation in HR AI when unreliable data influences rejection decisions. Strong AI security testing in HR is critical to protect candidates and maintain recruitment AI security.

Failure Impact:

⟶ Wrongful candidate rejection

⟶ Compliance and legal violations

⟶ Reputational damage

4.6 Internal Talent Mobility & Promotion AI

Internal mobility AI shapes promotions and career growth, making silent bias especially dangerous. AI red teaming evaluates insider manipulation and performance signal gaming.

Within AI red teaming in HR, these systems frequently show model manipulation in HR AI driven by feedback loops and proxy signals. Continuous AI security testing in HR is required to preserve internal fairness and recruitment AI security standards.

Failure Impact:

⟶ Unfair promotions

⟶ Employee disengagement

⟶ Loss of organizational trust

4.7 HR Chatbots & Employee-Facing AI Assistants

HR chatbots interact directly with candidates and employees, expanding the attack surface. AI red teaming tests prompt injection, policy bypass, and data leakage.

In AI red teaming in HR, these tools are common vectors for model manipulation in HR AI through conversational abuse. Without rigorous AI security testing in HR, recruitment AI security is easily compromised.

Failure Impact:

⟶ Confidential HR data exposure

⟶ Incorrect policy guidance

⟶ Compliance risk

4.8 Workforce Analytics & Attrition Prediction Models

Workforce analytics AI influences planning and retention strategies. AI red teaming focuses on feedback loop exploitation and behavioral distortion.

Through AI red teaming in HR, teams uncover how model manipulation in HR AI leads to misleading insights. Ongoing AI security testing in HR is essential to maintain ethical decision-making and strong recruitment AI security.

Failure Impact:

⟶ Poor workforce planning

⟶ Ethical and morale issues

⟶ Strategic misalignment

4.9 Third-Party Recruitment AI Integrations

Third-party AI tools extend hiring risk beyond organizational control. AI red teaming evaluates supply-chain exposure, data leakage, and cross-system manipulation.

In AI red teaming in HR, vendor systems often introduce hidden model manipulation in HR AI risks. Effective AI security testing in HR is critical to enforce end-to-end recruitment AI security.

Failure Impact:

⟶ Vendor-driven compliance failures

⟶ Indirect data breaches

⟶ Loss of governance control

5. How to Test HR AI Models Effectively

In order to test HR AI appropriately, we need to transition away from validating our assumptions about how HR AI works, and instead, engage in adversarial thinking , i.e., think like an adversary , to simulate how HR AI may fail in the future. In this way, AI red teaming (testing how well HR AI systems will continue to function when they are pushed to the limit, abused or used in ways that are not anticipated) is different from traditional testing of AI, which simply tests to see if the AI performs as it was designed to.

This difference is particularly relevant in hiring environments where there is often a strong incentive for candidates to continuously game the system in terms of making the hiring process work in their favor.

To implement effective AI red teaming in HR, we need to begin with the assumption that all aspects of the hiring process (resumes/profiles/interview responses/internal inputs) will be optimized by candidates to manipulate the outcome of the hiring decision-making process. As such, red teams must develop and execute "misuse scenarios" (i.e., real candidate behaviors), such as resume screening manipulation, profile tuning, and interaction abuse, repeatedly, to determine how hiring AI degrades and ultimately fails, not just during single, isolated tests.

It is at this point that AI security testing in HR goes beyond mere compliance and accuracy metrics.

Another important aspect of AI red teaming in HR is testing for the accumulation of failure. While many HR AI systems fail catastrophically, many others fail gradually, and through the accumulation of small, incremental changes to model parameters (e.g., feedback loops, biased features, and threshold tuning). Such gradual failures may go unnoticed until after they have created model manipulation in HR AI. Continuous AI red teaming allows HR teams to detect such failures before they cause serious problems with recruitment AI security by identifying potential vulnerabilities that would only be exposed under prolonged stress.

In addition, AI red teaming in HR should include end-to-end testing. The fact is that hiring AI operates in conjunction with other processes (e.g., resume screening influences ranking models which affect interviews, recommendations, etc.), and therefore, must be tested to ensure that it will not fail in a manner that creates systemic hiring bias that can only be identified by monitoring the effects of repeated small-scale adversarial inputs on hiring decisions. Ultimately, the best means of achieving recruitment AI security is to continually evaluate how potential failures propagate across workflows between and among various components of hiring AI systems.

Ultimately, effective AI red teaming in HR must be ongoing. Models change, prompts are revised, data drifts, and candidate behavior evolves. Therefore, one-time reviews of AI systems rapidly become outdated. A continued commitment to AI red teaming ensures that recruitment AI security remains current with actual use cases, providing HR teams with the basis for evidence-based confidence that their AI systems will continue to function correctly and prevent adverse consequences from misuse, manipulation and scaling.

6. Conclusion

HR and recruitment AI systems now make decisions that directly affect people, careers, and organizational culture. When these systems fail, the damage is rarely immediate or obvious. It accumulates quietly through biased filtering, distorted rankings, and unchecked resume screening manipulation that gradually reshapes hiring outcomes. This is why AI red teaming must be treated as a foundational control, not a last-mile safeguard.

By applying AI red teaming in HR, organizations stop assuming that hiring AI will behave correctly and start proving that it can withstand misuse. Adversarial testing exposes how resume screening manipulation evolves over time, how model manipulation in HR AI can remain statistically invisible, and how recruitment workflows drift under real-world pressure. Without this visibility, even mature governance programs struggle to defend hiring decisions when challenged.

As automation deepens, AI red teaming provides the practical lens HR leaders need to understand where risk actually lives. It shifts security from static reviews to continuous discovery, ensuring that AI red teaming in HR keeps pace with changing models, data, and candidate behavior. This approach also strengthens recruitment AI security by addressing failures before they scale into legal, ethical, or reputational crises.

This is where WizSumo AI plays a critical role. WizSumo AI helps organizations operationalize AI red teaming across HR and recruitment systems by simulating real misuse scenarios, testing for resume screening manipulation, and uncovering hidden model manipulation in HR AI across end-to-end hiring workflows. By combining adversarial expertise with domain-specific insight, WizSumo AI enables teams to move from assumption-based trust to evidence-backed confidence in their hiring AI.

Ultimately, responsible hiring automation is not achieved by believing AI is fair, secure, or compliant. It is achieved by continuously challenging those assumptions. With structured AI red teaming in HR, organizations can scale AI-driven hiring while maintaining fairness, accountability, and trust.

“Hiring AI rarely breaks, it quietly decides who never gets a chance.”

.svg)

.png)

.svg)

.png)

.png)