AI red teaming in insurance : Testing Underwriting, Claims, and Risk Models

.png)

Key Takeaways

AI red teaming focuses on how insurance AI systems fail, not just how they perform.

Traditional validation cannot detect real-world model manipulation in insurance AI.

High-impact insurance AI systems must be tested under adversarial and misuse scenarios.

Continuous AI security testing in insurance helps detect silent failures before they scale.

Strong insurance AI security is built through evidence-based testing, not assumptions of safety.

1. Introduction

AI is quietly transforming the insurance industry from underwriting and premium pricing, to claims processing and risk assessment of entire portfolios; all with the assistance of AI systems that make continuous, scalable, and usually non-human decisions directly impacting the insured party, capital reserves and regulatory compliance.

Because these AI systems are running constantly and at large scales, their failures are rare and seldom loud. They occur gradually over time through miscalculated risk pricing, unfair treatment of certain claimants, un-detectable fraudulent activity, and incorrect claims adjudication. This is exactly why AI red teaming in the insurance industry is important.

Notably different from typical testing methodologies that focus solely on measuring accuracy within the parameters of normal operation, AI red teaming in insurance is designed to identify how an AI system operates when its original assumptions are violated. In other words, AI red teaming in insurance identifies how an AI System responds to unexpected input, edge cases and how easily an attacker, internal actor, or simply a user attempting to use the system as intended can take advantage of potential vulnerabilities within automated decision making.

As the reliance of insurers upon automation increases, so will the likelihood of adversary behavior.

While many organizations currently view AI risk through the lens of compliance related items (Model Validation, Performance Testing, Security Reviews), these items are not enough. Compliance items typically test for adherence to rules rather than identifying how users may intentionally manipulate a system, nor do they test for how a system may be exploited by adversaries.

The primary reason that AI security testing in insurance has to move away from mere compliance checklist testing and accuracy metric testing is because the goal of such testing needs to shift from merely identifying if a model works, to understanding how a model can be broken.

The attack surface for insurance AI continues to grow exponentially. Insurance AI uses complex data pipelines and multiple third parties for integration purposes; this creates new types of failures and failure modes that traditional review processes ignore. Silent failures over time due to model manipulation in insurance AI, including but not limited to biased feature exploitation, data poisoning and logic abuse, will lead to loss of trust from consumers, loss of capital, and regulatory issues.

This blog post provides information to assist insurers to proactively address these types of challenges. The blog post addresses what AI red teaming looks like in an insurance environment, why AI red teaming in insurance is growing into a critical risk management tool, and which areas to prioritize first. The ultimate goal of this blog post is not to instill fear regarding the adoption of AI technology, but to provide an adversarial perspective for creating AI-based systems that are both resilient and trustworthy prior to those systems failing in a real-world application.

2. What Is AI red teaming in insurance?

The main concept of AI red teaming refers to the process of strategically using counterintuitive thinking to deliberately challenge AI systems and discover how they will fail with intentional misuse, manipulation or unforeseen circumstances. With regard to the insurance industry, this new way of evaluating AI approaches the evaluation of how an AI system works when the underlying assumptions of the system are no longer valid, instead of simply determining if an AI system performs well using historical data. AI red teaming in insurance is not about validating that an AI system works – but instead to validate where, how and why an AI system fails.

Conventional AI testing for the insurance environment traditionally assumes that users operate in good faith. Users are expected to input clean data, follow the designed workflow and measure model performance based on accuracy, precision and stability metrics. Unfortunately, real world insurance business environments are much more messy. For example policyholders can attempt to manipulate underwriting models to obtain better premiums; fraudsters can actively test and evaluate claims processing systems; employees can use decision support software incorrectly; and automated systems can increase error rates exponentially due to their ability to perform tasks at high volumes.

AI red teaming takes into consideration that these types of behaviors will occur. Practically speaking AI red teaming in insurance evaluates all components of the entire AI system (not just the model) including data ingestion and processing pipelines, feature engineering, user interface/ API / third party interfaces, etc. The purpose is to determine whether an AI system can be manipulated into producing unfair results, inaccurate approval/denial decisions or misleading risk assessments without causing obvious malfunctions. Conventional AI security testing in insurance typically focuses on ensuring the perimeter of an AI system is secure vs. ensuring decision integrity within the AI system.

Another important component of this work includes identifying model manipulation in insurance AI. Model manipulation does not necessarily look like an attack. A model can be manipulated by entering information that exploits proxy variables, slowly poisoning a model with altered data which alters model behavior over time, or creating interaction patterns that cause an automated system to make unsafe decisions. These types of failure modes do not normally appear during testing, and can significantly affect the fairness of underwriting, claims leakage and overall portfolio risk.

Finally, AI red teaming is different than a compliance audit or model validation exercise. Compliance audits ensure that an AI model meets its documented specifications; while red team testing challenges the very same specifications. Through the application of adversarial testing to insurance AI systems organizations gain insight into previously unknown failure pathways that could have remained hidden until financial losses or regulatory oversight required the organization to expose them.

3. Why Insurance Companies Need AI red teaming

Insurers operate in an environment with potentially catastrophic consequences for small errors made while taking business decisions. The deployment of Artificial Intelligence (AI) across all aspects of insurance including underwriting, pricing, fraud detection, claims processing and portfolio risk management significantly reduces the window for undetected errors to grow into significant financial and regulatory risks. Therefore, AI red teaming is no longer a discretionary activity for insurers — it is an essential component of their Risk Management.

While most insurers have implemented traditional controls such as audits, model validations and governance reviews, none of these controls were created to test how AI systems would react if a user intentionally provided incorrect information, if a workflow was intentionally abused or if users engaged with a system in unintended ways. AI red teaming in insurance closes this gap and assumes that at some point, an insurer's employees or contractors will intentionally abuse an insurer's AI system and tests its ability to withstand those attacks.

From a Security perspective, insurers now face an increasing threat landscape which goes well beyond threats to their IT Infrastructure. In addition to being a target for hackers, insurers' decision making AI systems are now vulnerable to intentional manipulation by both insiders and external actors. For example, an underwriting model could be intentionally modified to produce lower than warranted premiums, a claims model could be altered to produce excessive payments on questionable claims and an actuarial risk model could be intentionally skewed to produce lower than actual levels of expected losses. It is in this area that AI security testing in insurance needs to move away from perimeter based security thinking to "Decision Integrity" type security testing and "AI red team testing". Most importantly, without Adversarial Testing of AI Systems, most of the adverse impacts of failures in AI systems will remain hidden.

Another issue facing insurers is the increased risk of model manipulation in insurance AI. Model manipulation is different from other types of cyber-attacks because, unlike typical hacking, manipulation typically appears legitimate. Insurer staff who develop detection models, applicant staff who want to obtain coverage at favorable rates, and internal staff who rely heavily on recommendations generated by AI systems are among the groups who can unintentionally manipulate an insurer's models. Because of the fact that manipulation is typically carried out in a gradual manner, most forms of model manipulation will not generate alerts; however, successful manipulation can result in material changes to an insurer's Loss Ratio and Reserve Planning.

The Regulatory Environment also adds to the necessity of AI red teaming in insurance. Increasingly, regulators are requiring insurers to demonstrate that their AI Models are not only accurate, but also Fair, Explainable, Resilient and Well-Controlled. If an insurer experiences a failure in an AI System related to underwriting or claims, the insurer will likely be required to explain why the failure occurred and what testing was conducted to prevent similar future failures. Documented insurance AI security Practices, including documented AI red teaming exercises, can provide strong evidence of an insurer's Proactive Risk Management.

Additionally, there are Reputational Implications. An insurer's AI driven failures typically affect customers directly — for example, incorrect denial of claims, discriminatory pricing, etc. Typically, these types of failures are not isolated events — they occur as part of larger patterns over time. Therefore, by the time an insurer is aware of these failures, it is usually too late and the damage has been done. "AI sed teaming in insurance" provides insurers with an opportunity to identify these failures prior to them becoming identifiable failures affecting thousands or millions of policies.

In addition, as insurers begin to combine AI Systems with Third Party Data Providers, Vendors and Automated Workflows, the speed at which a failure propagates increases exponentially. A failure in one model can cause a ripple effect on multiple downstream decisions without providing an obvious trail of causality. The use of continuous AI security testing in insurance, along with targeted testing for model manipulation in insurance AI will enable insurers to better understand how a failure spreads and how to contain that failure before it reaches either the customer or regulators.

4. Insurance AI Systems That Must Be Red Teamed First

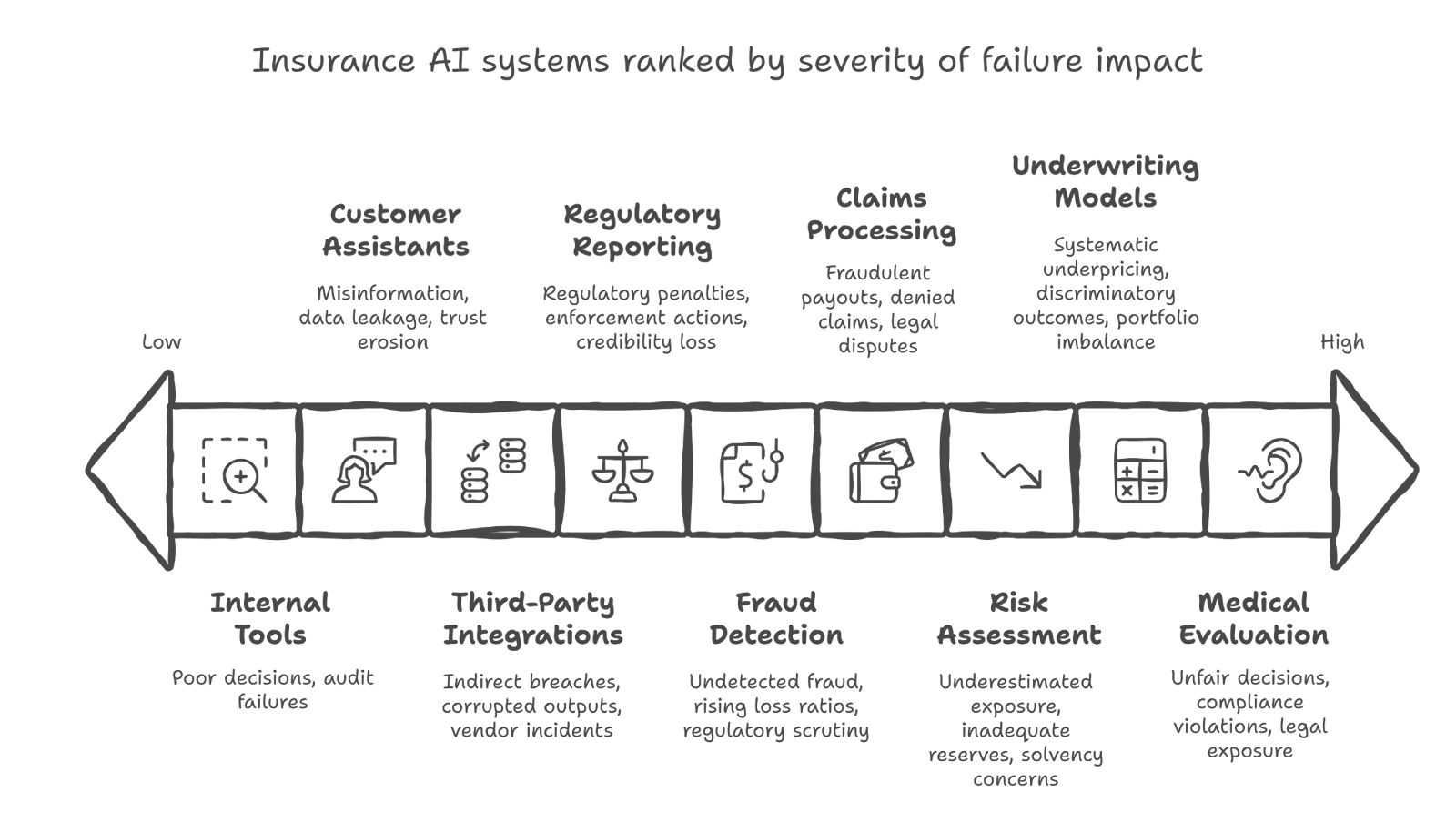

Not all AI systems in an insurance organization carry the same level of risk. Some models influence marginal operational efficiency, while others directly affect underwriting decisions, claims outcomes, capital exposure, and regulatory posture. AI red teaming should always prioritize systems where silent failure can scale quickly and where misuse—intentional or accidental—is realistic. In AI red teaming in insurance, the focus is on AI systems that shape financial outcomes, customer trust, and compliance obligations.

Below are the most critical insurance AI systems that should be subjected to continuous adversarial evaluation through AI security testing in insurance. Each subsection highlights not just what the system does, but how model manipulation in insurance AI can occur and why the impact is severe.

4.1 AI-Driven Underwriting & Policy Pricing Models

Underwriting models are at the core of insurance profitability. These systems assess applicant risk, determine eligibility, and calculate premiums—often in real time. Because they operate at scale, small distortions can lead to large financial consequences.

AI red teaming in insurance focuses on how underwriting models can be influenced through manipulated inputs, proxy discrimination, or feature gaming. Attackers or applicants may intentionally adjust data to exploit correlations the model relies on, leading to underpriced risk or unfair exclusions.

Failure Impact:

⟶ Systematic underpricing or adverse selection

⟶ Discriminatory outcomes and regulatory violations

⟶ Long-term portfolio imbalance

This makes underwriting models a top priority for AI red teaming and advanced insurance AI security programs.

4.2 Claims Processing & Automated Claims Approval Systems

Claims automation systems determine whether claims are approved, denied, or escalated. These decisions directly affect customer satisfaction, loss ratios, and legal exposure.

Through AI security testing in insurance, red teams simulate adversarial claim patterns, edge-case scenarios, and workflow abuse. model manipulation in insurance AI may involve structuring claims data to bypass fraud checks or exploiting logic gaps that favor approval.

Failure Impact:

⟶ Fraudulent payouts at scale

⟶ Legitimate claims incorrectly denied

⟶ Legal disputes and reputational damage

Because claims failures often appear valid on the surface, AI red teaming in insurance is critical to uncovering hidden weaknesses.

4.3 Fraud Detection & Claims Abuse Models

Fraud detection models are inherently adversarial. Fraudsters actively probe these systems, learn their behavior, and adapt over time. This dynamic makes them prime candidates for AI red teaming.

Red teaming simulates evolving fraud strategies designed to evade detection thresholds. Subtle model manipulation in insurance AI—such as timing attacks or behavior mimicry—can slowly degrade model effectiveness without obvious alerts.

Failure Impact:

⟶ Undetected claims fraud

⟶ Rising loss ratios

⟶ Increased scrutiny from regulators and reinsurers

Robust AI security testing in insurance ensures these systems remain resilient against adaptive adversaries.

4.4 Risk Assessment, Actuarial & Loss Prediction Models

Risk models inform pricing strategy, reinsurance decisions, and capital allocation. Their failures are strategic rather than transactional, often remaining hidden until stress events occur.

In AI red teaming in insurance, teams challenge model assumptions, simulate extreme but plausible scenarios, and test sensitivity to biased or poisoned data. model manipulation in insurance AI at this level can distort long-term risk forecasts.

Failure Impact:

⟶ Underestimated catastrophe exposure

⟶ Inadequate reserves

⟶ Regulatory and solvency concerns

These models require continuous insurance AI security oversight due to their systemic impact.

4.5 Customer-Facing AI Assistants & Chatbots

Insurers increasingly rely on AI assistants for policy explanations, claims support, and renewals. These systems interact directly with customers and often have access to sensitive information.

AI red teaming tests for prompt injection, hallucinated policy guidance, and unauthorized data disclosure. AI red teaming in insurance evaluates how conversational systems behave when users push boundaries intentionally or unintentionally.

Failure Impact:

⟶ Misinformation and incorrect guidance

⟶ Data leakage

⟶ Erosion of customer trust

Strong AI security testing in insurance is essential to keep these systems safe and compliant.

4.6 Medical, Health & Life Risk Evaluation Models

Health and life insurers use AI to assess medical risk, longevity, and eligibility. These models are particularly sensitive due to ethical, legal, and fairness considerations.

Red teaming focuses on biased data inputs, proxy health indicators, and adversarial medical histories. model manipulation in insurance AI in this area can result in discriminatory outcomes that are difficult to detect post-hoc.

Failure Impact:

⟶ Unfair underwriting decisions

⟶ Compliance and regulatory violations

⟶ Legal exposure

This makes these models a high-risk category for AI red teaming in insurance.

4.7 Third-Party Data & Vendor AI Integrations

Many insurance AI systems depend on external data providers and vendor models. These integrations extend risk beyond the insurer’s direct control.

AI red teaming evaluates supply-chain vulnerabilities, hidden data usage, and cascading failures. AI security testing in insurance must account for how model manipulation in insurance AI can originate outside the organization.

Failure Impact:

⟶ Indirect data breaches

⟶ Corrupted model outputs

⟶ Vendor-driven incidents

4.8 Internal AI Tools for Adjusters & Risk Analysts

Internal AI tools support claims adjusters, underwriters, and analysts. While not customer-facing, these systems influence human decision-making at scale.

Red teaming examines hallucinated recommendations, over-reliance risks, and access misuse. AI red teaming in insurance helps ensure that internal tools augment rather than distort human judgment.

Failure Impact:

⟶ Poor internal decisions

⟶ Audit and governance failures

⟶ Hidden operational risk

4.9 Regulatory Reporting & Compliance Automation

Some insurers use AI to assist with regulatory reporting, compliance summaries, and risk disclosures. These systems carry extreme regulatory risk.

AI security testing in insurance evaluates hallucinated interpretations, incomplete disclosures, and suppressed risk indicators. AI red teaming ensures that automation does not introduce compliance blind spots.

Failure Impact:

⟶ Regulatory penalties

⟶ Enforcement actions

⟶ Loss of institutional credibility

5. How to Test Insurance AI Models Effectively

Testing insurance AI requires a fundamentally different mindset from traditional software testing or periodic model validation. The goal is not to confirm that a model performs well under normal conditions, but to understand how it behaves when assumptions fail. This is where AI red teaming becomes the backbone of effective testing strategies. In practice, AI red teaming in insurance focuses on discovering how AI systems fail silently, how those failures propagate, and how easily they can be exploited.

Effective testing begins with adopting an adversarial perspective. Instead of asking whether an underwriting or claims model is accurate, insurers must ask how it can be manipulated. AI security testing in insurance should simulate malicious applicants, adaptive fraudsters, biased data sources, and internal misuse scenarios. This approach exposes weaknesses that performance metrics alone will never reveal.

A comprehensive testing program for insurance AI typically spans three interconnected layers. First is model-level testing, where teams probe robustness, bias sensitivity, and susceptibility to model manipulation in insurance AI. This includes testing for proxy discrimination, feature gaming, and data poisoning that gradually alters outcomes without obvious signals. Second is system-level testing, which evaluates how models interact with data pipelines, APIs, prompts, and downstream workflows. Third is decision-level testing, which focuses on how incorrect or uncertain outputs influence real business decisions.

Another critical aspect of AI red teaming in insurance is continuous testing. Insurance AI systems evolve constantly—data distributions shift, fraud patterns change, and models are retrained or fine-tuned. One-time assessments quickly become obsolete. Continuous AI security testing in insurance allows insurers to track how risk changes over time and ensures that controls remain effective as systems adapt. This is especially important for detecting slow-burn model manipulation in insurance AI, where harmful effects accumulate gradually rather than triggering immediate failures.

Testing must also account for human interaction with AI. Underwriters, claims adjusters, and analysts often rely on AI-generated recommendations. AI red teaming evaluates how humans interpret and act on these outputs, identifying over-reliance risks, automation bias, and situations where misleading guidance can influence critical decisions. These socio-technical failure modes are a major source of hidden risk in insurance environments.

Ultimately, effective testing is not about breaking AI for its own sake—it is about building confidence through evidence. Insurers that embed AI red teaming in insurance into their governance frameworks gain a far clearer understanding of where their AI systems are resilient and where they are fragile. This clarity enables stronger insurance AI security practices and supports defensible, regulator-ready risk management.

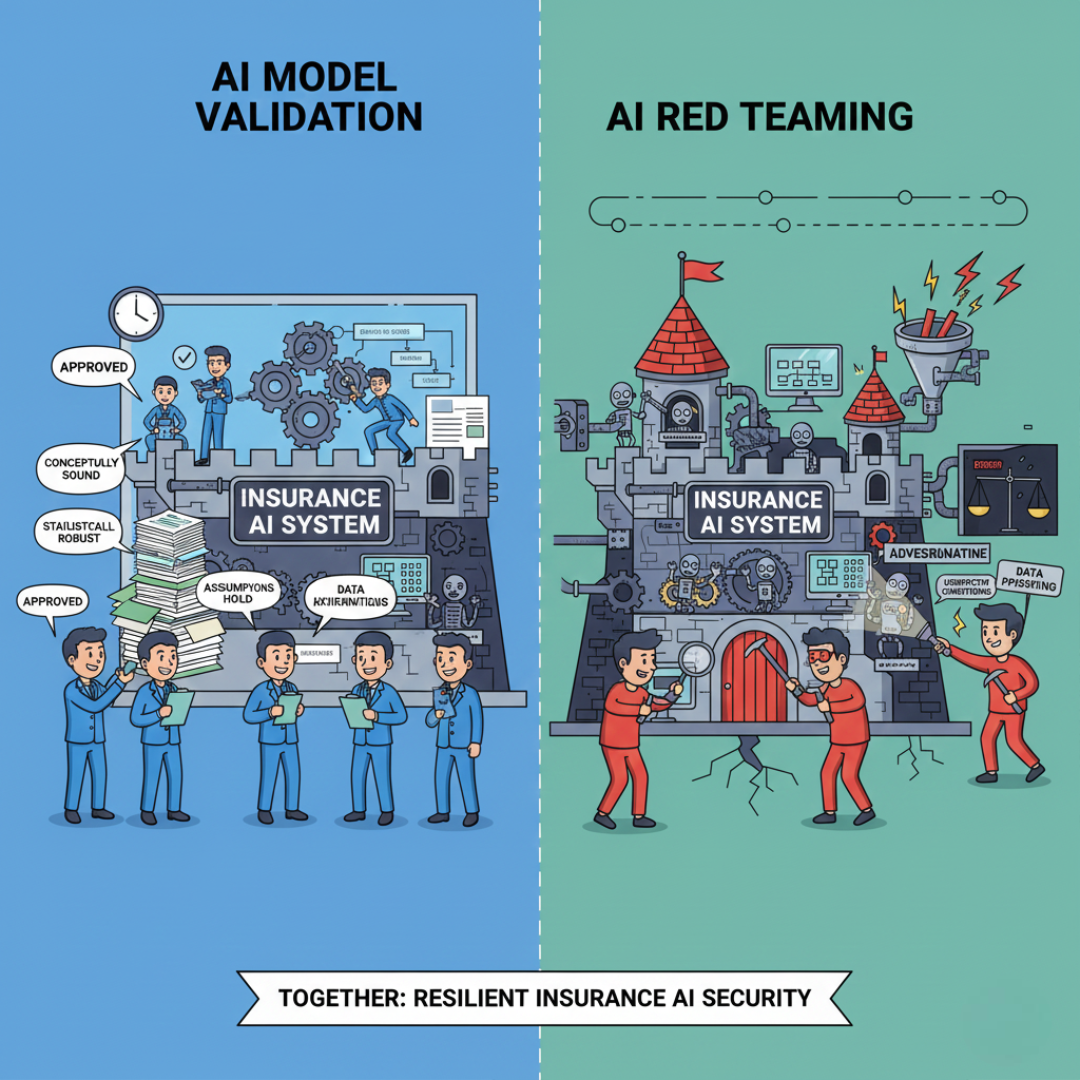

6. AI red teaming vs Model Validation in Insurance

For many years, model validation has been a cornerstone of managing risk within the insurance industry. The process of validating models in an insurance environment provides assurances that models have appropriate conceptual and statistical characteristics, as well as meet regulatory expectations. As insurance companies begin to develop, implement and operate increasing amounts of complex artificial intelligence (AI) based systems across all phases of the value chain - including underwriting, claims processing and risk management, the limitations of traditional model validation methods have become apparent. AI red teaming, which represents a fundamentally new method of providing assurance of AI-based systems, addresses these limitations.

Validation generally tests if a model performs as anticipated under specific sets of assumptions. Validation reviews evaluate documentation, performance metrics, explanations and stability over time. Validation does not ask how a system would perform when those assumptions are violated. AI red teaming in insurance assumes that AI systems will be used in unintended manners (misuse), altered or subject to stressors that were not envisioned by the designer.

This is a critical distinction. Many of the worst-case insurance-related AI failures occurred in systems that had been validated as technically sound. Exploitation of bias, manipulation of prompts, gradual poisoning of training data and abuse of workflow processes often do not fall within the scope of validation reviews. These types of problems are typically identified via adversarial pressure (not via compliance checklists). For this reason, AI security testing in insurance should consider AI red teaming as an additional area of study, as opposed to assuming validation alone is enough.

In terms of timing, validation is generally done on a schedule and/or is documented. In contrast, AI red teaming is ongoing and behavior-based. As underwriting models are re-trained, as claims systems continue to be automated, and as new data sources are introduced into the systems, the risk profile of the insurer continues to evolve. Continuous AI security testing in insurance can help to identify evolving vulnerabilities and potential new avenues of model manipulation in insurance AI prior to them being realized in the world.

Finally, there is a difference in the mindsets of validation and AI red teaming. Validation seeks to establish confidence in a model. AI red teaming seeks to challenge that confidence under realistic conditions. Given the nature of AI decision making in insurance - i.e., direct impacts upon consumers and capital - an adversarial mindset is essential to effectively govern AI within insurance environments. An adversarial mindset forces organizations to contemplate adverse scenarios and to design controls for misuse, not simply intended use.

The most effective programs of insurance AI governance will not select between validation and AI red teaming. Rather, the most effective programs will leverage both approaches. Validation establishes an initial level of confidence in a model, and AI red teaming tests that confidence in a realistic manner. When taken together, the two approaches provide a much more robust way of addressing insurance AI security, consistent with regulatory requirements and actual risks faced by the organization.

7. Conclusion

AI is no longer a peripheral capability in insurance—it is embedded directly into how risk is assessed, policies are priced, claims are paid, and capital is allocated. As these systems grow more autonomous and interconnected, the cost of failure rises sharply. The most dangerous failures are rarely obvious. They emerge quietly through biased underwriting, undetected fraud, incorrect claims decisions, or distorted risk forecasts. This is exactly why AI red teaming must be treated as a core control rather than an optional exercise.

Throughout this blog, one pattern is clear: traditional controls alone cannot keep pace with modern insurance AI. Validation confirms that models meet expectations, but it does not reveal how those expectations break. AI red teaming in insurance fills this gap by deliberately challenging systems under adversarial, abusive, and unintended conditions. It exposes weaknesses that performance testing, audits, and governance reviews consistently miss. When combined with continuous AI security testing in insurance, insurers gain visibility into how failures actually occur in real-world environments.

Just as importantly, adversarial testing changes how organizations think about risk. Instead of asking whether an AI system works, insurers begin asking whether it can be trusted under pressure. This shift is critical for addressing subtle model manipulation in insurance AI, where harm accumulates slowly and silently. By identifying these risks early, insurers can protect customers, preserve capital, and demonstrate defensible insurance AI security practices to regulators and stakeholders.

This is where specialized expertise becomes essential. WizSumo works with insurers to operationalize AI red teaming across high-impact systems, including underwriting models, claims automation, fraud detection, and risk assessment workflows. WizSumo’s approach combines deep domain understanding with hands-on adversarial testing to simulate realistic misuse scenarios that internal teams often cannot replicate. Rather than relying on assumptions of safety, insurers gain evidence-backed insight into how their AI systems behave when stressed, manipulated, or misused.

Ultimately, secure insurance AI is not achieved through confidence alone—it is achieved through proof. Insurers that invest in AI red teaming in insurance move from reactive incident response to proactive risk discovery. They identify silent failures before they scale, strengthen governance before regulators intervene, and build AI systems that are resilient by design. In a future where AI decisions increasingly define insurance outcomes, adversarial testing is not just good practice, it is essential.

“Insurance AI doesn’t fail all at once, it fails quietly, decision by decision, until the impact is unavoidable.”

.svg)

.png)

.svg)

.png)

.png)