AI Red Teaming in Payments: Securing Transaction AI and Anti-Fraud Engines


Key Takeaways

AI red teaming in payments focuses on how transaction AI fails under real adversarial pressure.

Traditional fraud model validation cannot detect adaptive AI-driven fraud strategies.

Payment fraud attacks are designed to appear normal, not suspicious.

AI security in payment systems requires continuous adversarial testing, not one-time reviews.

Proactive AI red teaming helps payment platforms prevent silent failures before financial and regulatory impact occurs.

1. Introduction

Modern payment systems rely on AI to make real-time decisions at enormous scale. That reliance has introduced a new kind of silent threat: these systems can fail without crashing, quietly letting fraudulent activity through, blocking legitimate customers from receiving funds, and creating regulatory exposure.

This is why AI red teaming has become a crucial part of keeping payment systems secure, and why it will remain an important tool for organizations defending the AI-powered components of their transaction flows.

Traditionally, a security breach is identified through evidence found in monitoring or logging. Failures in payment processing AI do not always surface that way. They often show up in less obvious forms: previously undetected fraud patterns, rising false decline rates, more frequent chargebacks, and unexplained changes in a model's behavior.

Attackers have recognized that AI-based systems rely on decision boundaries to determine how each transaction is handled. To test their defenses effectively, organizations therefore need to simulate Payment fraud AI attacks, which shows them how their decision boundaries behave and how an attacker might adapt to avoid detection.

Currently, most organizations assess the risk of their payment processing AI using standard performance metrics, historical validation data, and static controls. These methods assume that inputs are benign and that system behavior is predictable. The payment ecosystem, however, is under constant pressure from adversaries who experimentally design and execute Payment fraud AI attacks that evade detection while appearing statistically valid. Because no adversarial testing was performed beforehand, these vulnerabilities are frequently discovered only after financial losses or regulatory scrutiny have occurred.

As more organizations adopt AI within their authorization engines, fraud models, and orchestration layers, AI security in payment systems must evolve beyond measuring accuracy and uptime. Organizations must develop structured methods to intentionally stress their AI systems, simulate abuse of those systems, and identify their failure modes before an adversary does. The primary purpose of AI red teaming is to show how payment AI systems behave when they are pressured, misused, or manipulated.

2. What Is AI red teaming in Payment Systems?

AI red teaming for payment systems is the practice of deliberately attacking an AI-based transaction or fraud system to test its limits under real-world adversarial conditions. It differs from typical testing because its inputs are malicious, adaptive, and specifically crafted to find blind spots in the model, rather than behaving the way normal traffic is expected to.

In payments, AI red teaming covers the transaction authentication, fraud detection, behavioral analytics, and decision-orchestration layers that work together within milliseconds to decide on each transaction. Payment systems are attacked constantly by individuals who manipulate transaction patterns, timing, amounts, devices, and identities. AI red teaming in payments mimics these behaviors before attackers can succeed with them in a live environment.

Payment fraud models are traditionally evaluated on historical data using metrics such as accuracy, precision, recall, and false-positive rate. These metrics do not show how models perform when exposed to AI threats in digital payments, including coordinated low-value fraud attempts, threshold probing, and slow, incremental behavioral changes. AI fraud detection model testing through red teaming deliberately introduces these adversarial patterns to see how the models react when their underlying assumptions are broken.

AI security in payment systems also depends on recognizing that payment AI does not fail in isolation. Fraud models work in conjunction with velocity checks, device fingerprinting, rule engines, and third-party risk signals. AI red teaming tests how these components interact under pressure and identifies how small vulnerabilities can cascade into larger failures across the entire payment flow.

By simulating Payment fraud AI attacks, including evasive strategies that appear statistically normal or exploit confidence thresholds, AI red teaming in payments exposes the hidden failure modes that traditional validation cannot identify. This adversarial approach ensures that AI security in payment systems is based on actual attacker behavior and not just theoretical assumptions.

3. Why Payment Platforms Need AI red teaming

Payment platforms operate in one of the most hostile digital environments today. Every transaction processed by an AI-based system can double as a probe: attackers use it to learn how decisions are made and whether there is an exploitable weakness. That is why payment fraud AI red teaming is not a luxury in the payments industry; it is necessary to protect against constantly evolving fraud tactics.

Fraudsters design Payment fraud AI attacks that are neither random nor noisy; they are stealthy, distributed, and optimized to look statistically normal rather than like outliers. These attacks exploit specific weaknesses in the fraud scoring thresholds, confidence bands, and behavioral assumptions on which many fraud models are based. As long as payment fraud AI red teaming is absent, attacks of this kind will continue to go undetected until large-scale losses have been incurred.

One of the primary challenges is that AI threats in digital payments evolve much faster than traditional control methods. Fraudsters test small-dollar transactions, vary the timing of their attempts, use multiple devices, and modify their behavior to stay under the radar. Standard monitoring tools may report steady performance while fraud leakage quietly increases.

The only reliable way to find these hidden points of failure is adversarial simulation, that is, AI fraud detection model testing.

Another reason payment fraud AI red teaming is required is the complexity of modern payment architectures. Fraud models rarely exist in isolation; they function alongside rule engines, velocity systems, device fingerprinting, geolocation checks, and third-party risk signals. A failure in any of these components can compromise decision-making for the entire system. AI security in payment systems must therefore assess both how each model functions individually and how the components function together under an end-to-end attack.

Pressure from regulators and compliance requirements also reinforces the need for payment fraud AI red teaming. When a fraud incident occurs, regulators and business partners will increasingly ask not only what went wrong, but why it was not caught sooner. Without evidence of prior AI fraud detection model testing, payment platforms will find it difficult to demonstrate sufficient risk management.

Finally, payment fraud AI red teaming gives payment organizations the opportunity to move from reactive fraud response to proactive risk discovery. By simulating actual Payment fraud AI attacks and anticipating future AI threats in digital payments, payment platforms can identify areas of vulnerability before attackers are able to exploit them, thereby protecting customer trust and revenue.

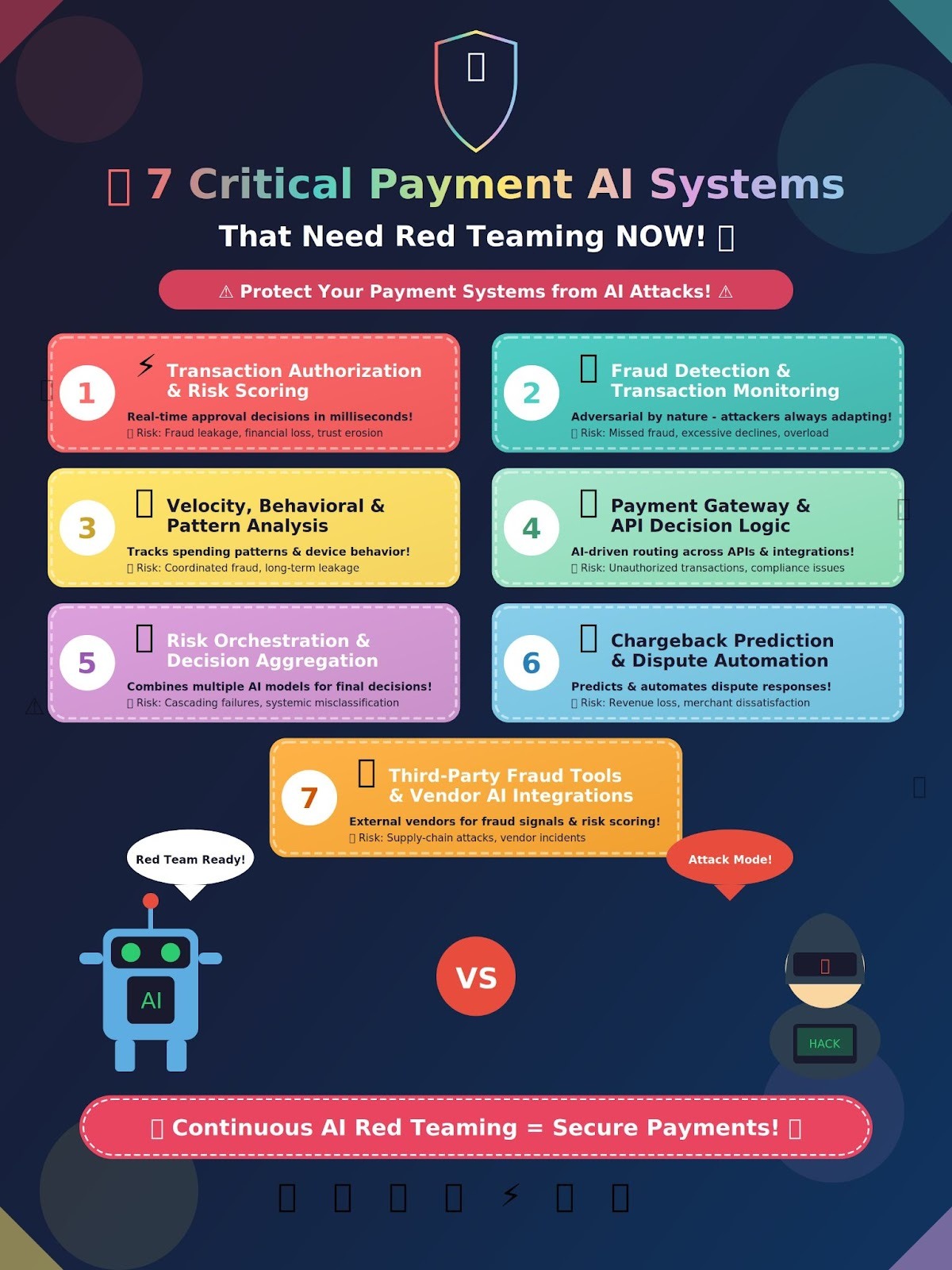

4. Payment AI Systems That Must Be Red Teamed First

Not all AI systems in payments carry the same level of risk. AI red teaming in payments must prioritize systems that directly influence transaction approval, fraud outcomes, financial loss, and regulatory exposure. These systems operate in real time, at scale, and under constant adversarial pressure, making them prime targets for Payment fraud AI attacks and other AI threats in digital payments.

Below are the most critical payment AI systems that must undergo continuous AI red teaming and AI fraud detection model testing to ensure resilient AI security in payment systems.

4.1 Transaction Authorization & Risk Scoring Engines

Transaction authorization engines use AI to approve, decline, or challenge payments within milliseconds. These systems sit at the front line of fraud prevention and revenue protection.

AI red teaming targets how attackers manipulate transaction attributes (amounts, timing, merchant categories, devices) to push fraudulent transactions through approval thresholds. AI red teaming in payments reveals how small model confidence shifts can lead to large-scale fraud leakage.
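The threshold probing described above can be sketched in a few lines. This is a minimal illustration under stated assumptions: `toy_risk_score`, its weights, and the `APPROVE_BELOW` cutoff are hypothetical stand-ins for a production model, and the probe assumes that risk rises monotonically with amount. The probe only ever sees approve or decline outcomes, as an external attacker would.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Txn:
    amount: float
    hour: int          # local hour of day, 0-23
    new_device: bool


APPROVE_BELOW = 0.5    # hypothetical approval cutoff


def toy_risk_score(txn: Txn) -> float:
    """Hypothetical stand-in for a production risk model (returns 0..1)."""
    score = 0.6 * min(txn.amount / 2000.0, 1.0)
    if txn.hour < 6:
        score += 0.2
    if txn.new_device:
        score += 0.2
    return min(score, 1.0)


def is_approved(txn: Txn) -> bool:
    """The only signal the probe observes: approve (True) or decline (False)."""
    return toy_risk_score(txn) < APPROVE_BELOW


def probe_max_approved_amount(
    hour: int,
    new_device: bool,
    lo: float = 0.0,
    hi: float = 5000.0,
    tol: float = 1.0,
) -> Optional[Tuple[float, int]]:
    """Binary-search the largest approved amount for a fixed context.

    Returns (amount, number_of_search_steps), or None if even `lo` is declined.
    """
    if not is_approved(Txn(lo, hour, new_device)):
        return None
    if is_approved(Txn(hi, hour, new_device)):
        return hi, 0
    steps = 0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        steps += 1
        if is_approved(Txn(mid, hour, new_device)):
            lo = mid      # still approved: the boundary is higher
        else:
            hi = mid      # declined: the boundary is lower
    return lo, steps


if __name__ == "__main__":
    print(probe_max_approved_amount(hour=14, new_device=False))
    print(probe_max_approved_amount(hour=3, new_device=True))
```

In this toy setup a handful of probes is enough to locate the approval boundary to within one currency unit, which is why a red team exercise would typically measure how many probes a system tolerates before it reacts.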

Failure impact:

Undetected fraud, financial loss, customer trust erosion

4.2 Fraud Detection & Transaction Monitoring Models

Fraud detection operates in an inherently adversarial setting. Attackers continuously adapt their behavior to evade detection while remaining statistically normal.

This is where AI fraud detection model testing is most critical. Through AI red teaming, teams simulate adaptive fraud strategies, saturation attacks, and false-positive inflation. These Payment fraud AI attacks often bypass traditional validation while degrading performance silently.

Failure impact:

Missed fraud, excessive declines, operational overload

4.3 Velocity, Behavioral & Pattern Analysis Systems

Behavioral AI systems track transaction frequency, device usage, location changes, and spending patterns over time.

AI red teaming in payments evaluates how attackers slowly manipulate behavior to stay just below detection thresholds. These systems are especially vulnerable to long-term AI threats in digital payments that exploit gradual model drift rather than single high-risk events.
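A minimal sketch of this low-and-slow pattern, assuming a simple sliding-window velocity rule. The `VelocityCheck` class, the limits, and the attack parameters are all hypothetical and chosen only to illustrate the idea: the same total that is blocked as a single payment passes an hourly rule when split and spaced out, while a longer window limits the damage.

```python
from collections import deque


class VelocityCheck:
    """Hypothetical rule: decline if approved spend in the window would exceed max_total."""

    def __init__(self, window_minutes: int, max_total: float) -> None:
        self.window = window_minutes
        self.max_total = max_total
        self.history = deque()  # (minute, amount) of approved transactions

    def allow(self, minute: int, amount: float) -> bool:
        # Drop approvals that have aged out of the window.
        while self.history and self.history[0][0] <= minute - self.window:
            self.history.popleft()
        if sum(a for _, a in self.history) + amount > self.max_total:
            return False
        self.history.append((minute, amount))
        return True


def run_split_attack(
    check: VelocityCheck,
    target: float,
    chunk: float,
    gap_minutes: int,
    deadline_minutes: int,
) -> float:
    """Simulate an attacker splitting `target` into spaced chunks; return the amount extracted."""
    extracted = 0.0
    minute = 0
    while extracted < target and minute <= deadline_minutes:
        if check.allow(minute, chunk):
            extracted += chunk
        minute += gap_minutes
    return extracted


if __name__ == "__main__":
    # A single large payment trips the hourly rule.
    print(VelocityCheck(60, 500.0).allow(0, 3000.0))
    # The same total, split into 250-unit chunks every 31 minutes, does not.
    print(run_split_attack(VelocityCheck(60, 500.0), 3000.0, 250.0, 31, 720))
    # A 24-hour window with a 1500-unit cap limits the same attack.
    print(run_split_attack(VelocityCheck(1440, 1500.0), 3000.0, 250.0, 31, 720))
```

The design point is that a window length is itself an assumption an attacker can learn, so a red team scenario would vary chunk size and spacing against every window the platform actually uses.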

Failure impact:

Coordinated fraud, long-term leakage, delayed detection

4.4 Payment Gateway & API Decision Logic

Modern payment gateways embed AI-driven routing, retries, and risk logic across APIs and integrations.

AI red teaming tests malformed inputs, logic abuse, and adversarial API usage that exploits inconsistencies between systems. Weak AI security in payment systems at this layer allows attackers to bypass upstream controls entirely.

Failure impact:

Unauthorized transactions, integration abuse, compliance exposure

4.5 Risk Orchestration & Decision Aggregation Layers

Risk orchestration layers combine outputs from multiple AI models and rule engines to make final decisions.

Through AI red teaming in payments, teams uncover cascading failures, mis-weighted confidence scores, and conflicting signals. These failures often amplify Payment fraud AI attacks across multiple channels simultaneously.
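One way to look for mis-weighted confidence scores is to check whether any single signal, if an attacker managed to make it look fully benign, would flip a decline into an approval. The sketch below assumes a weighted-average aggregator; the signal names, weights, and threshold are hypothetical and real orchestration layers are usually more complex.

```python
from typing import Dict, List


def aggregate(signals: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted average of per-component risk scores (each in 0..1)."""
    total_weight = sum(weights.values())
    return sum(signals[name] * weights[name] for name in weights) / total_weight


def dominant_signals(
    signals: Dict[str, float],
    weights: Dict[str, float],
    decline_at: float,
) -> List[str]:
    """List signals that alone flip a decline to an approve when forced to 0.0."""
    if aggregate(signals, weights) < decline_at:
        return []  # already approved, nothing to flip
    flips = []
    for name in weights:
        spoofed = dict(signals)
        spoofed[name] = 0.0  # attacker makes this one signal look fully benign
        if aggregate(spoofed, weights) < decline_at:
            flips.append(name)
    return flips


if __name__ == "__main__":
    # Hypothetical high-risk transaction: every component is suspicious.
    signals = {"fraud_model": 0.9, "velocity": 0.8, "device_reputation": 0.7}
    # Hypothetical weights that lean heavily on a third-party device signal.
    weights = {"fraud_model": 0.3, "velocity": 0.2, "device_reputation": 0.5}
    print(dominant_signals(signals, weights, decline_at=0.5))
```

With these illustrative numbers only `device_reputation` is reported, meaning a spoofed device signal would override two other components that both indicate high risk.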

Failure impact:

Systemic misclassification, inconsistent fraud decisions

4.6 Chargeback Prediction & Dispute Automation Systems

AI models increasingly predict chargeback likelihood and automate dispute responses.

AI fraud detection model testing via AI red teaming exposes how attackers manipulate dispute signals, exploit bias, or trigger incorrect automation. These AI threats in digital payments directly impact revenue and merchant relationships.

Failure impact:

Rising chargebacks, revenue loss, merchant dissatisfaction

4.7 Third-Party Fraud Tools & Vendor AI Integrations

Payment platforms heavily rely on external AI vendors for fraud signals, identity data, and risk scoring.

AI red teaming in payments evaluates supply-chain risk, hidden data dependencies, and cross-system abuse. Weaknesses here undermine overall AI security in payment systems, even when internal models are robust.

Failure impact:

Indirect breaches, vendor-driven incidents, regulatory scrutiny

5. How to Perform AI red teaming for Transaction AI and Anti-Fraud Engines

Performing AI red teaming in a payment environment to test an organization’s defensive posture against Payment fraud AI attacks is fundamentally about moving away from accuracy testing under controlled conditions and toward behavioral and adversarial testing methods.

In the payment industry, failure rarely comes from a lack of accuracy in controlled AI fraud detection model testing; it comes from an attacker's ability to exploit how the model behaves under duress. AI red teaming in payments must therefore focus on simulating real-world attack goals rather than idealized input values.

AI red teaming in payments relies on scenarios that simulate Payment fraud AI attacks, including transaction splitting, low-and-slow fraud, confidence threshold probing, and coordinated behavioral manipulation. These scenarios are constructed to slip past traditional AI fraud detection model testing metrics while remaining statistically normal.

A key element of AI security in payment systems is testing how robust models are under stress. In AI red teaming, fraud models are tested with poisoned transaction patterns, misleading behavioral signals, and edge-case combinations that never appeared in the training data. These tests show whether the model fails safely or lets fraudulent transactions through in growing numbers, one of the greatest AI threats in digital payments.
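The question of whether a model fails safely can be made concrete with a small edge-case harness. The sketch below is illustrative only: `toy_model_score`, the `TRAINING_ENVELOPE` ranges, and the "review" outcome are assumptions, not a description of any particular fraud engine. It contrasts a naive decision function, which silently approves inputs it was never trained on, with a wrapper that routes them to review.

```python
import math
from typing import Dict

# Hypothetical feature ranges observed during training.
TRAINING_ENVELOPE = {"amount": (0.01, 10_000.0), "txns_last_hour": (0, 50)}


def toy_model_score(features: Dict[str, float]) -> float:
    """Hypothetical linear scorer; it does no input validation of its own."""
    return 0.7 * features["amount"] / 10_000.0 + 0.3 * features["txns_last_hour"] / 50


def decide_naive(features: Dict[str, float], decline_at: float = 0.5) -> str:
    # NaN and negative scores both fall through to "approve" (fails open).
    return "decline" if toy_model_score(features) >= decline_at else "approve"


def decide_fail_safe(features: Dict[str, float], decline_at: float = 0.5) -> str:
    """Send anything outside the training envelope to manual review."""
    for name, (low, high) in TRAINING_ENVELOPE.items():
        value = features.get(name)
        if (
            not isinstance(value, (int, float))
            or math.isnan(value)
            or not (low <= value <= high)
        ):
            return "review"
    return decide_naive(features, decline_at)


# Edge cases that never appear in clean historical data.
EDGE_CASES = [
    {"amount": float("nan"), "txns_last_hour": 3},
    {"amount": -5000.0, "txns_last_hour": 3},
    {"amount": 9000.0, "txns_last_hour": -200},
]

if __name__ == "__main__":
    for case in EDGE_CASES:
        print(case, decide_naive(case), decide_fail_safe(case))
```

In this toy example the naive path approves all three malformed inputs, while the wrapper sends each of them to review. Whether "review" or "decline" is the right safe default is a business decision the sketch does not settle.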

Another important aspect of AI red teaming in payments is the testing of interactions. Fraud detection models typically don’t operate in isolation; they interact with velocity systems, rules engines, device fingerprinting and third-party risk signals. AI red teaming examines how vulnerabilities multiply across systems and demonstrates how cascading failures compromise AI security in payment systems.

Ongoing AI fraud detection model testing is necessary because payment AI models evolve constantly. Models are retrained, thresholds change, data drifts, and attackers keep adapting. As a result, static assessments rapidly become outdated. Ongoing AI red teaming enables payment organizations to monitor how their resilience changes over time and to determine whether new Payment fraud AI attacks become possible as controls continue to evolve.
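One simple way to track resilience over time is to replay a fixed adversarial suite against every model release and compare evasion rates. The sketch below assumes hypothetical threshold-only models and a tiny attack suite; in practice the suite would hold the scenarios a red team has already built, and the tolerance would be set by the risk team.

```python
from typing import Callable, Dict, List

Txn = Dict[str, float]
Model = Callable[[Txn], str]


def make_threshold_model(decline_at: float) -> Model:
    """Hypothetical model that declines any amount at or above `decline_at`."""
    def model(txn: Txn) -> str:
        return "decline" if txn["amount"] >= decline_at else "approve"
    return model


# Fixed suite of known-fraudulent test transactions (illustrative amounts).
ATTACK_SUITE: List[Txn] = [
    {"amount": a} for a in (100, 250, 290, 320, 350, 400, 480, 600)
]


def evasion_rate(model: Model, suite: List[Txn]) -> float:
    """Fraction of attack transactions that the model approves."""
    return sum(1 for txn in suite if model(txn) == "approve") / len(suite)


def regressed(baseline: float, candidate: float, tolerance: float = 0.05) -> bool:
    """Flag a release whose evasion rate rose by more than `tolerance`."""
    return candidate - baseline > tolerance


if __name__ == "__main__":
    v1 = make_threshold_model(300.0)   # current production model
    v2 = make_threshold_model(450.0)   # retrained to reduce false declines
    base, cand = evasion_rate(v1, ATTACK_SUITE), evasion_rate(v2, ATTACK_SUITE)
    print(base, cand, regressed(base, cand))
```

Here the retrained model looks better on false declines but lets twice as many suite transactions through, which is exactly the kind of change a one-time assessment would miss.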

Ultimately, effective AI red teaming in payments produces tangible results. Rather than simply attempting to break models, its purpose is to identify the conditions that cause model failures, blind spots in decision-making, and unguarded gaps in controls. Payment organizations can then apply this knowledge to improve AI security in payment systems, adjust fraud detection logic, and develop payment fraud AI defense strategies that address real-world AI threats in digital payments, not hypothetical ones.

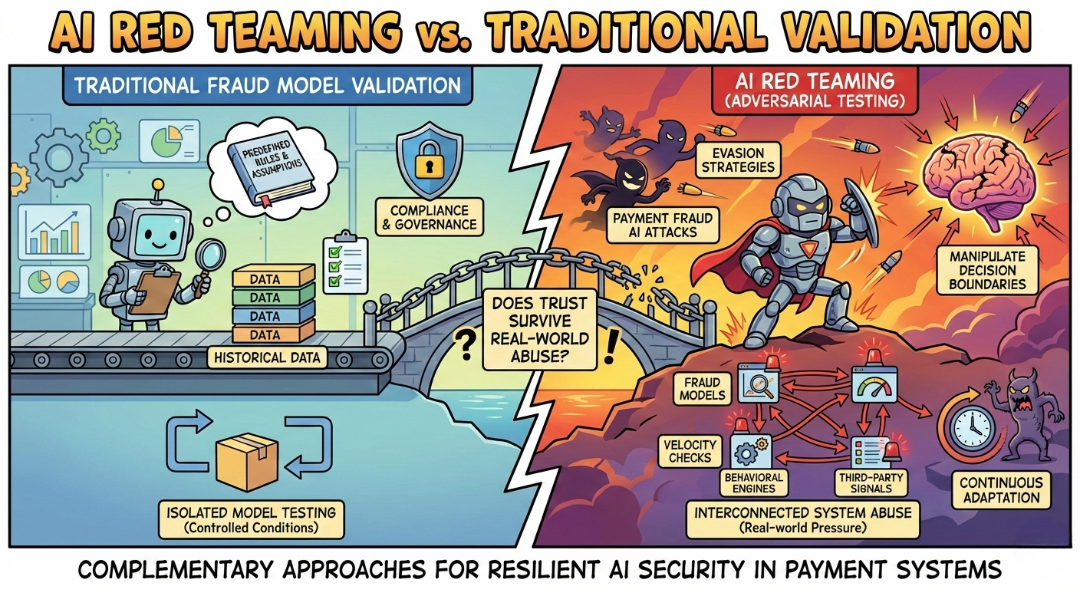

6. AI red teaming vs Traditional Fraud Model Validation

Payment organizations often assume that fraud model validation is enough to secure AI-driven decisioning. In reality, validation and adversarial testing solve very different problems. Understanding this difference is critical for effective AI security in payment systems and for defending against evolving AI threats in digital payments.

6.1 What Traditional Fraud Model Validation Actually Covers

Traditional fraud model validation focuses on whether models behave as expected under controlled conditions. It evaluates accuracy, stability, explainability, and compliance using historical data and predefined assumptions. This process ensures models meet internal governance standards and regulatory requirements, but it largely assumes that inputs are honest and patterns are representative.

What validation does not account for are intentional Payment fraud AI attacks designed to manipulate decision boundaries. Fraudsters do not behave like historical data. They actively probe thresholds, distribute transactions over time, and adapt behavior to evade detection. Standard validation rarely reveals how models behave when assumptions break, which creates blind spots in AI fraud detection model testing.
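The gap between historical validation and adversarial behavior can be shown with a toy comparison. Everything here is an assumption made for illustration: the `flags_fraud` rule, the sample transactions, and the `adapt` function that models an attacker who has learned the limits. Recall is measured once on historical fraud and once on the same fraud after the attacker adapts.

```python
from typing import Callable, Dict, List

Txn = Dict[str, int]


def flags_fraud(txn: Txn) -> bool:
    """Hypothetical detector: flag large amounts or bursts of activity."""
    return txn["amount"] >= 500 or txn["txns_last_hour"] >= 5


def recall(detector: Callable[[Txn], bool], fraud_txns: List[Txn]) -> float:
    """Share of known-fraudulent transactions that the detector flags."""
    return sum(1 for txn in fraud_txns if detector(txn)) / len(fraud_txns)


# Illustrative historical fraud, as it might appear in a validation set.
HISTORICAL_FRAUD: List[Txn] = [
    {"amount": 900, "txns_last_hour": 2},
    {"amount": 1500, "txns_last_hour": 1},
    {"amount": 120, "txns_last_hour": 8},
    {"amount": 700, "txns_last_hour": 6},
]


def adapt(txn: Txn) -> Txn:
    """Attacker keeps the same intent but stays just under each observed limit."""
    return {
        "amount": min(txn["amount"], 499),
        "txns_last_hour": min(txn["txns_last_hour"], 4),
    }


ADAPTED_FRAUD = [adapt(txn) for txn in HISTORICAL_FRAUD]

if __name__ == "__main__":
    print("recall on historical fraud:", recall(flags_fraud, HISTORICAL_FRAUD))
    print("recall on adapted fraud:   ", recall(flags_fraud, ADAPTED_FRAUD))
```

The detector scores perfectly on the historical set and catches nothing once the attacker adapts, even though no code or threshold has changed. A validation report built only on the first number would give no hint of the second.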

Another limitation is scope. Validation typically evaluates individual models in isolation. Modern payment environments rely on interconnected systems: fraud models, velocity checks, behavioral engines, and third-party signals. Validation alone cannot reveal how failures propagate across these systems, weakening overall AI security in payment systems.

6.2 How AI red teaming Addresses Real Payment AI Risk

AI red teaming starts from the opposite assumption: payment AI systems will be attacked, manipulated, and abused. Instead of asking whether a model performs well, AI red teaming in payments asks how systems fail under adversarial pressure.

Through adversarial scenarios, AI red teaming simulates real Payment fraud AI attacks, including evasion strategies, behavioral manipulation, confidence exploitation, and cross-system abuse. This form of AI fraud detection model testing reveals silent failure modes that validation cannot detect: missed fraud, inflated false positives, and cascading decision errors.

Crucially, AI red teaming in payments is continuous. As attackers adapt and transaction patterns evolve, adversarial testing evolves with them. This makes AI red teaming far more effective at addressing emerging AI threats in digital payments than periodic validation reviews.

Rather than replacing validation, AI red teaming complements it. Validation establishes baseline trust, while AI red teaming in payments tests whether that trust survives real-world abuse. Together, they form a realistic and resilient approach to AI security in payment systems.

7. Conclusion

AI has become inseparable from how modern payment platforms operate. Transaction approvals, fraud decisions, behavioral analysis, and dispute automation are now driven by models that act at speed and scale. As a result, the cost of failure has shifted. The most damaging incidents no longer come from outages, but from silent weaknesses exploited through Payment fraud AI attacks and other evolving AI threats in digital payments.

This is why AI red teaming must be treated as a foundational control, not an optional enhancement. Payment environments are inherently adversarial, and static assurance methods cannot keep pace with attackers who actively study and adapt to fraud models. Without continuous AI red teaming in payments, weaknesses in transaction AI and anti-fraud engines often remain hidden until losses, chargebacks, or regulatory scrutiny force action.

Effective AI security in payment systems requires intentionally breaking assumptions. Through adversarial simulations and continuous AI fraud detection model testing, organizations gain visibility into how systems behave when pressured, manipulated, or misused. This proactive approach allows teams to identify failure modes early, tune defenses intelligently, and build resilience against real-world abuse.

This is where WizSumo AI plays a critical role. WizSumo AI specializes in adversarial AI red teaming for high-risk, regulated environments, including payments and fintech. By simulating realistic fraud strategies, stress-testing transaction AI, and uncovering hidden decision failures, WizSumo AI helps payment platforms strengthen AI security in payment systems before attackers exploit them. Rather than reacting to incidents, organizations gain evidence-driven insight into how their AI systems truly behave under adversarial conditions.

Ultimately, secure payment AI is not achieved by confidence in models, but by proof of resilience. Organizations that embed AI red teaming in payments into their security and risk programs will be far better positioned to defend against emerging AI threats in digital payments, protect customers, and scale AI responsibly.

“Payment AI doesn’t fail when it’s inaccurate, it fails when attackers learn how to stay invisible.”
