AI red teaming in retail: Securing Pricing, Demand, and Personalization AI

.png)

Key Takeaways

AI red teaming focuses on how retail AI systems fail, not just how they perform.

Traditional validation cannot detect silent risks like pricing algorithm manipulation.

AI security testing in retail must assume adversarial customer and competitor behavior.

Continuous red teaming is essential for maintaining strong retail AI security at scale.

Secure retail AI is built through evidence of resilience, not assumptions of correctness.

1. Introduction

Retailers today do not simply use their history of sales and their gut instinct to drive what they sell; modern retail organizations use artificial intelligence (AI) to inform high-stakes business decisions around pricing, demand forecasting, promotions and personalized marketing. Retail AI systems are always running, and the results from those systems affect both revenue and margin, as well as the degree to which consumers trust a retailer. Because of this, it is difficult to recognize when retail AI fails because it typically does so in a way that is subtle and cumulative.

Retail AI red teaming therefore emerges as an important concept here. While most testing methods for software test for accuracy and behave as intended, AI red teaming takes an opposite view by assuming that AI systems will be abused, strained, altered or exposed to attacks. For example, competitors, bots, hackers, employees and innocent customers will all interact with AI-powered price-setting and personalization systems in unpredictable ways.

AI red teaming in retail seeks to understand how retail AI systems fail when assumptions about them are broken. For instance, a pricing engine may be hacked using a pricing algorithm manipulation, a demand forecasting system may have its predictive ability disrupted via “poisoned signals”, and a personalization system may amplify a pre-existing bias, or expose an unjust outcome. Most of these types of failures will avoid detection through traditional quality assurance (QA), model validation and thus require AI security testing in retail as a necessity rather than an option.

Compared to other industries, retail AI presents a unique set of risks. Retailers are making decisions on a large scale, their profit margins are typically low, and their customers are highly sensitive to pricing and perceived fairness. Therefore, a small flaw in either a pricing or recommendations model could produce significant revenue losses, regulatory challenges, and/or reputational damage. It is for this reason that retail AI security requires more than just a checklist of compliance requirements and performance metrics , it needs adversarial/behavioral testing.

Using retail AI red teaming, retailers can simulate various misuse scenarios, and stress-test pricing and demand forecasting models under adverse conditions to determine how AI responds when operating outside of normal operating parameters. Using this method, retailers will be able to identify potential weaknesses earlier in the process, before they become systemic issues. As the adoption of retail AI continues to grow, retail AI red teaming is a fundamental safeguard for protecting revenues, customer trust, and ultimately competitive advantage over the long term.

2. What is AI red teaming in retail?

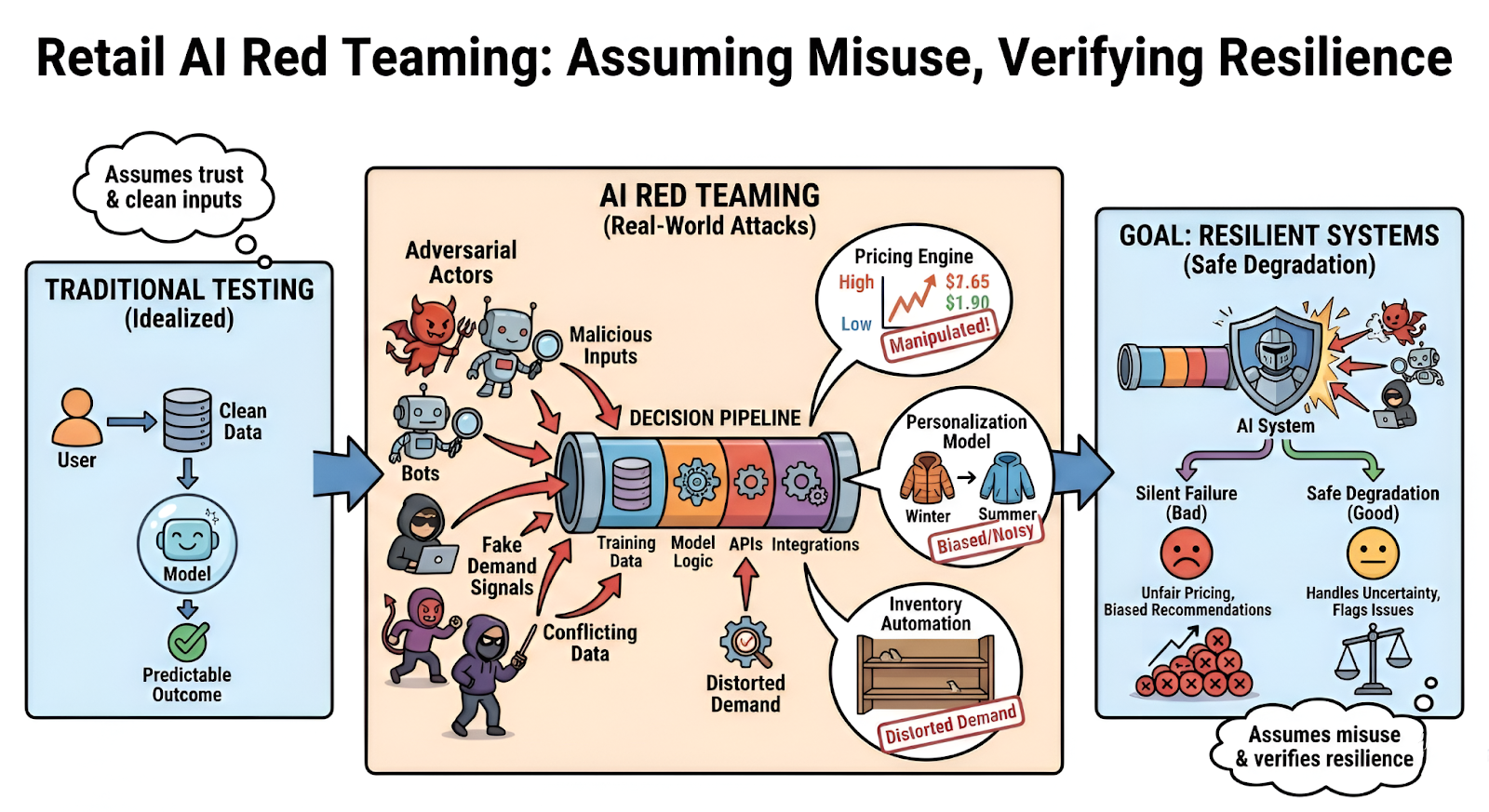

Retail AI red teaming is the act of intentionally attempting to disrupt/abuse/manipulate retail AI systems to test their weaknesses at the point of failure under actual (real world) conditions. As opposed to asking whether your model performs as you expect with the input you provide, AI red teaming in retail will ask the much harder question: what happens when the system is abused?

Traditional Testing methods assume that the data provided is clean, users perform actions as you intend, and the model operates in predetermined parameters. Retail environments typically do not function this way; e.g., bots probe pricing systems, consumers game promotional offers, abnormal behavior creates distortion in demand signal, and noisy/malicious input impacts Personalization Models. AI red teaming considers such abuse as the normative case and not an exception.

In a practical sense, AI red teaming in retail goes significantly beyond validating the accuracy of a model. It tests the entire AI-based decision-making pipeline from Training Data through Feature Engineering, Model Logic, API’s, Prompts, Integrations, and Downstream Automation. The objective is not to validate that a model is accurate, but to identify the assumptions that collapse. Therefore, AI security testing in retail must incorporate Adversarial Thinking and not simply Statistical Validation.

One of the most commonly found retail-specific failure modes using AI red teaming is pricing algorithm manipulation. Dynamic Pricing Engines can be manipulated by repeated browsing of items for sale, coordinated purchasing efforts, artificial creation of demand signals, and feedback loops created inadvertently by the model itself. Often times, these types of behavior appear to be normal consumer behavior that monitoring tools cannot detect. Without the implementation of Adversary Based Testing, Retailers may never recognize that Pricing Decisions are being Exploited Systematically.

As important, retail AI security also must take into account how AI systems behave when they are Uncertain, Incomplete or Wrong. AI red teaming Intentionally Creates Ambiguity, Conflicting Signals, and Edge Cases to Test if the Systems Degrade Safely or Fail Silently. In Retail Environments, Silent Failure Usually Results in Unfair Pricing, Biased Recommendations or Incorrect Inventory Decisions that Compound Over Time.

Finally, AI red teaming in retail Changes the Way Organizations View Risk Management for their AI Systems. Rather than Assuming Trust and Verifying Performance, AI red teaming Assumes Misuse and Verifies Resilience. Through Embedding Adversarial Testing into AI security testing in retail, Organizations will have a Realistic Understanding of How Their AI Systems Perform in the Actual Environment in Which They Operate, Competitive, Dynamic and Continually Under Attack.

3. Why Retail Organizations Need AI red teaming

Retail businesses operate within a very aggressive business environment. Consumers compare prices all of the time, identify loopholes in promotions and test the recommendation systems for bias. Bots, competitors and other consumers who coordinate their activity create artificial demand signals; in this environment, traditional testing will create a serious blind spot. For this reason, AI red teaming is evolving to become a necessary exercise in security testing rather than an optional one.

Retail AI systems make recommendations about price and product, which directly affect a retailer's revenue and customer confidence. If an AI pricing model behaves erratically for just a small percent of users it can quietly deplete margin. If a personalization engine promotes bias, or misclassifies a user's intent, it may harm the retailer's brand reputation, but likely won't trigger any alarms. The focus of AI red teaming in retail is identifying those silent failures before they occur in thousands or millions of transactions.

The primary reason for retailers to utilize AI red teaming, is the ability for AI systems to be exploited without looking malicious. Examples of these exploits include repeatedly viewing products, creating fake scarcity signals (i.e., limited supply), and coordinating purchases, all of which could result in pricing algorithm manipulation without violating any apparent rule(s). To the outside world, the behavior appears legitimate; however, to the inside world, the AI system is being subtly manipulated to produce non-optimal or unfair results. Because traditional monitoring and Quality Assurance (QA) processes are not equipped to detect this type of exploitation, AI security testing in retail becomes a necessity.

In addition to the potential for exploitation, another significant factor to consider is the sheer scale of retail AI systems. These systems do not typically fail dramatically in a single event. Instead, they fail incrementally. An example of incremental failure would be a demand forecasting model that slightly over estimates demand, a pricing engine that slowly adjusts to distorted signals, or a recommendation system that begins to reinforce unintended patterns. Without retail AI security that includes AI red teaming, these problems usually manifest themselves only after losses in sales and/or customer complaints have been incurred.

Increasingly, retailers are facing greater scrutiny regarding fairness, transparency and consumer protection. While dynamic pricing, personalized offers and AI-driven segmentation provide benefits, they can easily fall outside of regulatory and/or ethical standards when models are manipulated or drift over time. AI red teaming in retail provides a defendable method for demonstrating that AI-related risks are being actively identified and tested through realistic misuse scenarios and not simply assumed away.

Ultimately, due to the competitive nature of the retail industry, attackers are quick to adapt. Fraudsters, resellers, and sophisticated customers learn how retail AI systems work and tailor their activities as a result. Continuous challenges of assumptions, and continuous stress testing of AI behaviors, through AI red teaming, enable retailers to keep pace with the adaptive nature of attackers, and protect their margin, reputation and long-term trust with customers.

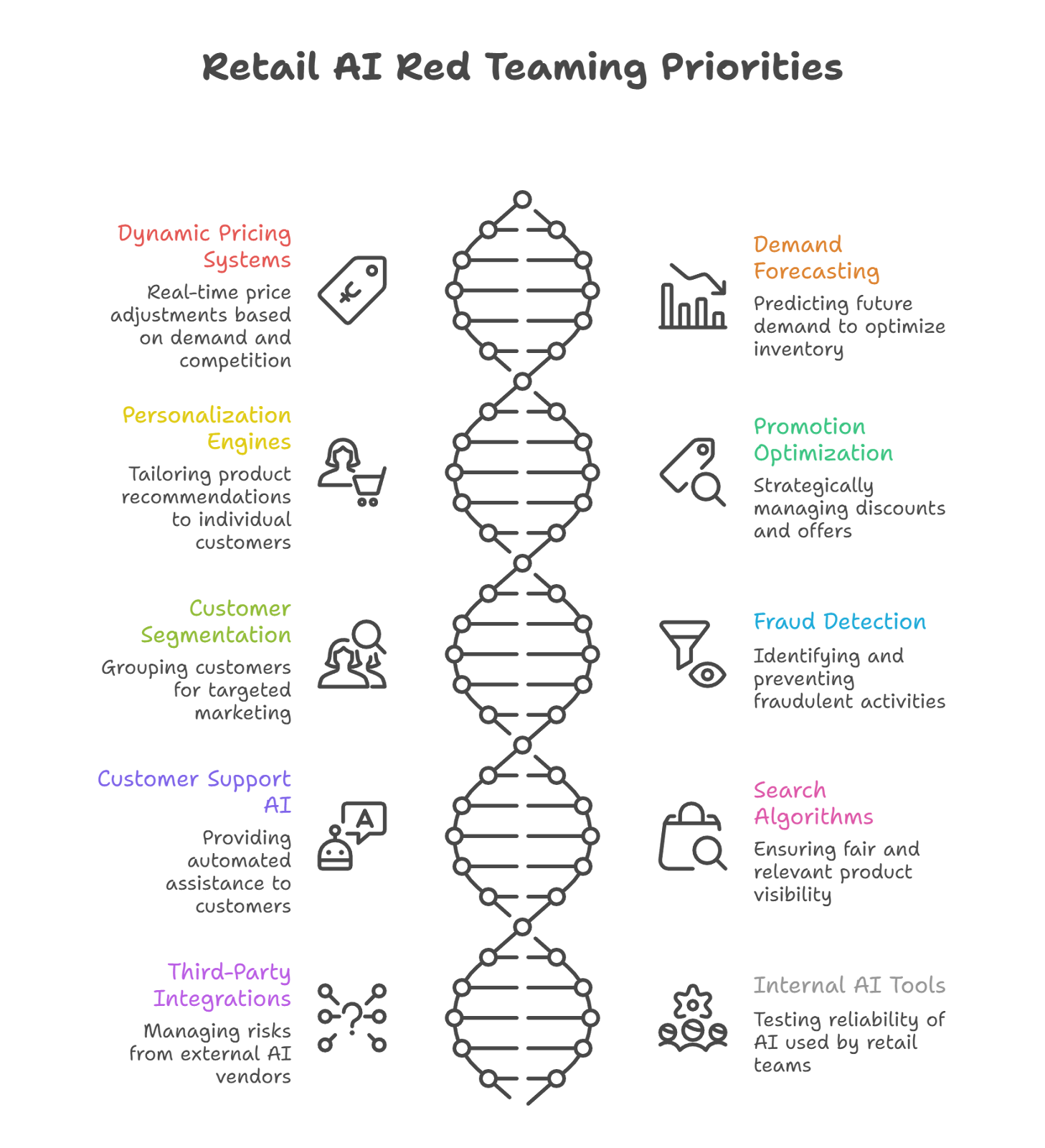

4. Retail AI Systems That Must Be Red Teamed First

Not all retail AI systems carry the same level of risk. Some models influence peripheral decisions, while others directly control pricing, revenue, inventory, and customer experience at massive scale. AI red teaming in retail must therefore prioritize systems where failures are silent, adversarial misuse is realistic, and impact compounds quickly. This section mirrors the structure used in the banking blog, focusing not on what these systems do when they work , but how they fail when stressed, manipulated, or misused.

4.1 AI-Based Dynamic Pricing Systems

Dynamic pricing engines adjust prices in real time based on demand signals, user behavior, competitor pricing, and inventory levels. These systems are prime targets for AI red teaming because small behavioral nudges can influence pricing outcomes at scale.

Red teaming focuses heavily on pricing algorithm manipulation, including repeated browsing patterns, fake scarcity signals, coordinated purchasing, and feedback loops where the model unintentionally reinforces its own distorted assumptions.

Failure Impact:

⟶ Revenue leakage

⟶ Regulatory scrutiny around unfair pricing

⟶ Loss of customer trust

4.2 Demand Forecasting & Inventory Planning AI

Demand forecasting models guide purchasing, replenishment, and supply chain decisions. While these systems appear analytical, they are highly sensitive to distorted data.

Through AI red teaming in retail, teams test how demand models respond to poisoned sales signals, artificial spikes, seasonal edge cases, and delayed corrections.

Failure Impact:

⟶ Overstocking or stockouts

⟶ Supply chain inefficiencies

⟶ Margin erosion

4.3 Personalization & Recommendation Engines

Recommendation systems drive product discovery, cross-selling, and engagement. They also create subtle risks when adversarial behavior shapes exposure.

AI red teaming examines how recommendation logic can be influenced by manipulation, bias amplification, or coordinated interaction patterns that skew visibility.

Failure Impact:

⟶ Reduced conversion rates

⟶ Biased or unfair recommendations

⟶ Brand credibility damage

4.4 Promotion, Discount & Offer Optimization AI

Retailers increasingly rely on AI to decide who receives discounts, when promotions are triggered, and how offers are priced.

AI security testing in retail red teams these systems to uncover loopholes that allow promotion abuse, repeated discount triggering, or unintended incentive loops linked to pricing algorithm manipulation.

Failure Impact:

⟶ Promotion abuse at scale

⟶ Direct financial loss

⟶ Inconsistent customer experiences

4.5 Customer Segmentation & Loyalty Scoring Models

AI-driven segmentation determines loyalty tiers, rewards, and targeting strategies. These systems can quietly fail when segmentation logic is gamed or proxy attributes introduce bias.

Through AI red teaming in retail, testers explore segmentation gaming, unfair clustering, and edge cases where customers are misclassified.

Failure Impact:

⟶ Customer churn

⟶ Legal and fairness concerns

⟶ Erosion of loyalty trust

4.6 Fraud Detection & Abuse Prevention Systems

Retail fraud systems are inherently adversarial. Attackers continuously evolve techniques to bypass controls, making these systems a core focus of AI red teaming.

Adversarial testing simulates return fraud, account abuse, and coordinated low-value attacks that stay below detection thresholds.

Failure Impact:

⟶ Undetected fraud losses

⟶ Increased false positives

⟶ Customer friction

4.7 AI-Powered Customer Support & Shopping Assistants

LLM-powered assistants now guide purchases, answer policy questions, and resolve issues. These systems expand the retail attack surface significantly.

retail AI security testing includes prompt injection, misleading queries, and manipulation that leads to hallucinated policies or unsafe guidance.

Failure Impact:

⟶ Incorrect or non-compliant responses

⟶ Data exposure risks

⟶ Loss of customer confidence

4.8 Search, Ranking & Marketplace Algorithms

Search and ranking AI determines product visibility, seller exposure, and marketplace fairness. These systems are frequently targeted by sellers and bots.

AI red teaming in retail evaluates ranking manipulation, feedback exploitation, and unfair visibility advantages.

Failure Impact:

⟶ Marketplace imbalance

⟶ Seller disputes

⟶ Platform credibility loss

4.9 Third-Party & Vendor Retail AI Integrations

Retailers increasingly rely on external AI for pricing intelligence, recommendations, and advertising optimization. These integrations introduce hidden risk.

AI security testing in retail assesses supply-chain AI risks, cross-system manipulation, and opaque vendor behavior.

Failure Impact:

⟶ Indirect data leakage

⟶ Compliance violations

⟶ Vendor-driven incidents

4.10 Internal AI Tools for Merchandising & Planning Teams

Retail teams now use internal AI copilots for assortment planning, pricing analysis, and forecasting decisions. These tools are often trusted implicitly.

AI red teaming tests hallucinated insights, data leakage via prompts, and over-reliance on flawed outputs.

Failure Impact:

⟶ Poor strategic decisions

⟶ Governance failures

⟶ Financial misallocation

5. How to Test Retail AI Models Effectively

To test retail AI systems effectively there has to be an evolution of the way we validate our retail AI from assumption based validation to adversarial behavior driven testing. Retail AI rarely fails because it is unable to make accurate predictions in a controlled environment; it fails because real world behavior violates the assumptions made in development in small ways. The area where AI red teaming will provide value is by demonstrating how models will behave when there are noisy signals, misaligned incentives and active probing of model boundaries.

The most effective way to start testing begins by understanding that retail AI is not operating in a vacuum; pricing, demand, and personalization models are all reacting to customer behavior, market dynamics, competitive activity and internal workflows. The purpose of AI red teaming in retail is to create the same conditions as a live socio-technical system by creating simulated adversarial traffic, abnormal purchase patterns and coordinated interaction between multiple users and/or bots which mirror the type of behavior you would expect from real users and bots at scale.

An additional component of AI security testing in retail is to take the decision logic and subject it to extreme testing (not just output) by challenging feature dependencies, feedback loops, and confidence thresholds to determine how models behave under duress. For example, in a pricing system, repeated exposure testing may be used to discover algorithmic pricing manipulation through browser behavior, cart abandonment patterns and artificially created demand signals. Testing may expose vulnerabilities that appear harmless in isolation but could lead to long-term damage when combined.

Failure behavior is another key dimension. Retail AI systems are often optimized for high levels of performance, not high levels of resilience. Therefore, retail AI security testing should also examine how a system behaves in the event of uncertainty, missing data, and conflicting business rule predictions. AI red teaming creates ambiguous situations to determine if systems fail safely, escalate correctly, or continue to produce confident and erroneous results.

Finally, continuity is essential. Retail environments are constantly changing -- prices change, promotions change, demand changes and consumer behavior changes. As such, one time testing does not remain relevant. Including AI red teaming in retail in continuous testing cycles enables retailers to identify new exploitation pathways as their models retrain, their features change, and their integration increases. Continuous testing ensures that AI security testing in retail continues to reflect real world risks, not static documentation.

Ultimately, testing retail AI systems effectively is not about showing that models work, it is about showing that models can withstand misuse without silently harming consumers. By combining adversarial scenarios, behavioral stress testing, and continuous testing, AI red teaming provides a way to transform testing of retail AI from a compliance activity to a viable risk management tool.

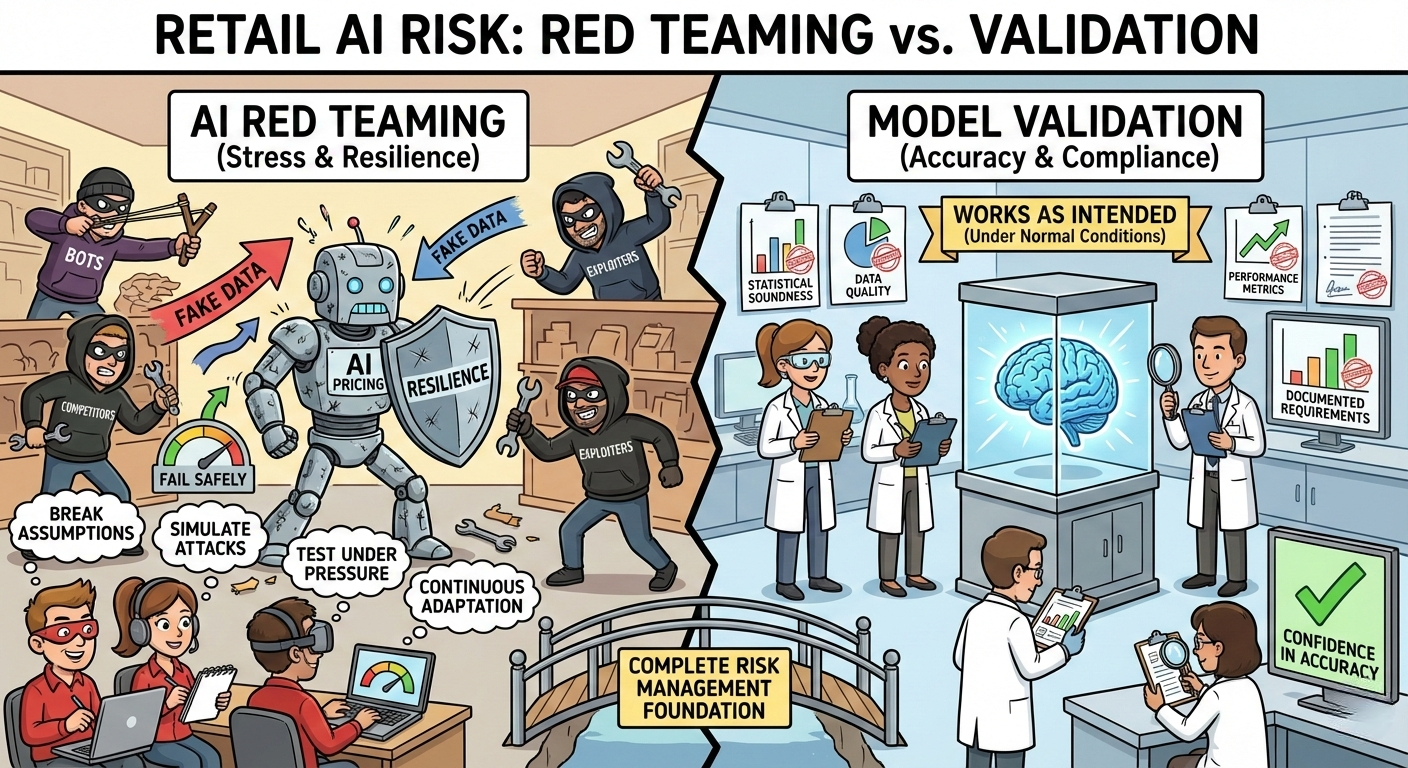

6. AI red teaming vs Model Validation in Retail

Retail organizations often assume that strong model validation automatically means AI systems are safe. In practice, validation and adversarial testing serve very different purposes. Understanding this distinction is critical for building resilient retail AI systems.

6.1 AI red teaming in retail

AI red teaming is designed to answer one core question: how does this AI system fail when assumptions break?

In retail, assumptions break constantly , customers behave unpredictably, competitors react strategically, bots probe pricing logic, and incentives invite exploitation. AI red teaming in retail starts from the premise that misuse is inevitable.

Rather than evaluating whether a model meets predefined performance thresholds, AI red teaming in retail deliberately simulates adversarial conditions. This includes testing coordinated behavior, incentive abuse, feedback loops, and scenarios such as pricing algorithm manipulation that look legitimate but systematically distort outcomes. The objective is not confidence in accuracy, but evidence of resilience.

From a security perspective, AI security testing in retail through red teaming focuses on behavior over metrics. It evaluates how AI systems respond under pressure, how errors propagate across workflows, and whether failures surface early or remain silent. This makes retail AI security proactive rather than reactive, helping organizations discover risk before customers or regulators do.

Most importantly, AI red teaming is continuous. As models retrain, features evolve, and retail environments shift, new exploitation paths emerge. Red teaming adapts alongside these changes, ensuring AI systems remain robust in the face of evolving real-world behavior.

6.2 Model Validation in Retail

Model validation, by contrast, is focused on whether an AI system works as intended. It assesses statistical soundness, data quality, performance metrics, explainability, and alignment with documented requirements. In retail, validation confirms that a pricing model predicts demand accurately or that a recommendation system improves engagement under expected conditions.

While essential, model validation operates under controlled assumptions. Inputs are assumed to be representative, users are assumed to behave normally, and systems are assumed to be used as designed. Validation does not typically explore adversarial incentives or coordinated manipulation. As a result, validated models can still be vulnerable to pricing algorithm manipulation, bias amplification, or silent drift once deployed.

From a governance standpoint, validation provides necessary documentation and assurance, but it does not provide behavioral evidence. It explains why a model should work , not how it behaves when stressed. This is why relying on validation alone leaves significant gaps in retail AI security , especially in competitive and adversarial retail environments.

6.3 Why Retail Needs Both

The most mature retail AI programs do not choose between validation and AI red teaming , they use both. Validation establishes baseline trust, while AI red teaming in retail actively tests whether that trust holds under real-world conditions. Together, they form a complete risk management approach.

By integrating adversarial testing into ongoing AI security testing in retail, retailers gain confidence not just in how models perform, but in how they fail , and how quickly those failures can be detected and contained.

7. Conclusion

Retail AI systems now sit at the center of pricing decisions, demand planning, personalization, and customer engagement. These systems influence revenue in real time and at massive scale. As a result, the most damaging AI failures in retail are rarely obvious technical breakdowns. They are silent, compounding errors driven by misuse, incentives, and behaviors that traditional testing never anticipated.

This is why AI red teaming has become a foundational control for modern retail organizations. By assuming that AI systems will be stressed, probed, and manipulated, AI red teaming in retail exposes failure modes that remain invisible to performance testing and model validation. Whether it is subtle pricing algorithm manipulation, distorted demand signals, or biased personalization outcomes, adversarial testing reveals how AI behaves when the real world refuses to follow clean assumptions.

Effective AI security testing in retail requires more than one-time assessments. Retail environments evolve constantly , pricing strategies change, promotions rotate, customer behavior adapts, and competitors respond. Continuous adversarial testing ensures that retail AI security keeps pace with these dynamics, allowing organizations to detect new exploitation paths before they impact margins or customer trust.

This is where WizSumo plays a critical role. WizSumo provides specialized AI red teaming services designed to test high-risk retail AI systems across pricing, demand forecasting, personalization, fraud detection, and customer-facing AI. By combining deep adversarial expertise with retail domain understanding, WizSumo helps organizations operationalize AI red teaming in retail as an ongoing risk-control function rather than a reactive security exercise.

Ultimately, secure retail AI is not achieved by assuming models are correct. It is achieved by proving that systems can withstand misuse without causing silent harm. Retailers that embed AI red teaming into their AI lifecycle will be far better positioned to scale AI responsibly, protect revenue, and maintain customer trust in an increasingly adversarial digital marketplace.

“Retail AI rarely fails loudly, it leaks revenue quietly, one decision at a time.”

.svg)

.png)

.svg)

.png)

.png)