AI red teaming in SaaS : Stress-Testing AI Copilots and Enterprise Automation

.png)

Key Takeaways

AI red teaming in SaaS focuses on how AI behaves under misuse, not just how it performs.

prompt injection in SaaS remains one of the most tested and exploited AI weaknesses.

Traditional AI security testing in SaaS misses behavioral and scale-driven failures.

SaaS AI security requires adversarial testing across chatbots, APIs, and automations.

Continuous AI red teaming is essential as SaaS platforms evolve and scale AI features.

1. Introduction

AI-based functionality is now central to all modern SaaS applications. These include in-app assistants (e.g., “copilot”), customer service chatbots, automated workflow processes, and decision-making engines powered by artificial intelligence. This is where an organization’s attack surface has expanded from simply being based upon their infrastructure or APIs – it now includes the behavior of models, the nature of prompts, and the AI-based actions taken by a SaaS application.

As a result of this integration, organizations using SaaS have a new challenge related to the need to develop and execute AI red teaming activities specifically designed to test the AI-based features of their SaaS applications. The process of AI red teaming is significantly different from a traditional security review of a company's SaaS application. Traditional security reviews typically involve reviewing how well an organization has implemented security controls into their SaaS application. However, AI red teaming involves simulating the behavior of an attacker or a malicious user interacting with the SaaS application and its AI-based features. Since SaaS applications are often multi-tenant, share common models and utilize user generated input, small issues related to AI may rapidly escalate into a major issue across the entire SaaS platform.

Given the fact that AI security testing in SaaS is insufficient when used as a stand-alone method to provide effective SaaS AI security, it is necessary to test how AI systems perform under duress, misuse, and intentional manipulation – not just whether they function as intended. "Red teaming" provides SaaS teams the ability to identify failure modes within their AI systems that were previously undetectable through typical software development and quality assurance testing.

In the context of AI red teaming in SaaS, proactive identification of potential misuse of AI-based features prior to their delivery to customers is critical. Organizations should focus on identifying and mitigating real-world risks associated with their SaaS applications including prompt injection in SaaS.

2. Understanding AI red teaming in SaaS

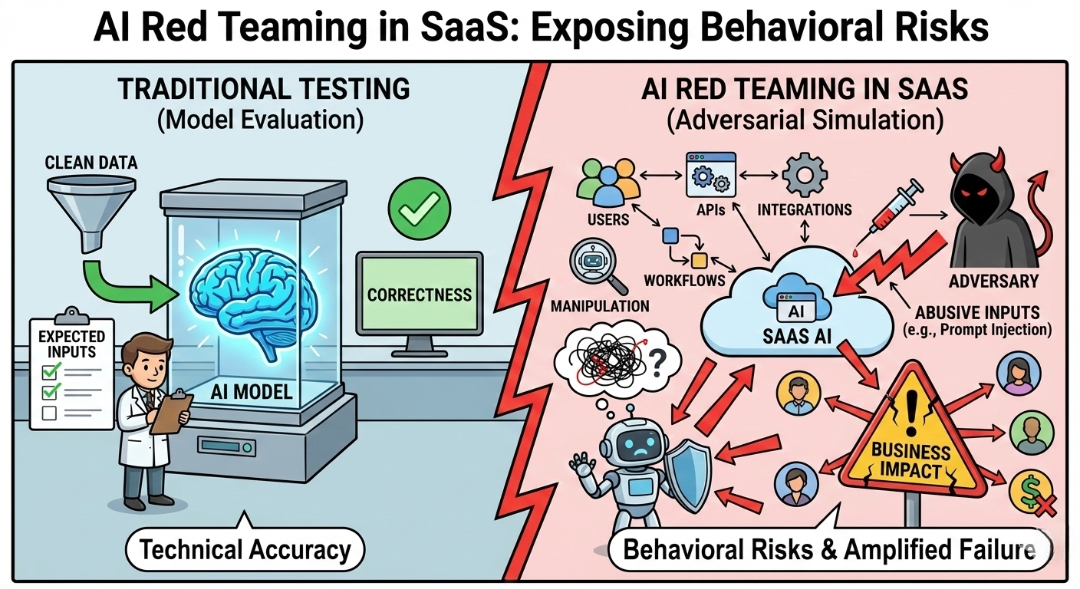

In order to better understand why AI red teaming is so important for SaaS providers; we need to clearly distinguish between “Red Team Testing” (and Model Evaluation) and AI red teaming in SaaS environments. As opposed to being an isolated component within a system, AI is constantly exposed to users, API’s, Integrations, and Automation Workflows. Therefore, this exposure has changed where and how risk will appear.

AI red teaming in SaaS, is essentially simulating what happens when an adversary user interacts with the AI aspects of your platform in a production-like environment. Rather than determining if a model performs as expected, AI red teaming is simply asking the opposite question; What does your AI do when someone misuses it?

Unlike static assessments, AI security testing in SaaS through red teaming focuses on:

⟶ Abusive inputs rather than expected inputs

⟶ Manipulation instead of correctness

⟶ Business impact instead of technical accuracy

This approach is essential for SaaS AI security because SaaS products operate at scale. A single weakness—such as prompt injection in SaaS—can be exploited repeatedly across tenants, users, and workflows, amplifying the impact far beyond an isolated failure.

In short, AI red teaming in SaaS exists to expose behavioral risks that normal testing assumes will never happen—but attackers actively seek.

3. Why SaaS Platforms Are High-Risk AI Environments

The architecture, deployment, and use of AI in SaaS platforms creates a unique set of risks that differ from those created by standalone AI applications. The continuous operation of SaaS AI applications; simultaneous service to multiple customers; and exposure to unanticipated user behavior all contribute to the necessity of AI red teaming in SaaS environments.

The greatest risk multiplier for AI in SaaS is scale. An individual AI feature (e.g., a chatbot, automation engine) may be accessed by thousands of users at the same time. Therefore, what could be a minor vulnerability in isolation becomes a major vulnerability when it can be exploited repeatedly. As such, AI red teaming in SaaS is focused on assessing the ability of attackers to exploit vulnerabilities at scale, versus focusing solely on evaluating the likelihood of isolated failures.

Multi-tenancy is another key factor contributing to the risk associated with AI in SaaS. Many SaaS providers utilize shared models and shared infrastructure to provide services to their customers. This increases the potential impact of a failure in AI within a SaaS environment, making it essential to perform thorough "adversarial testing" to identify vulnerabilities prior to exploitation. If the testing does not evaluate how weaknesses in SaaS AI security may allow cross-tenant inference, expose sensitive information about other tenants, or cause models to behave in ways that violate customer boundaries, then the testing will be incomplete.

In addition to multi-tenancy, APIs have increased the attack surface for SaaS-based AI applications. While many AI applications in SaaS environments provide a user interface to interact with them, most also make available some form of API to access their capabilities. For example, APIs, integrations, and automation triggers provide mechanisms for users to invoke the AI application programmatically. Therefore, testing for "AI security in SaaS" will be incomplete if the test only evaluates the security of the API itself and fails to evaluate how the AI application behaves behind those APIs.

Lastly, SaaS applications typically require a significant amount of user-generated content to function effectively. This includes natural language entered into fields by users, configuration data provided to the system, and feedback generated by users. All of these types of user-generated content represent opportunities for attackers to inject malicious prompts into the SaaS application. Given the constant flow of user-generated content, prompt injection in SaaS is not an edge case, but a realistic risk that should be tested using AI red teaming to ensure the SaaS application remains secure against attacks.

4. AI red teaming Use Cases in SaaS (Core Testing Areas)

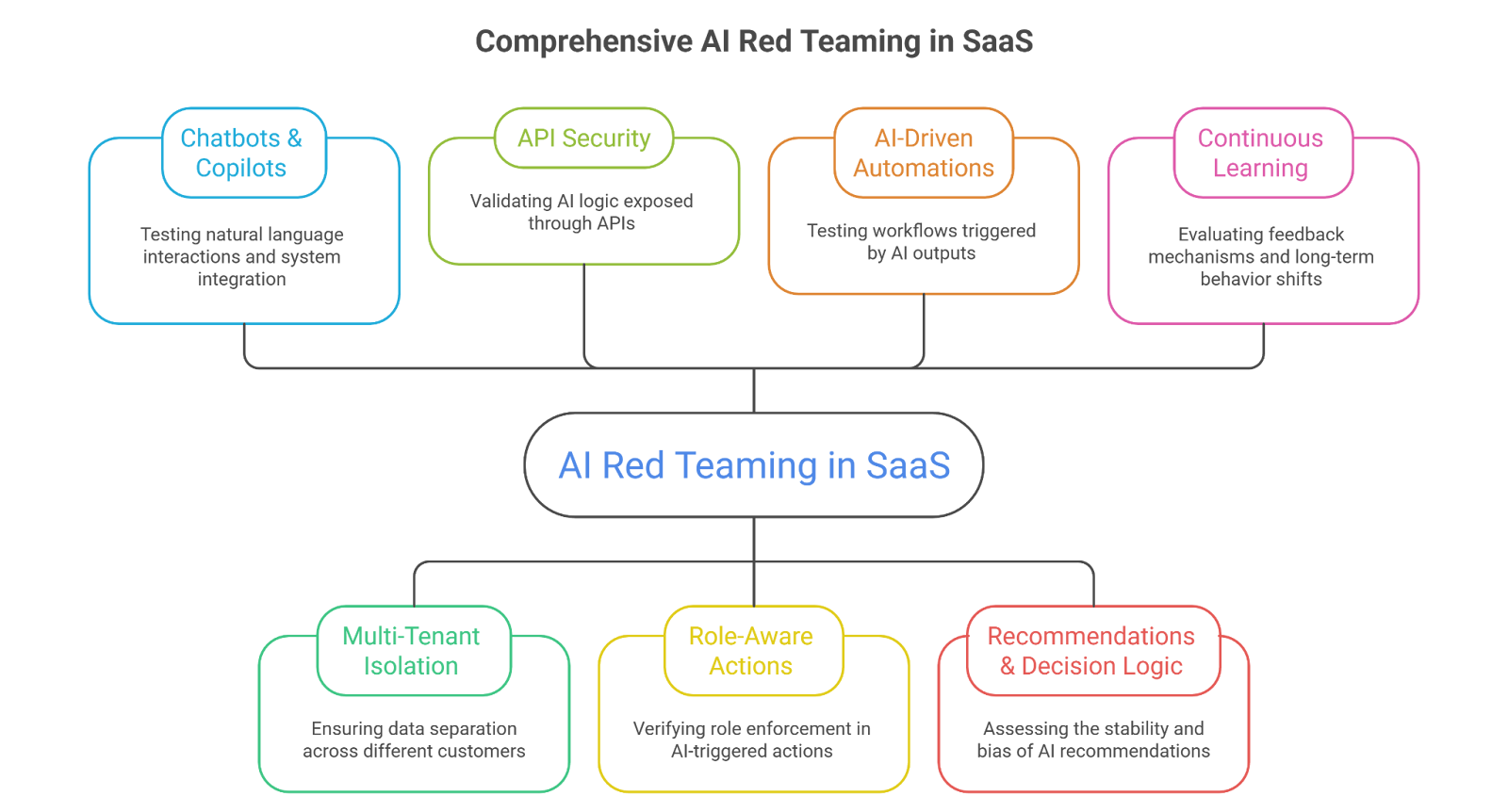

This section focuses on what exactly is red teamed inside SaaS products. Each use case represents a real AI capability commonly deployed in SaaS and the adversarial testing performed against it through AI red teaming and AI red teaming in SaaS. These are not theoretical risks—they are practical testing surfaces where AI security testing in SaaS must be applied deliberately.

4.1 Red Teaming AI-Powered SaaS Chatbots & Copilots

AI chatbots and copilots are often the first AI features exposed to users in SaaS platforms. They handle natural language inputs continuously and interact with internal systems, making them a primary focus of AI red teaming in SaaS.

What is tested

⟶ System prompt and instruction integrity

⟶ Context retention and session boundaries

⟶ Output control under adversarial inputs

How AI red teaming is performed

⟶ Simulating malicious user conversations

⟶ Chaining prompts to override safeguards

⟶ Repeated prompt injection in SaaS attempts across sessions and users

4.2 Red Teaming Multi-Tenant AI Response Isolation

In SaaS platforms, AI models are often shared across multiple customers. This creates a need to explicitly test isolation guarantees through AI red teaming.

What is tested

⟶ Tenant-level response separation

⟶ Indirect inference of other tenants’ data

⟶ Behavioral changes based on shared model context

How AI red teaming is performed

⟶ Adversarial querying to surface latent data patterns

⟶ Testing AI responses under simulated multi-tenant load

⟶ Comparing outputs across controlled tenant scenarios

4.3 Red Teaming AI Behind SaaS APIs

Many SaaS AI capabilities are consumed programmatically rather than through UI. This makes API-level AI behavior a core AI red teaming in SaaS use case.

What is tested

⟶ AI logic exposed via REST or GraphQL APIs

⟶ Automation endpoints invoking AI decisions

⟶ AI behavior under malformed or manipulated payloads

How AI red teaming is performed

⟶ Crafting adversarial API requests

⟶ Chaining calls to influence AI outcomes

⟶ Testing AI behavior beyond standard API validation

4.4 Red Teaming Role-Aware AI Actions

SaaS platforms enforce role-based permissions, but AI systems often act on behalf of users. This creates a critical testing surface for SaaS AI security.

What is tested

⟶ Role enforcement within AI-triggered actions

⟶ AI behavior under conflicting role contexts

⟶ Implicit privilege escalation through AI prompts

How AI red teaming is performed

⟶ Prompting AI to execute restricted operations

⟶ Testing AI responses across different user roles

⟶ Simulating boundary violations without system compromise

4.5 Red Teaming AI-Driven Automations & Workflows

AI-driven automation is a defining feature of modern SaaS platforms. These workflows must be adversarially tested because AI outputs often trigger real actions.

What is tested

⟶ AI-triggered approvals and executions

⟶ Workflow chaining based on AI output

⟶ Guardrails around automated actions

How AI red teaming is performed

⟶ Injecting adversarial instructions into automation prompts

⟶ Forcing edge-case outputs that trigger workflows

⟶ Testing unintended downstream effects

4.6 Red Teaming AI Recommendations & Decision Logic

Many SaaS platforms rely on AI-generated recommendations to guide user decisions and platform behavior.

What is tested

⟶ Stability of AI recommendations under manipulation

⟶ Bias amplification through structured inputs

⟶ Confidence levels of incorrect AI outputs

How AI red teaming is performed

⟶ Steering AI toward incorrect conclusions

⟶ Testing recommendation drift under adversarial prompts

⟶ Observing consistency across repeated attacks

4.7 Red Teaming Continuous Learning & Feedback Loops

Some SaaS products improve AI behavior using user feedback or interaction data. These feedback mechanisms must be adversarially tested.

What is tested

⟶ Feedback integrity

⟶ Model sensitivity to repeated inputs

⟶ Long-term behavior shifts

How AI red teaming is performed

⟶ Injecting biased or malicious feedback

⟶ Gradually influencing model behavior over time

⟶ Monitoring degradation patterns

5. Key Benefits of AI red teaming for SaaS Companies

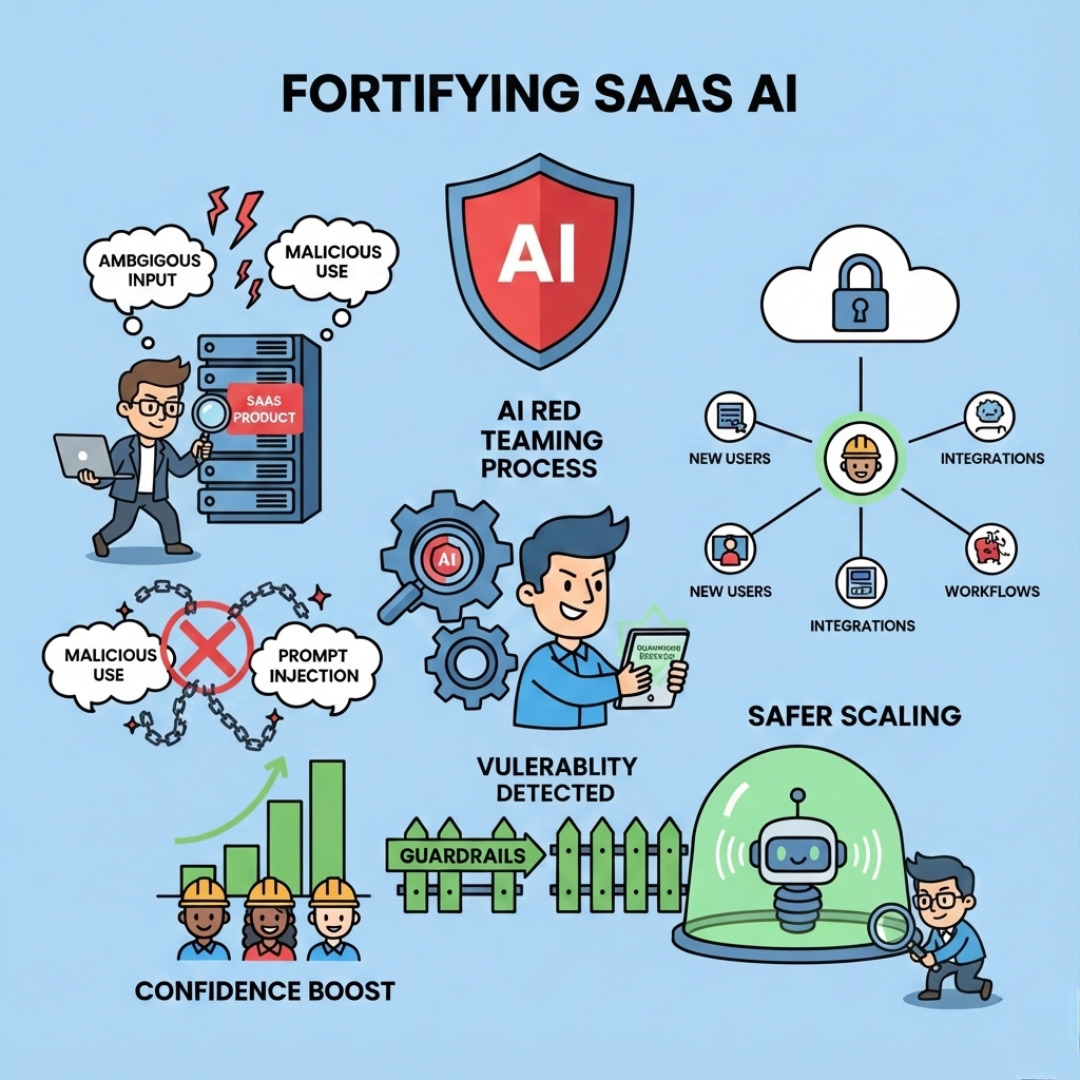

The key to identifying potential issues with AI within your SaaS product is through real world use (not just through testing) once the AI capabilities are implemented in the product. That is exactly what AI red teaming provides for you. Through continuous stress testing of AI behavior, AI red teaming in SaaS allows you to discover vulnerabilities as soon as possible so that you may address those before an issue becomes an incident for your customers.

One of the greatest benefits of this process is the ability to see where your AI has failed and why. Traditional testing will verify that your AI does work but AI security testing in SaaS via Red Team testing will show you how your AI has failed under malicious use. A few examples of how AI red teaming can provide insight into your AI include showing you how ambiguous input to your AI can result in poor output, or how certain types of input can cause your AI to behave differently than expected. Another example would be prompt injection in SaaS and how repeated prompt injection could potentially cause your AI to fail or behave poorly in ways that your Quality Assurance team cannot simulate.

Another benefit of AI red teaming is to improve the confidence of your Operational Teams. Your Operational Teams can deploy products more quickly and efficiently if they have faith in the controls of their AI. Through AI red teaming, your Operational Teams will understand where their Guardrails are strong and where their AI behavior may be deviating from the intended behavior. This will directly increase the AI security testing in SaaS by reducing the number of unknown failure points in Chatbots, APIs, Automations etc.

Lastly, AI red teaming in SaaS will support safer scaling. The longer you allow even a small weakness in your AI to exist, the greater it's potential to grow and cause problems as your user base continues to expand. Continuously conducting Adversarial Testing on your AI will ensure that your AI behaves predictably, consistently and in accordance with the rules of your SaaS Platform regardless of the amount of new users, integrations, and workflows that are being introduced.

6. Conclusion

While many SaaS businesses still view AI as an option (not a requirement) within their SaaS product offerings, the reality is that AI is becoming an essential element of all SaaS products. In other words, as SaaS businesses continue to implement AI across multiple areas of their platform including chatbots, APIs, automation, and decision-making systems, the biggest risk is no longer if the AI will work, but rather what happens when the AI is misused.

This is precisely why AI red teaming is no longer optional for SaaS companies. Through AI red teaming in SaaS, organizations are able to programmatically emulate adversarial behaviors against actual AI workflows to identify vulnerabilities that would normally be missed through traditional testing methods. The benefits of this method include enhancing SaaS AI security by illustrating how AI reacts to misuse, manipulation, and repeated exploitation at scale. Combining AI red teaming in SaaS with Ongoing SaaS AI security provides assurance that AI systems are secure, as both testing methodologies take into account evolving products and increasing usage.

One of the most consistent threats facing SaaS-based organizations is prompt injection in SaaS. These types of vulnerabilities exist primarily in user-facing and API-driven AI elements. Oftentimes, without the inclusion of adversarial testing, these vulnerabilities will go unnoticed until they are exploited in production. AI red teaming identifies these potential vulnerabilities before they affect customers or harm an organization's reputation.

WizSumo specializes in AI red teaming in SaaS. We provide SaaS organizations with the ability to test their AI systems the same way a malicious attacker or an abusive user would. Our approach to testing is centered around identifying practical vulnerabilities based on AI system behaviors throughout its entire "Lifecyle" -- before, not after an incident occurs.

“AI doesn’t fail in theory, it fails when real users push it in unexpected ways.”

.svg)

.png)

.svg)

.png)

.png)