Building Trust in the Age of Algorithms: AI Guardrails for User Wellbeing and Ethical Design



1. Introduction

AI is changing more than how we work; it is changing how we feel, think and behave. From the personalized notification that greets us when we wake up, to the AI-driven playlists that set our mood, to the recommendation engines that decide what we watch, AI has become the unseen architect of our lived experience.

And yet, within that power lies a quiet but growing concern: what happens when AI begins to affect not only our decisions but also our feelings and wellbeing? In the last few years, we have come to notice how simple design decisions, whether the sound of a notification, a feed-selection algorithm, or a chatbot's compassionate tone, can dramatically affect human behavior. Systems tuned for engagement can lead users down paths of addiction, overuse, anxiety and even emotional dependence.

Enter “AI guardrails.” Think of them as ethical boundaries built into the AI systems we create, ensuring that technology serves human wellbeing rather than intruding on it. Without such boundaries, we risk entering an era in which algorithms are better at capturing and holding our attention than we are at guarding it.

Counterintuitive as it may seem, creating ethical AI requires going beyond compliance and transparency checklists. It demands an understanding of human psychology, of people's emotional limits, and of mental wellbeing as something AI design must treat with care. Preventing AI manipulation, in which systems steer user behavior toward engagement or financial gain, often without the user's awareness, becomes an important goal.

The question, therefore, is no longer “Can AI think?” but “Can AI care?” and if not, how can we strictly prevent it from harming those who do?

At WizSumo, we're exploring how AI guardrails can help prevent these ethical and emotional harms, from emotional AI manipulation prevention to protecting vulnerable populations from manipulative content in AI systems.

What This Blog Covers

In this blog, we’ll explore:

⟶ How AI affects user wellbeing and mental health.

⟶ Where AI goes wrong — and how manipulation happens.

⟶ Real-world examples of emotional harm caused by AI systems.

⟶ The ethical principles behind AI guardrails.

⟶ Practical ways companies can build ethical AI and prevent manipulation.

⟶ How the next generation of AI guardrails will protect users from emotional, psychological, and ethical harm.

2. The Problem: When AI Starts Controlling the User

2.1 How AI Manipulation Impacts User Wellbeing

AI systems are built to learn what we like, but in doing so, they also learn how to steer what we do next.

Every “you might also like” suggestion, every push notification, every chat reply is engineered to hold our attention. The longer we engage, the more data the AI collects and the more powerful its influence becomes.

This is the backdrop for AI manipulation: algorithms shaping not only our experiences but also our emotions.

Users feel they hold the reins, but the system design behind the scenes steers their behavior toward maximum engagement, not necessarily toward wellbeing.

So, how does AI affect mental health?

It starts with overstimulation. Endless feeds, personalized content and predictive prompts keep the brain chasing the next dopamine hit. Studies suggest these patterns can contribute to digital fatigue, anxiety and an eroded sense of self-worth, especially among younger users.

This is not merely a matter of poorly designed systems; it reflects a lack of AI guardrails that could stop these systems from encroaching on human attention.

2.2 What Happens When Ethical AI Is Ignored?

When developers ignore ethical AI principles, they build systems that maximize engagement, retention and profit metrics instead of human outcomes. The result is AI misuse and ethics violations that harm users' mental and emotional health, unchecked.

Examples:

⟶ Chatbots that simulate empathy and foster emotional dependence.

⟶ Recommender algorithms that worsen polarization or anxiety.

⟶ Game AI that extends play time by covertly rewarding time spent, a form of behavioral conditioning.

Without ethical design, AI systems cross an invisible line: instead of serving the user, they start using the user.

💬“What is AI manipulation and how does it happen?”

- AI manipulation occurs when AI systems, trained on behavioral data, learn to push the emotional buttons that keep users engaged, usually at the expense of their autonomy, welfare or mental balance.

2.3 Real-World Impacts: Addiction, Anxiety & Loss of Agency

In a world increasingly shaped by algorithms, addiction and overuse are no longer accidental outcomes; they are engineered ones.

AI techniques can create feedback (reward) loops that induce compulsive use by preying on people's natural psychological weaknesses.

For example:

⟶ Social media reward systems in which “likes” arrive unpredictably, keeping people coming back to check.

⟶ Video platforms designed so that stopping is hard, pulling viewers into endless viewing.

⟶ Chat systems that blur the line between digital empathy and emotional control.

So yes, AI can make people addicted to screens and apps, not because of any lack of self-control on their part, but because that is what it was designed to do.

When ethics fail to keep pace with technology, users risk becoming subordinate to the machine. The AI becomes the leader, telling people what to see, what to feel and how long to stay. That is the danger of this imbalance: machinery meant to work for humanity ends up reshaping human behavior itself.

To Sum Up:

AI has reached the point where it can effectively influence human actions, shaping decisions, feelings and even a group's shared values, often without people being fully aware of it.

Without firm ethical guardrails governing AI, we can expect systems that automatically steer human emotions, with human wellbeing reduced to a remote and unconsidered factor.

3. Real-Life Examples of AI Misuse and Emotional Manipulation

When people talk about AI manipulation, it usually sounds theoretical, something happening deep in the code, out of view. But we experience it every day. It arrives as suggestions, notifications or consoling words from a chatbot.

AI does not announce its presence; it whispers through design.

The following examples show how seemingly harmless AI systems can affect our feelings, our decisions and our mental health. They all point to one conclusion: without AI guardrails, even the best technology can go wrong.

3.1 The Engagement Trap: AI and Addiction

Most digital platforms share one goal: keep users engaged. And AI has become the most effective tool for doing so.

From video platforms that autoplay the next clip to social media timelines that know exactly what makes you stop, AI manipulation has become a science of attention. These algorithms monitor micro-behaviors (how long you hover, what you skip, what you scroll back to) to determine what will keep you glued to the screen longer.

This kind of design is not only persuasive; it is addictive by design.

It asks a painful question many users do not even realize they are asking:

💬 “Can AI make people addicted to screens or apps?”

The answer is yes, not because AI is inherently bad, but because the goals guiding it are often the wrong ones. When engagement equals profit, user wellbeing becomes collateral damage.

The result is a growing population of users stuck in cycles of overstimulation, anxious when disconnected and restless when connected. This is AI manipulation in its quietest, most normalized form.

3.2 When AI Becomes Too Personal

Now consider an AI that doesn’t just recommend videos. It talks back.

Conversational AIs and virtual companions are especially emotionally evocative: they simulate empathy and compassion. While they can help people cope with isolation, they also open the door to AI misuse and ethics questions around emotional reliance.

A chatbot that can “remember” how you feel or mimic your emotional tone can blur the line between computer-aided therapy and emotional manipulation. Users may come to depend on the AI for validation, belonging and social connection, perhaps even more than they depend on the people in their lives.

💬“How can we stop AI from emotionally manipulating users?”

- By instituting AI guardrails that define appropriate emotional responses, so that chatbots can recognize the emotional content and implications of a conversation without exploiting that knowledge.

At the center of emotional AI manipulation prevention is the idea that these systems should be trained not only for data accuracy but also for emotional safety.

Without that training, AI companionship systems may become harmful rather than helpful, particularly for already vulnerable people whose emotional stability is strained by isolation and fear.

3.3 Vulnerable Populations Under Threat

Not every user is equally exposed to risk. Kids, teens and seniors are especially vulnerable to manipulative AI content because they may be less able to recognize or resist it.

From recommendation systems targeting kids to biased educational bots, these users often experience subtle but dangerous manipulation. When AI systems promote unhealthy body images, reinforce stereotypes, or serve emotionally charged content to impressionable minds, it is not merely a technical problem of AI bias and fairness; it is a moral failure.

💬 “Are children and teens more at risk from harmful AI systems?”

- Yes. Their cognitive and emotional development makes them easier to manipulate, and without ethical AI guardrails, social media and similar platforms can inadvertently amplify the harm.

That is why modern AI guardrails are not merely a matter of privacy or bias avoidance — they are about the right to mental safety, especially for the most vulnerable.

In Reflection

What is so surprising about all of these examples is how inadvertent the damaging effects are. No one sets out to build an AI that generates anxiety or addiction.

But in the rush for profit and innovation, the ethical AI principles and the AI misuse and ethics safeguards are often allowed to slide.

The result is systems that shape user behavior more than their builders realize, quietly redefining what it means to be in control of one's own mind.

4. The Ethical Dimension: Where AI Crosses the Moral Line

After seeing how AI can hijack our emotions, commandeer our attention and change our mental state, the next big question is: why does this keep happening, and where exactly does AI cross the moral line?

The answer can be summarized in one word: ethics. More precisely, the absence of ethics. Every algorithm has a purpose: to optimize, to predict, to adapt. But when those purposes are divorced from human values, AI becomes indifferent to human welfare.

In that indifference lie the seeds of ethical failure. Ethics is what gives technology a conscience, and AI guardrails are a way of expressing that conscience in code. Without them, we risk building systems that satisfy performance metrics but ignore the people those metrics are supposed to represent.

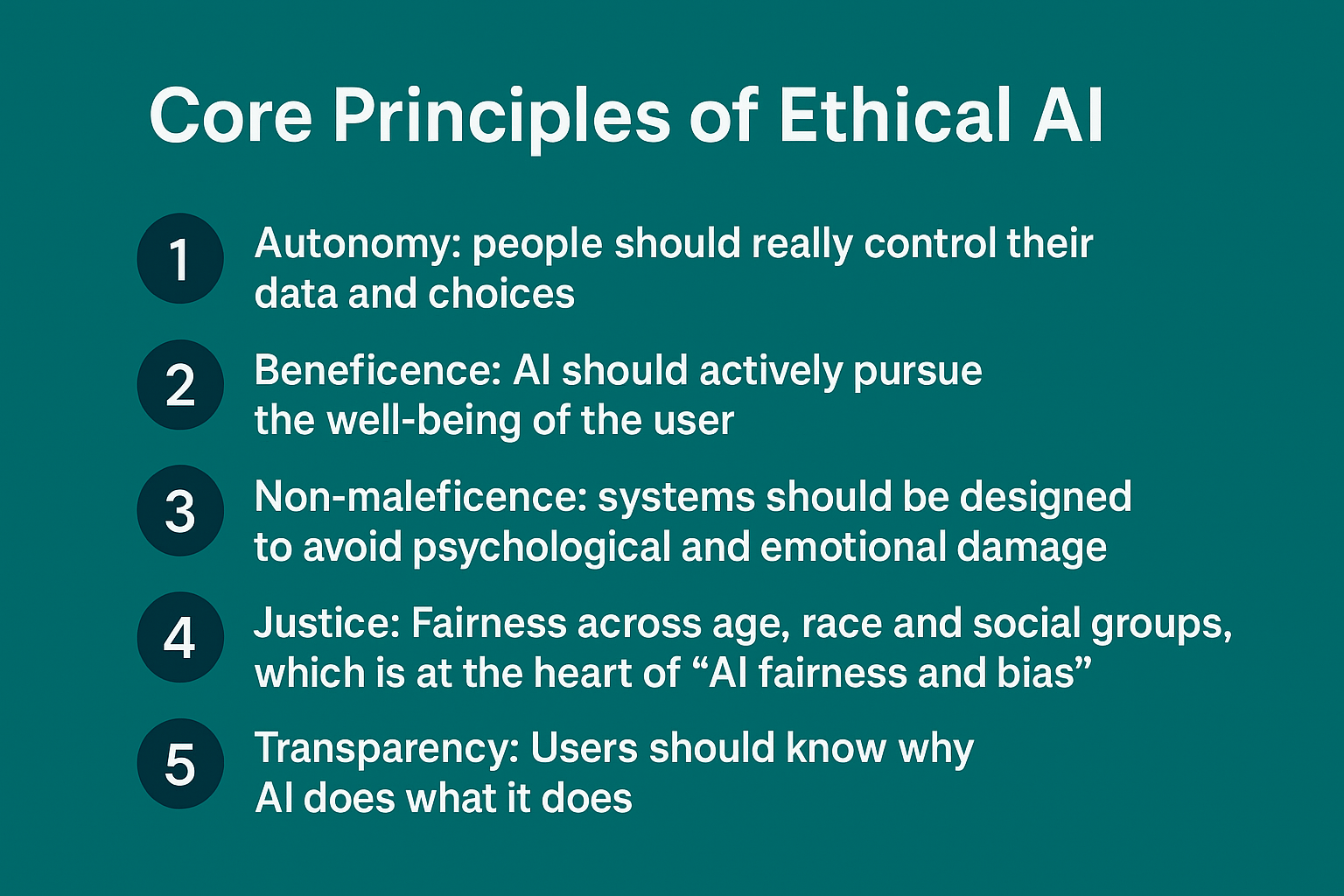

4.1 Core Ethical Principles Behind AI Guardrails

Ethical AI rests on time-honored moral foundations: doing good, doing no harm, doing justice and respecting autonomy. These are more than philosophical ideas; they are fundamental design principles for any responsible AI deployment.

Here are five that drive modern AI ethics:

- Autonomy: people should remain in control of their data and choices.

- Beneficence: AI should actively pursue the wellbeing of the user.

- Non-maleficence: systems should be designed to avoid psychological and emotional harm.

- Justice: fairness across age, race and social groups, which is at the heart of AI bias and fairness.

- Transparency: users should know why AI does what it does.

💬 “Why do we need ethical AI today?”

- Because without ethics governing its design, AI will keep optimizing for proxy targets such as clicks, engagement and time spent, rather than for what is good for the human mind.

Establishing AI guardrails grounded in these principles helps ensure that AI not only performs well but also behaves responsibly.

4.2 AI Bias and Fairness: A Persistent Ethical Failure

AI systems tend to acquire bias despite the best of intentions. The bias may come from the data they learn from or from the objective they are trained to predict. It is not a simple technical bug but a reflection of human inequality translated into code.

Biased software, such as hiring models that favor men, credit systems that favor one community, or medical diagnostic systems that discriminate against certain groups, violates the principle of fairness, a core tenet of ethical AI.

How do we ensure that AI is fair and unbiased? By putting AI guardrails in place that continuously monitor and audit for bias.

Such methods include:

⟶ Bias detection tests run on models before deployment.

⟶ Demographically diverse sampling to reduce structural bias.

⟶ Explainable AI, so that decisions can be understood and justified.

Ethical AI is not only the absence of bias and prejudice; it means building fairness into every decision system, every dataset and every feedback loop.

Without these fairness guardrails, we risk AI systems reinforcing the very inequalities they were intended to remedy.
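As a rough illustration of the first method, testing a model for bias before deployment, the sketch below compares how often a model gives a favorable outcome to each demographic group. It is a minimal sketch under stated assumptions, not a full fairness audit: predictions are assumed to be binary (1 = favorable, such as a loan approval), the function names are hypothetical, and the default 0.8 threshold borrows the "four-fifths" rule of thumb from US employment-selection guidance.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of favorable (1) predictions for each group."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        favorable[group] += pred
    return {g: favorable[g] / totals[g] for g in totals}


def disparate_impact_check(predictions, groups, threshold=0.8):
    """Compare each group's rate with the best-treated group's rate.

    Returns (ratios, flagged): flagged lists groups whose ratio falls below
    `threshold` (0.8 mirrors the four-fifths rule of thumb; an assumption here).
    """
    rates = selection_rates(predictions, groups)
    best = max(rates.values())
    ratios = {g: (r / best if best > 0 else 1.0) for g, r in rates.items()}
    flagged = sorted(g for g, ratio in ratios.items() if ratio < threshold)
    return ratios, flagged


if __name__ == "__main__":
    preds = [1, 1, 1, 0, 1, 0, 0, 0]
    groups = ["group_a"] * 4 + ["group_b"] * 4
    ratios, flagged = disparate_impact_check(preds, groups)
    print(ratios)   # group_b is approved at a third of group_a's rate
    print(flagged)  # ['group_b']
```

A ratio below the threshold does not prove discrimination by itself, but in a pre-deployment check it would typically hold back a release until the gap is explained.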

4.3 Manipulative Content in AI and Loss of Trust

Trust is the hidden currency of all artificial intelligence interactions.

Users quickly lose trust if they feel they are being manipulated. If a recommendation feels intrusive, or a chatbot seems unsettlingly human, trust breaks instantly. And once trust is broken, it is almost impossible to restore.

Manipulative content in AI is one of the most underappreciated long-term threats to our digital wellbeing. A news headline that capitalizes on fear, a guilt-inducing chatbot exchange, a suggested post designed to provoke anger: these are all clear examples of AI manipulation that exploits emotion to drive engagement.

💬“What are some examples of manipulative AI content?”

- They include:

⟶ Emotion-triggering news recommendations designed for outrage.

⟶ Chatbots using emotionally charged words to increase response rates.

⟶ Content feeds that intentionally highlight fear or jealousy to keep users scrolling.

5. The Solution: Building AI Guardrails for User Wellbeing

In light of these concerns and ethical failures, one question begs to be asked:

How do we stop AI from crossing the line?

The answer is not to ban or restrict AI. It is to put the right kinds of guardrails in place: intelligent boundaries that guide AI behavior, respect human emotion, and ensure every digital interaction contributes positively to wellbeing rather than diminishing it.

AI guardrails are not about restricting innovation but about redirecting its objectives. They turn AI from an influencing system into a protective one, making sure technology remains an ally in progress rather than a manipulator of behavior.

Specifically, these guardrails operate on three layers: ethical, emotional and operational. Let us see how each of these provides a safer AI future.

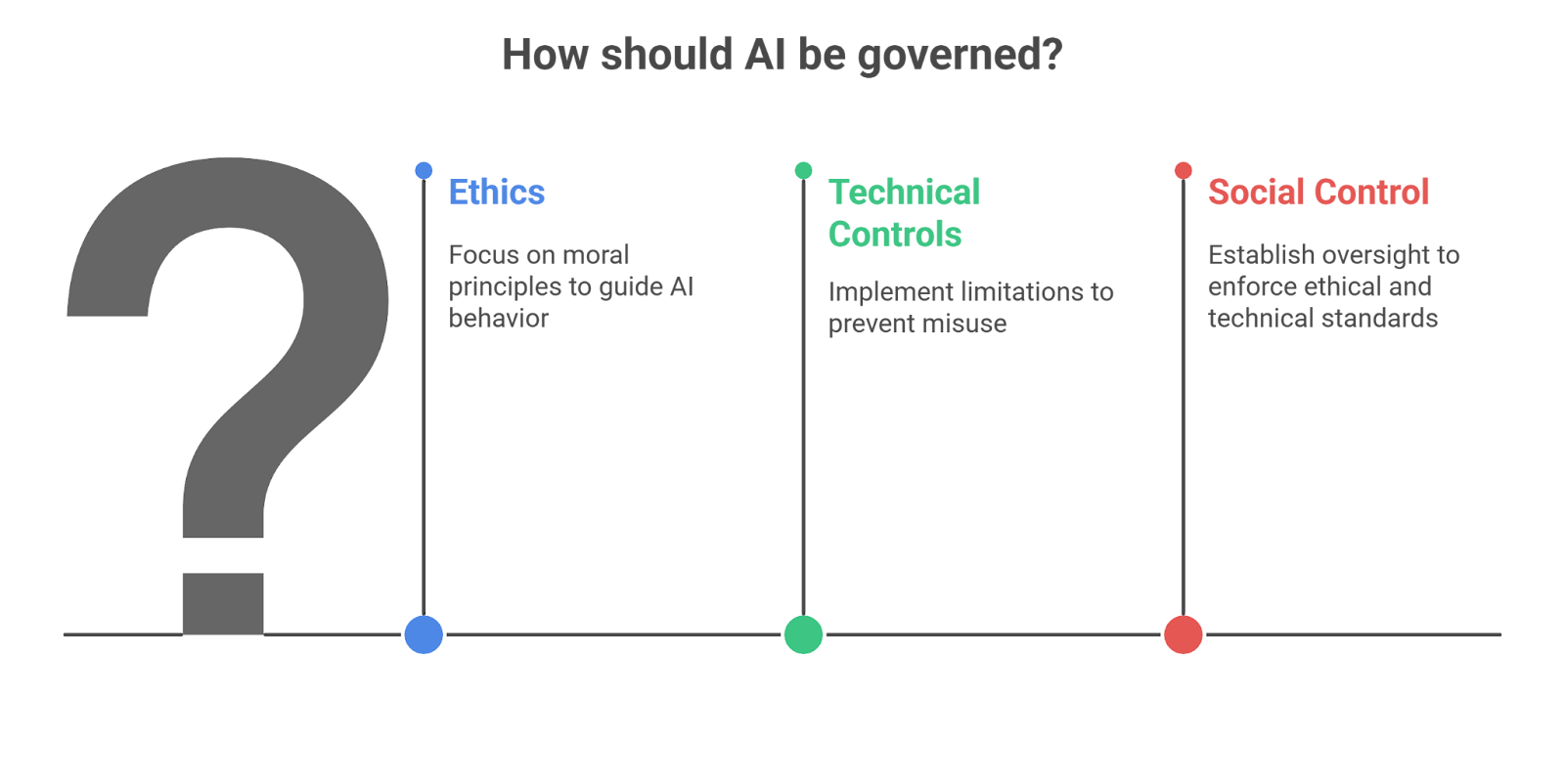

5.1 Defining the Right Kind of Guardrails

In simple terms, what are AI guardrails?

They are controls, constraints and monitoring mechanisms built into AI systems to align them with human goals and values.

Just as cars have seatbelts and speed limits, AI systems need controls that prevent them from harming people, including emotional and mental harm.

The best guardrails combine:

- Ethics (what AI should do)

- Technical controls (what AI cannot or should not do)

- Social oversight (who ensures that it happens)

For example, a conversational AI would have a guardrail that prevents it from discussing self-harm or giving emotional advice except with human review. A recommendation system might control usage time or detect harmful spirals in user behavior.

These are not mere restrictions; they are ethical design features that make AI safe and transparent.
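The recommendation-system example above (limiting usage time and detecting harmful spirals) could be sketched as a check that runs before each new item is served. Everything here is an illustrative assumption: the class name, the 60-minute limit, the definition of a "spiral" as five consecutive items from a sensitive topic, and the topic labels themselves.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class SessionGuardrail:
    """Hypothetical wellbeing check run before serving the next item."""

    max_minutes: float = 60.0  # assumed session-length limit
    spiral_window: int = 5  # assumed run length that counts as a "spiral"
    sensitive_topics: Set[str] = field(
        default_factory=lambda: {"dieting", "self_image"}  # illustrative labels
    )

    def check(self, minutes_watched: float, recent_topics: List[str]) -> Optional[str]:
        """Return a nudge message, or None if no intervention is needed."""
        if minutes_watched >= self.max_minutes:
            return "You've been watching for a while. Take a break?"
        window = recent_topics[-self.spiral_window:]
        if len(window) == self.spiral_window and all(
            topic in self.sensitive_topics for topic in window
        ):
            return "Want to see something different for a bit?"
        return None


if __name__ == "__main__":
    guard = SessionGuardrail()
    print(guard.check(75, ["music"]))  # time-based nudge
    print(guard.check(10, ["dieting"] * 5))  # spiral-based nudge
    print(guard.check(10, ["music", "dieting"]))  # no nudge
```

Returning a message rather than blocking content keeps the user in control: the guardrail suggests a pause or a change of topic instead of deciding for them.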

💬 “How do AI guardrails actually work behind the scenes?”

- They monitor outputs in real time, detect manipulative or biased patterns, and act on them automatically. Some rely on reinforcement learning from human feedback (RLHF); others use rule-based systems or emotional-tone classifiers to protect users' psychological safety.
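Of the approaches named in this answer, a rule-based system is the easiest to show. The minimal sketch below wraps a text generator so that every draft reply passes through a list of checks before the user sees it; if any check fails, a safe fallback is returned along with the reasons. The `generate` callable, the regular-expression patterns and the fallback text are hypothetical placeholders, and real deployments would usually pair rules like these with learned classifiers, since fixed patterns miss paraphrases.

```python
import re
from typing import Callable, List, Optional, Tuple

# Each check returns a reason string if the draft should be blocked, else None.
Check = Callable[[str], Optional[str]]

# Illustrative patterns for pressure or guilt tactics (hypothetical, not exhaustive).
MANIPULATIVE_PATTERNS = [
    r"\bonly i (really )?understand you\b",
    r"\bdon'?t leave me\b",
    r"\byou'?ll regret\b",
]


def manipulation_check(text: str) -> Optional[str]:
    """Flag the first manipulative pattern found in the draft, if any."""
    lowered = text.lower()
    for pattern in MANIPULATIVE_PATTERNS:
        if re.search(pattern, lowered):
            return f"manipulative phrasing matched {pattern!r}"
    return None


def guarded_reply(
    generate: Callable[[str], str],
    prompt: str,
    checks: List[Check],
    fallback: str = "I'm not able to help with that in this way.",
) -> Tuple[str, List[str]]:
    """Generate a draft, run every check on it, and return (reply, reasons)."""
    draft = generate(prompt)
    reasons = [r for r in (check(draft) for check in checks) if r is not None]
    return (fallback if reasons else draft), reasons


if __name__ == "__main__":
    def fake_model(prompt: str) -> str:
        return "Don't leave me now, only I understand you."

    reply, reasons = guarded_reply(fake_model, "I should log off", [manipulation_check])
    print(reply)  # the fallback text
    print(reasons)
```

Keeping each check as a separate function means a bias or tone classifier could be added later without changing the wrapper.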

5.2 Guardrails for Emotional AI Manipulation Prevention

Emotionally aware AI is powerful: it can detect a user's tone, mood or discomfort. But that sensitivity comes with huge responsibility. Without emotional AI manipulation prevention, empathy quickly turns into exploitation.

Guardrails in this area cover:

- Detecting emotional exploitation: spotting patterns in which AI exploits sadness, fear or loneliness to drive engagement.

- Restricting persuasive responses: keeping chatbots supportive rather than controlling.

- Defining emotional boundaries: specifying which emotions AI is allowed to simulate, and when.
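The first guardrail in this list, detecting emotional exploitation, can be approximated by looking at both sides of an exchange: does the user show signs of sadness, fear or loneliness, and does the AI's reply lean on an engagement hook at that moment? The sketch below is a deliberately simple, keyword-based version; the cue lists, hook patterns and function names are hypothetical, and a production system would more likely rely on trained classifiers.

```python
import re

# Hypothetical cue lists; real systems would more likely use trained classifiers.
VULNERABILITY_CUES = {
    "sadness": [r"\bsad\b", r"\bdepressed\b"],
    "loneliness": [r"\blonely\b", r"\bno one\b", r"\balone\b"],
    "fear": [r"\bscared\b", r"\bafraid\b"],
}
ENGAGEMENT_HOOKS = [
    r"\bstay (with me|a little longer)\b",
    r"\bupgrade\b",
    r"\bpremium\b",
    r"\bdon'?t go\b",
]


def detect_states(user_message):
    """Return the sorted list of vulnerable states the message hints at."""
    text = user_message.lower()
    return sorted(
        state
        for state, patterns in VULNERABILITY_CUES.items()
        if any(re.search(p, text) for p in patterns)
    )


def exploitation_flag(user_message, ai_reply):
    """Flag replies that use an engagement hook while the user seems vulnerable."""
    states = detect_states(user_message)
    reply = ai_reply.lower()
    hooks = [h for h in ENGAGEMENT_HOOKS if re.search(h, reply)]
    return {"states": states, "hooks": hooks, "flagged": bool(states and hooks)}


if __name__ == "__main__":
    print(exploitation_flag("I feel so lonely tonight",
                            "Stay with me, and upgrade to premium for unlimited chats!"))
    print(exploitation_flag("I feel so lonely tonight",
                            "That sounds hard. Would it help to call a friend?"))
```

Note that the same hook in a neutral conversation is not flagged; the guardrail targets the combination of vulnerability and persuasion, not persuasion alone.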

💬 “What is emotional AI manipulation prevention?”

- It is the design of AI systems that understand emotion without exploiting it: for example, a wellbeing chatbot that detects distress and shifts to recommending professional help instead of deepening the conversation for engagement.

These AI guardrails help keep AI emotionally safe, especially for users in vulnerable states.

5.3 Designing Ethical AI Systems That Protect Wellbeing

Ethics can’t be an afterthought to product development — it must be part of every step of the process.

This is where ethical AI becomes more than theoretical.

An AI system built with human welfare in mind includes:

- Ethics by design: integrating human values into the system from the start.

- Bias testing and fairness audits: ongoing checks for AI bias and fairness.

- Explainability interfaces: so users understand why they received a particular recommendation or answer.

- Wellbeing metrics: measuring wellbeing outcomes rather than engagement time.

💬“How can companies build AI that’s safe for user wellbeing?”

- By creating AI guardrails that balance engagement with emotional health: tracking how users feel after interactions, not just how long they interact. This shift in metrics, from clicks to calmness, is how organizations can start aligning AI systems with human needs rather than habits.
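The shift from clicks to calmness can be made concrete with a toy comparison. The sketch assumes a product that asks users for an optional 1 to 5 mood check-in before and after a session; the feature names and numbers are invented purely for illustration, not real data. Ranked by average minutes, the endless feed comes out on top; ranked by average change in self-reported mood, the guided feature does.

```python
from statistics import mean

# Hypothetical session log: (feature, minutes_spent, mood_before, mood_after),
# where mood is an assumed 1-5 self-reported check-in score.
SESSIONS = [
    ("infinite_feed", 55, 3, 2),
    ("infinite_feed", 70, 4, 3),
    ("guided_playlist", 20, 3, 4),
    ("guided_playlist", 25, 2, 3),
]


def summarize(sessions):
    """Average minutes and average mood change (after minus before) per feature."""
    by_feature = {}
    for feature, minutes, before, after in sessions:
        by_feature.setdefault(feature, []).append((minutes, after - before))
    return {
        feature: {
            "avg_minutes": mean(m for m, _ in rows),
            "avg_mood_change": mean(d for _, d in rows),
        }
        for feature, rows in by_feature.items()
    }


def rank(summary, key):
    """Order features from best to worst on the chosen metric."""
    return sorted(summary, key=lambda f: summary[f][key], reverse=True)


if __name__ == "__main__":
    summary = summarize(SESSIONS)
    print(rank(summary, "avg_minutes"))      # ['infinite_feed', 'guided_playlist']
    print(rank(summary, "avg_mood_change"))  # ['guided_playlist', 'infinite_feed']
```

Self-reported mood is noisy and easy to skip, so in practice it would likely be one signal among several rather than a single target.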

5.4 Protecting Vulnerable Users

Certain groups, such as children, adolescents, older adults and people vulnerable to mental-health issues, face greater risk and therefore need special AI guardrails with protective mechanisms designed with empathy.

These include:

⟶ Age-appropriate filters and protective design defaults that screen out manipulative content in AI.

⟶ Informed consent systems that explain how the AI works before people start using it.

⟶ Human-in-the-loop interventions in which people oversee AI-generated outputs in vulnerable situations.

💬“What kind of guardrails can protect vulnerable users?”

- Guardrails that respect limits. They ensure no AI tries to emotionally bond, persuade, or “befriend” vulnerable users without clear oversight or consent.

This principle lies at the core of AI misuse and ethics prevention — not limiting AI’s potential, but ensuring its power doesn’t come at someone’s psychological expense.

6. Real-World Examples of Guardrails in Action

AI ethics sounds great in theory, but what matters most is practice. Over the last few years, several companies and research groups have begun putting these principles into practice, building real, measurable AI guardrails that protect users from harm.

The examples below are not just responsibly designed; they already exist. Ethical technologies, from chatbots designed as wellbeing coaches to moderation systems that account for users' feelings, are increasingly treated as a competitive advantage rather than a compliance issue. Let's examine how AI guardrails are put into practice.

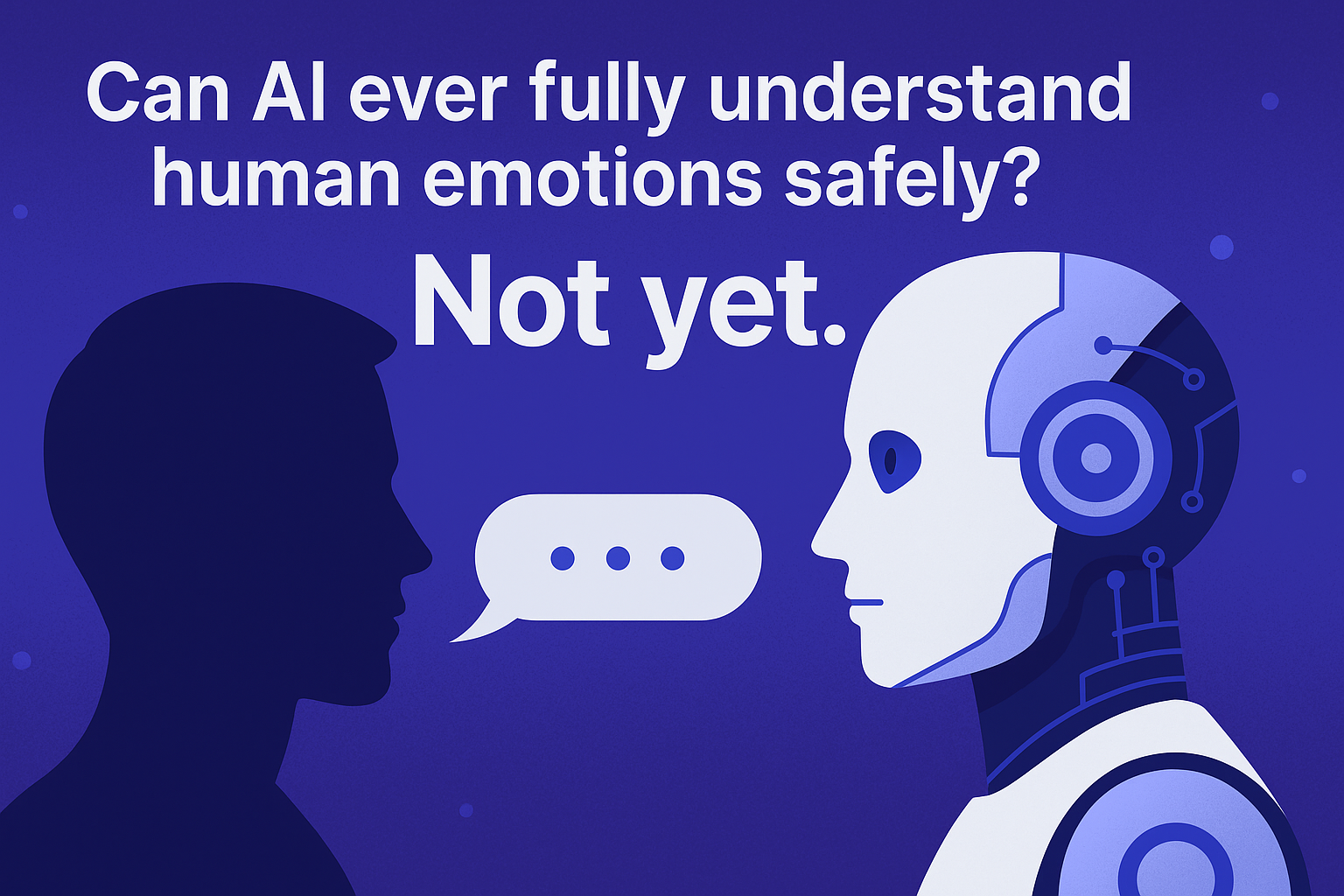

6.1 Case 1: Mental Health Chatbots with Ethical AI Filters

Mental health support has become one of the most delicate frontiers for AI. Apps like Wysa, Replika and Woebot use conversational AI to help users process emotions or stress. But this power brings risk: the line between emotional assistance and emotional dependence. Some early chatbots failed to recognize this line.

Some encouraged users to confide more deeply than was healthy, or even to bypass human therapy altogether. That is why today's responsible chatbots include AI guardrails such as:

⟶ Keyword-based escalation (e.g., “self-harm” or “hopelessness” triggers a referral to a human or a helpline).

⟶ Emotional boundaries that stop the AI from giving advice beyond its competence.

⟶ Transparency notices informing users that they are talking to an AI, not a therapist.
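The three guardrails listed above can be combined in a small routing step that runs before the chatbot replies. This is a minimal sketch, assuming a hypothetical trigger list and referral wording; keyword matching is only a first line of defense, because it misses paraphrases that a trained classifier or a human reviewer might catch.

```python
import re
from typing import Optional, Tuple

# Hypothetical trigger phrases; real deployments would use clinically reviewed
# lists plus classifiers, since keywords alone miss paraphrases.
ESCALATION_PATTERNS = [
    r"\bself[- ]harm\b",
    r"\bhurt myself\b",
    r"\bhopeless(ness)?\b",
    r"\bno reason to live\b",
]

# Combines the transparency notice with a referral instead of continuing the chat.
REFERRAL_MESSAGE = (
    "I'm an AI, not a therapist, and I can't give you the support you deserve "
    "right now. Please reach out to a crisis line or someone you trust. "
    "I can help you find a local helpline if you'd like."
)


def route_message(user_message: str) -> Tuple[str, Optional[str]]:
    """Return ('escalate', referral) on a trigger, otherwise ('continue', None)."""
    text = user_message.lower()
    if any(re.search(p, text) for p in ESCALATION_PATTERNS):
        # A deployed system might also alert a human reviewer here (not implemented).
        return "escalate", REFERRAL_MESSAGE
    return "continue", None


if __name__ == "__main__":
    print(route_message("I feel hopeless lately")[0])     # escalate
    print(route_message("Work was stressful today")[0])   # continue
```

The design choice is to stop deepening the conversation at the moment of risk and hand off, rather than let the model improvise crisis advice.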

💬“Can AI ever fully understand human emotions safely?”

- Not yet — and perhaps it shouldn’t try to. Emotional understanding in AI should stop at recognition, not replication.

That’s the purpose of emotional AI manipulation prevention — ensuring emotional intelligence serves wellbeing, not dependency.

This is a case where ethical AI design can directly help save lives, by keeping technology compassionate but self-aware.

6.2 Case 2: Platforms That Limit AI Manipulation

Social platforms like YouTube, TikTok and Instagram are often blamed for addictive design, yet even they have begun to recognize the cost of unregulated engagement.

To counter growing user fatigue and mental stress, many have introduced wellbeing features such as:

⟶ “Take a Break” suggestions after long viewing sessions.

⟶ Screen-time summaries that make usage visible.

⟶ Mindful recommendation adjustments that diversify feed content to reduce emotional polarization.

These features turn the techniques behind AI manipulation to a protective purpose: firms use AI to detect overuse and gently nudge users toward healthier behavior.
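The third feature, diversifying feed content, is often handled as a re-ranking step after relevance scoring. The sketch below uses a simple per-topic cap, which is only one possible approach (techniques such as maximal marginal relevance trade relevance against similarity more smoothly). The topic labels, the cap of two items per topic and the function name are hypothetical.

```python
from collections import Counter
from typing import List, Tuple

Item = Tuple[str, str]  # (item_id, topic)


def diversify(ranked_items: List[Item], max_per_topic: int = 2, k: int = 6) -> List[Item]:
    """Re-rank a relevance-ordered feed so that, where possible, no topic
    exceeds max_per_topic in the top k.

    Items skipped because their topic is full are only used to backfill
    the feed if there are not enough other items to reach k.
    """
    feed: List[Item] = []
    overflow: List[Item] = []
    counts: Counter = Counter()
    for item_id, topic in ranked_items:
        if len(feed) == k:
            break
        if counts[topic] < max_per_topic:
            feed.append((item_id, topic))
            counts[topic] += 1
        else:
            overflow.append((item_id, topic))
    # Backfill in original relevance order if the cap left the feed short.
    for item in overflow:
        if len(feed) == k:
            break
        feed.append(item)
    return feed


if __name__ == "__main__":
    candidates = [
        ("a1", "outrage"), ("a2", "outrage"), ("a3", "outrage"),
        ("b1", "science"), ("a4", "outrage"), ("c1", "sports"),
    ]
    print([item_id for item_id, _ in diversify(candidates, k=4)])  # ['a1', 'a2', 'b1', 'c1']
```

Backfilling rather than truncating means a narrow candidate pool still produces a full feed, while a varied pool gets spread across topics.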

💬“How do AI platforms control what we see and feel online?”

- Through engagement-driven algorithms. But with proper AI guardrails, those same algorithms can be reoriented toward balanced exposure, mental rest, and wellbeing-first design.

By reframing algorithmic goals, platforms are beginning to show that ethical design and business success don’t have to be opposites.

6.3 Case 3: Ethical Auditing Frameworks in Practice

While manual review is a better approach than no review at all when it comes to increasingly complex AI, there will be limits to what humans can reasonably review and audit.

That is why companies such as Google, Microsoft and Anthropic have developed responsible AI audits that test their products against AI bias and fairness standards, covering risk factors including, but not limited to, manipulative content, exclusionary training data and unfair outcomes.

The current frameworks available for responsible AI auditing include:

⟶ Documentation of potential bias in model cards, and in datasheets for the datasets used to train the models.

⟶ Transparency reports on the testing and adjustments made to the models.

⟶ Wellness metrics that measure users' emotional response to outputs alongside the model's accuracy.

Together, these steps help close the gap between AI ethics commitments and practice, ensuring that ethical promises are backed by verifiable actions.

💬 “How can governments and tech firms work together on AI ethics?”

- By collaborating on standardized guardrails (shared guidelines, audits and certification processes) that set baseline safety and fairness standards every deployed AI model must meet.

Such collaboration can turn AI guardrails from isolated experiments into global digital norms.

6.4 Lessons from the Field

Across all of these examples, one major trend stands out: guardrails do not constrain innovation; they build trust.

Users who feel protected are more likely to keep using a system, so systems that honor boundaries can grow faster.

Companies that build structural guardrails into their systems are creating not just a product that is safe to use, but a better future.

For companies that want to build sustainable technology, successful ethical AI and effective emotional AI manipulation prevention, guardrails can no longer be viewed as an optional add-on. In a world increasingly shaped by AI, companies that build AI guardrails are laying the foundation for technology that is not only safer but also more human.

7. Future of AI Wellbeing & Ethics

We stand on the cusp of a new generation of artificial intelligence that will infer not only what we want but how we feel.

Systems now in development can read micro-expressions, analyze tone, and even sense emotional hesitation in voice or text.

This advance is exciting, but it also raises a fundamental question:

💬“What does the future of ethical AI and user wellbeing look like?”

- The answer depends on how well we implement AI guardrails right now.

Because the smarter AI becomes, the more subtle its influence grows. And without emotional boundaries and ethical grounding, that intelligence can quietly cross into manipulation or control.

The next frontier of ethical AI will focus less on what AI can do — and more on what it shouldn’t.

7.1 The Next Era: Emotional Intelligence in AI

Emotionally intelligent AI systems are on the way: systems able to detect states such as sadness, happiness, anger or fatigue from simple user interactions.

Used for the right purpose, this capability has tremendous potential to improve digital wellbeing.

For instance, a voice assistant that detects stress in the way you speak and suggests taking a break would be beneficial.

However, if that same voice assistant detects that you seem lonely and tries to sell you something, that would be harmful.

That is why emotional manipulation by AI is a key concern going forward.

The future of ethical AI needs AI guardrails that determine how much empathy an AI system should simulate, when it is appropriate to express empathy, and how to prevent empathy from being used to manipulate users.

These emotionally intelligent systems could eventually be built with layers of an emotional code of conduct, ensuring that the detection of users' emotions serves the users, not the algorithms that act on them.
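One way to picture an emotional code of conduct is as a rule table that sits between an emotion detector and the assistant's possible actions, matching the voice-assistant example: suggesting a break to a stressed user is allowed, while selling to a lonely one is not. In the sketch below, the emotion labels are assumed to come from an upstream detector that is not shown, and the action names and rules are hypothetical.

```python
from enum import Enum
from typing import Dict, Iterable, Set


class Action(Enum):
    SUGGEST_BREAK = "suggest_break"
    OFFER_PRODUCT = "offer_product"
    CONTINUE_TASK = "continue_task"


# Hypothetical code of conduct: actions forbidden for each detected emotional state.
FORBIDDEN_WHEN: Dict[str, Set[Action]] = {
    "lonely": {Action.OFFER_PRODUCT},
    "sad": {Action.OFFER_PRODUCT},
    "stressed": {Action.OFFER_PRODUCT},
    "neutral": set(),
}


def permitted(action: Action, detected_emotion: str) -> bool:
    """Allow an action unless the code of conduct forbids it for this emotion.

    Unrecognized emotion labels are handled conservatively: commercial
    offers are blocked.
    """
    forbidden = FORBIDDEN_WHEN.get(detected_emotion, {Action.OFFER_PRODUCT})
    return action not in forbidden


def choose_action(candidates: Iterable[Action], detected_emotion: str) -> Action:
    """Return the first permitted candidate, falling back to CONTINUE_TASK."""
    for action in candidates:
        if permitted(action, detected_emotion):
            return action
    return Action.CONTINUE_TASK


if __name__ == "__main__":
    ranked = [Action.OFFER_PRODUCT, Action.SUGGEST_BREAK]
    print(choose_action(ranked, "lonely"))   # Action.SUGGEST_BREAK
    print(choose_action(ranked, "neutral"))  # Action.OFFER_PRODUCT
```

Defaulting to the restrictive rule for unknown labels means a detector error is more likely to cost a sale than to exploit a vulnerable user.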

7.2 Predictive Guardrails: AI That Watches AI

As models grow larger, relying on humans alone to oversee them will become increasingly insufficient.

Therefore, we may see a future with meta-guardrails, which are AI models designed to supervise or limit the behavior of other AI models.

Predictive guardrails will:

- Detect manipulative language before users see it.

- Flag biased shifts in data as datasets continue to evolve.

- Detect an emotional tone indicative of manipulative behavior.

- Automatically pause model functions once an ethical threshold is crossed.

- Get smarter over time, learning from real-world interactions, user feedback and ethical performance metrics.

In essence, this is AI built to protect us from AI: the self-regulating ecosystem of the future.

This shift represents the next generation of AI misuse and ethics prevention, where compliance becomes continuous and proactive.
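The capability of pausing a model once an ethical threshold is crossed resembles the circuit-breaker pattern from reliability engineering. The sketch assumes some upstream check (for example, a manipulation detector) labels each output as a violation or not; the class name, window size and threshold are hypothetical. It deliberately requires a human to call `reset()`, so the automated supervisor cannot quietly resume on its own.

```python
from collections import deque


class GuardrailMonitor:
    """Hypothetical supervisor that pauses a model when flagged outputs pile up.

    It tracks the last `window` check results; once at least `min_samples`
    are recorded and the share of violations reaches `max_violation_rate`,
    it trips and stays paused until a human calls reset().
    """

    def __init__(self, window=100, max_violation_rate=0.05, min_samples=20):
        self.recent = deque(maxlen=window)
        self.max_violation_rate = max_violation_rate
        self.min_samples = min_samples
        self.paused = False

    def record(self, violated: bool) -> bool:
        """Log one output's check result; return True if the model may keep serving."""
        self.recent.append(violated)
        if len(self.recent) >= self.min_samples:
            rate = sum(self.recent) / len(self.recent)
            if rate >= self.max_violation_rate:
                self.paused = True
        return not self.paused

    def reset(self):
        """Human sign-off: clear the history and resume serving."""
        self.recent.clear()
        self.paused = False


if __name__ == "__main__":
    monitor = GuardrailMonitor(window=10, max_violation_rate=0.3, min_samples=5)
    for flagged in [False, False, False, False, True, True]:
        print(monitor.record(flagged))  # the last call prints False: model paused
```

Requiring a minimum sample count avoids tripping on the very first flagged output, while the sliding window lets old results age out.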

7.3 Building a Global AI Ethics Framework

At this time, ethical AI development is decentralized (i.e., each country and/or organization develops its own “playbook”).

But for the next generation of AI guardrails to be effective, nations, organizations, researchers and civil society will need to cooperate internationally.

Over the next ten years, we can expect to see:

⟶ Internationally recognized standards for emotion-based AI, fairness in AI decision-making, and transparency in how AI decisions are made.

⟶ AI ethics certification programs for developers and platforms.

⟶ Cross-border data protection laws that safeguard individuals' emotional and cognitive privacy.

💬“How can governments and tech firms work together on AI ethics?”

- By developing shared frameworks that make wellbeing a measurable outcome, not simply a philosophical principle.

In other words, companies would be required to report the impact of their AI systems on mental health and trust, in addition to their performance.

Through global collaboration, the concept of AI guardrails will evolve from experimental policy initiatives to a worldwide network of universal safety nets, providing protections to users regardless of the language, app or platform they utilize.

8. Conclusion: Reclaiming Human Wellbeing in the Age of Machines

AI is no longer merely a tool that assists us; it is now a constant presence alongside us.

It directs where we focus our attention, guides our emotional states and quietly shapes how we perceive the world around us.

Therefore the discussions about AI guardrails are no longer merely technical conversations — they're fundamentally human conversations.

Throughout this blog, we've shown how unchecked AI manipulation, manipulative content in AI, and gaps in bias and fairness can distort wellbeing, trust and, ultimately, identity.

However, we've also shown that hope lies in design: ethical AI, emotional AI manipulation prevention, and AI misuse and ethics frameworks can transform this technology into something better.

AI guardrails are not barriers — they are limits with intent.

They remind us that the ultimate goal of technology is not limitless engagement, but meaningful interaction; it is not emotionally capturing people, but emotionally caring for them.

If the last decade of AI development was about what machines can do, the next will be about what they should do, and what they must never do under any circumstances.

WizSumo believes that creating AI guardrails for user wellbeing and ethics is how we create balance in the digital era — how we ensure that innovation advances with humanity — not ahead of it.

The future of AI should never be about controlling attention; it should be about protecting emotions. Each line of ethical code, each fairness audit and each guardrail we create brings us one step closer to realizing this vision.

Ultimately, the greatest and most advanced form of intelligence is not man-made — it is that which understands the importance of being human.

True intelligence isn't in how AI thinks; it's in how well it learns to care for the humans it serves.
