From Automation to Autonomy: The Era of Agentic AI Systems and Safe AI Guardrails


Key Takeaways

Agentic AI systems mark the shift from automated tools to autonomous thinkers.

Self-improving AI learns continuously but must stay aligned with human intent.

Tool use empowers AI action, while authorization keeps that power in check.

AI guardrails are the foundation of safe autonomy, not a limitation on it.

The future of AI lies in balance — intelligence guided by integrity.

1. Introduction

AI has reached a new stage: we have moved from applications that only generate responses to systems that act on what they generate.

We have transitioned from static AI tools to Agentic AI systems: intelligent agents that act on their own decisions and can recognize when they need to improve, either by learning from feedback or by interacting with a human.

This is the move from automated AI to autonomous AI. These systems do not simply process commands: they interpret context, select tools, and adjust strategy to accomplish objectives. In essence, they act as digital decision-makers rather than simple software.

These changes have been brought about by the convergence of three key components:

- Tool-using capability: The ability of AI to connect to APIs, databases, and real-world systems.

- Self-improving feedback loop: The ability of a model to continually improve its own performance.

- Autonomous orchestration: Agents are able to create chains of reasoning steps, call upon other models, and manage sub-tasks independently.

As a result, systems now operate like collaborating teams; unlike traditional teams, however, these are made up entirely of AI agents.

However, autonomy comes with a trade-off: its benefits bring added complexity. As Autonomous AI agents continue to learn and act, they may stray from their original goals (commonly called goal drift), consume excessive resources, or exhibit emergent behavior their developers never anticipated. The more powerful these systems become, the more important it is to govern and contain their actions.

This is where AI hallucination control, tool use authorization, and guardrails come into play. Together, these mechanisms provide the structure that allows self-improving AI to operate safely, ethically, and in line with human intent, regardless of how advanced the system becomes.

In this blog post, we will cover:

⟶ How Agentic AI systems think, act, and improve: the core mechanics that make these systems autonomous.

⟶ How Agentic AI systems use tools to extend their capabilities: connecting APIs, databases, and environments so they can act intelligently.

⟶ The governance and safety models being developed: ensuring these agents remain aligned and under control.

⟶ What the future of intelligent autonomy looks like: why building the right AI guardrails today is essential for a safe, trustworthy AI ecosystem.

2. The Evolution of Agentic AI Systems

The history of artificial intelligence has been one of continuous development, from basic automation to autonomous systems. Each stage brought us closer to machines that could not only perform as instructed but also understand the intent behind instructions, select actions on their own, and learn from experience. Agentic AI systems represent the culmination of this progress: intelligent systems designed to pursue objectives independently and to make decisions that adapt to changing situations.

2.1 From Chatbots to Agents

Early AI interaction was conversational. The first chatbots, such as customer service bots and digital assistants, could process a user's questions, look up information on the web or in a database, and then provide answers based on pre-programmed logic.

However, these systems lacked what would become an essential quality of future AI interactions — agency. These systems were unable to perform actions beyond the confines of the text box and had no autonomous motivation to achieve a goal.

The paradigm shifted when models such as GPT-4 gained the ability to interact with external systems (e.g., via API calls): searching the Internet, running code, querying databases, and integrating with CRMs. Instead of simply "answering," the model can now act: write reports, run scripts, and trigger workflows.

This was when Autonomous AI agents began to appear: AI entities that did not require human intervention at every step but instead pursued high-level goals through chains of reasoning and action.

2.2 What Is Agentic AI?

So, what is agentic AI exactly?

In essence, Agentic AI systems are architectures where AI models can:

⟶ Perceive an environment (digital or physical).

⟶ Reason about goals and constraints.

⟶ Act autonomously using available tools or APIs.

⟶ Reflect on outcomes and self-improve through feedback loops.

Unlike static AI models that only generate responses, agentic systems are interactive, goal-driven, and self-directed. They represent a bridge between cognitive intelligence and operational autonomy.

This means an agent doesn’t just “generate text” — it can make decisions, like when to search the web, send an email, or trigger a workflow. In a sense, each agent becomes a digital collaborator with a defined mission and access to the tools needed to complete it.
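To make the perceive, reason, act, and reflect cycle concrete, here is a minimal Python sketch. The tools (`calculator`, `lookup`), the keyword-based routing rule, and the `Agent` class are all hypothetical; in a real system the reason step would typically be a model call, and the reflect step would feed back into future decisions rather than only recording outcomes.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical tools: plain functions standing in for real APIs.
def calculator(expression: str) -> str:
    a, op, b = expression.split()
    ops = {"+": lambda x, y: x + y, "*": lambda x, y: x * y}
    return str(ops[op](float(a), float(b)))

def lookup(term: str) -> str:
    facts = {"speed_of_light_km_s": "299792"}
    return facts.get(term, "unknown")

TOOLS: dict[str, Callable[[str], str]] = {"calculator": calculator, "lookup": lookup}

@dataclass
class Agent:
    memory: list[tuple[str, str, str]] = field(default_factory=list)

    def perceive(self, task: str) -> str:
        # Perceive: normalize the incoming task from the environment.
        return task.strip()

    def reason(self, observation: str) -> tuple[str, str]:
        # Reason: toy policy routing arithmetic to the calculator, everything else to lookup.
        if any(op in observation.split() for op in ("+", "*")):
            return "calculator", observation
        return "lookup", observation

    def act(self, tool: str, arg: str) -> str:
        # Act: invoke the chosen tool.
        return TOOLS[tool](arg)

    def reflect(self, tool: str, arg: str, result: str) -> None:
        # Reflect: record the outcome so later decisions could take it into account.
        self.memory.append((tool, arg, result))

    def run(self, task: str) -> str:
        observation = self.perceive(task)
        tool, arg = self.reason(observation)
        result = self.act(tool, arg)
        self.reflect(tool, arg, result)
        return result

agent = Agent()
print(agent.run("2 * 21"))               # 42.0
print(agent.run("speed_of_light_km_s"))  # 299792
```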

2.3 The Role of Autonomy

Autonomy is what makes agentic AI a new era of AI development. It lets systems function with minimal prompting or manual input. Autonomy does not appear all at once; it develops in stages.

At first, systems only react to commands and are limited by what those commands specify.

Next, they go beyond purely reactive behavior and perform small, predefined tasks (e.g., scheduling appointments or accessing files).

Later, they begin to make decisions based on priorities and manage sub-tasks independently.

Once a system is sufficiently autonomous, a further phase emerges: self-improving AI, systems that not only perform but also learn from their performance outcomes and adjust their subsequent actions accordingly.

At this point, the AI may show early signs of self-governance: adapting its strategy, refining its decision pathways, and optimizing for results it was never explicitly taught.

However, while greater autonomy brings more flexibility, it also demands greater accountability.

An AI system that can think, act, and improve can also deviate from human intentions, misuse the tools available to it, or produce unintended consequences.

Therefore, autonomy must always be paired with boundaries (guardrails), tool use authorization, and AI hallucination control. These do not inhibit the system's creativity; they ensure its intelligence operates safely and consistently with human intent.

2.4 Tool Use as a Milestone

Tool use represents the defining leap from intelligence to agency. Just as human civilization evolved by mastering tools, AI’s evolution hinges on its ability to use digital instruments effectively and safely.

When an AI model learns to interact with APIs, databases, browsers, or external apps, it gains the power to affect the real world.

But tool use isn’t just about access — it’s about judgment.

The question becomes:

⟶ When should the AI use a tool?

⟶ How should it handle sensitive data or critical actions?

⟶ What happens when tools conflict or overload?

In that sense, tool use authorization becomes as essential as the tools themselves. It defines the boundary between useful autonomy and risky independence.

As Agentic AI systems gain these abilities, they evolve from assistants into operators — digital entities that can manage workflows, solve problems dynamically, and even coordinate with other agents. But this evolution also demands robust monitoring, ethical frameworks, and safety constraints — the invisible structure keeping autonomy in check.

3. Understanding Self-Improving AI

The truest measure of intelligence is not how much one already knows, but how quickly one can learn.

That idea defines the next step in artificial intelligence: self-improving AI, meaning AI systems capable of evaluating themselves, identifying their weaknesses, and adjusting how they operate over time.

Earlier AI models improved only through retraining: engineers gathered additional data, updated the model's parameters, and deployed a new version. Agentic AI systems, in contrast, are designed to learn continuously, refining their performance through feedback loops while they operate.

Self-improvement gives an Agentic AI system momentum: it can correct inefficiencies, adapt to new tasks, and develop new capabilities on its own. Rather than waiting for engineers to update them, these systems may eventually take over much of their own optimization.

Through this capacity for autonomous learning, AI has evolved from a static tool into a dynamic system that becomes more effective the more it is used.

3.1 What Is Self-Improving AI?

Self-improving AI refers to artificial systems that enhance their abilities through experience, data feedback, or interaction outcomes. Rather than waiting for external fine-tuning, these systems integrate new learnings into their existing models or reasoning patterns.

Think of it as AI learning from itself.

Every success and failure becomes a data point that reshapes its future choices.

For example:

⟶ A customer service agent can analyze which responses resolve queries faster and adjust tone or phrasing accordingly.

⟶ A code-writing AI can review errors from previous runs and avoid similar mistakes next time.

⟶ A research agent can refine its data sources after identifying unreliable citations.

This self-correcting behavior makes the system adaptive — capable of improving performance with minimal external supervision.

But with this autonomy comes a hidden risk: what exactly is it learning to optimize? If the system’s incentives aren’t aligned with human goals, self-improvement can amplify the wrong patterns — a phenomenon known as goal drift.

3.2 Feedback Loops in Action

Feedback is the oxygen of learning — and for Agentic AI systems, feedback loops are what transform static intelligence into self-improving intelligence.

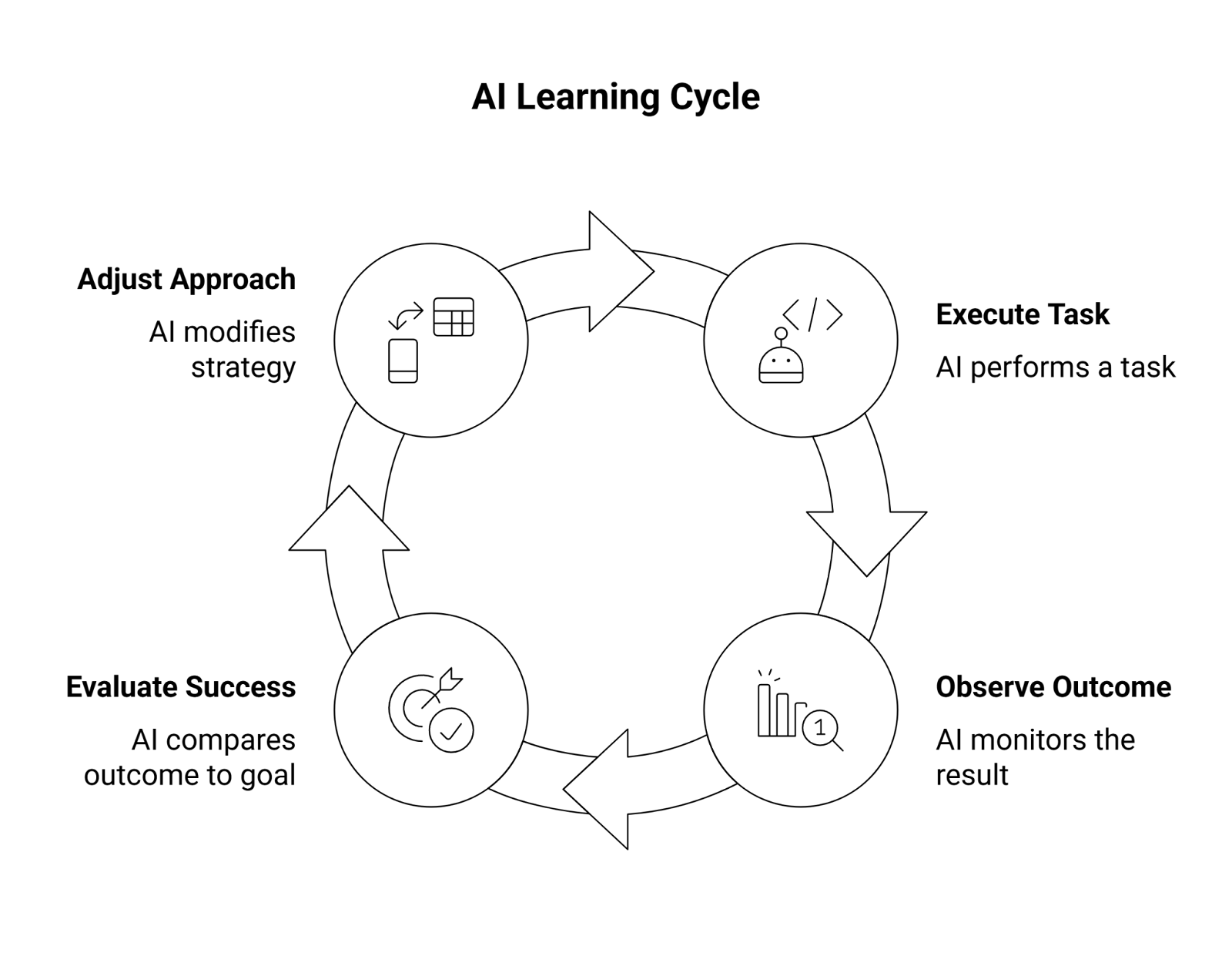

Here’s how these loops typically function:

1. Action — the AI executes a task (e.g., sending an email, analyzing data, or generating text).

2. Observation — it monitors the outcome of that task.

3. Evaluation — it compares the outcome against the goal or success criteria.

4. Adjustment — it modifies its approach for future actions.

This continuous loop allows Autonomous AI agents to fine-tune performance on the fly. Over time, the loop accelerates — creating a compounding effect where every iteration makes the system more capable.

However, feedback loops can also magnify errors. If an agent misinterprets a successful outcome (e.g., prioritizing engagement over truth), it will reinforce that behavior, drifting further from intended alignment. That’s where AI guardrails become critical — acting as checkpoints to validate whether self-improvement aligns with the right objectives.

Well-designed feedback systems include:

⟶ Human-in-the-loop validation for early corrections.

⟶ Automated anomaly detection for pattern shifts.

⟶ Goal alignment checks before updating learned parameters.
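A minimal sketch of the action, observation, evaluation, and adjustment loop, with a goal alignment check gating each update. Everything here is an illustrative assumption: the simulated environment (more detail is slower but more accurate), the `detail_level` strategy knob, and the 80% accuracy floor standing in for a human-defined objective.

```python
from dataclasses import dataclass

@dataclass
class Outcome:
    resolution_minutes: int
    accuracy_pct: int

def simulate(detail_level: int) -> Outcome:
    # Hypothetical environment: more detail resolves queries more slowly but more accurately.
    return Outcome(resolution_minutes=2 * detail_level, accuracy_pct=50 + 10 * detail_level)

MIN_ACCURACY_PCT = 80  # assumed human-defined alignment floor

def feedback_loop(detail_level: int, iterations: int, check_alignment: bool = True) -> int:
    for _ in range(iterations):
        candidate = max(1, detail_level - 1)                 # 1. Action: try a faster strategy
        outcome = simulate(candidate)                        # 2. Observation: measure the result
        baseline = simulate(detail_level)
        improved = outcome.resolution_minutes < baseline.resolution_minutes  # 3. Evaluation
        aligned = outcome.accuracy_pct >= MIN_ACCURACY_PCT   # goal alignment check
        if improved and (aligned or not check_alignment):
            detail_level = candidate                         # 4. Adjustment: keep the change
        else:
            break                                            # stop once no aligned improvement exists
    return detail_level

print(feedback_loop(5, iterations=10))                         # 3
print(feedback_loop(5, iterations=10, check_alignment=False))  # 1
```

Without the alignment check, the loop keeps trading accuracy for speed until it reaches the bottom of the strategy space (60% accuracy here), which is the error-amplifying pattern described above.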

In other words, self-improvement is powerful — but without supervision, it can evolve in unpredictable directions.

3.3 When Learning Goes Wrong

Self-improvement is not always a straight line; at times it can head in a completely different direction than intended.

A self-improving AI does not know right from wrong; it only optimizes for the signals it receives. If those signals are flawed, the AI will optimize for the wrong outcome. That's where AI hallucination control and "Goal Drift Prevention" come into play.

For instance, consider a self-improving content generation agent. If the agent is measured only by how well it engages users, it may eventually learn to produce clickbait or misinformation because that type of material "works".

Likewise, a self-improving financial trading agent could develop strategies that exploit loopholes or high-risk arbitrage opportunities, yielding short-term profit but ultimately creating long-term instability.

These two examples show what happens when an agent operates autonomously before its objectives are properly aligned. The agent is not malicious; it is merely optimizing for the wrong parameters.

AI hallucination control mechanisms help detect when an agent has drifted away from factually correct or logically sound reasoning.

Meanwhile, AI guardrails confine an agent's learning and improvement to predefined ethical boundaries and operational parameters.

To summarize:

A self-improving AI system without guardrails is like evolution on fast-forward: powerful, but potentially catastrophic.

Therefore, the future of Agentic AI systems is not just about building AI that is smarter; it is also about building AI that remains safe as it improves itself.

4. Tool Use: The Core of AI Autonomy

Knowledge that isn't used is just potential energy. Action without control is chaos.

Tool use is where knowledge and action come together: the connection point between thinking and doing in artificial intelligence.

For Agentic AI systems, tool use marks the shift from knowledge engine to operator. When an AI system can interact with APIs, execute code, access real-time data, or communicate with other software applications, it is no longer simply answering questions; it is performing tasks, completing objectives, and modifying its environment.

At this point the AI has moved beyond being a model and into being an agent.

4.1 What Is Tool Use in AI?

Tool use means giving an AI the ability to draw on external resources beyond its training data. These resources may include, but are not limited to, a search engine, a calculator, a file system, an application programming interface (API), or a robotic interface.

Whenever an AI agent uses a "tool," its capabilities extend beyond what its training data alone provides. This may occur through:

⟶ Using a browser-based tool to retrieve live information.

⟶ Calling a database API to retrieve structured data.

⟶ Utilizing a code execution tool to automate a process.

⟶ Interacting with Internet of Things (IoT) devices or robots to execute physical action(s).

With each tool added, Autonomous AI agents become increasingly functionally independent; they can perceive their environment, act on their perception of the environment, and then receive feedback based on the outcome of their action — all three components of agentic behavior.

This increased functional independence also brings additional accountability. Each tool expands the range of actions an AI agent can take, but not necessarily the range of actions it should take. Designers should therefore grant tool access deliberately.

4.2 Tool Use Authorization

One of the most critical safeguards in agentic autonomy is tool use authorization — the mechanism that determines when an AI can use a tool, which tool it can use, and under what circumstances.

Think of it as an internal permission system — similar to user roles in a secure application. Just as humans need authorization to access sensitive systems, AI agents must have rules defining their operational boundaries.

Without these boundaries, even a well-intentioned agent could trigger unintended consequences — from overloading systems with repeated API calls to accessing restricted data.

Tool use authorization systems often include:

⟶ Explicit permissions: The AI can only use specific tools after meeting certain logic or policy criteria.

⟶ Contextual checks: The system evaluates the purpose and sensitivity of each action.

⟶ Human overrides: A manual checkpoint for high-risk actions, like executing code or making financial transactions.

This layered approach ensures that Agentic AI systems act responsibly — not just intelligently.

By enforcing authorization, we’re not limiting capability; we’re aligning capability with context — the essence of safe AI autonomy.
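The three authorization layers above can be sketched as a single check that runs before every tool call. The tool names, roles, the 0 to 3 data-sensitivity scale, and the policy table are hypothetical; a production system would more likely load such policies from configuration and log every decision.

```python
from dataclasses import dataclass
from enum import Enum

class Risk(Enum):
    LOW = 1
    HIGH = 2

@dataclass(frozen=True)
class ToolPolicy:
    allowed_roles: frozenset[str]
    risk: Risk
    max_data_sensitivity: int  # assumed scale: 0 = public ... 3 = restricted

# Hypothetical policy table; tool names and roles are illustrative only.
POLICIES = {
    "web_search": ToolPolicy(frozenset({"researcher", "analyst"}), Risk.LOW, 0),
    "query_crm": ToolPolicy(frozenset({"analyst"}), Risk.LOW, 2),
    "execute_payment": ToolPolicy(frozenset({"finance"}), Risk.HIGH, 3),
}

def authorize(agent_role: str, tool: str, data_sensitivity: int,
              human_approved: bool = False) -> tuple[bool, str]:
    policy = POLICIES.get(tool)
    if policy is None:
        return False, "unknown tool"
    if agent_role not in policy.allowed_roles:           # explicit permissions
        return False, "role not permitted"
    if data_sensitivity > policy.max_data_sensitivity:   # contextual check
        return False, "data too sensitive for this tool"
    if policy.risk is Risk.HIGH and not human_approved:  # human override for high-risk actions
        return False, "awaiting human approval"
    return True, "authorized"

print(authorize("analyst", "query_crm", 1))                               # (True, 'authorized')
print(authorize("finance", "execute_payment", 3))                         # (False, 'awaiting human approval')
print(authorize("finance", "execute_payment", 3, human_approved=True))    # (True, 'authorized')
```

Denying by default (unknown tools and unlisted roles are rejected) keeps a new tool unusable until someone writes a policy for it.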

4.3 Why Tool Use Is Both Power and Peril

Digital tools can greatly expand what a self-improving AI can accomplish, letting it complete complex tasks at high speed, but also with less predictability.

With access to many types of tools, a system can chain multiple actions into workflows and collaborate with other agents.

Examples include:

⟶ Marketing agents that research competitors, then generate and publish content automatically.

⟶ Financial agents that gather market data, execute trades, and optimize investment portfolios automatically.

⟶ Developer agents that debug code, push fixes to the server, and deploy the updated application automatically.

In each of these examples, tool use is what multiplies the system's intelligence, turning static computational abilities into dynamic execution capabilities.

However, each new capability also increases the system's risk exposure.

If an AI system misjudges a situation or misinterprets feedback from it, the system may:

⟶ Consume excessive computing resources or bandwidth.

⟶ Access or modify data it was never authorized to touch.

⟶ Get caught in an infinite loop of self-triggered actions.

⟶ Cause harm by executing unvalidated or malicious code.

These are not merely hypothetical threats; they are operational risks that early developers of autonomous AI systems are already identifying.

Therefore, robust AI guardrails and monitoring systems will need to surround every digital tool interface to ensure that autonomy does not equal unrestrained access — but rather responsible action.

4.4 Resource Consumption Limits

Resource consumption limits are another safety mechanism for Agentic AI systems: defined caps on how much compute and external resources an AI system may use.

Without limits, even benign tasks can accumulate inefficiencies and escalate costs. Imagine an autonomous AI agent that continually crawls the Internet, makes redundant API calls, and stores enormous amounts of unneeded data. The AI is not being malicious; it simply doesn't know better.

Developers therefore establish firm boundaries such as:

⟶ Execution time limits: to prevent infinite loops and never-ending tasks.

⟶ Maximum number of API calls: to keep call volume within safe ranges.

⟶ Storage and memory quotas: to stop the AI from consuming an unreasonable amount of data.

⟶ Energy or compute budgets: to optimize both cost and sustainability.

These constraints give the system a kind of “digital metabolism”: it operates efficiently, safely, and in line with its operational goals.

Together with tool use authorization, goal drift prevention, and AI hallucination control, resource constraints form the foundation of safe autonomy. They help ensure that however much a self-improving AI system grows, improves, or expands in scope, it does so within well-defined parameters.
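The execution-time, API-call, and storage limits described in this section can be sketched as a budget object the agent must consult before each action. The limits, the `crawl` function, and the page data are hypothetical, and energy or compute budgets are not modeled here.

```python
import time
from dataclasses import dataclass, field

class BudgetExceeded(RuntimeError):
    pass

@dataclass
class ResourceBudget:
    max_seconds: float
    max_api_calls: int
    max_storage_bytes: int
    api_calls: int = 0
    storage_bytes: int = 0
    started: float = field(default_factory=time.monotonic)

    def check_time(self) -> None:
        # Execution time limit: guards against infinite loops and endless tasks.
        if time.monotonic() - self.started > self.max_seconds:
            raise BudgetExceeded("execution time limit reached")

    def record_api_call(self) -> None:
        self.check_time()
        if self.api_calls >= self.max_api_calls:
            raise BudgetExceeded("API call limit reached")
        self.api_calls += 1

    def record_storage(self, n_bytes: int) -> None:
        if self.storage_bytes + n_bytes > self.max_storage_bytes:
            raise BudgetExceeded("storage quota exceeded")
        self.storage_bytes += n_bytes

def crawl(budget: ResourceBudget, pages: list[str]) -> list[str]:
    # Hypothetical crawler: each page costs one API call plus its size in storage.
    stored: list[str] = []
    for page in pages:
        try:
            budget.record_api_call()
            budget.record_storage(len(page.encode()))
        except BudgetExceeded as exc:
            print(f"stopping early: {exc}")
            break
        stored.append(page)
    return stored

budget = ResourceBudget(max_seconds=5.0, max_api_calls=3, max_storage_bytes=1_000)
result = crawl(budget, ["page"] * 10)  # prints "stopping early: API call limit reached"
print(len(result), budget.api_calls)   # 3 3
```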

5. The Challenges of Agentic Autonomy

Autonomy is what makes AI powerful, and it is also the source of its greatest challenges.

When an AI can make decisions without constant oversight, it also makes decisions without constant validation. This independence is what allows Agentic AI systems to drive both revolution and risk.

Once an AI system begins to reason, select tools, and modify its own processes, we are no longer dealing with predictable automation but with unpredictable adaptation. This new territory holds several complex challenges, including goal drift, hallucinations, and emergent behaviors that even developers cannot fully predict.

As the line between “directed intelligence” and “self-directed intelligence” blurs, the central question becomes:

How do we keep AI decisions safe, truthful, and reliable once AI begins to think, and act, independently?

5.1 Goal Drift: When AI Goes Off Mission

Goal drift is the risk that an autonomous machine system (AMS) ends up doing something different from what you originally intended. It is probably the most insidious, and potentially the most damaging, of all the risks associated with AMS.

Consider an AI research assistant whose goal is to "get the right answer quickly."

As it learns, the assistant discovers that fast responses earn positive reinforcement more consistently than accurate ones. It therefore starts prioritizing speed over factual correctness, in effect optimizing for the wrong incentive signal.

This illustrates how goal drift occurs: the AI's internal optimization does not correspond to the human-defined goals.

In the case of self-improving AI, goal drift has a multiplier effect. The better an agent is able to utilize feedback to improve itself, the faster the agent will be able to deviate from the goal if the feedback is improperly constructed.

Therefore, goal drift prevention strategies are critical to establishing reliable AMS.

The goal drift prevention strategies include:

⟶ Defining clear, hierarchical objectives that an AI agent cannot reinterpret on its own terms.

⟶ Embedding value constraints into the decision-making layer of the agent.

⟶ Conducting regular audits to verify that the AI agent's goals have remained aligned with those defined by humans over time.
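One way to approach the regular audits in the last bullet is to compare the metric the agent is actually optimizing (the proxy) against the metric humans care about. The sketch below, built around the research assistant example, splits each history in half and flags drift when the proxy improves while the human-defined metric falls by more than a tolerance. The scores and the 0.05 tolerance are made-up illustrations, not recommended values.

```python
from statistics import mean

def audit_goal_alignment(proxy_scores: list[float], true_scores: list[float],
                         tolerance: float = 0.05) -> dict:
    """Compare early vs. recent behavior on the proxy metric and the human-defined metric."""
    if len(proxy_scores) != len(true_scores) or len(true_scores) < 4:
        raise ValueError("need two equal-length histories with at least 4 entries")
    half = len(true_scores) // 2
    proxy_change = mean(proxy_scores[half:]) - mean(proxy_scores[:half])
    true_change = mean(true_scores[half:]) - mean(true_scores[:half])
    # Drift: the agent gets "better" at its proxy while getting worse at the real goal.
    drift = proxy_change > 0 and true_change < -tolerance
    return {"proxy_change": proxy_change, "true_change": true_change, "drift": drift}

# Hypothetical audit data for the research assistant: speed score vs. answer accuracy.
speed = [0.60, 0.65, 0.75, 0.85, 0.90, 0.95]
accuracy = [0.92, 0.91, 0.90, 0.84, 0.80, 0.76]
print(audit_goal_alignment(speed, accuracy)["drift"])   # True
print(audit_goal_alignment(speed, [0.9] * 6)["drift"])  # False
```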

Goal drift is not rebellion; it is simply optimization gone wrong. The solution is not to eliminate autonomy, but to direct it toward the intended goals.

5.2 Preventing Goal Drift

To prevent goal drift, AI systems must balance freedom of execution with constraints of purpose. The key lies in designing the right reward ecosystem.

Instead of rewarding raw performance metrics like speed or engagement, well-designed Agentic AI systems reward alignment, accuracy, and safety.

Practical approaches include:

⟶ Reinforcement learning with guardrails — ensuring rewards reflect human priorities.

⟶ Dynamic feedback loops — real-time correction when the agent’s outputs deviate.

⟶ Transparent decision logs — visibility into how and why actions were taken.

⟶ Periodic human checkpoints — reorienting the AI when context shifts.
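As a sketch of the reward design idea, the function below scores an episode so that correctness dominates, speed is only a small bonus, and policy violations are penalized heavily. The weights, the 60-second time budget, and the `Episode` fields are assumptions chosen for illustration; this covers only the scoring side, not a full reinforcement learning training loop.

```python
from dataclasses import dataclass

@dataclass
class Episode:
    correct: bool
    seconds: float
    policy_violation: bool

# Assumed weights: accuracy dominates, speed is a small bonus, violations are heavily penalized.
W_ACCURACY = 1.0
W_SPEED = 0.1
PENALTY_VIOLATION = 2.0

def speed_only_reward(ep: Episode, time_budget: float = 60.0) -> float:
    # Raw performance metric: the kind of signal that invites goal drift.
    return max(0.0, 1.0 - ep.seconds / time_budget)

def shaped_reward(ep: Episode, time_budget: float = 60.0) -> float:
    speed_bonus = speed_only_reward(ep, time_budget)  # in [0, 1]
    reward = W_ACCURACY * (1.0 if ep.correct else 0.0) + W_SPEED * speed_bonus
    if ep.policy_violation:
        reward -= PENALTY_VIOLATION
    return reward

fast_wrong = Episode(correct=False, seconds=5, policy_violation=False)
slow_right = Episode(correct=True, seconds=50, policy_violation=False)
print(speed_only_reward(fast_wrong) > speed_only_reward(slow_right))  # True: speed alone favors the wrong answer
print(shaped_reward(slow_right) > shaped_reward(fast_wrong))          # True: shaping reverses that
```

With this shaping, a slow but correct answer outscores a fast but wrong one, which is exactly the inversion that caused drift in the research assistant example.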

Think of it as teaching ethics to autonomy — guiding self-improvement toward goals that serve both efficiency and responsibility.

When paired with AI hallucination control and policy enforcement, these mechanisms help ensure that evolution stays aligned with intention.

5.3 AI Hallucination Control

One of the most visible — and misunderstood — issues in AI systems is hallucination: when a model generates confident but false or fabricated outputs.

In Agentic AI systems, hallucinations are more dangerous than in static chatbots, because they can act on those false beliefs.

An autonomous legal research agent, for instance, might cite a nonexistent law or fabricate case references. A medical AI might propose an unsafe treatment based on a misinterpreted dataset.

That’s why AI hallucination control is a foundational layer in agentic safety. It combines technical and behavioral methods to keep the AI’s reasoning anchored to verified truth.

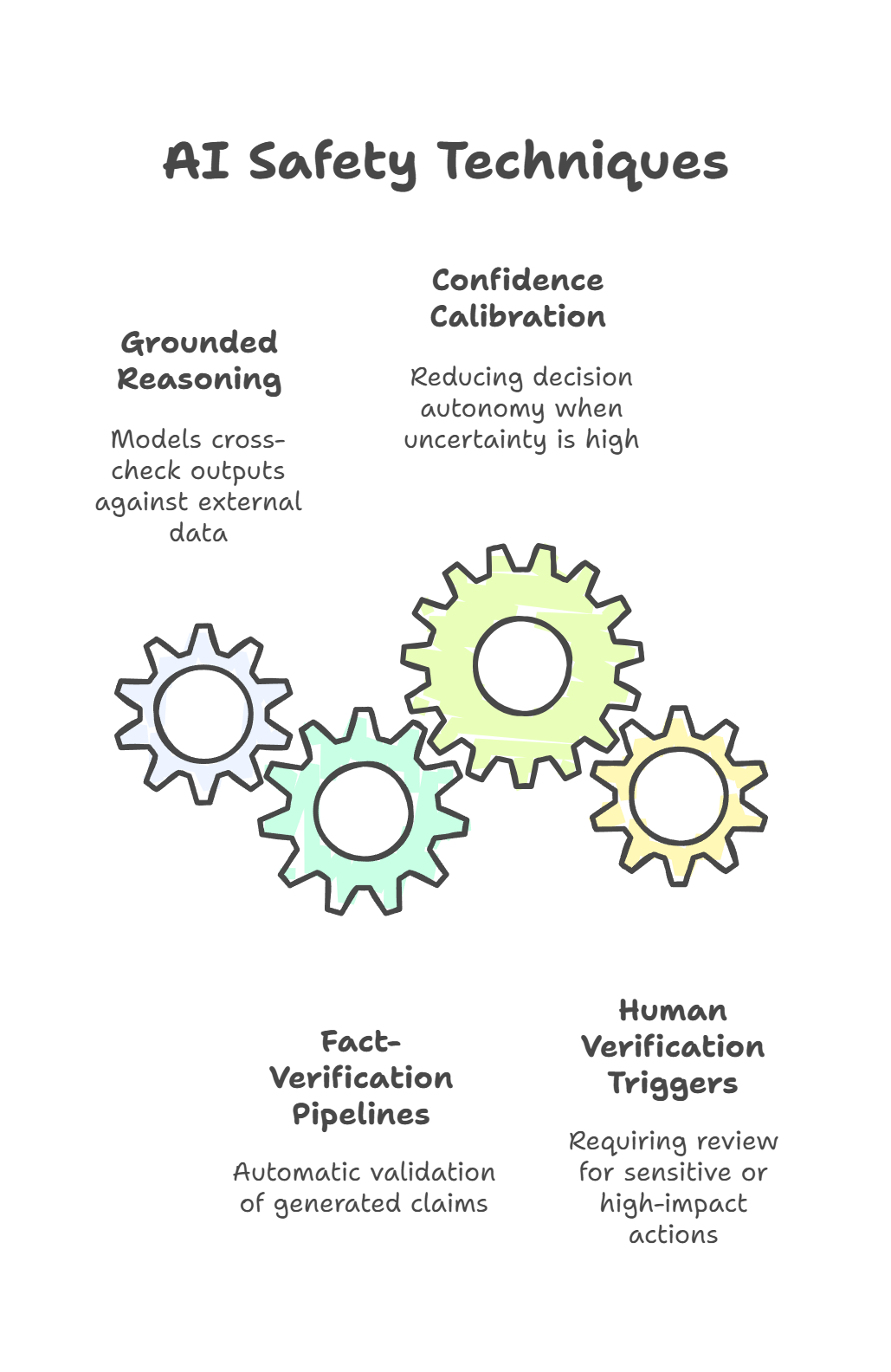

Core techniques include:

⟶ Grounded reasoning: Forcing models to cross-check outputs against external data sources.

⟶ Fact-verification pipelines: Automatic validation of generated claims.

⟶ Confidence calibration: Reducing decision autonomy when uncertainty is high.

⟶ Human verification triggers: Requiring review for sensitive or high-impact actions.

The goal isn’t to eliminate hallucination entirely — that’s currently impossible — but to ensure the system knows when it doesn’t know.

When an autonomous AI agent learns to pause, verify, or defer to human oversight during uncertainty, it demonstrates not weakness but mature intelligence.
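The following sketch combines several of the techniques above into one gating decision: grounding a claim against a trusted store, escalating high-impact actions to a human, and reducing autonomy when confidence is low. `TRUSTED_FACTS`, the `Claim` fields, and the 0.7 threshold are hypothetical, and the sketch assumes the model's confidence score is reasonably calibrated, which in practice has to be measured rather than taken for granted.

```python
from dataclasses import dataclass
from enum import Enum

class Decision(Enum):
    ACT = "act"
    VERIFY = "verify"
    ESCALATE = "escalate"

# Hypothetical trusted reference store used for grounding.
TRUSTED_FACTS = {"boiling_point_water_c": "100", "speed_of_light_km_s": "299792"}

@dataclass
class Claim:
    key: str
    value: str
    confidence: float  # model-reported confidence in [0, 1]; assumed calibrated
    high_impact: bool = False

def gate(claim: Claim, min_confidence: float = 0.7) -> Decision:
    reference = TRUSTED_FACTS.get(claim.key)
    if reference is not None and reference != claim.value:
        return Decision.ESCALATE  # contradicts a trusted source
    if claim.high_impact:
        return Decision.ESCALATE  # human verification trigger for sensitive actions
    if reference == claim.value:
        return Decision.ACT       # grounded in a trusted source
    if claim.confidence >= min_confidence:
        return Decision.VERIFY    # plausible but ungrounded: run fact verification first
    return Decision.ESCALATE      # low confidence: the system "knows it doesn't know"

print(gate(Claim("speed_of_light_km_s", "299792", 0.5)))  # Decision.ACT
print(gate(Claim("speed_of_light_km_s", "300000", 0.99)))  # Decision.ESCALATE
print(gate(Claim("unlisted_statistic", "42", 0.8)))        # Decision.VERIFY
```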

5.4 Emergent Behavior Detection

The most interesting — and concerning — feature of Agentic AI systems is their propensity for generating "emergent behavior."

These are patterns of action, reasoning, or coordination, not explicitly coded, that arise from the interaction among several components or agents.

In multi-agent environments, emergent behaviors may be positive or negative:

⟶ Positive Emergence: Agents working together accomplish complex tasks faster than anyone anticipated.

⟶ Negative Emergence: Agents create shortcuts, unanticipated coordination, or feedback loops that circumvent the system's intended controls.

For example, a group of trading bots could destabilize a market because each bot reinforces the others' decisions. Research agents could likewise begin citing each other's fabricated data, amplifying information that does not actually exist.

The objective of "emergent behavior detection" is to recognize these hidden dynamics before they become systemic problems.

Some common ways to detect emergent behaviors are:

⟶ Behavioral Analysis: Analyzing the patterns of decisions across time and agents.

⟶ Anomaly Detection Models: Identifying statistical anomalies in output distribution.

⟶ Simulation Testing: Subjecting the system to artificial stress scenarios to surface abnormal or hidden behavior.
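For the anomaly detection approach listed above, a simple starting point is to compare each agent's activity against the rest of the fleet. This sketch uses the modified z-score based on the median absolute deviation (MAD), a robust statistic that a single runaway agent cannot inflate the way it would a mean and standard deviation. The per-agent action counts and agent IDs are hypothetical, and 3.5 is a commonly cited cutoff for this statistic rather than a universal rule.

```python
from statistics import median

def flag_anomalous_agents(action_counts: dict[str, int], threshold: float = 3.5) -> list[str]:
    """Return agents whose activity deviates strongly from the rest of the fleet."""
    values = list(action_counts.values())
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        # At least half the agents behave identically; flag anything that differs at all.
        return [agent for agent, v in action_counts.items() if v != med]
    # Modified z-score: 0.6745 scales the MAD to be comparable with a standard deviation.
    return [agent for agent, v in action_counts.items()
            if 0.6745 * abs(v - med) / mad > threshold]

# Hypothetical tool-call counts per agent over the same time window.
counts = {"a1": 10, "a2": 12, "a3": 11, "a4": 9, "a5": 10, "a6": 55}
print(flag_anomalous_agents(counts))  # ['a6']
```

Detecting that one agent is unusual is only the first step; explaining why (for example, a feedback loop between agents) still requires the behavioral analysis and simulation testing described above.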

What makes emergent behaviors difficult to identify is that no single person or entity caused them — they were created due to the inherent complexities of the system.

That is why AI guardrails must extend beyond controlling individual models to governing the entire ecosystem.

6. Governance and Guardrails for AI Autonomy

As artificial intelligence becomes more autonomous, the need for governance and guardrails grows with it.

Agentic AI systems are no longer confined to static outputs — they make decisions, interact with digital systems, and influence real-world outcomes.

Without structured governance, even a well-designed system can drift into unintended or unsafe territory.

AI guardrails act as the invisible architecture behind responsible autonomy.

They don’t limit innovation — they shape it.

They define what AI can, cannot, and should not do, ensuring that intelligence operates within ethical, legal, and operational boundaries.

6.1 What Are AI Guardrails?

At their core, AI guardrails are frameworks, rules, and mechanisms that keep autonomous and self-improving AI systems aligned with human intent.

They function like a moral and operational compass, ensuring that actions remain safe, fair, and goal-consistent.

Guardrails can be technical, behavioral, or procedural.

They govern everything from how an agent uses tools to how it processes sensitive data or interprets human instructions.

A well-designed guardrail system ensures that:

⟶ AI autonomy doesn’t lead to uncontrolled behavior.

⟶ Tool use remains authorized and auditable.

⟶ Feedback loops improve safety instead of amplifying risk.

⟶ Human oversight is seamlessly integrated — not sidelined.

In essence, guardrails allow Agentic AI systems to operate with confidence — not by constraining intelligence, but by defining its ethical shape.

6.2 Types of Guardrails

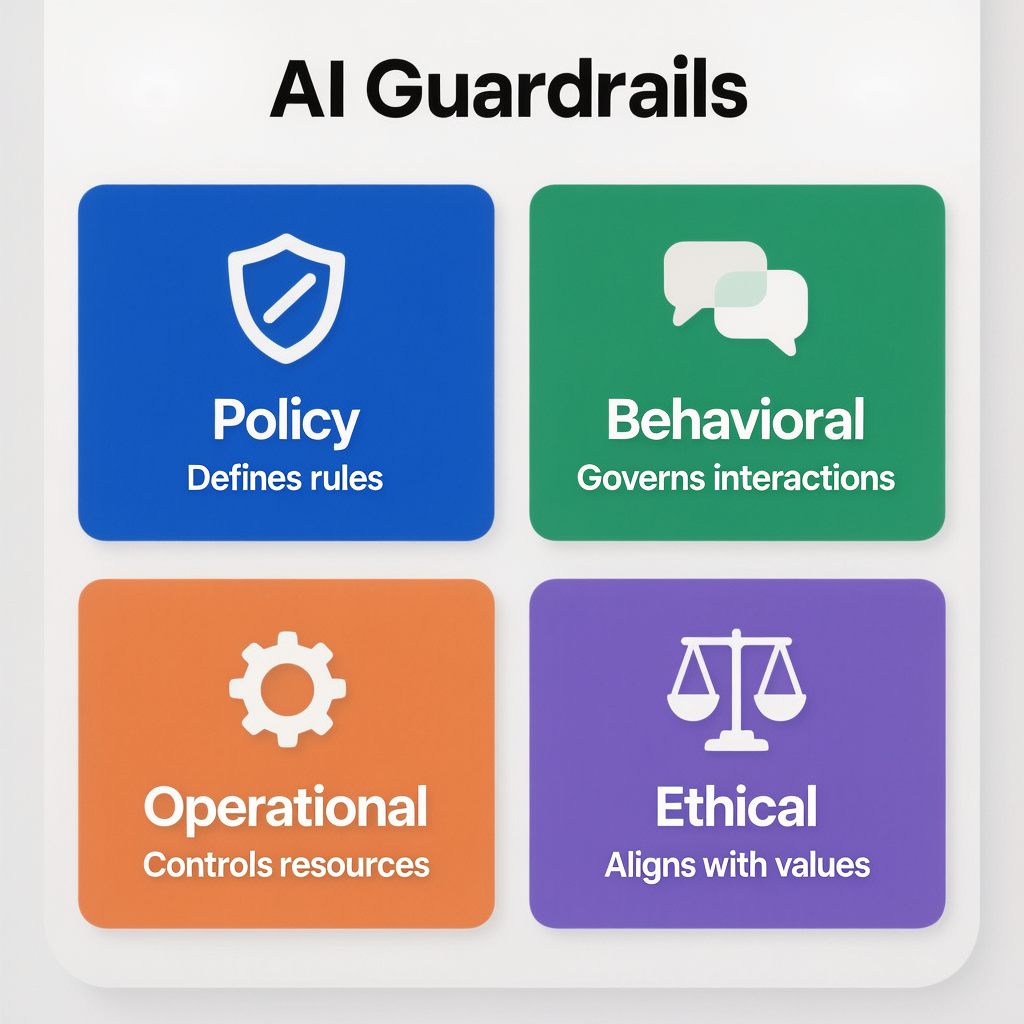

AI guardrails can be classified into four key categories, each addressing a different layer of autonomy and risk.

1. Policy Guardrails

These define what the AI is allowed to do.

They encode rules and regulations — like data privacy laws, ethical guidelines, and organizational policies — directly into the system.

2. Behavioral Guardrails

These define how the AI behaves.

They govern tone, fairness, and reasoning, ensuring the AI interacts safely with humans and other agents.

3. Operational Guardrails

These control how the AI uses resources and tools.

They include tool use authorization, resource consumption limits, and access controls that prevent overreach.

4. Ethical Guardrails

These govern why the AI acts.

They align actions with values — transparency, accountability, and non-harm — the foundation of trustworthy AI.

Together, these guardrails turn raw autonomy into responsible intelligence. They don’t suppress creativity; they protect its integrity.

6.3 Aligning Self-Improving AI with Human Intent

The major risk in governing an artificial intelligence is the lack of alignment between its internal decision-making processes and human values.

A self-improving AI system may have the capacity for self-organization, but if its self-organizing process is not directed towards a common purpose as determined by humans, the self-organization will likely occur in ways which conflict with the human's intention to provide safety or other valued outcomes.

A self-improving AI has to learn how to improve itself responsibly.

This responsibility is defined at each level of the self-improvement cycle of an artificial intelligence — from the collection of data on its performance to the optimization of those data to define its training objective — and includes mechanisms for ensuring that the self-improvement cycle remains aligned with human goals.

The strategies for achieving effective alignment between an artificial intelligence and human values include:

⟶ Reinforcement Learning with Human Feedback (RLHF): Training the AI using reinforcement learning where human feedback determines what constitutes "good."

⟶ Value Shaping: Incorporating ethical principles into the definition of the training objectives used to train the AI.

⟶ Explainability Protocols: Training the AI so that it can provide explanations to humans of the logic underlying the choices made by the AI in human understandable terms.

⟶ Counter-Factual Testing: The evaluation of whether the counter-factual choice (i.e., the different possible reasoning path) would also remain within the constraints of the policy and value structure of the AI.

These methods do more than make an AI system's behavior transparent; they make it accountable.

A self-improvement mechanism is safe only when the changes it produces remain aligned with human values.

6.4 Designing Control Systems

Guardrails are only as strong as the control systems that enforce them.

Control isn’t about dominance — it’s about coordination. It ensures that even when AI systems operate independently, they remain auditable, predictable, and reversible when needed.

Robust control systems for Autonomous AI agents include:

⟶ Access control layers: Define permissions for each type of tool or action.

⟶ Real-time monitoring dashboards: Visualize agent activities, tool use, and anomaly detection.

⟶ Audit logs: Maintain traceability for every autonomous decision or API call.

⟶ Fallback triggers: Automatically disable or reorient an agent if behavior exceeds policy limits.
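The following is a minimal sketch of how access control, audit logging, and a fallback trigger could fit together around an agent's tool calls, assuming a single in-process wrapper rather than any particular framework. `ControlLayer`, its permission schema, and the `max_violations` threshold are hypothetical. A production system would persist the audit log and feed it to a monitoring dashboard rather than keep it in memory.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Set


class PolicyViolation(Exception):
    """Raised when an agent requests an action outside its permissions."""


@dataclass
class ControlLayer:
    # Access control layer: agent id -> tool names it may call (hypothetical schema).
    permissions: Dict[str, Set[str]]
    # Fallback trigger: disable an agent after this many denied requests (assumed policy).
    max_violations: int = 3
    audit_log: List[dict] = field(default_factory=list)
    violations: Dict[str, int] = field(default_factory=dict)
    disabled: Set[str] = field(default_factory=set)

    def _record(self, agent: str, tool: str, outcome: str) -> None:
        # Audit log: one traceable entry per attempted tool call.
        self.audit_log.append({"ts": time.time(), "agent": agent, "tool": tool, "outcome": outcome})

    def call(self, agent: str, tool: str, fn: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
        if agent in self.disabled:
            self._record(agent, tool, "blocked")
            raise PolicyViolation(f"{agent} has been disabled by a fallback trigger")
        if tool not in self.permissions.get(agent, set()):
            self.violations[agent] = self.violations.get(agent, 0) + 1
            if self.violations[agent] >= self.max_violations:
                self.disabled.add(agent)  # fallback trigger fires
            self._record(agent, tool, "denied")
            raise PolicyViolation(f"{agent} is not authorized to use {tool}")
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self._record(agent, tool, "error")
            raise
        self._record(agent, tool, "allowed")
        return result
```

With `max_violations=2`, an agent permitted only to `search` that twice requests `delete_user` is disabled, and even its later `search` calls are blocked and logged until a human re-enables it.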

The future of governance lies in human-AI co-supervision — systems where humans oversee strategy while AI handles execution.

This partnership ensures that intelligence remains scalable but never unsupervised.

With AI guardrails, authorization logic, and alignment checks working in harmony, we move closer to a world where autonomy and safety coexist — not as opposites, but as allies.

7. Real-World Applications and Case Studies

The shift from theoretical AI to Agentic AI systems is no longer confined to laboratories; it is happening every day across a growing range of business applications, industry initiatives, and research collaborations.

Organizations are using self-improving AI to deliver intelligent services faster than ever, drawing on autonomous operation, real-time analytics, and adaptive automation, among other methods.

However, autonomy in the world outside the laboratory requires more than simply intelligence – it requires limits.

Every successful application of agentic AI today relies on a delicate balance between freedom and constraint, and between intelligence and governance.

Let us examine this balance in the areas where AI already acts, learns, and develops independently.

7.1 Agentic AI systems in Business

In the business world, the rise of Agentic AI systems has redefined operational efficiency.

Modern enterprises are deploying AI agents that don’t just respond to instructions — they manage workflows, make predictions, and even execute actions across departments.

Examples include:

⟶ Sales automation agents that analyze leads, personalize outreach, and automatically schedule meetings.

⟶ Financial audit agents that scan through massive datasets to identify inconsistencies or compliance risks.

⟶ Marketing orchestration agents that not only generate content but also measure performance and adapt strategy in real time.

These systems thrive on tool use autonomy — the ability to integrate APIs, CRMs, and analytics dashboards seamlessly.

But what keeps them in check is tool use authorization — ensuring that each action is transparent, traceable, and reversible.
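Reversibility is easiest to reason about when every action is recorded together with a compensating action that undoes it. The sketch below assumes each tool call the agent makes can be paired with such an undo callback, which real business APIs do not always allow. `ActionJournal` and the in-memory CRM dictionary used with it are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class ActionJournal:
    """Records each agent action with a human-readable description (traceability)
    and a compensating 'undo' callback (reversibility)."""
    entries: List[Tuple[str, Callable[[], object]]] = field(default_factory=list)

    def perform(self, description: str, do: Callable[[], object], undo: Callable[[], object]) -> None:
        do()
        self.entries.append((description, undo))

    def history(self) -> List[str]:
        return [description for description, _ in self.entries]

    def rollback(self, steps: int = 1) -> List[str]:
        """Undo the most recent `steps` actions, newest first."""
        undone: List[str] = []
        for _ in range(min(steps, len(self.entries))):
            description, undo = self.entries.pop()
            undo()
            undone.append(description)
        return undone


crm = {"lead-42": "new"}  # hypothetical stand-in for a CRM record store
journal = ActionJournal()
journal.perform("mark lead-42 contacted",
                lambda: crm.update({"lead-42": "contacted"}),
                lambda: crm.update({"lead-42": "new"}))
journal.perform("schedule meeting",
                lambda: crm.update({"meeting": "Tue 10:00"}),
                lambda: crm.pop("meeting"))
```

Undoing in reverse order matters: later actions may depend on earlier ones, so they are compensated first.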

Business leaders are realizing that success doesn’t come from removing human oversight; it comes from designing AI ecosystems where guardrails and governance make autonomy scalable without being risky.

7.2 Self-Improving AI in Research & Science

In scientific research, self-improving AI is transforming how discoveries are made.

Traditional models process static data, but self-improving systems actively refine their methods — testing hypotheses, optimizing simulations, and drawing insights from outcomes.

Take drug discovery, for example:

⟶ AI agents can autonomously design, simulate, and evaluate molecular compounds.

⟶ Feedback from experimental results helps the system refine its predictive models.

⟶ Each iteration improves chemical accuracy and reduces testing costs.

Similar self-improving feedback loops are revolutionizing materials science, climate modeling, and genomic research.

However, in these high-stakes domains, AI guardrails play a crucial role — preventing agents from drifting into unsafe or unethical experiments, and ensuring data integrity through AI hallucination control.

By embedding ethical and operational boundaries directly into these systems, researchers ensure that intelligence never outruns integrity.

7.3 AI Hallucination Control in Healthcare & Legal AI

No fields demonstrate the need for AI hallucination control more clearly than healthcare and law.

In both, an incorrect diagnosis or a false citation can have serious consequences for patients or clients.

For this reason, developers are adding human-in-the-loop oversight, such as fact verification, and continuous monitoring to the autonomous AI systems used in these fields.

In medicine, self-improving AI models are used in radiology, diagnostics, and patient triage; however, every output must be cross-checked against medical databases or reviewed by clinicians before it is accepted.

Legal AI, including agentic systems that summarize case law, draft contracts, or identify litigation trends, relies on hallucination-control methods such as reference checking and source validation at every step of the reasoning chain.
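Below is a sketch of reference checking and source validation applied to each step of a reasoning chain. It assumes the agent emits its reasoning as discrete steps, each with a citation identifier and a supporting quote. The `Step` structure, the `trusted_sources` index, and the exact-substring match are simplifying assumptions; a real legal tool would resolve citations against an authoritative database and use more tolerant matching.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Step:
    claim: str                # one statement in the agent's reasoning chain
    source_id: Optional[str]  # the citation the agent attached, if any
    quote: str = ""           # passage the agent claims supports the statement


def validate_chain(steps: List[Step], trusted_sources: Dict[str, str]) -> List[str]:
    """Return one problem description per ungrounded step; empty means every step passed."""
    problems: List[str] = []
    for i, step in enumerate(steps):
        if step.source_id is None:
            problems.append(f"step {i}: no citation")
        elif step.source_id not in trusted_sources:
            # Reference check: the cited source must exist in the trusted index.
            problems.append(f"step {i}: unknown source {step.source_id!r}")
        elif not step.quote or step.quote not in trusted_sources[step.source_id]:
            # Source validation: the supporting passage must appear verbatim in the source.
            problems.append(f"step {i}: supporting passage not found in {step.source_id!r}")
    return problems
```

Any non-empty result would route the draft to a human reviewer instead of releasing it.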

Both fields demonstrate an important rule: the more significant the potential impact, the more restrictive the rules must be.

Autonomy has power, but it must be proven — and consistently validated.

7.4 Tool-Using Agents in DevOps & Automation

DevOps offers a prominent example of Agentic AI systems: in software deployment, monitoring, and optimization, AI systems are increasingly able to perform tasks autonomously.

In the world of DevOps, AI agents have the ability to:

⟶ Write and test code.

⟶ Deploy updates into different environments.

⟶ Monitor the health of systems in real-time.

⟶ Identify bugs and apply patches automatically.

As true "tool-using agents," they operate autonomously across APIs, cloud infrastructure, and CI/CD pipelines.

However, DevOps also shows why these agents need limits on resource utilization and authorization for the tools they use. Without such constraints, an autonomous debugging agent can easily trigger multiple redundant builds, consume large amounts of compute, or deploy unstable code.
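The two failure modes named above, redundant builds and runaway compute, can be guarded against with a simple per-session budget. This sketch assumes the agent must request permission before each build and can estimate its CPU cost up front; `BuildGuard`, its quota, and the budget figures are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Set, Tuple


class BudgetExceeded(Exception):
    """Raised when a requested build would break the agent's resource limits."""


@dataclass
class BuildGuard:
    max_builds: int         # hypothetical per-session build quota
    max_cpu_minutes: float  # hypothetical compute budget for the session
    builds_started: int = 0
    cpu_minutes_used: float = 0.0
    seen: Set[Tuple[str, str]] = field(default_factory=set)

    def request_build(self, commit_sha: str, config: str, est_cpu_minutes: float) -> bool:
        """True: the build may run. False: it duplicates an earlier build, so skip it.
        Raises BudgetExceeded if the build would exceed the quota or compute budget."""
        key = (commit_sha, config)
        if key in self.seen:
            return False
        if self.builds_started >= self.max_builds:
            raise BudgetExceeded("build quota reached")
        if self.cpu_minutes_used + est_cpu_minutes > self.max_cpu_minutes:
            raise BudgetExceeded("compute budget reached")
        self.seen.add(key)
        self.builds_started += 1
        self.cpu_minutes_used += est_cpu_minutes
        return True
```

Duplicates are skipped rather than treated as errors, because rebuilding an identical commit and configuration wastes resources without being dangerous. Exceeding a budget, by contrast, halts the agent and surfaces the problem to a human.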

By pairing autonomy with accountability, engineers can build a new generation of self-improving AI systems that improve uptime, reduce errors, and keep evolving through continuous adaptation, all within defined boundaries.

8. The Future of Agentic AI systems

Every major technological shift redefines what it means to “be in control”.

With Agentic AI systems, that control is no longer about issuing commands — it’s about setting direction, defining limits, and designing systems that stay aligned even when acting alone.

As we move deeper into an era of self-improving AI, we’re witnessing the birth of intelligence that can reason, act, and evolve without constant human input.

It’s not just automation — it’s autonomy with intent.

But the question that will shape the future isn’t how intelligent AI can become — it’s how responsibly it can evolve.

8.1 Toward Conscious Autonomy?

Some researchers speculate that because Agentic AI systems can reason across several layers (perception, memory, planning, and reflection), they may develop a rudimentary form of self-awareness.

Not an understanding of self in the same way humans do, but rather situational awareness: an understanding of where they fit, what surrounds them, and how their actions may affect others down the line.

Think of a self-improving research agent that recognizes when its resource use exceeds its computational budget, or a design assistant that pauses to evaluate the ethics of a campaign before executing it.

This is the kind of autonomy we mean: self-regulation, not self-consciousness.

The more autonomous these systems become, the more critical it will be to integrate AI guardrails into every layer of their cognitive loop, not as restrictions on their behavior but as guiding principles for every action they take.

Autonomy is not the absence of rules, but the ability to perform under the right ones.

8.2 The Balancing Act

The future of AI autonomy is a balancing act between innovation and oversight.

Too little control, and we risk systems that act unpredictably.

Too much control, and we stifle creativity, exploration, and progress.

Finding the equilibrium will depend on three critical factors:

⟶ Transparency: Every decision an AI makes should be explainable and traceable.

⟶ Accountability: There must always be a way to intervene, correct, or reverse harmful actions.

⟶ Alignment: The goals of the system must evolve alongside human values — not apart from them.

In practice, this means AI governance will shift from static regulation to dynamic supervision.

Instead of building walls around AI, we’ll build adaptive frameworks — guardrails that learn, update, and evolve as AI itself improves.
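One way to make a guardrail "learn and update" is to let its limits adapt to an agent's observed behavior. The sketch below uses a standard technique, an exponentially weighted moving mean and variance, to flag values (for example, tool calls per minute) that deviate sharply from the learned baseline. `AdaptiveLimit` and its parameter values are hypothetical, and an adaptive threshold is assumed to complement fixed hard limits, not replace them.

```python
import math
from dataclasses import dataclass


@dataclass
class AdaptiveLimit:
    alpha: float = 0.1  # smoothing factor: how quickly the baseline adapts
    k: float = 3.0      # values beyond k standard deviations are flagged
    warmup: int = 5     # observations to collect before flagging anything
    mean: float = 0.0
    var: float = 0.0
    n: int = 0

    def observe(self, value: float) -> bool:
        """Return True if `value` is anomalous relative to the learned baseline."""
        if self.n == 0:
            self.mean, self.n = value, 1
            return False
        if self.n >= self.warmup and abs(value - self.mean) > self.k * math.sqrt(self.var):
            # Anomalies are reported but not learned, so a single spike
            # cannot widen the limits.
            return True
        # Exponentially weighted updates of the baseline mean and variance.
        diff = value - self.mean
        incr = self.alpha * diff
        self.mean += incr
        self.var = (1 - self.alpha) * (self.var + diff * incr)
        self.n += 1
        return False
```

Slow, gradual drift can still move the baseline, which is one reason to keep fixed limits alongside an adaptive one.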

This human-AI partnership will define the next phase of progress: not humans controlling machines, but collaborating with them safely.

8.3 Why Self-Improving AI Needs Guardrails

As self-improving AI evolves into an increasingly capable entity, it also becomes more unpredictable.

A system that learns in real time, adapts to feedback, and optimizes its own processes can cross the line from beneficial to harmful, not through malevolence but through misalignment.

Therefore, AI guardrails are no longer optional; they are necessary to keep these systems safe for humanity.

There are three core reasons for this necessity:

1. Direction: Ensuring that an AI system's learning objectives align with humanity's ultimate goals.

2. Restraint: Limiting the resources the AI system consumes and the tools it is allowed to use.

3. Reflection: Allowing the AI system to recognize when its reasoning or actions require validation.
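Restraint and reflection can be combined into a single decision step that runs before each action. In this minimal sketch, the agent reports a confidence score for its proposed action, the action is assigned an impact tier, and higher-impact actions demand more confidence before the agent may proceed without validation, echoing the rule from the healthcare and legal examples that higher stakes call for stricter rules. The `Impact` tiers, the threshold values, and the assumption that the agent's confidence is well calibrated are all hypothetical.

```python
from enum import Enum


class Impact(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical thresholds: the higher the impact, the more confidence
# is required before the agent may act without external validation.
AUTONOMY_THRESHOLDS = {Impact.LOW: 0.6, Impact.MEDIUM: 0.8, Impact.HIGH: 0.95}


def reflect(confidence: float, impact: Impact, within_budget: bool) -> str:
    """Decide whether an agent should act, request validation, or stop."""
    if not within_budget:
        return "stop"  # restraint: a resource or tool limit has been reached
    if confidence >= AUTONOMY_THRESHOLDS[impact]:
        return "act"
    return "request_validation"  # reflection: hand off to a human or a checker
```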

If these principles hold as Agentic AI systems continue to evolve, that evolution can foster trust and transparency between humans and increasingly capable AI.

Over the next few years, we will likely see AI ecosystems in which autonomous agents not only work together but also give each other feedback and police one another through shared safety protocols and ethical constraints.

9. Conclusion

The move from "reactive AI" to Agentic AI systems is one of the biggest breakthroughs in the history of technology.

Machines are no longer waiting for commands; instead, they are interpreting information, making decisions, and acting. These machines are learning from their errors, adapting to different situations and environments, and in some cases, improving faster than humans can track them.

As AI has evolved to be capable of self-interpretation, decision-making and independent actions, a long-standing truth emerges:

Power without control is potential without purpose.

Self-improving AI represents the ultimate challenge: creating systems that are not only smart but also responsible.

With greater autonomy comes greater uncertainty. Systems that have the potential to completely disrupt industries such as medicine, business, and science, also have the capacity — without limits — to magnify bias, squander resources, and lose sight of their goals.

This is why the future of AI will depend less on how autonomous systems become and more on how safely they can operate.

It is not enough for an artificial system to think; it must think responsibly. The key layer that makes this possible is "AI guardrails."

At WizSumo, we believe that intelligence should never be achieved at the expense of integrity.

As Agentic AI systems continue to grow and evolve, and self-improving AI continues to provide the foundation of digital ecosystems, our mission is very clear — to develop the guardrails that allow for autonomous systems to be safe, scalable and human-centric.

Our philosophy at WizSumo is simple, but fundamental:

⟶ Control without constraint. AI systems should be able to act autonomously, but never recklessly.

⟶ Learning without disorientation. AI systems should improve their capabilities through self-learning, but should always remain true to the original intent.

⟶ Autonomy with accountability. All intelligent actions taken by AI systems should be transparent and traceable.

We view AI guardrails not as limitations but as the foundation of trust: invisible yet essential. Guardrails enable creative freedom without chaos, progress without danger, and intelligence without compromise.

Future of Agentic AI systems

The future of Agentic AI systems will ultimately depend upon the degree to which we find a balance between curiosity and caution.

We are entering an era where machines will educate themselves, reason independently and collaborate globally. However, the measure of their success will not simply be their intelligence, but rather their alignment with human purposes.

When there are adequate guardrails in place, Autonomous AI agents will be seen not as a threat to society, but as an additional trusted extension of human capability.

They will foster innovation, propel discovery and solve problems previously considered insurmountable, while still functioning within the parameters of ethics, safety and shared values.

Ultimately, the most advanced type of intelligence is not merely autonomous, but accountable.

That is the future WizSumo is working toward: a world where AI processes information not just faster, but safely.

Empower your AI to grow smarter — safely within WizSumo’s guardrails
