Legal & Compliance Guardrails: Building AI That Respects Law, Ethics, and Humanity



1. Introduction — When Innovation Meets Legal Compliance in AI

Imagine an ambitious AI startup called MindScale that, in 2024, became the poster child for innovation (and irresponsibility).

The company's generative model was trained on millions of online images and documents and produced stunningly realistic visuals for marketing clients. Then it crashed back to earth: a group of artists sued for copyright infringement, alleging that MindScale had used their work without permission. Soon afterward, a European regulator opened an inquiry into its data protection practices, citing possible GDPR violations, and questions arose under U.S. privacy statutes as well.

What began as a breakthrough quickly turned into a cautionary tale — a reminder that innovation without legal guardrails can become a liability faster than any algorithm can be taught.

In an age of rapidly evolving technologies, artificial intelligence is testing the limits of existing law. From copyright and trademark infringement to data misuse, bias, and opaque decision-making, AI systems face situations where legal compliance in AI is no longer optional but existential.

Every new model, dataset, and deployment raises the same question: can this system be trusted not only to work, but to comply? That is where AI legal guardrails come in. They are not obstructions but design principles that build ethics, regulation, and accountability into the AI development lifecycle, so that creativity coexists with compliance and progress with principle.

Legal compliance in AI is not bureaucratic red tape; it is trust architecture. Just as a seat belt doesn't stop you from driving fast, legal and ethical guardrails don't inhibit innovation. They make it sustainable.

What This Blog Covers

This blog explores how legal and compliance guardrails shape the future of responsible AI.

It will examine:

⟶ How copyright infringement and trademark violations arise in AI systems.

⟶ The role of data protection laws like GDPR, CCPA, and HIPAA.

⟶ How AI policy frameworks and the EU AI Act are redefining compliance.

⟶ The growing importance of sector-specific regulations (healthcare, finance, education).

⟶ The emerging principles of Indigenous Data Sovereignty.

⟶ Real-world case studies showing both failures and successful implementations of AI guardrails around the world.

Together, we’ll uncover why legal compliance in AI is not merely about avoiding lawsuits — it’s about designing intelligence that respects law, ethics, and humanity itself.

2. The Problem: When AI Guardrails Are Missing

Artificial intelligence has advanced faster than society's ability to govern it.

AI holds great promise for efficiency and innovation, but its record of complying with existing law is poor. Most companies do not set out to break the law. They stumble into violations by putting speed ahead of structure and ambition ahead of accountability, ignoring the very AI guardrails designed to protect creators, consumers, and citizens.

So, what is the fundamental issue?

The core problem is that AI models are being built faster than legal frameworks can be put in place. Developers therefore find themselves in a "zone of ambiguity" where copyright, data protection laws, and ethical obligations collide, overlap, and often conflict.

Three recurring fault lines show where missing legal guardrails create real-world risk.

2.1. Copyright and Trademark Violations: The Hidden Cost of Speed

In the race to build powerful models, data often becomes collateral damage. Many AI systems ingest publicly available data scraped from the internet: text, images, music, and code that belong to other people. What starts as a technical shortcut can quickly turn into copyright infringement or false endorsement under trademark law.

Consider the explosion of generative models, some of which have reproduced copyrighted images or text in their outputs, exposing their developers to infringement claims under U.S. and international intellectual property law. Others have generated branded logos or celebrity likenesses, prompting trademark and right-of-publicity claims.

These examples point to a truth many prefer to ignore: in AI, data isn't free; it's regulated.

When AI developers fail to build legal compliance into their AI pipelines, they expose their companies to lawsuits, fines, and reputational harm. Proper AI guardrails, such as licensing agreements, data audits, and clear intellectual property ownership, are not bureaucratic roadblocks. They are a means of survival for the industry.

2.2. Data Protection Laws & AI: Privacy in Peril

If copyright defines what data can be used, privacy tells how that data must be used.

AI systems collect, process, and draw inferences from personal data, often without direct consent. This puts them at odds with data protection laws worldwide, from Europe's GDPR to California's CCPA and, for health data, HIPAA in the U.S.

Consider the GDPR’s “right to be forgotten.” Once personal data has been used to train a model, locating it or removing its influence is extremely difficult, which makes compliance almost paradoxical. Similarly, healthcare AI systems that analyze patient data must meet HIPAA’s privacy and security requirements, yet many third-party AI providers cannot fully comply with them.

The absence of broad legal guardrails around privacy threatens more than fines — it threatens the public’s trust.

If people lose faith in how their data is used, they lose faith in the technology itself. For AI to flourish, data protection laws must evolve alongside innovation, and organizations must treat compliance as a core design principle rather than an afterthought.

2.3. Legal Guardrails Struggling to Keep Pace

Governments and regulators are scrambling to catch up. The EU AI Act and emerging AI policy frameworks in the U.S., UK, and Asia increasingly classify AI systems by the risk they pose, but enforcement remains fragmented worldwide. Some sectors, such as finance and healthcare, have clear compliance mandates, while others operate with little oversight.

This lack of uniformity causes confusion:

A company compliant with one jurisdiction might be in breach of another.

A model that complies with the GDPR may still run afoul of U.S. consumer protection laws.

The result is a patchwork of overlapping, often contradictory standards that turns compliance with the legal issues surrounding AI into a moving target.

Without harmonized AI guardrails, organizations risk drifting into non-compliance even when their intentions are benign.

What the world needs is not just new rules but interoperable guardrails: systems that align legal, ethical, and technical standards across borders. Without them, AI's greatest challenge will be not development but regulation.

3. Real-World Examples of Legal Compliance Failures in AI

The theoretical dangers of AI become painfully real when companies end up in courtrooms instead of boardrooms. Every year brings new cases in which missing legal guardrails turn innovation into litigation, and they show that the greatest challenge for AI is not capability but accountability.

The cases below are among the better-known examples of what happens when companies ignore legal compliance in AI, overlook data protection laws, or underestimate the ethical dimensions of what their engineers build.

3.1. Getty Images vs Stability AI — Copyright Infringement in Training Data

When Getty Images filed a lawsuit against Stability AI in 2023, it was a turning point for the global AI industry.

Getty accused Stability AI of using more than 12 million copyrighted images, many still bearing Getty's watermark, to train its image generation model, Stable Diffusion, without permission.

The case highlighted an inconvenient truth: many generative AI models are trained on datasets compiled without clear licensing arrangements or informed consent. Developers had assumed that "publicly available" meant "legally usable." It did not.

The case put pointed questions before the courts:

⟶ Who owns the data that trains an AI model?

⟶ Can a model "learn" from copyrighted material without reproducing it?

The case became a cautionary tale underscoring the need for AI guardrails, particularly for copyright compliance, dataset transparency, and IP licensing.

For startups and tech giants alike, the message was clear: legal compliance in AI begins at the dataset level, not after deployment.

3.2. Chatbot Privacy Leaks — When Data Protection Laws Fail

In 2024, a wave of data exposure incidents hit several of the world's most widely used chatbots, including personal assistants and enterprise AI products. In several cases, users found snippets of their private information unexpectedly resurfacing in unrelated conversations.

These incidents exposed serious flaws in how companies reconcile data protection laws with AI architecture. Despite claims of encryption, sensitive data was still being fed into model training cycles, in tension with core principles of the GDPR, CCPA, and HIPAA.

In one particularly serious incident, a healthcare chatbot's faulty logging system exposed patient data that was supposed to have been anonymized. The incident triggered a multi-million-dollar settlement under HIPAA and an audit by the U.S. Department of Health & Human Services.

These lapses reveal a serious gap:

AI design processes often optimize for accuracy rather than compliance. Without integrated legal guardrails and AI policy frameworks, even well-intentioned systems run a high risk of crossing legal lines.
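A faulty logging system is one of the more mundane ways personal data leaks. As a minimal sketch, assuming a Python service that uses the standard `logging` module, a filter attached to the log handler can scrub obvious identifiers before anything is written. The regular expressions and placeholder tags (`PII_PATTERNS`, `RedactingFilter`) are illustrative assumptions; real PII detection needs far broader coverage than three patterns.

```python
import logging
import re

# Illustrative patterns only (an assumption): production PII detection must also
# cover names, addresses, record numbers, and identifiers buried in free text.
PII_PATTERNS = [
    (re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"), "[EMAIL]"),
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[SSN]"),
    (re.compile(r"\b(?:\+?1[-. ]?)?\(?\d{3}\)?[-. ]?\d{3}[-. ]?\d{4}\b"), "[PHONE]"),
]


def redact(text: str) -> str:
    """Replace every match of the known PII patterns with a placeholder tag."""
    for pattern, tag in PII_PATTERNS:
        text = pattern.sub(tag, text)
    return text


class RedactingFilter(logging.Filter):
    """Scrubs PII from each log record before a handler formats or writes it."""

    def filter(self, record: logging.LogRecord) -> bool:
        # Render the full message (msg % args) once, then redact it and clear
        # args so the handler cannot re-insert the unredacted arguments.
        record.msg = redact(record.getMessage())
        record.args = ()
        return True  # keep the record; only its contents change


if __name__ == "__main__":
    logger = logging.getLogger("chatbot")
    handler = logging.StreamHandler()
    handler.addFilter(RedactingFilter())  # handler-level, so propagated records are scrubbed too
    logger.addHandler(handler)
    logger.warning("Reply to %s at %s", "jane@example.com", "555-123-4567")
```

Attaching the filter to the handler rather than the logger matters: logger-level filters do not see records propagated from child loggers, while handler-level filters see everything the handler writes.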

3.3. Healthcare AI Under HIPAA Scrutiny

Healthcare was one of the first industries to use AI — but also one of the first to feel the legal repercussions.

Hospitals and health-tech companies were using AI models for diagnosis, billing, and patient-care recommendations. But many were not fully compliant with HIPAA, the federal law governing the privacy of medical records.

In numerous instances, AI models built on patient scans or records were later utilized for commercial products, all without patient consent or thorough de-identification.

The line became blurred between innovation and violation.

In response, regulators have called for stronger AI guardrails across the medical industry, such as:

⟶ Data minimization (collecting only what is necessary)

⟶ Purpose limitation (using data only for the purpose for which it was collected)

⟶ Algorithmic transparency (making decisions explainable)

As the healthcare industry deploys more generative and predictive models, legal compliance in AI is no longer a box to check; it is central to patient trust and institutional integrity.
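Purpose limitation and data minimization can be enforced in code as well as in policy. The sketch below assumes each dataset carries a hypothetical `DatasetPolicy` recording the purposes its subjects were told about and the minimum fields those purposes need; any request outside those bounds is refused before data is returned.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DatasetPolicy:
    """Hypothetical policy attached to a dataset at collection time."""
    name: str
    allowed_purposes: frozenset[str]  # purposes data subjects were told about
    allowed_fields: frozenset[str]    # minimum fields those purposes require


class PurposeViolation(Exception):
    """Raised when a request falls outside a dataset's approved scope."""


def fetch_for_purpose(policy: DatasetPolicy, records: list[dict], purpose: str,
                      requested_fields: set[str]) -> list[dict]:
    # Purpose limitation: refuse any use the data was not collected for.
    if purpose not in policy.allowed_purposes:
        raise PurposeViolation(f"{policy.name!r} may not be used for {purpose!r}")
    # Data minimization: refuse fields beyond the approved minimum.
    extra = requested_fields - policy.allowed_fields
    if extra:
        raise PurposeViolation(f"fields not approved for {policy.name!r}: {sorted(extra)}")
    # Return only the requested columns; everything else stays in storage.
    return [{f: row[f] for f in requested_fields if f in row} for row in records]
```

Refusing loudly, rather than silently trimming the request, leaves a visible record that someone tried to use data beyond its approved scope.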

4. Building Legal & Compliance Guardrails for AI

If the last decade was about creating intelligent machines, the next will be about making them accountable. Innovation without regulation is chaos, while regulation without understanding is paralysis. The future of responsible AI lies between the two, in a framework of legal and compliance guardrails that protects both people and progress.

Legal compliance in AI has evolved from a reactive checklist into a proactive ecosystem. The question is no longer whether AI can do something, but whether it should and, if so, within what limits.

Those limits are not only ethical but legal and measurable. They take the form of enforceable legal guardrails, grounded in building the law into the design of technology.

Implementing such guardrails requires a multi-tiered approach that spans governance, regulation, transparency, and human rights compliance.

The six pillars below outline how modern organizations can build AI that is legally compliant and ethically aligned.

4.1. Understanding AI Guardrails and Legal Guardrails Together

Think of AI guardrails as an invisible code of ethics embedded in the architecture of intelligent systems. They keep automation within ethical, social, and legal limits, shielding users from harm and organizations from liability.

But AI guardrails are incomplete without legal guardrails, which make them enforceable in the real world. If AI ethics tells you why, statutory compliance tells you how.

For example, designing a model to avoid discrimination is an ethical commitment. When that model processes protected demographic data, it must also comply with laws such as the U.S. Civil Rights Act, the GDPR's fairness principle, or the Equal Credit Opportunity Act. That is a legal obligation.

The intersection of these fields is the foundation of legal compliance in AI, which is where innovation and legality meet.

Mature organizations adopt a compliance-by-design model:

⟶ Pre-training guardrails: verification of dataset ownership and consent

⟶ In-training guardrails: bias checks, legal data filters, and traceability logs

⟶ Post-deployment guardrails: human-in-the-loop reviews, explainability reports, and ongoing audits

This lifecycle approach assures that both AI ethics and data protection laws are built-in — not bolted-on — to the AI process.
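The three lifecycle stages above can be sketched as a sequence of compliance gates. This is a minimal illustration, assuming a model is described by a simple dictionary (a stand-in for a model card) and that each check is a yes/no test; all check names are hypothetical. Every result is written to an audit trail, and a failure at one stage stops the model from advancing to the next.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable

Check = Callable[[dict], bool]


@dataclass
class ComplianceGate:
    """One lifecycle stage (e.g. pre-training) and the named checks it requires."""
    stage: str
    checks: dict[str, Check]


@dataclass
class AuditTrail:
    entries: list[dict] = field(default_factory=list)

    def record(self, stage: str, check: str, passed: bool) -> None:
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "stage": stage, "check": check, "passed": passed,
        })


def run_gates(gates: list[ComplianceGate], model_card: dict, audit: AuditTrail) -> bool:
    """Run stages in order; stop at the first stage with a failing check."""
    for gate in gates:
        results = {name: check(model_card) for name, check in gate.checks.items()}
        for name, passed in results.items():
            audit.record(gate.stage, name, passed)
        if not all(results.values()):
            return False  # later stages never run on a non-compliant model
    return True


# Hypothetical checks mirroring the three stages.
GATES = [
    ComplianceGate("pre-training", {
        "dataset_ownership_verified": lambda m: m.get("licenses_verified", False),
        "consent_recorded": lambda m: m.get("consent_records", False),
    }),
    ComplianceGate("in-training", {
        "bias_check_run": lambda m: "bias_report" in m,
        "traceability_log": lambda m: m.get("training_log") is not None,
    }),
    ComplianceGate("post-deployment", {
        "human_review_assigned": lambda m: bool(m.get("reviewer")),
        "explainability_report": lambda m: "explainability_report" in m,
    }),
]
```

In a real pipeline, the checks would query dataset registries, evaluation reports, and review tickets rather than dictionary keys; the structure (ordered stages, logged results, fail-closed) is the point.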

4.2. AI Policy Frameworks: Global Blueprints for Compliance

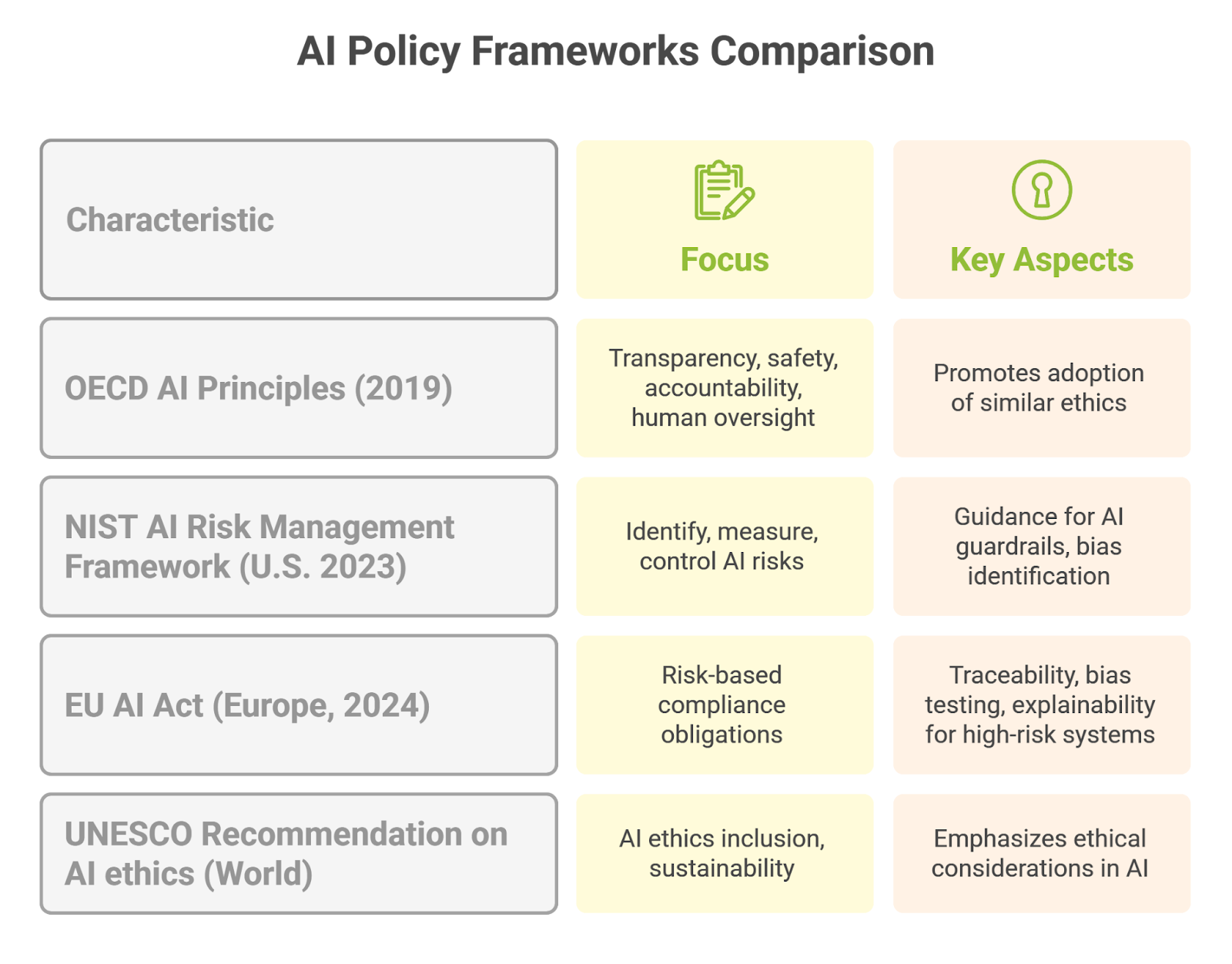

Policymakers around the world are converging on common principles of AI governance. AI policy frameworks provide the structure for responsible innovation by giving developers and regulators a shared vocabulary for risk and responsibility.

Several frameworks are shaping international legal guardrails:

OECD AI Principles (2019): These principles promote transparency, safety, accountability, and human oversight, and serve as a shared reference point for countries developing their own approaches to AI ethics and compliance.

NIST AI Risk Management Framework (U.S., 2023): A voluntary, structured approach to identifying, measuring, and managing the risks of AI systems, organized around four functions: Govern, Map, Measure, and Manage. It gives developers practical guidance for building guardrails into AI systems and for identifying bias, data misuse, and compliance gaps.

EU AI Act (Europe, 2024): The world's first comprehensive law governing AI. It sorts AI systems into risk tiers (unacceptable, high, limited, and minimal risk) and scales compliance obligations to each tier; practices posing unacceptable risk are banned outright. A credit-scoring system, for example, is classed as high risk and must meet requirements such as automatic logging for traceability, data governance that includes examining training data for bias, and transparency and human oversight over its outputs.

UNESCO Recommendation on the Ethics of Artificial Intelligence (global): The first global standard on AI ethics, adopted by all 193 UNESCO member states in 2021, with an emphasis on inclusion and sustainability.

By aligning AI development with these frameworks, companies can anticipate regulatory expectations before they become law, streamline compliance across jurisdictions, and establish themselves as responsible innovators.

Legal compliance in AI does more than save money by avoiding penalties; it secures credibility in a world that is watching closely.
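To make the EU AI Act's tiered structure concrete, here is a simplified triage sketch. The use-case labels and the mapping are illustrative assumptions, not a legal classification: the Act's actual scope depends on detailed definitions, exemptions, and annexes. The high-risk obligations listed correspond to the Act's core requirements for such systems, and unknown use cases are escalated to legal review rather than guessed.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "prohibited"
    HIGH = "high"
    LIMITED = "limited (transparency)"
    MINIMAL = "minimal"


# Simplified, illustrative mapping of hypothetical use-case labels to tiers.
USE_CASE_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "credit_scoring": RiskTier.HIGH,
    "recruitment_screening": RiskTier.HIGH,
    "customer_service_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}

OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["do not place on the EU market"],
    RiskTier.HIGH: [
        "risk management system",
        "data governance and bias examination",
        "technical documentation",
        "automatic logging for traceability",
        "human oversight",
        "accuracy and robustness testing",
    ],
    RiskTier.LIMITED: ["tell users they are interacting with an AI system"],
    RiskTier.MINIMAL: ["no mandatory obligations; voluntary codes of conduct"],
}


def triage(use_case: str) -> tuple[RiskTier, list[str]]:
    """Look up a use case; anything unmapped is escalated, never guessed."""
    tier = USE_CASE_TIERS.get(use_case)
    if tier is None:
        raise KeyError(f"{use_case!r} not classified; route to legal review")
    return tier, OBLIGATIONS[tier]
```

For example, `triage("credit_scoring")` returns the high tier together with its obligation list, which a compliance team could turn into tracked work items.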

4.3. Data Protection Laws as Foundational Guardrails

If data is the new currency of AI, data protection laws are the constitution which governs its flow. They ensure that the quest for intelligence does not take place at the expense of an individual’s privacy and autonomy.

Here are the key laws that frame data use around the world.

GDPR (Europe):

The GDPR is widely regarded as the strictest privacy standard. It requires a lawful basis for processing personal data (consent is one of six), limits collection to what is necessary, and gives data subjects rights of access and erasure.

For AI systems, this means models must respect "purpose limitation" and be able to honor erasure requests, which may require retraining once withdrawn data is removed.

CCPA (California):

The CCPA focuses on transparency and consumer choice. It requires businesses to disclose what personal data they collect and gives consumers the right to opt out of the sale of their data, making it a foundation of legal compliance in AI for U.S. organizations.

HIPAA (U.S. Healthcare):

HIPAA strictly regulates protected health information. Developers of healthcare AI must de-identify patient data where possible and store and transmit it securely.

DPDP Act (India):

India's Digital Personal Data Protection Act (2023) brings a major Asian economy into the global data protection landscape. It grounds processing in consent, gives individuals rights over their personal data, and imposes penalties on organisations that fail to comply.
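The GDPR's right to erasure is where data protection meets model engineering. A minimal sketch, assuming a hypothetical `TrainingStore` that records which data subjects contributed to which model versions: erasing a subject deletes their rows and flags every affected model for retraining, since deleting source data does not remove what a trained model has already learned.

```python
from dataclasses import dataclass, field


@dataclass
class TrainingStore:
    """Hypothetical store tracking which subjects' data fed which model versions."""
    records: dict[str, list[dict]] = field(default_factory=dict)  # subject_id -> rows
    lineage: dict[str, set[str]] = field(default_factory=dict)    # model_id -> subject_ids
    retrain_queue: set[str] = field(default_factory=set)

    def add(self, subject_id: str, row: dict, model_id: str) -> None:
        self.records.setdefault(subject_id, []).append(row)
        self.lineage.setdefault(model_id, set()).add(subject_id)

    def erase_subject(self, subject_id: str) -> set[str]:
        """Delete a data subject's rows and flag every model that learned from them."""
        self.records.pop(subject_id, None)
        affected = {m for m, subjects in self.lineage.items() if subject_id in subjects}
        for model_id in affected:
            self.lineage[model_id].discard(subject_id)
            # Deleting rows does not remove what a trained model memorised;
            # flag the model for retraining (or machine unlearning) instead.
            self.retrain_queue.add(model_id)
        return affected
```

The design choice worth noting is lineage tracking: without a record of which data fed which model, an organisation cannot even determine which models an erasure request affects.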

The implementation of legal guardrails around data involves the following:

⟶ Data Mapping: Identify every dataset with respect to source, consent and legal status.

⟶ Data Minimisation: Only use that which is necessary for the function of the model.

⟶ Anonymisation: Eliminate all identifiers of personal data from training datasets.

⟶ Security Controls: Provide encryption, logging and continuous monitoring of access.
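The first three practices above can be combined in a small preparation step. This sketch assumes a hypothetical data-map entry per dataset and uses a keyed hash (HMAC-SHA256 from Python's standard library, truncated to 16 hex characters by assumption) to replace the linking key `user_id`; direct identifiers are dropped outright. Keyed hashing is pseudonymisation, not anonymisation: under the GDPR, pseudonymised data is still personal data. Security controls such as key storage and access logging are outside this sketch.

```python
import hashlib
import hmac
from dataclasses import dataclass
from typing import Optional

DIRECT_IDENTIFIERS = {"name", "email", "phone", "address"}  # assumed identifier columns
LINK_KEY = "user_id"  # hypothetical key kept only in pseudonymised form


@dataclass(frozen=True)
class DatasetEntry:
    """Data-map entry: where a dataset came from and its legal basis."""
    name: str
    source: str
    legal_basis: Optional[str]  # e.g. "consent" or "contract"; None = unknown
    consent_documented: bool


def usable_for_training(entry: DatasetEntry) -> bool:
    # Data mapping: unknown legal status blocks use; consent must be evidenced.
    if entry.legal_basis is None:
        return False
    if entry.legal_basis == "consent":
        return entry.consent_documented
    return True


def prepare_row(row: dict, needed: set[str], key: bytes) -> dict:
    out = {}
    # Minimisation: keep only the needed fields, and never direct identifiers.
    for name in needed - DIRECT_IDENTIFIERS:
        if name not in row:
            continue
        value = row[name]
        if name == LINK_KEY:
            # Keyed hash gives a stable pseudonym that cannot be recomputed from
            # a guessed ID without the secret key.
            value = hmac.new(key, str(value).encode(), hashlib.sha256).hexdigest()[:16]
        out[name] = value
    return out
```

Because the pseudonym is stable for a given key, records for the same person can still be linked for training while the raw ID never enters the dataset.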

Organizations that build in these principles do more than comply with data protection laws; they future-proof their AI systems for an increasingly regulated world.

4.4. AI Ethics Beyond the Rulebook

Laws can only control behavior, while ethics can cultivate culture.

AI ethics transcends regulatory compliance. The relevant inquiry is not "Is this legal?" but rather, "Is it right?"

The trouble is that AI works in the moral gray areas — in situations which legislatures can only partially, if at all, anticipate. That is where internal AI guardrails guided by ethical principles are so valuable.

Key ethical principles include:

⟶ Transparency: Users have a right to know when they are interacting with AI.

⟶ Accountability: Human decision-makers must be liable for AI results.

⟶ Fairness: Models must be designed and tested to preclude systemic bias.

⟶ Explainability: High-risk AI results must be interpretable and auditable.
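Fairness testing can start with something as simple as comparing outcome rates across groups. The sketch below computes the disparate impact ratio (the lowest group's selection rate divided by the highest) and checks it against the 0.8 "four-fifths" rule of thumb from U.S. employment-selection guidelines. Passing this one heuristic does not establish that a model is fair or lawful; it is a screening signal, and the group labels in any real test are assumptions about which attributes matter.

```python
from collections import defaultdict


def selection_rates(outcomes: list[tuple[str, bool]]) -> dict[str, float]:
    """Favourable-outcome rate per group, from (group, selected) pairs."""
    totals: dict[str, int] = defaultdict(int)
    selected: dict[str, int] = defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact_ratio(outcomes: list[tuple[str, bool]]) -> float:
    """Lowest group selection rate divided by the highest group rate."""
    rates = selection_rates(outcomes)
    if not rates:
        raise ValueError("no outcomes to evaluate")
    highest = max(rates.values())
    # If no group receives any favourable outcome, there is no disparity to measure.
    return min(rates.values()) / highest if highest > 0 else 1.0


def passes_four_fifths(outcomes: list[tuple[str, bool]], threshold: float = 0.8) -> bool:
    return disparate_impact_ratio(outcomes) >= threshold
```

With group A selected 8 times out of 10 and group B 5 times out of 10, the ratio is 0.5 / 0.8 = 0.625, below the 0.8 threshold, so the model would be flagged for closer review.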

Big tech companies are beginning to formalize these principles.

⟶ IBM's AI Ethics Board is tasked with examining models to determine whether they present discrimination risks.

⟶ Google's Responsible AI Team enforces standards for model interpretability.

⟶ Microsoft's Responsible AI Standard links ethical compliance to business KPIs.

By building AI ethics into their corporate DNA, companies can turn compliance from an external pressure into an internal value system. Ethics then becomes a strategic differentiator, not a roadblock.

4.5. Sector-Specific Legal Guardrails

Legal compliance in AI is not one-size-fits-all.

Each industry carries its own risks, stakeholders, and regulations, so each needs tailored AI guardrails.

Healthcare

AI models that analyze scans or recommend treatments handle protected health information, so HIPAA's privacy and security rules govern how patient data is used and require audit controls over access, while clinicians remain in control of care decisions. Many such tools are also regulated by the FDA as medical devices, and the agency's guidance increasingly emphasizes transparency, making explainability part of obtaining permission to market them.

Finance

Financial AI is overseen by regulators such as the SEC, CFPB, and FinCEN. Models that influence credit scores, lending decisions, or fraud detection must be able to explain how they reach their results: under the Equal Credit Opportunity Act, for instance, lenders must give applicants the specific reasons for an adverse decision. Undisclosed methods can mask discrimination, fraud, and deception, all of which violate U.S. law.
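A common way to support adverse-action explanations is to derive "reason codes" from a scoring model. This is a minimal sketch, assuming a hypothetical linear scorecard whose weights, reference values, approval threshold, and reason texts are all invented for illustration: each feature's contribution is measured against a reference applicant, and the most negative contributions become the stated reasons for a decline.

```python
# Hypothetical scorecard: every weight, reference value, and threshold is invented.
WEIGHTS = {
    "payment_history": 2.0,      # share of on-time payments (0-1)
    "credit_utilization": -1.5,  # balance / limit (0-1)
    "account_age_years": 0.5,
    "recent_inquiries": -0.8,
}
REFERENCE = {  # assumed profile of a typical approved applicant
    "payment_history": 0.95,
    "credit_utilization": 0.30,
    "account_age_years": 8.0,
    "recent_inquiries": 1.0,
}
REASON_TEXT = {
    "payment_history": "History of late or missed payments",
    "credit_utilization": "Credit balances too high relative to limits",
    "account_age_years": "Length of credit history too short",
    "recent_inquiries": "Too many recent credit inquiries",
}
APPROVAL_THRESHOLD = 3.0


def score(applicant: dict) -> float:
    return sum(weight * applicant[f] for f, weight in WEIGHTS.items())


def decide(applicant: dict, max_reasons: int = 2) -> tuple[bool, list[str]]:
    """Return (approved, reasons); reasons are produced only for declines."""
    if score(applicant) >= APPROVAL_THRESHOLD:
        return True, []
    # Each feature's contribution relative to the reference applicant.
    contributions = {f: WEIGHTS[f] * (applicant[f] - REFERENCE[f]) for f in WEIGHTS}
    # Most negative contributions first: these pulled the score down the most.
    negatives = sorted((c, f) for f, c in contributions.items() if c < 0)
    return False, [REASON_TEXT[f] for _, f in negatives[:max_reasons]]
```

Linear models make this attribution straightforward; for complex models, teams typically rely on post-hoc attribution methods, whose faithfulness then becomes part of the compliance question.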

Education

AI learning platforms that use student performance data are subject to FERPA. Schools must ensure that AI analytics tools do not disclose personally identifiable information and do not reinforce bias in grading or academic assessment.

Defense and Security

Autonomous systems raise questions of accountability under international humanitarian law. Here legal guardrails merge with moral imperatives, above all the question of whether humans must remain in control of lethal decisions.

By tailoring compliance to each sector, companies can turn a patchwork that grew up over time into a coherent governance strategy. The future belongs to companies that treat legal AI compliance not as an obligation but as an opportunity to innovate through accountability.

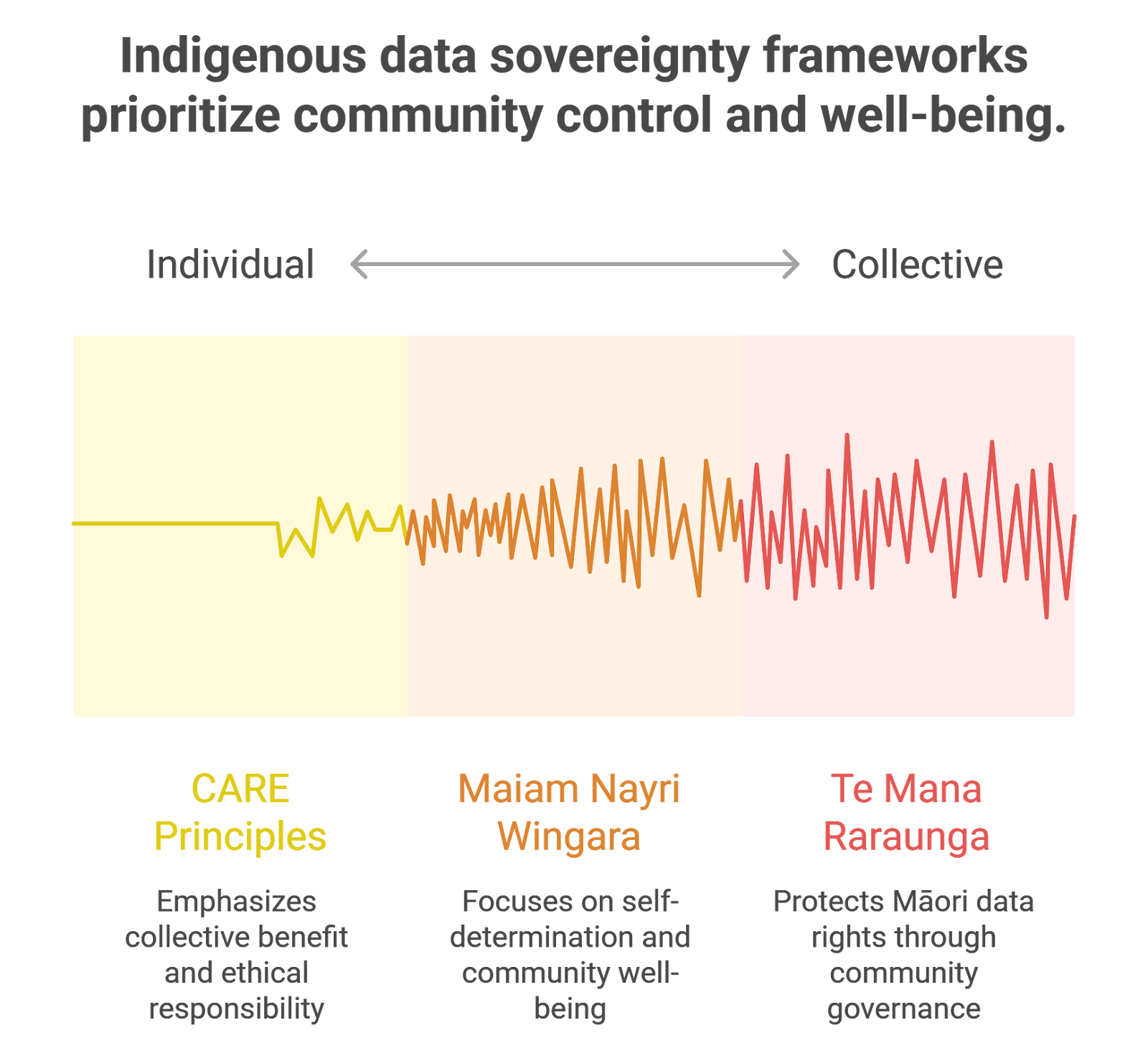

4.6. Indigenous Data Sovereignty: Respecting Cultural Ownership

A growing movement is reframing data ownership as a collective right, not only an individual one. Indigenous Data Sovereignty (IDS) recognises that Indigenous peoples have the inherent right to control the collection, use, and sharing of data about their peoples, lands, and cultures.

Too often, AI systems have mined Indigenous linguistic and cultural data without consent, carrying historical power imbalances into the digital age.

True legal compliance in AI therefore needs to reach beyond Western-centric data privacy to cultural and ethical compliance.

Here are a few important frameworks leading this change:

CARE Principles for Indigenous Data Governance (Global Indigenous Data Alliance): Collective Benefit, Authority to Control, Responsibility, and Ethics.

Maiam nayri Wingara Principles (Australia): Data must be used to enhance Indigenous self-determination and well-being.

Te Mana Raraunga (New Zealand): Protecting Māori data rights through community-governed data.

Embedding these frameworks into AI guardrails is a matter not only of inclusivity but of justice.

When AI systems respect Indigenous data rights, they practice a higher form of AI ethics, one that values dignity as well as legality.

5. Legal & Compliance Guardrails in Action

Theories, principles and frameworks are of little value without practical tests in the real world. Across industries, progressive companies are proving that AI guardrails aren’t inhibitors to innovation — they’re creators of trust, transparency, and resilience.

By building legal guardrails into their product pipelines and aligning with global AI policy frameworks, these companies are defining what operational legal compliance in AI looks like.

Let’s take a look at three powerful examples — from Silicon Valley to Brussels — of how legal and ethical compliance can be harmonized with technological ambition.

5.1. Google’s Privacy Sandbox — Embedding Legal Compliance in AI Advertising

Advertising has long been the economic bedrock of the internet, and one of its most privacy-invasive markets. When regulators around the globe introduced tougher data protection laws such as the GDPR and CCPA, Google faced a dilemma: how to keep personalized advertising working without third-party cookies, which track users across the web.

Its answer was the Privacy Sandbox, which rethinks ad targeting by building legal and ethical AI guardrails into the design. Rather than tracking individuals across sites, its Topics API uses an on-device model to classify the sites a user visits into broad interest categories ("Topics"), so advertisers receive a few coarse interests instead of a browsing history.
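The core idea, inferring coarse interests on the device and sharing only a few of them, can be sketched in a few lines. This is a simplified model loosely based on Google's public description of the Topics API, not Chrome's implementation: the site-to-topic table, the number of epochs shared, the number of top topics, and the 5% noise rate are assumptions chosen for illustration, and the real API's per-caller filtering is omitted.

```python
import random
from collections import Counter

# Hypothetical site-to-topic table; the real API uses a published taxonomy
# and an on-device classifier rather than a hand-written mapping.
SITE_TOPICS = {
    "runnersworld.example": "Fitness",
    "trailshoes.example": "Fitness",
    "recipes.example": "Cooking & Recipes",
    "news.example": "News",
}
ALL_TOPICS = sorted(set(SITE_TOPICS.values()) | {"Travel", "Books & Literature"})


def top_topics_for_epoch(visited_sites: list[str], k: int = 5) -> list[str]:
    """Runs in the browser: reduce one epoch of browsing to its k most frequent topics."""
    counts = Counter(SITE_TOPICS[s] for s in visited_sites if s in SITE_TOPICS)
    return [topic for topic, _ in counts.most_common(k)]


def topics_for_caller(epoch_top_topics: list[list[str]], rng: random.Random,
                      epochs_shared: int = 3, noise: float = 0.05) -> list[str]:
    """Share at most one coarse topic per recent epoch; raw history is never returned."""
    shared = []
    for top in epoch_top_topics[-epochs_shared:]:
        if not top:
            continue
        if rng.random() < noise:
            shared.append(rng.choice(ALL_TOPICS))  # random topic for plausible deniability
        else:
            shared.append(rng.choice(top))
    return shared
```

The privacy property comes from what crosses the boundary: a caller sees at most a few category labels, never the list of sites, and the occasional random topic means no single label can be taken as proof of a visit.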

What makes the Privacy Sandbox an excellent paradigm of legal compliance in AI is the following:

⟶ Data minimization: The browsing history used to infer topics stays on the user’s device.

⟶ Transparency: Chrome users can see and change their advertising preferences at any time.

⟶ Accountability: Google publishes technical documentation on how the system works, and its development has been overseen by regulators, notably the UK Competition and Markets Authority.

⟶ Ethical design: Its guardrails balance personalization with consent, reflecting both AI ethics and privacy law.

Beyond compliance, it reflects a new ethos: treating AI guardrails as part of product innovation.

Rather than resisting regulation, Google sought to turn it into a competitive advantage, showing that legality and profitability can go together.

5.2. OpenAI’s Copyright Mitigation and Model Transparency

Generative AI has changed how we think about creativity and reopened the debate on intellectual property. OpenAI’s large language models (LLMs) and image generators, such as ChatGPT and DALL·E, sit at the crossroads of AI ethics and legal compliance in AI, where the line between inspiration and infringement is remarkably thin.

To address growing concerns about copyright and trademark infringement, OpenAI has built a range of legal guardrails into its training and deployment strategy:

Dataset filtering: Removing copyrighted material where possible and relying on licensed or publicly available datasets.

Transparency initiatives: Publications providing documentation of model behavior, limitations and use cases.

Red teaming and legal risk audits: Third-party experts testing models for copyright misuse or privacy violations.

Creator collaboration: Partnerships with publishers and creators to create compensated data-sharing frameworks.
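Dataset filtering of this kind can be sketched as a license-and-opt-out gate applied before training. The allowlist, opt-out domains, and `Document` fields below are hypothetical, and which licenses actually permit training is a legal judgment the code cannot make. The point is structural: material is kept only when its license is known and allowlisted, and every rejection is recorded with a reason for later audit.

```python
from dataclasses import dataclass
from typing import Optional
from urllib.parse import urlparse

# Assumed allowlist: which licenses actually permit training is a legal judgment.
ALLOWED_LICENSES = {"cc0-1.0", "cc-by-4.0", "licensed-by-agreement"}
# Hypothetical register of creators who have opted out of training.
OPTED_OUT_DOMAINS = {"artist-portfolio.example"}


@dataclass(frozen=True)
class Document:
    url: str
    license_id: Optional[str]  # None when no license could be determined


def filter_corpus(docs: list[Document]) -> tuple[list[Document], list[tuple[Document, str]]]:
    """Split documents into kept and (rejected, reason) pairs for the audit record."""
    kept, rejected = [], []
    for doc in docs:
        domain = urlparse(doc.url).hostname or ""
        if domain in OPTED_OUT_DOMAINS:
            rejected.append((doc, "creator opt-out"))
        elif (doc.license_id or "").lower() not in ALLOWED_LICENSES:
            # "Publicly available" is not a license: unknown means excluded.
            rejected.append((doc, "license unknown or not allowlisted"))
        else:
            kept.append(doc)
    return kept, rejected
```

Checking the opt-out first means a creator's objection wins even when the work carries a permissive license, which is the conservative ordering.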

These steps mark a shift from reactive, defensive measures to proactive legal compliance in AI.

OpenAI’s approach suggests that observing data protection laws and AI policy frameworks is not about stifling progress but about earning legitimacy. At a time when trust is scarce, transparency may be OpenAI’s most valuable asset.

5.3. EU AI Act Compliance Pilots and Global AI Policy Frameworks

Europe is leading the way in turning AI guardrails into enforceable law. The EU AI Act, the world's first comprehensive regulation of artificial intelligence, translates abstract principles into concrete obligations. What sets it apart is that companies are already testing their compliance before its obligations take full effect: the Act entered into force in August 2024 and applies in phases over the following years.

Voluntary initiatives such as the European Commission's AI Pact invite companies to align their systems with the law's risk-based obligations ahead of time. Under this approach:

⟶ Certain deployers of high-risk AI systems (such as public bodies and companies using AI for credit scoring or insurance pricing) must carry out fundamental rights impact assessments.

⟶ Providers must document the provenance of their training data and design their systems for effective human oversight.

⟶ Providers must run post-market monitoring to keep systems compliant throughout their lifecycle.
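Post-market monitoring often begins with detecting when live inputs drift away from what a model was validated on. One widely used metric for this is the population stability index (PSI); the sketch below compares binned input distributions and escalates large shifts for human review. The AI Act does not prescribe PSI or any thresholds: 0.1 and 0.25 are common industry rules of thumb, used here as assumptions.

```python
import math


def population_stability_index(expected: list[float], actual: list[float],
                               eps: float = 1e-6) -> float:
    """PSI between two binned distributions, each given as proportions summing to 1."""
    if len(expected) != len(actual):
        raise ValueError("distributions must use the same bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        psi += (a - e) * math.log(a / e)
    return psi


def monitor(expected: list[float], actual: list[float],
            watch: float = 0.1, alert: float = 0.25) -> str:
    """Classify drift using rule-of-thumb thresholds (assumptions, not legal limits)."""
    psi = population_stability_index(expected, actual)
    if psi >= alert:
        return "alert: escalate to human review"
    if psi >= watch:
        return "watch"
    return "stable"
```

For instance, a feature whose validation split was 50/50 but arrives 70/30 in production scores a PSI of about 0.17 ("watch"), while a 90/10 split scores about 0.88 and triggers escalation.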

Outside of Europe, similar AI policy frameworks are emerging:

⟶ The White House Blueprint for an AI Bill of Rights (2022), a non-binding framework, calls for protection from algorithmic discrimination, data privacy, and notice and explanation.

⟶ Japan’s and Canada’s frameworks build on the OECD principles for trustworthy AI.

⟶ The Global Partnership on AI (GPAI) coordinates policy research and pilot projects across more than 25 member countries.

These pilot programs demonstrate that legal compliance in AI is increasingly becoming an operational exercise rather than simply a legal checklist exercise.

Regulators are building a shared legal architecture across data protection laws, AI ethics, and policy frameworks: the scaffolding for an AI future in which growth and safety can coexist across borders.

6. Conclusion — From Compliance to Conscience

The story of MindScale, the fictional startup we met at the beginning, is no longer fiction — it’s the reality unfolding around us.

In every corner of the digital world, AI systems are learning, predicting, and creating faster than we can legislate. Yet the real test of intelligence is no longer computational — it’s conscientious.

The world has reached a point where legal compliance in AI is not simply a regulatory requirement, but a measure of technological maturity.

To design responsibly is to design legally. To innovate ethically is to innovate sustainably.

And to build AI guardrails is to ensure that progress never outruns principles.

We’ve seen how missing legal guardrails can lead to lawsuits, breaches, and public backlash — but we’ve also seen how strong compliance frameworks can unlock trust, stability, and growth.

Whether it’s Google’s Privacy Sandbox redefining data ethics, OpenAI’s transparency initiatives protecting intellectual property, or the EU AI Act setting a global precedent for accountability, one truth stands clear: the future belongs to those who integrate law and ethics at the code level.

Every developer, policymaker, and business leader now stands at the same crossroads — to treat AI policy frameworks as restrictions, or as the rails that guide us safely forward.

Compliance is no longer the ceiling of innovation; it’s the floor.

Ethics is no longer a checkbox; it’s a compass.

The next wave of AI won’t be judged by how smart it is, but by how responsible it becomes.

And those who understand this — who embed data protection laws, AI ethics, and legal guardrails into every layer of their technology — will not only avoid risk; they’ll earn the one thing every algorithm needs to thrive: human trust.

Because in the end, AI guardrails aren’t about limiting what machines can do — they’re about defining what humanity stands for.

Let’s build AI that doesn’t just obey the law — it upholds justice.

True intelligence isn’t just artificial — it’s accountable.
