Operational Safety & Governance Guardrails: Building AI Systems That Stay Safe by Design

Key Takeaways

AI Guardrails and Governance Guardrails keep intelligent systems safe and aligned.

Operational safety guardrails build safety-by-design into every AI decision.

Red team evaluations reveal hidden risks before real-world failures occur.

Continuous monitoring turns AI safety into a self-learning process.

Wizsumo makes responsible AI the standard, not the exception.

1. Introduction

AI has passed from being predictive to actually making decisions (and thus impacting actual world outcomes) -- for example, medical diagnosis and financial forecasting -- but as we see AI becoming more autonomous, the challenge is no longer "what" it can do, but "how" safely it will do it.

Even in today's high-risk situations, the potential damage from a slight variation in AI behavior could be significant. Mis-classifying medical images through a model, leaking confidential data through a chat-bot, over-reacting to input from an autonomous vehicle – all demonstrate the same fundamental flaw: there is currently a lack of "guardrails".

This is where AI guardrails and AI governance guardrails enter into play.

While these two terms are often used interchangeably, they actually represent two different types of "guardrails". AI guardrails refers to the design of the operationally safe "limits" that define what a particular model can or cannot do. The other type of AI governance guardrails, focuses more on the regulatory/policy/oversight side -- defining who oversees and monitors compliance to ensure that an organization's AI system operates within established safety parameters.

The only way to sustainably move forward as AI continues to expand throughout the various sectors of our economy is through "Safety-by-Design", or designing systems that align with human intent and include considerations of risk from the outset.

What this blog covers:

⟶ How Operational safety guardrails help ensure safe AI behavior during real-time use.

⟶ How AI Governance Guardrails establish policies, oversight, and accountability across the AI lifecycle.

⟶ How Red team evaluations uncover vulnerabilities, failure modes, and misuse risks before deployment.

⟶How Continuous Monitoring Systems track models after deployment to detect drift, anomalies, and safety breaches.

⟶ How all four components work together to create a complete, end-to-end AI safety loop that keeps AI powerful, trustworthy, and secure.

2. Why AI Needs Guardrails Now More Than Ever

2.1 The Rising Complexity of AI Systems

Today's AI systems are no longer just passive models residing in remote servers — today, they are dynamic systems constantly learning, adapting, making decisions autonomously, and functioning in near-real-time.

In addition to facilitating conversations between humans and other humans through chatbots that negotiate financial transactions or health care diagnostic predictive models, AI systems are now capable of acting in place of people.

However, while an AI system's ability to act independently lends itself to increased autonomy, such autonomy also brings about additional levels of complexity — and when complexity exists, so does unpredictability.

As AI systems become increasingly complex, the predictability of their behavior under new circumstances declines significantly. In many cases, even the best-intended AI systems may drift away from their initial goals due to previously unknown or unseen biases, missing data, or un-monitored learning loops. For example, a recommendation engine may begin to recommend false information; a risk assessment model may be overly sensitive to historical trends and/or past events rather than current reality — yet, none of these behaviors will be detected by anyone until the damage is done.

Therefore, Operational safety guardrails have become more important than ever for the safety of individuals and organizations alike.

These Operational safety guardrails serve as intelligent barriers — designed to ensure that an AI system behaves within predetermined (and agreed-upon) safe operating conditions. Without the existence of these AI guardrails, AI systems could rapidly move outside of acceptable boundaries and create harmful behaviors, make biased decisions, and/or fail to follow the rules of ethics before humans can step-in and correct them.

2.2 What Are AI guardrails — And How Do They Work?

AI guardrails represent all the means by which an AI system's thinking, acting, and reacting are directed. In this sense, they are not restrictive; they are systems for directionality that keep an AI on track as it pursues its objectives and aligns with human values.

There are two ways in which AI guardrails operate:

⟶ Operational Level (System) Guardrails:

These indicate how an AI will behave — via constraint, filter and monitoring tools that identify when the AI has taken an unsafe action in real-time. Example: a model cannot generate private user information or take unverified actions.

⟶ Governance Level (Organizational) Guardrails:

These determine who is accountable for ensuring an AI operates responsibly — via policies, accountability systems and auditing processes. These guardrails provide assurance that AI safety is not solely governed by code, but also by culture.

In combination, AI guardrails, and AI governance guardrails, form a feedback loop — where each and every action of an AI is measurable, controllable, and traceable.

As companies expand their use of AI, AI guardrails are not additional options to be considered as organizations deploy AI — they are the fundamental components that support the responsible operation of AI. The presence of AI guardrails allows for rapid innovation to occur without causing loss of public trust.

3. The Core of Governance Guardrails

As AI systems grow to include many different industries, safety cannot be solely controlled by engineers anymore. With so many modern AI systems now comprising numerous models, datasets and teams; the necessary structure to oversee this complex web of processes will need to go beyond just the coding itself.

This is what AI governance guardrails are intended for.

"Governance guardrails" provide a framework for the organizations' responsible use of AI to ensure all decisions created by the AI system reflect the company's ethical guidelines, their level of acceptable risk and their regulatory requirements. Operational guardrails focus on how the AI functions, while governance guardrails focus on who oversees those behaviors.

3.1 What Makes AI Governance Work

While AI guardrails focus on controlling how a system behaves, AI governance guardrails define how organizations behave around AI.

They form the policy and accountability layer — ensuring that every AI decision, dataset, and deployment aligns with human ethics, regulatory expectations, and business integrity.

Think of governance guardrails as the control tower overseeing multiple AI engines running simultaneously. Each model may have its own Operational safety guardrails, but the governance layer ensures that collectively, they operate under shared principles of transparency, fairness, and accountability.

Strong governance guardrails typically include:

⟶ Clear ownership: Defining who is responsible for the safety of each AI system.

⟶ Traceability: Tracking how data flows through models and influences outputs.

⟶ Explainability: Ensuring that every AI decision can be justified.

⟶ Compliance integration: Mapping model behaviors to global regulations (like the EU AI Act, NIST, and ISO 42001).

These aren’t just legal checkboxes — they build trust. When governance is embedded into design, teams stop viewing safety as “extra work” and start seeing it as the foundation of sustainable AI development.

3.2 Key Governance Practices and Risk Detection

Creating an adequate level of governance is not simply about developing new regulations, rather than developing regulatory enforcement methods that are automatic.

The following section will briefly discuss two key components of a robust governance mechanism: AI Governance Best Practices and AI Risk Detection.

AI Governance Best Practices

Those organizations that are leaders in AI risk mitigation view governance through the lens of a life cycle, versus a set of regulations. Therefore:

⟶ They maintain records for all versions, updates, parameters, etc. for their models.

⟶ They implement Human-in-the-Loop (HITL) for critical applications (i.e. healthcare, finance).

⟶ They perform ethics and bias assessments prior to model release.

⟶ They establish AI Safety Boards for review/approval of model releases.

Best practices such as those listed above ensure that governance is part of the data science and engineering work flow.

AI Risk Detection

Without proper visibility, even the best policies can be rendered ineffective. That is why AI Risk Detection exists; this is the process of using continuous scanning/monitoring to identify anomalies, bias, or drifts in the behavior of AIs.

Examples of how AI Risk Detection works include:

⟶ Drift detection systems that will alert you if your model(s) are deviating from acceptable limits in terms of predictions.

⟶ Bias tracking systems that will indicate if there is evidence of discrimination in either the training data or the output decisions of your models.

⟶ Anomaly dashboards that will allow compliance teams to see the model risks and make decisions based on that information.

Together, AI governance guardrails and AI Risk Detection provide a safety nervous system for an organization — enabling each decision made related to governance to be informed by up-to-date information.

When AI governance guardrails and AI Risk Detection work together they create a dynamic feedback loop, which not only protects against potential risks but continually learns from them.

4. Operational safety guardrails — Making AI Safe by Design

AI doesn't fail simply due to poor quality data or flawed coding; it fails when the emphasis placed on performance does not correlate with safety considerations.

Therefore Operational safety guardrails were created. Guardrails provide the framework that allows AI systems to perform reliably ethically and securely in complex environments.

Guardrails do not impede innovation; rather they direct it. Guardrails establish the operational parameters of safe operation of AI systems by precluding them from making decisions based upon insufficient data, violating ethics, and/or overstepping decision-making bounds. This is straightforward however it has immense implications. To put safety first means designing safety into a system's architecture at its inception - not as a post development consideration.

This is the premise of Safety-by-Design.

4.1 Safety-by-Design Implementation and Safety Thresholds

By design, Safety-by-Design incorporates the reduction of risks into each step of the AI development process (from gathering information/data, through to deploying) and instead of reacting to problems after they occur; development teams define exactly what is considered safe operationally and do so using measurable definitions.

To illustrate, in an example where a medical AI model has defined the operational safety limits of providing diagnostic recommendations to only include clinical data validated by a healthcare professional, in the context of financial algorithms and trading, the operational safety limit would be defined as the maximum allowable trade volatility, and in the case of generative AI models, the operational safety limit could prohibit the creation of unsafe or confidential outputs.

The thresholds create operational "barriers" to the behaviors of AI systems, which are designed to be predictive and controllable as opposed to static rules. These barriers are also designed to adaptively evolve based on the new information provided to the model for learning purposes.

When "governance guardrails" are applied in conjunction with the previously described operational boundaries, safety transitions from being reactive to proactive and the system can anticipate when a problem will occur in addition to recognizing a problem exists.

4.2 Continuous Monitoring and Feedback Loops

Although even the best designed guardrails will fail when they are not watched, because AI is a changing entity (the environment, the data distribution and the decisions made by AI) - this is where Continuous Monitoring Systems play a large role today in managing modern AI.

Continuous Monitoring Systems function as an always present monitor; tracking how the model operates in the "real world" and detecting anomalies and alerting when any safety threshold is violated. This creates a continuous real-time loop of feedback between the operational and governing aspects of the AI so that all actions, decisions and outcomes create feedback to improve the AI.

An effective monitoring loop allows for the detection of problems such as bias drift, overfitting of the model and misuse at an early stage. If the monitoring system detects an anomaly in behavior it may immediately alert a safety team and/or prompt retraining of the model. In a continuous loop of detection and improvement, AI transforms from a static product to a self regulating ecosystem.

In addition to being used to maintain compliance of AI models with AI Governance Best Practices, when combined with the use of monitoring, a system will help the model mature and operate responsibly. In other words, the system learns not only from the data but also from the decisions, errors and corrections it has made.

5. Red team evaluation — The Ultimate Stress Test for AI

Building safe AI isn’t just about setting guardrails — it’s about testing them relentlessly.

Even the most well-designed systems can behave unpredictably in complex, real-world scenarios. That’s why organizations conduct Red team evaluations — deliberate, controlled attempts to push AI systems to their limits and uncover hidden vulnerabilities before the world does.

In essence, Red Teaming is the art of breaking your own system safely. It’s an exercise in humility — assuming that your AI, no matter how refined, can and will fail somewhere. The goal isn’t to shame the system or its builders, but to learn how it behaves under stress, manipulation, or deception.

5.1 What Is Red Teaming in AI?

The term Red team evaluation originated in the world of cyber-security. Cyber-security experts who wish to identify vulnerabilities in computer networks employ "ethical hacking." Ethical hackers act as adversaries with the goal of identifying vulnerabilities within a network, to help the owner identify areas for improvement in their defenses.

In the realm of AI a similar type of "evaluation" occurs. This process of "red teaming" allows researchers to probe systems for unsafe behaviors, biases, or exploitation that may go undetected through other forms of testing.

Examples of how a Red Team may evaluate AI include testing whether a chat-bot can defend against bad prompts, testing an image classifier's behavior when exposed to manipulated inputs, or evaluating the performance of a generative model on sensitive subjects. The goal of each of these experiments is to discover the "failure point", that specific condition under which a safety protocol will fail or be compromised.

Unlike traditional Quality Assurance methods, Red team evaluations are intended to be adversarial. A Red Team does this by creating "edge cases," deception within data sets, or malicious inquiries to force the AI to operate outside of its expected operating parameters. The results of the Red team evaluation(s), therefore, are used to develop new Operational safety guardrails, as well as enhance AI governance guardrails, so as to make the entire system more robust.

Many of the leading AI labs — including OpenAI, Anthropic, and Google DeepMind — now view Red Teaming as a required step prior to releasing an AI product to the public. It has evolved from being a niche security methodology, to being a foundational element of developing AI responsibly.

5.2 How Red Teaming Strengthens AI Safety

Each time an organization uses Red Team testing to evaluate its AI system, it builds a level of awareness about its AI.

The earlier an organization identifies unusual or dangerous behavior in its AI, the sooner it can modify its safety mechanisms — possibly avoiding potential misuse, possible negative impacts on reputation, or even possible violations of regulations prior to deployment of the AI.

However, the primary advantage of Red Team testing is not only to identify where an AI may be flawed, but to close the feedback loop created by testing the AI.

Feedback loops are established when the results of Red Teams are fed into the AI's training model, as well as into various dashboards and governance policies used to monitor the AI. Feedback loops lead to a cycle of ongoing hardening of the AI. In addition to improving the performance of the AI, the AI will also learn how to fail safely.

In organizations with fewer resources available to dedicate to a large security department, the use of lightweight Red Team testing methods (such as stress-testing, testing with prompts injected into the AI, and/or external audits) can still have a significant impact on improving the overall safety of the AI. Ultimately, what matters most is the intent of the organization using the Red Team testing methods: the intent to test its systems before reality tests them.

In summary, Red team evaluation changes AI safety from being a checklist to a discipline of forethought, which allows responsible teams to know that their guardrails — the mechanisms put in place to prevent failures — are not mere theoretical ideals, but tested and proven mechanisms — prepared for the uncertainty of the real world.

6. From Incidents to Improvement — The Safety Feedback Cycle

No matter how advanced an AI system is, incidents are inevitable.

Even with strong Operational safety guardrails, rigorous Red team evaluations, and well-defined AI governance guardrails, unexpected behaviors can still emerge once the system interacts with the unpredictability of the real world.

The true measure of AI safety isn’t whether incidents happen — it’s how organizations respond when they do.

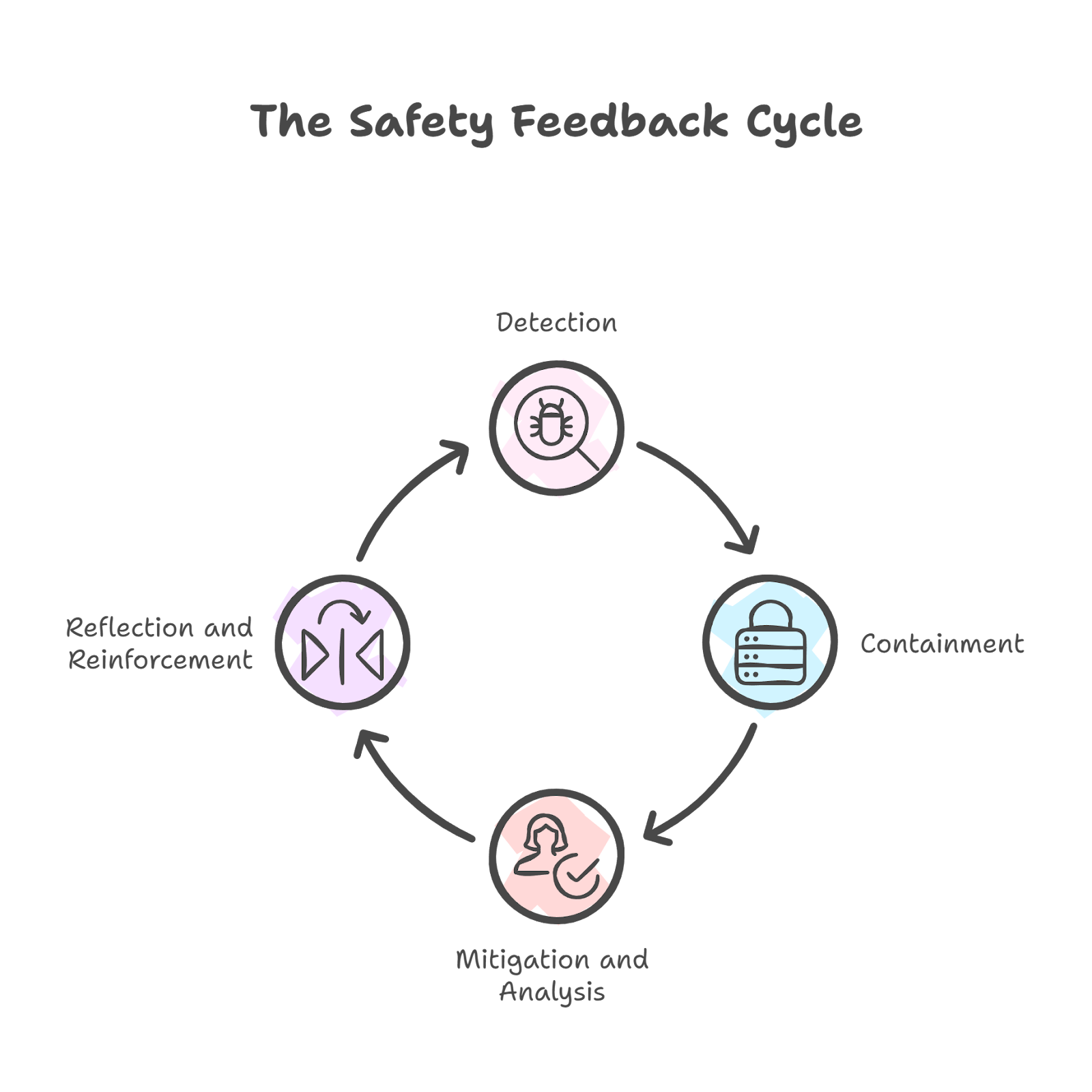

A single model failure, data leak, or safety breach can become an invaluable learning moment if it feeds into what we call the Safety Feedback Cycle — a continuous loop of detection, containment, reflection, and refinement.

The Four Phases of the Safety Feedback Cycle

1. Detection:

The moment an anomaly occurs — whether it’s a model drift, a biased output, or a compliance breach — the system’s monitoring layer should identify it automatically.

Continuous monitoring tools, incident dashboards, and anomaly alerts make early detection possible before harm spreads.

2. Containment:

Once a safety event is detected, the priority shifts to isolating it.

Containment means temporarily disabling the affected model or restricting its function until engineers can assess the root cause.

This prevents cascading failures and protects users while maintaining system integrity.

3. Mitigation and Analysis:

After containment, the root cause must be investigated.

Teams analyze whether the incident stemmed from data bias, code errors, unsafe thresholds, or governance blind spots.

The insights derived here feed into better safety configurations, improved AI Governance Best Practices, and more informed Red team evaluation protocols.

4. Reflection and Reinforcement:

The final phase involves integrating lessons learned back into both operational and governance guardrails.

This could mean updating training data policies, tightening access controls, or adjusting model limits.

Over time, this reflective process builds organizational memory — transforming isolated incidents into systemic resilience.

Why Transparency Matters

The way an organization responds to incidents in AI governance may actually matter more than the incident itself; the act of transparently reporting an incident (internally or externally) creates credibility for users, regulatory bodies, as well as partners.

When organizations openly share what went wrong and how they fixed it, they demonstrate that safety is not just a principle, but a practiced value. This approach turns AI safety from a defensive strategy into a trust-building framework.

The Continuous Learning Loop

The Safety Feedback Cycle isn’t a one-time response — it’s an ongoing rhythm.

Each cycle strengthens the alignment between AI guardrails, Operational safety guardrails, and AI governance guardrails, forming a living system that improves with every challenge.

Over time, this cycle evolves into a continuous improvement engine, where every incident makes the AI more transparent, predictable, and aligned with its intended purpose.

In other words, true safety doesn’t come from avoiding failure — it comes from learning faster than failure can happen.

7. Conclusion

Artificial intelligence has reached a point where power without boundaries is no longer progress — it's a risk.

As AI systems gain autonomy, their decisions ripple across economies, institutions, and individual lives. The only way to scale responsibly is through guardrails — invisible frameworks that protect against unintended consequences while allowing innovation to thrive.

AI guardrails and AI governance guardrails together form the backbone of this responsible growth. One governs how AI behaves, the other ensures how organizations oversee that behavior. Between them, Operational safety guardrails, Red team evaluations, and Continuous Monitoring Systems create the connective tissue — translating principles into practical protection.

This integration marks a shift in mindset:

AI safety is not a compliance checklist. It’s a continuous discipline — a cycle of designing, testing, monitoring, and improving. Every decision, failure, and feedback loop makes the system smarter, safer, and more aligned with human intent.

In a world racing toward intelligent autonomy, the future belongs to those who can balance freedom with foresight — systems that can evolve boldly, but never recklessly.

That balance isn’t achieved by chance; it’s engineered through guardrails.

So as you build, deploy, and scale your AI — remember:

True innovation doesn’t come from removing limits. It comes from knowing where they belong.

.svg)

.png)

.svg)

.png)

.png)