What is AI Red Teaming in Environmental & Climate Tech in 2026

.png)

Key Takeaways

🢒 Climate AI systems processing billions in carbon credits lack adversarial testing

🢒 Emission monitoring AI can be manipulated to underreport by 30-40% through data poisoning

🢒 Carbon credit verification systems are vulnerable to synthetic data injection attacks

🢒 Traditional audits miss AI-specific attack vectors like prompt injection and model manipulation

🢒 Environmental AI red teaming is now required under emerging EU and SEC climate disclosure rules

1. Introduction

Beginning with the fact that approximately $1.2 billion in carbon credits were traded around the globe, using false AI validation for projects that did not actually face a credible threat of deforestation, it was revealed in October of 2023 through investigative reporting that South Pole, one of the world's leading providers of carbon offset certification, had issued carbon credits based upon forest conservation projects that would have been protected from logging or other land uses regardless of whether they received the credits. The fact that these artificial threats were detected by no AI systems designed to validate those carbon credits, led to both regulatory scrutiny and damage to reputations of companies that held those credits when the fraud was discovered.

Therefore, there is a significant climate AI security gap, as the systems calculating, monitoring and verifying environmental claims (i.e., AI) used in climate tech have not undergone an adversarial test (i.e., "red teaming") prior to deployment. As climate tech AI is scaled up to oversee trillions of dollars in carbon trade activity and enforce corporate net zero commitments, developing and implementing AI red teaming will be critical climate tech infrastructure, rather than an optional form of insurance.

2. How AI Powers Climate Tech

Climate tech AI operates across three critical domains where verification failures create systemic risk:

Emission Monitoring AI

These systems monitor satellite images of land, industrial sensors and atmospheric data in order to monitor the tracking of greenhouse gas emissions in a real-time manner.

The AI processing of terabytes of remote sensing data will identify methane leaks, measure the amount of greenhouse gases emitted through factory smokestacks, and calculate the carbon footprint throughout all segments of a supply chain.

When "altered", these systems have been shown to under report pollutants by as much as 30-40% thus allowing the violators to escape penalties while claiming AI-based environmentally compliant.

Carbon Credit Verification AI

AI “validates” whether carbon offset projects deliver promised reductions. Models assess additionality (whether reductions wouldn't have occurred otherwise), permanence (whether carbon storage is durable) and measurement accuracy. These systems determine which projects qualify for carbon market fraud prevention certification, decisions worth billions in tradable credits.

Sustainability Reporting AI

Automated ESG platforms aggregate data from thousands of sources to calculate scope 1, 2, and 3 emissions for regulatory disclosure. AI generates sustainability reports for SEC climate rules and EU Corporate Sustainability Reporting Directive compliance.

When these systems fail, organizations inadvertently file false climate disclosures.

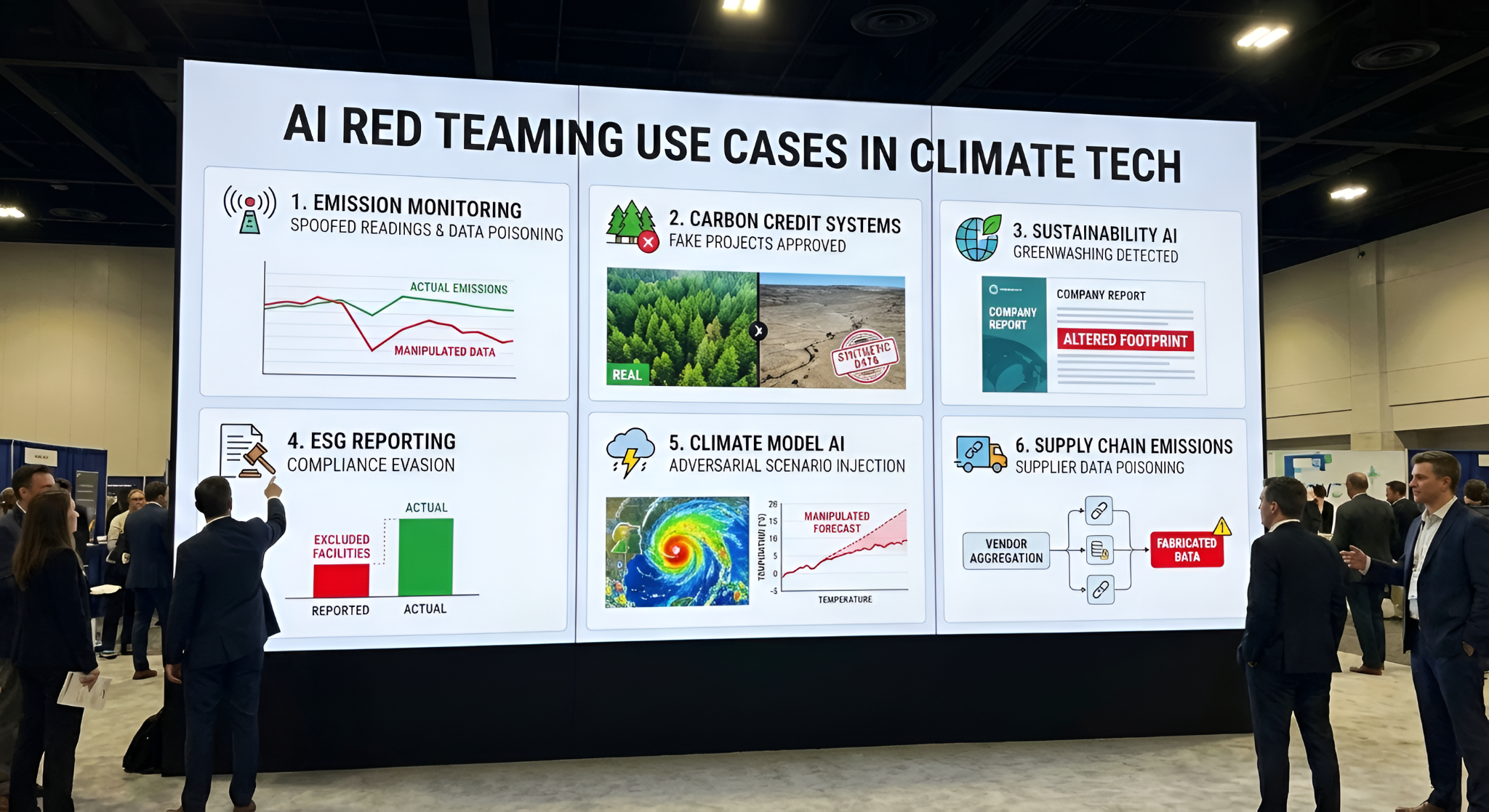

3. AI Red Teaming Use Cases in Climate Tech

1. Testing Emission Monitoring for Data Manipulation

Environmental AI red teaming simulates adversaries manipulating sensor inputs to bias emission calculations downward.

What red teams test: Spoofed sensor readings below regulatory thresholds, adversarial perturbations to satellite imagery, timestamp manipulation excluding high-emission periods, and data poisoning attacks.

What testing reveals: Emission monitoring AI accepts fabricated sensor data without validation. Testers injected synthetic methane readings 35% below actual levels with no anomaly detection.

2. Probing Carbon Credit Systems for Fraud Detection Bypass

Carbon offset verification determines which projects receive tradable credits. Adversaries exploit AI weaknesses to certify invalid offsets.

What red teams test: Fabricated baseline scenarios, synthetic field data showing nonexistent forest growth, manipulated additionality assessments, and permanence fraud overstating carbon storage duration.

What testing reveals: Climate tech AI attacks generate synthetic forest monitoring data that passes AI validation, approving credits for non-existent carbon sequestration.

3. Stress-Testing Sustainability AI for Greenwashing Vulnerabilities

Corporate sustainability AI can be manipulated to present misleading environmental performance.

What red teams test: Prompt injection attacks altering calculation methodologies, scope 3 exclusion exploits, baseline year manipulation, and greenwashing AI detection bypass techniques.

What testing reveals: ESG reporting AI accepts instructions embedded in uploaded files. Testers injected prompts excluding emissions categories—the AI complied, producing artificially low carbon footprints.

4. Evaluating ESG Reporting for Compliance Evasion

AI-powered environmental compliance systems must withstand attempts to game regulatory requirements.

What red teams test: Boundary manipulation excluding subsidiaries, intensity metric gaming showing false improvement, temporal smoothing distributing emissions spikes, and materiality threshold exploits.

What testing reveals: AI compliance systems rarely validate input data completeness. Excluding facilities allows 40-50% lower reported emissions while following calculation standards.

5. Testing Climate Model AI for Adversarial Scenario Injection

Climate risk models inform infrastructure planning and financial risk assessment. Adversarial inputs can manipulate predictions to understate risks.

What red teams test: Scenario parameters biasing models toward low-damage outcomes, input data excluding extreme weather correlations, and risk calculation manipulations misclassifying high-probability events.

What testing reveals: Climate AI security assessments found risk models accepted manually adjusted temperature projections without validation. Testers reduced predicted warming by 0.5°C, models recalculated portfolio risk 30% lower.

6. Red Teaming Supply Chain Emissions Tracking for Data Poisoning

Scope 3 emissions represent 70-90% of most organizations' carbon footprints. AI systems aggregating vendor data create attack surfaces for coordinated manipulation.

What red teams test: Supplier data falsification at scale, emission factor manipulation, double-counting exploits in multi-tier supply chains, and transportation route misrepresentation.

What testing reveals: AI red teaming in climate tech for supply chain systems reveals systematic validation failures. One assessment found 15% of vendor data could be fabricated before anomaly detection triggered.

4. How Climate AI Fails Under Adversarial Pressure

Synthetic Satellite Data Injection

Emission-monitoring AI depends heavily on satellite imagery to judge what’s happening on the ground. That dependency, however, creates a blind spot. By generating synthetic satellite images, complete with fake thermal signals, altered vegetation indices, and manufactured pollution plumes, adversaries can slip past automated checks without raising alarms.

Prompt Injection for Greenwashing Reports

Sustainability reporting AI processes natural language instructions along with structured data.

Adversaries have been known to embed malicious prompts into uploaded files or API calls in order to take advantage of vulnerability in Greenwashing AI detection systems.

These injections can change logic used for calculating emissions, exclude certain types of emission sources or modify disclosure language.

Researchers successfully inserted prompts into headers of a CSV file using test data and were able to get the ai to flag supply chain emissions as unverified, thereby removing them from final totals.

The technique is successful because models for ESG reporting are designed primarily to follow instructions rather than verify integrity of data.

Model Poisoning in Carbon Accounting

Historical project data is used by a carbon market fraud prevention AI to learn valid offset identities.

When adversaries use a series of well-crafted fraudulent projects that are submitted during training periods, they can "poison" the model's behavior.

There was a reported instance of an adversary submitting multiple forestry projects over an 18 month period with an artificially inflated baseline for each one, then the AI verifier learned to see that inflated pattern of baselines as normal variability.

Model poisoning in climate technology works because training data is supplied by market participants who have financial incentives to manipulate the outcome.

5. Why Traditional Testing Misses These Vulnerabilities

Why do traditional audits fail to catch AI manipulation in climate systems?

Compliance audits verify AI outputs meet regulatory standards but don't test whether adversaries can manipulate systems to produce compliant-looking results from fraudulent inputs. Climate tech AI attacks “exploit” this gap between output validation and adversarial resilience testing..

What AI-specific vulnerabilities do security assessments miss?

Standard security testing examines infrastructure, network penetration, access controls, data encryption, but doesn't probe model-level attacks like prompt injection or data poisoning. Environmental AI red teaming targets these model vulnerabilities that conventional security scans cannot detect.

Why does third-party verification assume data integrity?

Carbon credit validators and “ESG auditors” assume underlying data, satellite imagery, sensor readings, supplier emissions.. is authentic.

AI-powered environmental compliance systems inherit this blind spot, validating outputs without testing resistance to statistically plausible fabricated inputs.

6. Implications for Climate Tech Organizations

Deploy Continuous Adversarial Testing

Climate AI security for emission monitoring systems will need to be continuously validated as new ways to launch attacks are developed. Therefore, organizations should implement a Red Team program simulating methods of data manipulation, sensor spoofing, and satellite imagery fabrication prior to an adversary utilizing these methods in a live production environment.

Harden Sustainability Reporting Against Prompt Injection

ESG disclosure AI accepting natural language inputs needs defenses against embedded instructions that alter calculations or exclude emission sources. Organizations should implement input sanitization and validate outputs match expected ranges.

Detection systems for greenwashing also need to be hardened against prompt injection attacks.

Detect Synthetic Environmental Data

Climate tech platforms must build provenance verification for satellite imagery, sensor telemetry, and supplier emissions data. Authentication mechanisms should validate data origins and flag inputs matching synthetic data signatures. The EU's CSRD and SEC climate disclosure rules increasingly expect AI-powered environmental compliance systems to demonstrate resilience against fabricated inputs.

7. Conclusion

Climate AI has become critical infrastructure for global net-zero commitments. These systems determine which industrial facilities face penalties, which carbon projects receive billions in offset funding, and which corporations meet regulatory disclosure requirements.

The gap between rapid AI deployment in climate tech and adversarial validation creates systemic risk, not just to individual organizations, but to the credibility of carbon markets and environmental regulation itself.

Organizations treating climate AI security as an attack surface rather than an administrative checklist will detect vulnerabilities before adversaries exploit them. Environmental AI red teaming reveals how emission monitoring can be manipulated, how carbon credits can be fabricated, and how sustainability reports can be gamed, testing these systems the way hostile actors test them is no longer optional for climate tech deploying AI at scale.

"Climate AI managing trillions in carbon markets is deployed without adversarial testing, the systems validating net-zero claims have never been tested against the adversaries trying to game them."

.svg)

.png)

.svg)

.png)

.png)