What is AI Red Teaming in Public Safety & Emergency Services in 2026


Key Takeaways

🢒 AI used in emergency services operates under pressure, not clean lab conditions.

🢒 Predictive policing systems can reinforce bias through feedback loops that look statistically valid.

🢒 Dispatch AI failures often surface as delays, not obvious errors.

🢒 Traditional testing misses long-term drift and real-world misuse patterns.

1. The Stakes: When Public Safety AI Fails

In emergency services, AI doesn’t optimize clicks or recommendations. It decides who gets help first. And who waits.

Cities now rely on algorithms to triage 911 calls, route ambulances, and flag neighborhoods for police presence. When these systems fail, the impact isn’t theoretical. A delayed dispatch can turn survivable emergencies into fatalities. A skewed risk score can quietly over-police the same communities, year after year, without a single malicious actor touching the system.

This is why AI security for emergency services is fundamentally different from securing enterprise or consumer AI. The margin for error is razor-thin. Decisions are made under stress, with incomplete information, amid real-world chaos: exactly the conditions most models are never tested against.

What’s more dangerous is how invisible these failures can be. No outage. No alarm. Just slightly worse decisions, made thousands of times a day, until harm becomes normalized.

2. How AI Is Used Across Emergency Services and Policing

Public safety AI doesn’t announce itself. There’s no dashboard blinking red when it makes a bad call. It’s embedded in dispatch software, routing logic, and recommendation engines that act first and explain later, if they explain at all.

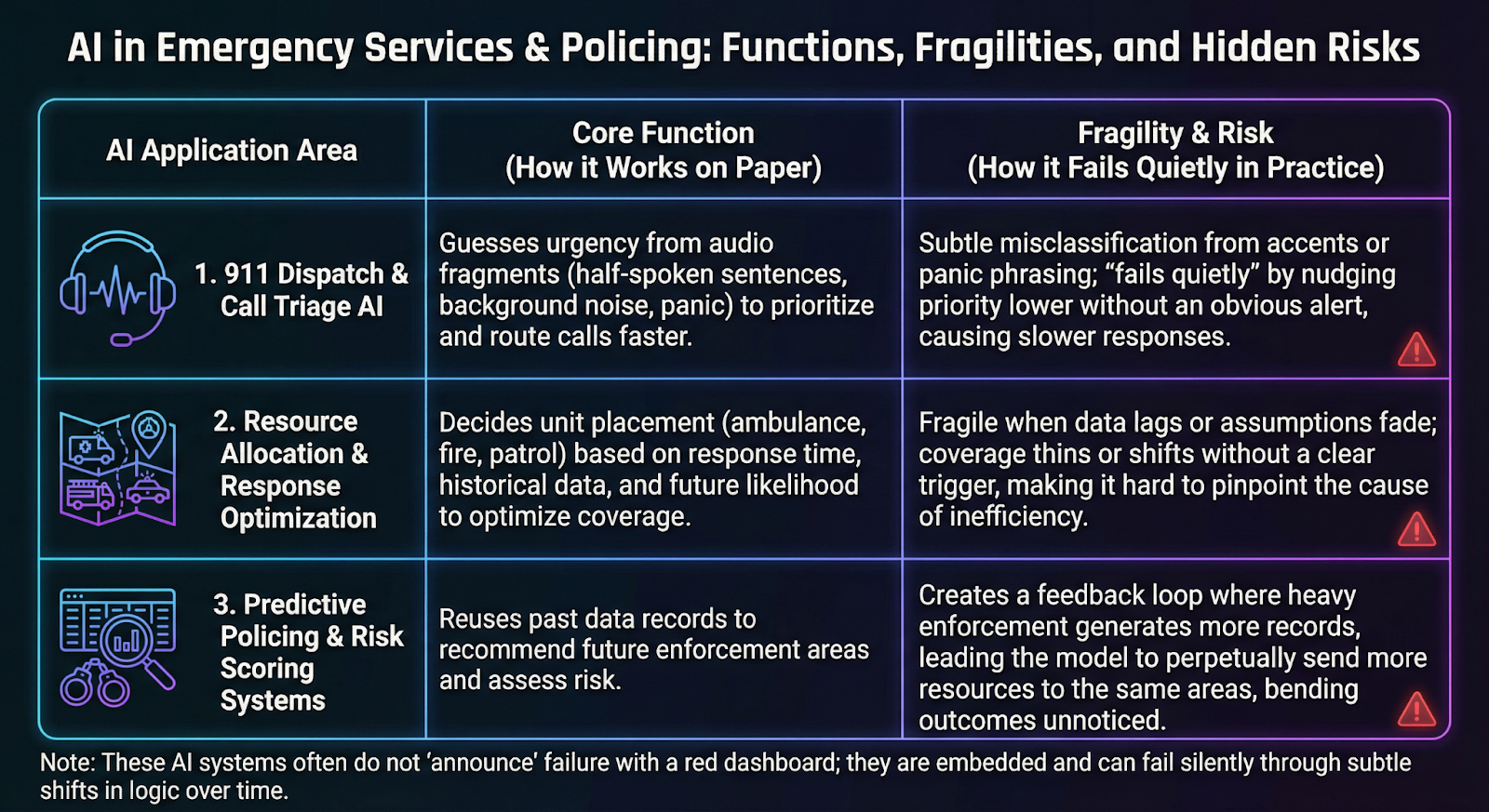

2.1 911 Dispatch and Call Triage AI

In many call centers, the first “decision” isn’t made by a dispatcher anymore. It’s made by a model trying to guess urgency from fragments: half-spoken sentences, screaming in the background, a caller who can’t explain what’s happening fast enough. These systems don’t fail loudly. They fail quietly by nudging a call one priority level lower, or routing it a few seconds slower, where the difference only shows up after something goes wrong.

That’s what makes dispatch AI so hard to secure. You don’t need to break it. You just need to confuse it.

The risk is not just outages; it’s subtle misclassification. Weak 911 dispatch AI security allows edge-case phrasing, accents, or panic-driven speech patterns to push calls down the wrong priority path without triggering any obvious failure signal.

2.2 Resource Allocation and Response Optimization

Emergency services rely on AI to decide where to place ambulances, fire units, and patrol cars before incidents occur.

These models are built to answer a simple question: where should we send people next?

They juggle response time, historical coverage, and the likelihood that something will happen again in the same place. On paper, it looks efficient.

In practice, it’s fragile.

When data starts to lag, or when assumptions quietly stop matching reality, the system doesn’t raise its hand. It keeps recommending. Patrols shift. Coverage thins in some areas and intensifies in others, usually without anyone being able to point to a single decision that caused it.

2.3 Predictive Policing and Risk Scoring Systems

Predictive policing tools take this a step further. They don’t just react to the past, they reuse it. Areas that were heavily enforced generate more records. Those records feed the model. The model sends more resources back to the same places.

Nothing looks broken. But the outcome keeps bending in the same direction.
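To see why nothing looks broken, consider a deliberately simplified sketch. It assumes two zones with the same true incident rate, one patrol per day sent to whichever zone has the most recorded incidents, and incidents recorded only where the patrol is. The function name and numbers are hypothetical, not taken from any real predictive policing product.

```python
def simulate_greedy_patrols(initial_counts, true_rate, days):
    """Hypothetical model: each day the single patrol goes to the zone with the
    most recorded incidents, and only that zone's incidents get recorded."""
    counts = list(initial_counts)
    history = []
    for _ in range(days):
        # Greedy choice; max() returns the first index on ties.
        target = max(range(len(counts)), key=lambda i: counts[i])
        # Both zones have the same true rate, but only the patrolled zone
        # produces new records. The other zone's incidents go unobserved.
        counts[target] += true_rate
        history.append(target)
    return counts, history


counts, history = simulate_greedy_patrols([11, 10], true_rate=3, days=30)
print(counts)        # [101, 10]
print(set(history))  # {0}
```

A one-incident head start is enough: zone 0 receives every patrol and every new record, while zone 1's identical incidents never enter the data.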

3. AI Red Teaming Use Cases in Public Safety

This is where theory stops being useful. Public safety AI doesn’t fail in clean lab conditions. It fails when people are panicked, systems are overloaded, and assumptions break in ways no checklist ever covered. That’s why AI red teaming in public safety focuses on pressure, not perfection.

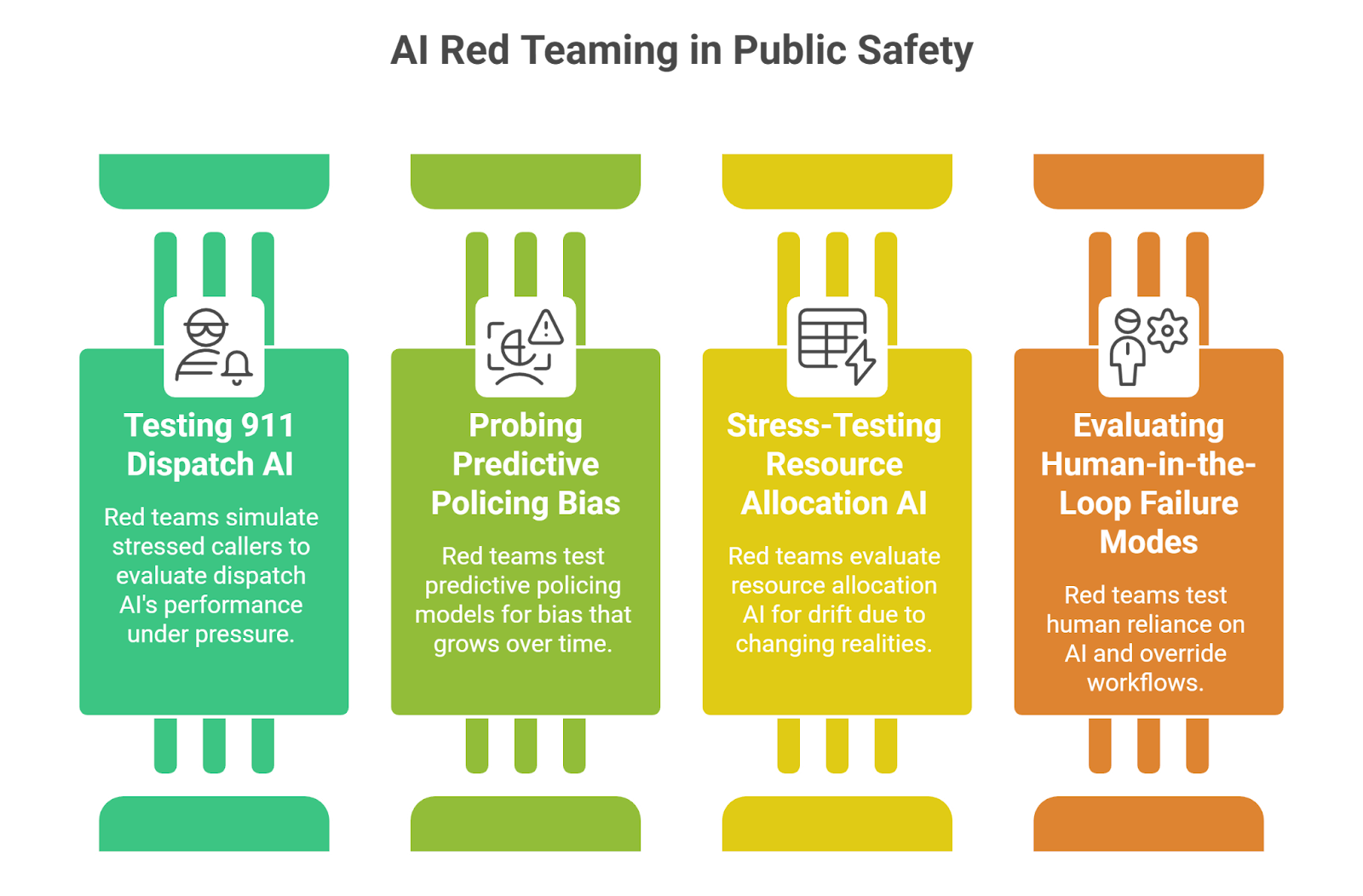

3.1 Testing 911 Dispatch AI Under Pressure

Red teams don’t approach dispatch models like attackers. They approach them like confused, stressed people, because that’s what the system actually sees.

A caller starts explaining an emergency, stops halfway, changes details, raises their voice. Someone else is crying in the background. The address comes late, or wrong, or not at all. None of this is exotic. It’s normal. And it’s where models struggle.

That’s the real risk. You don’t have to compromise dispatch AI. You only have to sound human in the wrong way.
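A red-team harness for this can be small. The sketch below assumes a hypothetical keyword-based `toy_triage` function standing in for a real model (production dispatch AI is typically a trained classifier, so the specific failures here are illustrative only). It applies human-sounding perturbations to a transcript and reports any that silently lower the priority.

```python
# Hypothetical stand-in for a triage model: exact-phrase keyword rules.
HIGH_PRIORITY_PHRASES = ("not breathing", "unconscious", "bleeding heavily")


def toy_triage(transcript: str) -> int:
    """Return priority 1 (most urgent) or 3 (routine)."""
    text = transcript.lower()
    return 1 if any(phrase in text for phrase in HIGH_PRIORITY_PHRASES) else 3


def add_fillers(text: str) -> str:
    # Panicked speech: a filler word between every pair of words.
    return " uh ".join(text.split())


def truncate(text: str) -> str:
    # Caller is cut off halfway through.
    words = text.split()
    return " ".join(words[: len(words) // 2])


def restart(text: str) -> str:
    # Caller starts, stops, and begins again.
    first_words = " ".join(text.split()[:3])
    return f"{first_words}... wait... {text}"


PERTURBATIONS = {"fillers": add_fillers, "truncated": truncate, "restart": restart}


def red_team(classifier, transcript: str):
    """Report perturbations that make a call look less urgent."""
    baseline = classifier(transcript)
    findings = {}
    for name, perturb in PERTURBATIONS.items():
        variant = perturb(transcript)
        priority = classifier(variant)
        if priority > baseline:  # larger number = less urgent
            findings[name] = (variant, priority)
    return baseline, findings


baseline, findings = red_team(toy_triage, "My husband collapsed and he is not breathing")
print(baseline)          # 1
print(sorted(findings))  # ['fillers', 'truncated']
```

Only the restart variant keeps its priority. The filler and truncation variants drop to routine without raising any error, which is exactly the quiet failure described above. Against a real system, a red team would likely draw its perturbations from actual call patterns rather than toy rules like these.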

3.2 Probing Predictive Policing Models for Bias That Grows Over Time

Predictive policing systems don’t just observe the world. They reshape it, then learn from the result.

Red teams test this by nudging the past, not dramatically, just enough to matter. A few synthetic crime reports added to one zone. Historical arrest data replayed with context stripped away. An enforcement-heavy neighborhood temporarily removed, then reintroduced. Each change looks harmless on its own.

But the model doesn’t see intent. It sees confirmation.

Over multiple cycles, those small inputs harden into “patterns.” Patrols go where patrols have always gone. New data reflects old decisions.

By the time anyone asks why outcomes look skewed, the model has already learned the answer it was trained to give.
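The same dynamic shows up even with a gentler, proportional allocation rule. In this sketch (again hypothetical: the function, rates, and patrol budget are illustrative assumptions), patrol effort is split in proportion to recorded incidents, and new records scale with both the true rate and the patrol effort. A red team compares a baseline history against one with ten synthetic reports added to zone 1.

```python
def simulate_proportional(initial_counts, true_rates, cycles, patrol_budget=100.0):
    """Hypothetical model: patrol effort follows recorded incidents, and new
    records scale with true rate x patrol effort. Returns final shares."""
    counts = [float(c) for c in initial_counts]
    for _ in range(cycles):
        total = sum(counts)
        shares = [c / total for c in counts]
        for i, share in enumerate(shares):
            # Records only appear in proportion to where patrols were sent.
            counts[i] += true_rates[i] * share * patrol_budget
    total = sum(counts)
    return [c / total for c in counts]


baseline = simulate_proportional([100, 100], true_rates=[5, 5], cycles=50)
nudged = simulate_proportional([100, 110], true_rates=[5, 5], cycles=50)
print([round(s, 3) for s in baseline])  # [0.5, 0.5]
print([round(s, 3) for s in nudged])    # [0.476, 0.524]
```

Because both zones have the same true rate, every cycle multiplies both counts by the same factor, so the injected skew never washes out. The model keeps "confirming" a difference that exists only in its own history.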

3.3 Stress-Testing Resource Allocation AI for Drift

Emergency response models are rarely retrained as often as reality changes.

What red teams test:

➙ Seasonal population shifts

➙ Policy changes without model updates

➙ Degraded or delayed sensor and call data

What testing reveals:

Early signs of model drift in public sector AI appear as “acceptable” inefficiencies, long before anyone calls it a failure.
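One common way to quantify this kind of drift is the Population Stability Index (PSI), which compares a binned distribution from training time against the current one. The sketch assumes call volume bucketed into four six-hour bins; the counts are made up, and the 0.1 / 0.25 cut-offs are a widely used rule of thumb, not a standard for public safety systems.

```python
import math


def psi(expected_counts, actual_counts, eps=1e-6):
    """Population Stability Index between two binned distributions."""
    e_total = sum(expected_counts)
    a_total = sum(actual_counts)
    score = 0.0
    for e, a in zip(expected_counts, actual_counts):
        # Floor at eps so empty bins don't cause division by zero or log(0).
        e_pct = max(e / e_total, eps)
        a_pct = max(a / a_total, eps)
        score += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return score


def drift_status(score):
    # Rule-of-thumb cut-offs, not a public safety standard.
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "moderate drift"
    return "significant drift"


# Hypothetical call counts per six-hour bin (00-06, 06-12, 12-18, 18-24).
training_calls = [100, 300, 400, 200]
summer_calls = [80, 250, 400, 270]

score = psi(training_calls, summer_calls)
print(round(score, 3), drift_status(score))  # 0.035 stable
```

Here the seasonal shift scores roughly 0.035, comfortably "stable" by the usual thresholds. That is the point the red team is making: drift starts out looking acceptable, so the PSI trend over time matters more than any single reading.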

3.4 Evaluating Human-in-the-Loop Failure Modes

Humans are supposed to be the safety net. Often, they aren’t.

What red teams test:

➙ Over-reliance on AI recommendations

➙ Friction in override workflows

➙ Alert fatigue during surge events

What testing reveals:

Once trust tips too far toward automation, humans stop questioning outputs, even when something feels off.
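Over-reliance can be made visible by logging how often operators change the AI's recommendation. This sketch assumes a hypothetical log record holding the AI's priority, the final priority, and a surge flag, and it flags cases where overrides collapse during surges compared with normal operations. The 0.5 ratio is an arbitrary illustrative threshold.

```python
from dataclasses import dataclass


@dataclass
class DispatchDecision:
    # Hypothetical log record; field names are illustrative.
    ai_priority: int
    final_priority: int
    surge: bool


def override_rate(decisions, surge):
    """Fraction of decisions where the operator changed the AI's priority."""
    relevant = [d for d in decisions if d.surge == surge]
    if not relevant:
        return None
    overrides = sum(1 for d in relevant if d.final_priority != d.ai_priority)
    return overrides / len(relevant)


def complacency_flag(decisions, min_ratio=0.5):
    """Flag when surge-time overrides fall below min_ratio of the normal rate."""
    normal = override_rate(decisions, surge=False)
    during_surge = override_rate(decisions, surge=True)
    if not normal or during_surge is None:
        return False  # no usable baseline to compare against
    return during_surge / normal < min_ratio


# Normal shifts: operators change 4 of 20 recommendations.
log = [DispatchDecision(2, 1 if i % 5 == 0 else 2, surge=False) for i in range(20)]
# Surge: every recommendation is accepted as-is.
log += [DispatchDecision(2, 2, surge=True) for _ in range(20)]

print(override_rate(log, surge=False))  # 0.2
print(override_rate(log, surge=True))   # 0.0
print(complacency_flag(log))            # True
```

A falling override rate is not proof of complacency, since the model might simply be right more often. It is a prompt to review those surge-period decisions by hand.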

4. How Public Safety AI Fails Under Adversarial Pressure

Public safety AI rarely collapses all at once. It degrades. Quietly. And usually in ways that look reasonable until the damage is already done.

Feedback Loops That Lock In Bad Decisions

Some systems fail by learning too well. Predictive models absorb the outcomes of their own recommendations and treat them as ground truth. Patrols sent to the same areas generate more data from those areas, which the model then reads as validation. Over time, the system stops exploring alternatives. It narrows.

Priority Manipulation in Dispatch Systems

Dispatch AI doesn’t need to be attacked to be manipulated. It just needs inputs that sit slightly outside its comfort zone. Stress testing shows that urgency scoring can be nudged by pacing, phrasing, or incomplete information. Under load, the system defaults to what it understands best.

Silent Model Degradation Over Time

Public sector models live longer than their assumptions. Policy changes, population shifts, and new crime patterns arrive faster than retraining cycles. The result isn’t a crash. It’s drift. Decisions still look plausible.

5. Why Traditional Testing Misses These Vulnerabilities

Most public safety AI systems pass every test they’re given. That’s the problem.

Traditional testing is built to find things that break. Public safety AI rarely breaks. It misleads. Security teams validate infrastructure, access controls, and uptime. Model teams check accuracy against historical data. Everyone signs off, and the system goes live.

What never gets tested is behavior under pressure.

Dispatch models aren’t evaluated during simulated panic. Predictive systems aren’t challenged with feedback loops that evolve over months. Long-running deployments aren’t retested after policy changes, staffing shifts, or data quality decay. This is where AI security for emergency services quietly erodes, between audits, not during them.

6. Implications and Recommendations for Public Safety Agencies

Public safety agencies don’t need more AI principles. They need operational guardrails that hold up when conditions are worst.

First, treat AI systems as part of the emergency infrastructure itself. If a model influences dispatch order, patrol placement, or response timing, it deserves the same scrutiny as radios or vehicles. This is the foundation of AI security for emergency services: treating it as operational risk management, not as a policy exercise.

Second, red teaming can’t be a one-time event. AI red teaming in public safety needs to happen before deployment, after major policy changes, and whenever models are exposed to new populations or data sources. Public sector AI lives too long to assume static behavior.

Third, long-running systems must be monitored for gradual decay. Drift doesn’t announce itself. Agencies need mechanisms that surface small, accumulating deviations before they become systemic failures.
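A classic mechanism for surfacing small, accumulating deviations is a one-sided CUSUM (cumulative sum) chart. The sketch below assumes daily median dispatch delay in seconds, a 60-second target, and illustrative slack and threshold values; none of these numbers come from a real agency.

```python
def cusum_alarm(values, target, slack, threshold):
    """One-sided (upper) CUSUM. Returns the first index where the accumulated
    excess over target + slack exceeds threshold, or None if it never does."""
    s = 0.0
    for i, x in enumerate(values):
        # Accumulate only deviations beyond the allowed slack; never go below 0.
        s = max(0.0, s + (x - target - slack))
        if s > threshold:
            return i
    return None


# Hypothetical daily median dispatch delay (seconds): stable, then creeping up.
delays = [60] * 10 + [63, 64, 65, 65, 66, 66, 66]

print(max(delays) < 70)                                       # True
print(cusum_alarm(delays, target=60, slack=2, threshold=20))  # 16
```

No single day comes close to a naive 70-second alarm, yet the accumulated excess crosses the threshold at index 16, well before the delay looks like a failure.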

Finally, preserve real human authority. If operators can’t question, override, or slow down automated decisions without friction, the system is already unsafe, no matter how accurate it looks on paper.

7. Conclusion

Public safety AI doesn’t fail like software usually does. There’s no crash screen. No obvious error message. Just decisions that feel slightly off, then repeat.

Over time, those decisions add up. A dispatch that takes longer than it should. A patrol pattern that never really changes. A model still “working” long after the world it learned from has moved on. This is where AI security for emergency services actually breaks down: not at the moment of deployment, but months or years later, when no one is watching closely anymore.

That’s why AI red teaming in public safety isn’t about catching bad actors. It’s about accepting that these systems will surprise you. The only real choice is whether those surprises show up in controlled tests, or during real emergencies, when the consequences can’t be rolled back.

Public safety AI doesn’t fail loudly. It fails quietly, one decision at a time.
